Best AI tools for Deploy Extension

20 - AI tool Sites

Site Not Found

The website page seems to be a placeholder or error page with the message 'Site Not Found'. It indicates that the user may not have deployed an app yet or may have an empty directory. The page suggests referring to hosting documentation to deploy the first app. The site appears to be under construction or experiencing technical issues.

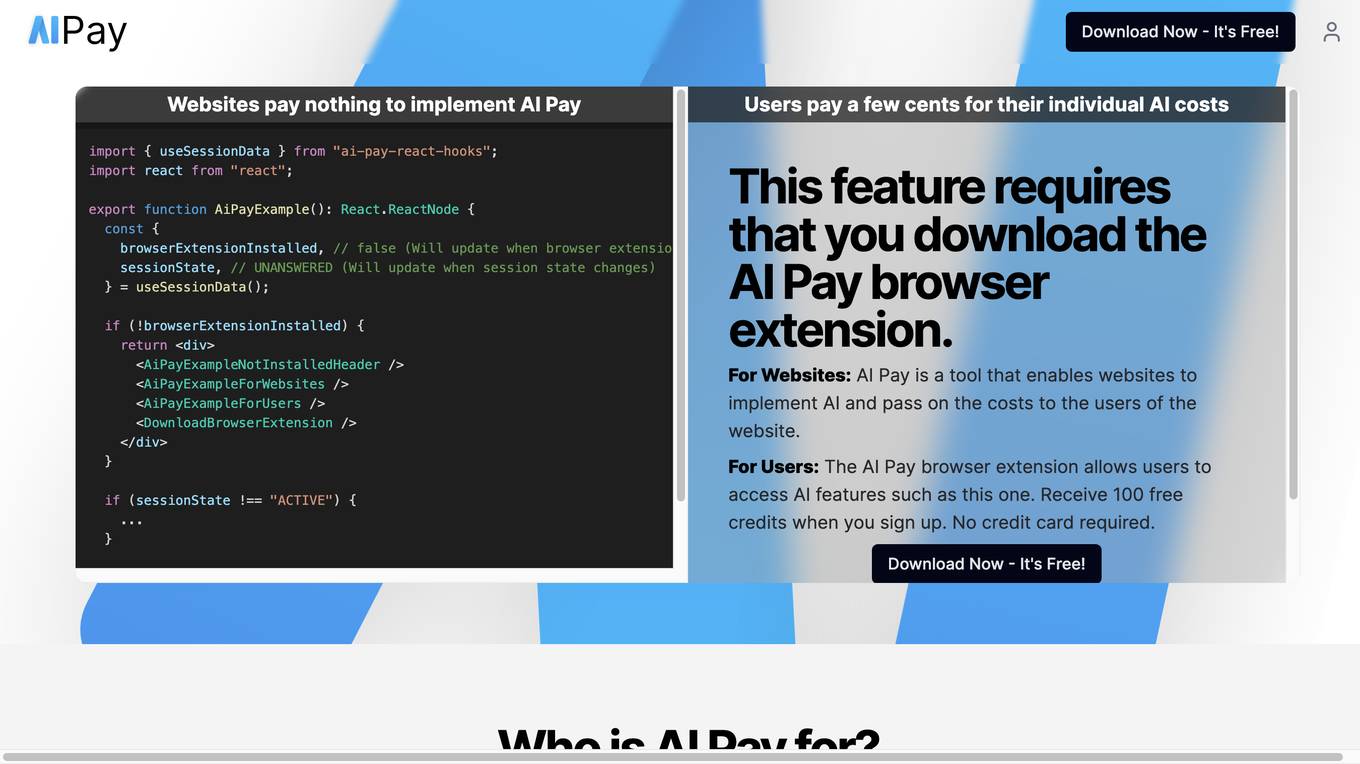

AI Pay

AI Pay is a tool that enables websites to implement AI and pass on the costs to the users of the website. It also offers a browser extension for users to access AI features on websites. The tool provides a way for websites to monetize by receiving a portion of the users' AI Pay usage cost. AI Pay can be used to power any AI website and offers features like open-source GPT apps deployment, chatbot development, and monetization through optional AI features.

Dust

Dust is a customizable and secure AI assistant platform that helps businesses amplify their team's potential. It allows users to deploy the best Large Language Models to their company, connect Dust to their team's data, and empower their teams with assistants tailored to their specific needs. Dust is exceptionally modular and adaptable, tailoring to unique requirements and continuously evolving to meet changing needs. It supports multiple sources of data and models, including proprietary and open-source models from OpenAI, Anthropic, and Mistral. Dust also helps businesses identify their most creative and driven team members and share their experience with AI throughout the company. It promotes collaboration with shared conversations, @mentions in discussions, and Slackbot integration. Dust prioritizes security and data privacy, ensuring that data remains private and that enterprise-grade security measures are in place to manage data access policies.

CodeConductor

CodeConductor is a no-code AI software development platform that empowers users to build scalable, high-quality applications without the need for extensive coding. The platform streamlines the app development process, allowing users to focus on innovation and customization. With features like accelerated app development, complete customization control, intelligent feature suggestions, dynamic data modeling, and seamless CI/CD & auto-scaled hosting, CodeConductor offers a user-friendly and efficient solution for creating web and mobile applications. The platform also provides enterprise-grade security, robust deployment options, and transparent code history tracking.

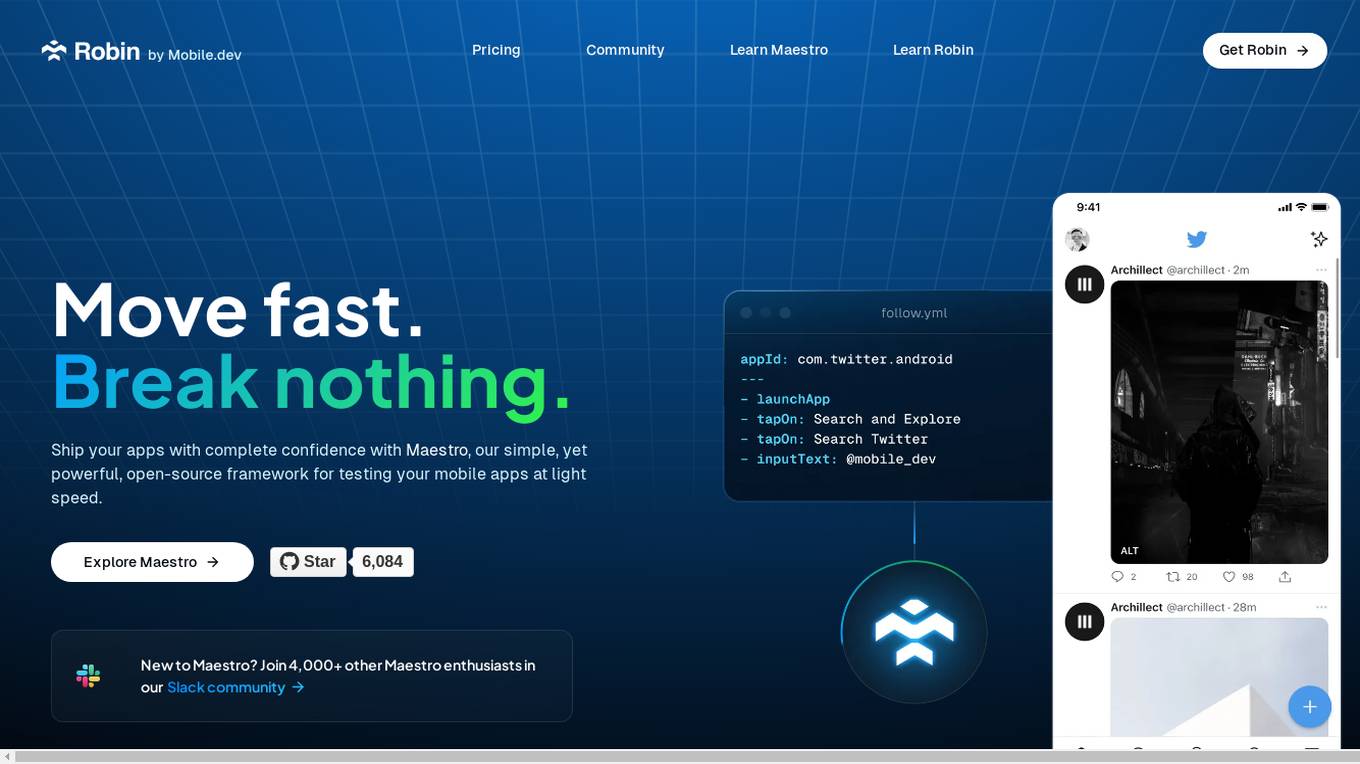

Robin

Robin by Mobile.dev is an AI-powered mobile app testing tool that allows users to test their mobile apps with confidence. It offers a simple yet powerful open-source framework called Maestro for testing mobile apps at high speed. With intuitive and reliable testing powered by AI, users can write rock-solid tests without extensive coding knowledge. Robin provides an end-to-end testing strategy, rapid testing across various devices and operating systems, and auto-healing of test flows using state-of-the-art AI models.

Lobe

Lobe is a free, easy-to-use tool for Mac and PC that helps you train machine learning models and ship them to any platform you choose. It provides a user-friendly interface for training models without requiring extensive coding knowledge. Lobe supports various machine learning tasks, such as creating image-based datasets, working with Python toolsets, and deploying models on different platforms.

API Fabric

API Fabric is an AI API Generator that allows users to easily create and deploy APIs for their applications. With a user-friendly interface, API Fabric simplifies the process of generating APIs by providing pre-built templates and customization options. Users can quickly integrate AI capabilities into their projects without the need for extensive coding knowledge. The platform supports various AI models and algorithms, making it versatile for different use cases. API Fabric streamlines the API development process, saving time and effort for developers.

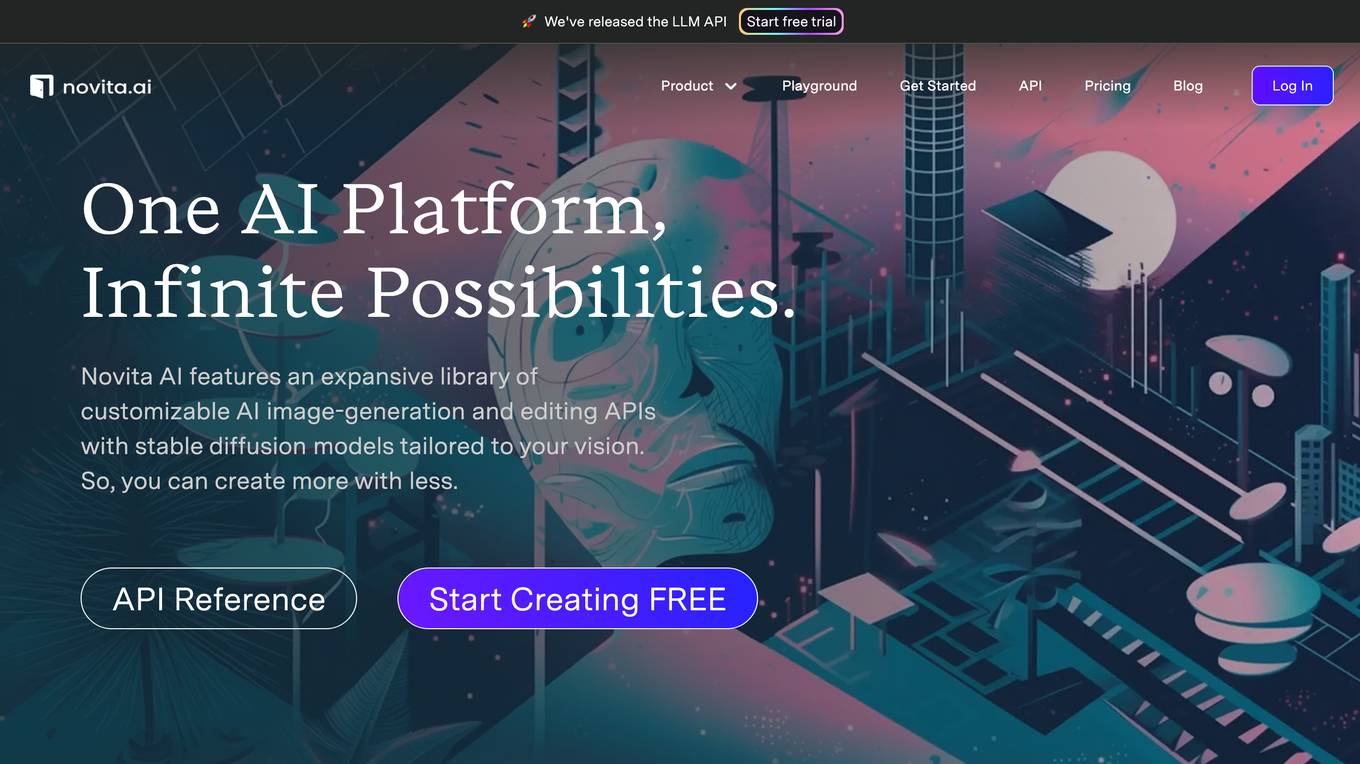

Novita AI

Novita AI is an AI cloud platform that offers Model APIs, Serverless, and GPU Instance solutions integrated into one cost-effective platform. It provides tools for building AI products, scaling with serverless architecture, and deploying with GPU instances. Novita AI caters to startups and businesses looking to leverage AI technologies without the need for extensive machine learning expertise. The platform also offers a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable API services.

ThinkRoot

ThinkRoot is an AI Compiler that empowers users to transform their ideas into fully functional applications within minutes. By leveraging advanced artificial intelligence algorithms, ThinkRoot streamlines the app development process, eliminating the need for extensive coding knowledge. With a user-friendly interface and intuitive design, ThinkRoot caters to both novice and experienced developers, offering a seamless experience from concept to deployment. Whether you're a startup looking to prototype quickly or an individual with a creative vision, ThinkRoot provides the tools and resources to bring your ideas to life effortlessly.

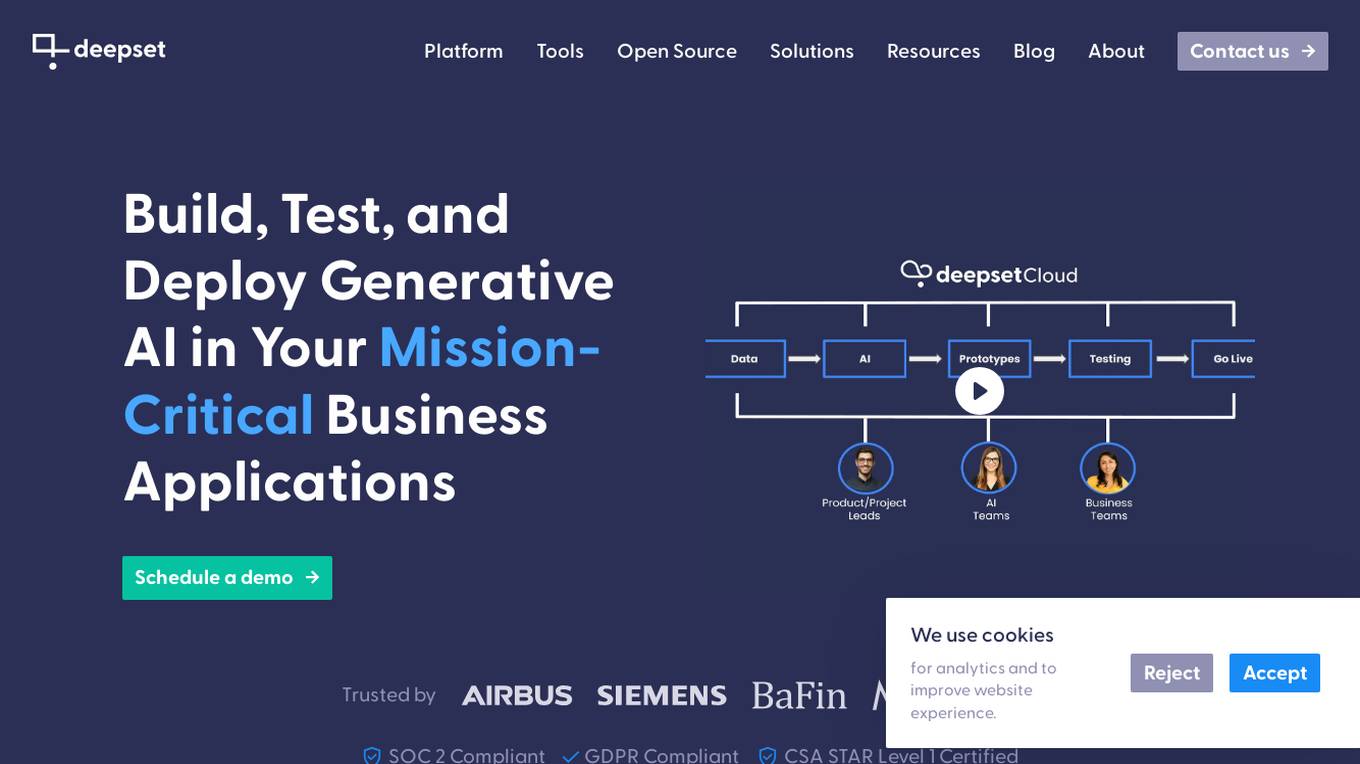

deepset

deepset is an AI platform that offers enterprise-level products and solutions for AI teams. It provides deepset Cloud, a platform built with Haystack, enabling fast and accurate prototyping, building, and launching of advanced AI applications. The platform streamlines the AI application development lifecycle, offering processes, tools, and expertise to move from prototype to production efficiently. With deepset Cloud, users can optimize solution accuracy, performance, and cost, and deploy AI applications at any scale with one click. The platform also allows users to explore new models and configurations without limits, extending their team with access to world-class AI engineers for guidance and support.

Caffe

Caffe is a deep learning framework developed by Berkeley AI Research (BAIR) and community contributors. It is designed for speed, modularity, and expressiveness, allowing users to define models and optimization through configuration without hard-coding. Caffe supports both CPU and GPU training, making it suitable for research experiments and industry deployment. The framework is extensible, actively developed, and tracks the state-of-the-art in code and models. Caffe is widely used in academic research, startup prototypes, and large-scale industrial applications in vision, speech, and multimedia.

FlowHunt

FlowHunt is an AI chatbot platform that offers a new no-code visual way to build AI tools and chatbots for websites. It provides a template library with ready-to-use options, from simple AI tools to complex chatbots, and integrates with popular services like Smartsupp, LiveChat, HubSpot, and LiveAgent. The platform also features components like Task Decomposition, Query Expansion, Chat Input, Chat Output, Document Retriever, Document to Text, Generator, and GoogleSearch, enabling users to create customized chatbots for various contexts. FlowHunt aims to simplify the process of building and deploying AI-powered solutions for customer service and content generation.

PixieBrix

PixieBrix is an AI engagement platform that allows users to build, deploy, and manage internal AI tools to enhance team productivity. It unifies the AI landscape with oversight and governance for enterprise-scale operations, increasing speed, accuracy, compliance, and satisfaction. The platform leverages AI and automation to streamline workflows, improve user experience, and unlock untapped potential. With a focus on enterprise readiness and customization, PixieBrix offers extensibility, iterative innovation, scalability for teams, and an engaged community for idea exchange and support.

CloudApper AI

CloudApper AI is an advanced AI platform that helps businesses build, integrate, and deploy AI solutions seamlessly. The platform offers a holistic system comprising Generative AI, Workflows, and Integration components to enhance decision-making, automate processes, and synchronize data with existing enterprise systems. CloudApper AI aims to democratize AI by providing cutting-edge AI/LLM technology, seamless integration capability, and secure data handling without the need for extensive programming skills. The platform empowers businesses to stay ahead in the digital landscape by leveraging advanced technologies and ensuring every developer can leverage AI to transform legacy processes.

Streamlit

Streamlit is a web application framework that allows users to create interactive web applications effortlessly using Python. It enables data scientists and developers to build and deploy data-driven applications quickly and easily. With Streamlit, users can create interactive visualizations, dashboards, and machine learning models without the need for extensive web development knowledge. The platform provides a simple and intuitive way to turn data scripts into shareable web apps, making it ideal for prototyping, showcasing projects, and sharing insights with others.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

Azure Static Web Apps

Azure Static Web Apps is a platform provided by Microsoft Azure for building and deploying modern web applications. It allows developers to easily host static web content and serverless APIs with seamless integration to popular frameworks like React, Angular, and Vue. With Azure Static Web Apps, developers can quickly set up continuous integration and deployment workflows, enabling them to focus on building great user experiences without worrying about infrastructure management.

PoplarML

PoplarML is a platform that enables the deployment of production-ready, scalable ML systems with minimal engineering effort. It offers one-click deploys, real-time inference, and framework-agnostic support. With PoplarML, users can deploy ML models to a fleet of GPUs using a CLI tool and invoke their models through a REST API endpoint. The platform supports TensorFlow, PyTorch, and JAX models.
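As a rough illustration of what invoking a deployed model through a REST API endpoint can look like, here is a minimal Python sketch using only the standard library. It is not PoplarML's documented API: the endpoint URL, the `{"inputs": ...}` payload shape, the bearer-token header, and the function name `build_inference_request` are all hypothetical placeholders. Check the platform's own documentation for the real values.

```python
import json
import urllib.request


def build_inference_request(endpoint_url: str, api_key: str, inputs: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a deployed model.

    Assumption: the service accepts a JSON body and a bearer token.
    Both are placeholders, not a documented PoplarML contract.
    """
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical endpoint; ".invalid" is a reserved, non-resolving TLD.
    request = build_inference_request(
        "https://example.invalid/v1/models/my-model/infer",
        api_key="YOUR_API_KEY",
        inputs={"text": "hello"},
    )
    print(request.get_method(), request.full_url)
    # To send it for real, pass the request to urllib.request.urlopen(request, timeout=30).
```

Keeping request construction separate from sending makes the call easy to inspect and test before pointing it at a live endpoint.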

ClawOneClick

ClawOneClick is an AI tool that allows users to deploy their own AI assistant in seconds without the need for technical setup. It offers one-click deployment of an always-on AI chatbot powered by the latest AI models. Users can choose from various AI models and messaging channels to customize their AI assistant. ClawOneClick handles all the cloud infrastructure provisioning and management, ensuring secure connections and end-to-end encryption. The tool is designed to adapt to a wide range of tasks. For communication, it can assist with email summarization, quick replies, translation, proofreading, and customer queries. For scheduling and organization, it covers meeting reminders, deadline tracking, schedule organization, meeting action item capture, time zone coordination, task automation, expense logging, and priority planning. For research and learning, it supports fast topic research, book and article summarization, report condensation, concept learning, code explanation, document analysis, and data trend interpretation. For writing and ideation, it handles content generation, idea brainstorming, creative suggestions, professional document drafting, project goal definition, team updates preparation, and job posting writing. It can also help with voice memo transcription, product and price comparison, meal plan suggestion, and more.

20 - OpenAI GPTs

XRPL GPT

Build on the XRP Ledger with assistance from this GPT trained on extensive documentation and code samples.

Frontend Developer

AI front-end developer expert in coding React, Next.js, Vue, Svelte, TypeScript, Gatsby, Angular, HTML, CSS, JavaScript & advanced in Flexbox, Tailwind & Material Design. Mentors junior, intermediate & senior front-end developers alike in coding & debugging. Let’s code, build & deploy a SaaS app.

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.