Best AI tools for Critique Designs

11 - AI Tool Sites

Feedback Wizard

Feedback Wizard is an AI-powered tool designed to provide instant design feedback directly within Figma. It leverages AI technology to offer design wisdom and actionable insights to improve user experience and elevate the visual elements of Figma designs. With over 2700 designers already using the tool, Feedback Wizard aims to streamline the design feedback process and enhance the overall design quality.

Sun Group (China) Co., Ltd.

The website is the official site of the Sun Group (China) Co., Ltd., endorsed by Louis Koo. It provides information about the company's history, leadership, organizational structure, educational programs, research achievements, and employee activities. The site also features news updates, announcements, and resources for download.

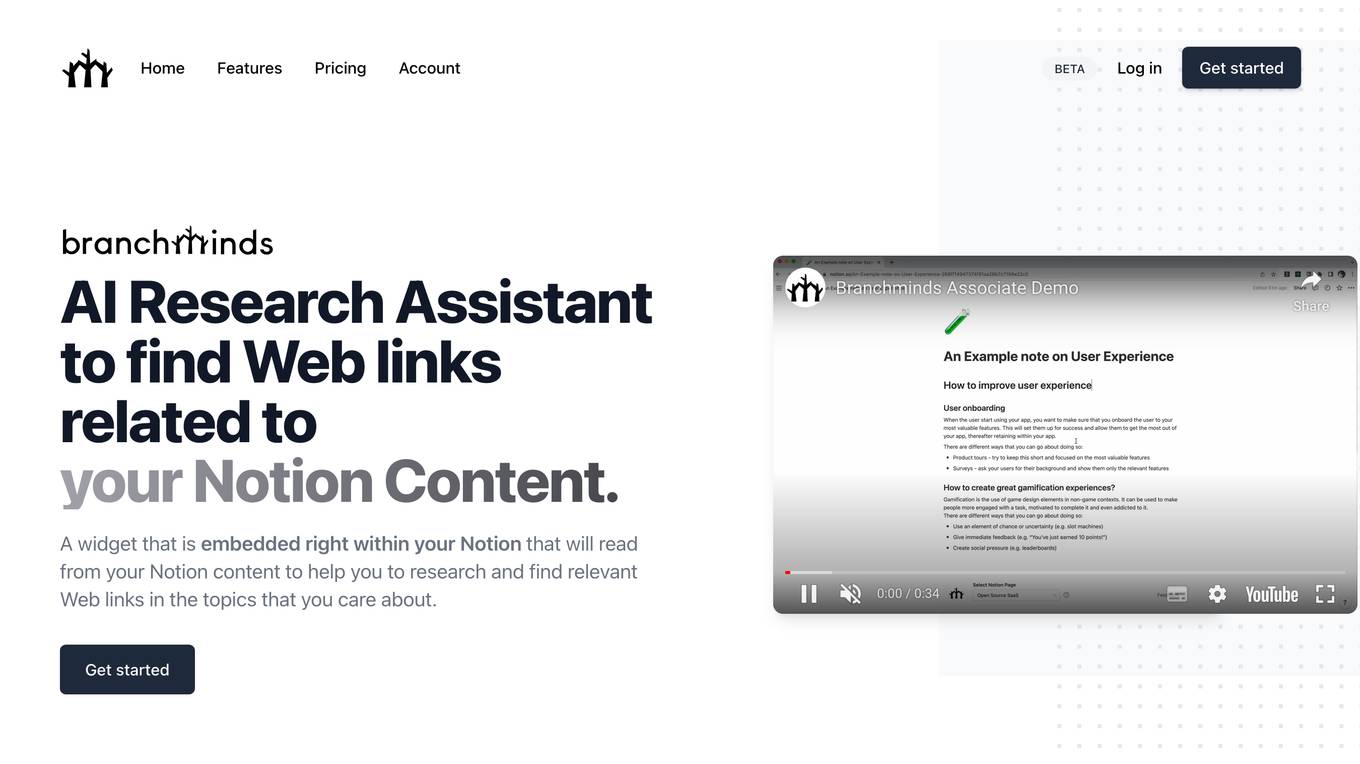

Ray3 AI

Ray3 AI is an AI video generator tool that allows users to create high-quality videos effortlessly. With features like text-to-image conversion, image editing, and aspect ratio adjustment, Ray3 AI simplifies the video creation process. Users can generate videos in various formats, including 16:9 and 9:16, with auto credits costing 10 per creation. The tool is powered by Tencent Hunyuan LIVE 5.0 and offers a seamless user experience for both beginners and experienced video creators.

LLM Quality Beefer-Upper

LLM Quality Beefer-Upper is an AI tool designed to enhance the quality and productivity of LLM responses by automating critique, reflection, and improvement. Users can generate multi-agent prompt drafts, choose from different quality levels, and upload knowledge text for processing. The application aims to maximize output quality by utilizing the best available LLM models in the market.

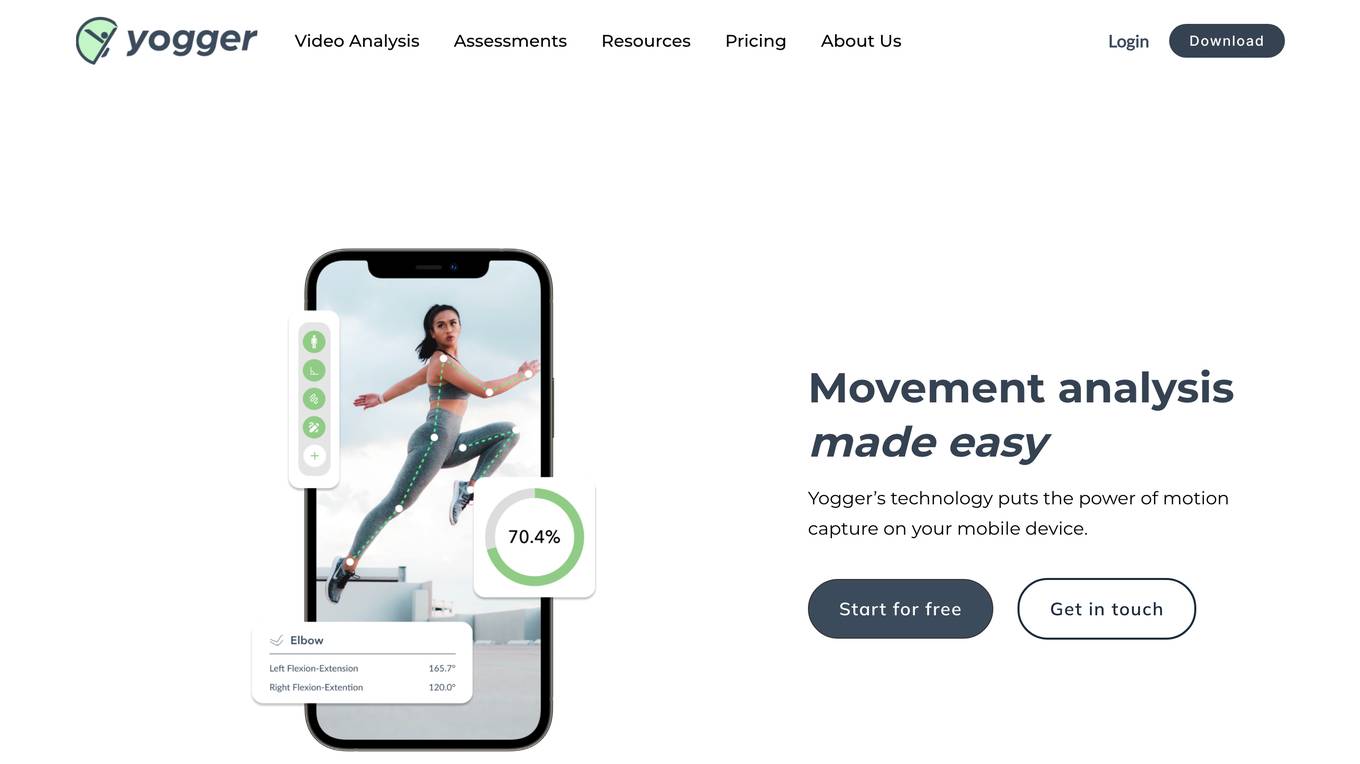

Yogger

Yogger is an AI-powered video analysis and movement assessment tool designed for coaches, trainers, physical therapists, and athletes. It allows users to gather precise movement data using video-based joint tracking or automatic movement screenings. With Yogger, users can analyze movement patterns, critique form, and measure progress, all from their phone. The tool is versatile, easy to use, and suitable for in-person coaching or online sessions. Yogger provides real data for accurate assessments, making it a valuable tool for professionals in the sports and fitness industry.

Mock-My-Mockup

Mock-My-Mockup is an AI-powered product design tool created by Fairpixels. It allows users to upload a screenshot of a page they are working on and receive brutally honest feedback. The tool offers a user-friendly interface where users can easily drag and drop their product screenshots for analysis.

Critique

Critique is an AI tool that redefines browsing by offering autonomous fact-checking, informed question answering, and a localized universal recommendation system. It automatically critiques comments and posts on platforms like Reddit, YouTube, and LinkedIn by vetting text on any website. The tool cross-references and analyzes articles in real time, providing vetted and summarized information directly in the user's browser.

Stunlaw

Stunlaw is a website that focuses on philosophy and critique for the digital age, particularly exploring the intersection of technology, software, and critical theory. The site delves into topics such as computational thinking, critical code studies, digital humanities, and the impact of artificial intelligence on society. Through in-depth analysis and discussions, Stunlaw aims to provide insights into the evolving landscape of technology and its implications on culture and society.

ProWritingAid

ProWritingAid is an AI-powered writing assistant that helps writers improve their writing. It offers a range of features, including a grammar checker, plagiarism checker, and story critique tool. ProWritingAid is used by writers of all levels, from beginners to bestselling authors. It is available as a web app, desktop app, and browser extension.

ScriptReader.ai

ScriptReader.ai is an AI-powered screenplay analysis tool that provides detailed critiques and suggestions for every scene of your screenplay. It offers personalized feedback to help writers improve their scripts and elevate their writing game. With the ability to analyze strengths and weaknesses, provide grades, critiques, and suggestions for improvement on a scene-by-scene basis, ScriptReader.ai aims to help both seasoned screenwriters and beginners enhance their work and create captivating masterpieces.

RAD AI

RAD AI is an AI-powered platform that provides solutions for audience insights, influencer discovery, content optimization, managed services, and more. The platform uses advanced machine learning to analyze real-time conversations from social platforms like Reddit, TikTok, and Twitter. RAD AI offers actionable critiques to enhance brand content and helps in selecting the right influencers based on various factors. The platform aims to help brands reach their target audiences effectively and efficiently by leveraging AI technology.

0 - Open Source AI Tools

20 - OpenAI GPTs

Señor Design Mentor

Get feedback on your UI designs. All you need to do is share the problem you are trying to solve and the design you want feedback on.

Design Crit

I conduct design critiques focused on enhancing understanding and improvement.

Website Design Critique Expert

Critiques website designs and creates shareable summary graphics.

Roast My UI

Offers constructive feedback on users' web designs based on a knowledge base of modern best practices.

UX Feedback

The UX Feedback GPT specializes in critiquing UX/UI design, focusing on accessibility, layout, and best practices from Nielsen Norman Group and IDEO. It offers tailored feedback for various design stages and emphasizes clear communication, responsiveness, and ethical design principles.

RoastMyDesign

The best design or website roaster that there is. It tells you exactly what's good, what's bad and how to fix it. Made by @ThisSiya

Legal Tech Generhater

I'll critique your legal tech ideas with my signature snark and design fittingly bad logos.

Trey Ratcliff's Fun Photo Critique GPT

Critiquing photos with humor and expertise, drawing from my 5,000 blog entries and books. Share your photo for a unique critique experience!

Image Generation with Selfcritique & Improvement

More accurate and easier image generation with self-critique and improvement! Try it now.

Executive Insight

I'm a Fortune 100 exec who critiques presentations, papers, emails, etc.

Design Mentor

Friendly, professional design expert, offering critiques and creating mockups.

Roast My Website

🔥 Upload a Screenshot/URL of your website to get roasted! 🔥 OPTIONAL: Ask for actionable tips for improvement.