Best AI tools for Crawl And Index Data

12 - AI tool Sites

FuturePerfect

FuturePerfect is a 24/7 proofreading AI tool designed to crawl websites and detect grammar and spelling errors in real time. By leveraging advanced algorithms, it helps websites maintain a professional and polished appearance, protecting brand reputation from embarrassing mistakes that could cost deals. With support for 30 languages, FuturePerfect offers seamless integration without the need for complex setup, providing instant alerts and detailed reports for error corrections.

Crawl AI

Crawl AI is a web-based platform that simplifies the process of building custom AI assistants for users without technical expertise. It integrates web crawling and scraping capabilities with AI assistant development, allowing users to create custom assistants tailored to their needs. The platform automatically gathers and structures data from the web or user-uploaded sources, enabling users to train AI models and fine-tune assistant behavior. Crawl AI offers features like web scraping, AI integration, data customization, adjustable AI settings, and more.

BotGPT

BotGPT is a 24/7 custom AI chatbot assistant for websites. It offers a data-driven ChatGPT that allows users to create virtual assistants from their own data. Users can easily upload files or crawl their website to start asking questions and deploy a custom chatbot on their website within minutes. The platform provides a simple and efficient way to enhance customer engagement through AI-powered chatbots.

PromptLoop

PromptLoop is an AI-powered web scraping and data extraction platform that allows users to run AI automation tasks on lists of data with a simple file upload. It enables users to crawl company websites, categorize entities, and conduct research tasks at a fraction of the cost of other alternatives. By leveraging unique company data from spreadsheets, PromptLoop enables the creation of custom AI models tailored to specific needs, facilitating the extraction of valuable insights from complex information.

Horseman

Horseman is an AI-powered web crawling tool that offers endless configuration options for users. It allows frontend developers, performance analysts, digital agencies, accessibility experts, SEO specialists, and JavaScript engineers to supercharge their snippets for expert insights across their websites. With features like GPT integration, snippet creation with AI assistance, insights exploration, and a vast library of snippets, Horseman empowers users to crawl the web efficiently and effectively.

Caibooster

Caibooster is an AI-based Google indexing service that helps users boost their indexing rate and speed in Google search results. It offers different packages for submitting links to Google for indexing, with a high success rate of 60%-80%. The service is safe, professional, and affordable, ensuring that users' data is secured and their links are indexed efficiently. Caibooster continuously updates its indexing system to adapt to Google's changes, making it a reliable solution for anyone struggling with link indexing.

Firecrawl

Firecrawl is an advanced web crawling and data conversion tool designed to transform any website into clean, LLM-ready markdown. It automates the collection, cleaning, and formatting of web data, streamlining the preparation process for Large Language Model (LLM) applications. Firecrawl is best suited for business websites, documentation, and help centers, offering features like crawling all accessible subpages, handling dynamic content, converting data into well-formatted markdown, and more. It is built by LLM engineers for LLM engineers, providing clean data the way users want it.

Seo Juice

Seo Juice is an automated AI SEO tool that generates internal links for websites. It is designed for busy individuals who want to improve their SEO without the hassle of manual keyword research. After you add your domain, Seo Juice crawls your sitemap and inserts links into your webpages using a simple JS snippet. The tool offers easy integration, privacy-driven internal reporting, and simple pricing suitable for businesses of all sizes. With Seo Juice, users can save time on manual link-building tasks.

CrawlQ AI

CrawlQ AI is the world's first Content ERP platform that leverages AI technology to unify content strategy, production, compliance, and delivery into one intelligent system. It transforms disparate files into a single, auditable source of truth, empowering users to govern their AI agents effectively. With features like Two-Way Retrieval, Augmented Generation, and Multi-LLM, CrawlQ AI caters to digital marketing strategists, e-commerce growth specialists, financial planning specialists, IT project managers, and strategic business consultants. The platform offers enterprise-grade validation, content intelligence, and ROCC-calibrated intelligence to help organizations enhance their content capital and achieve measurable ROCC growth.

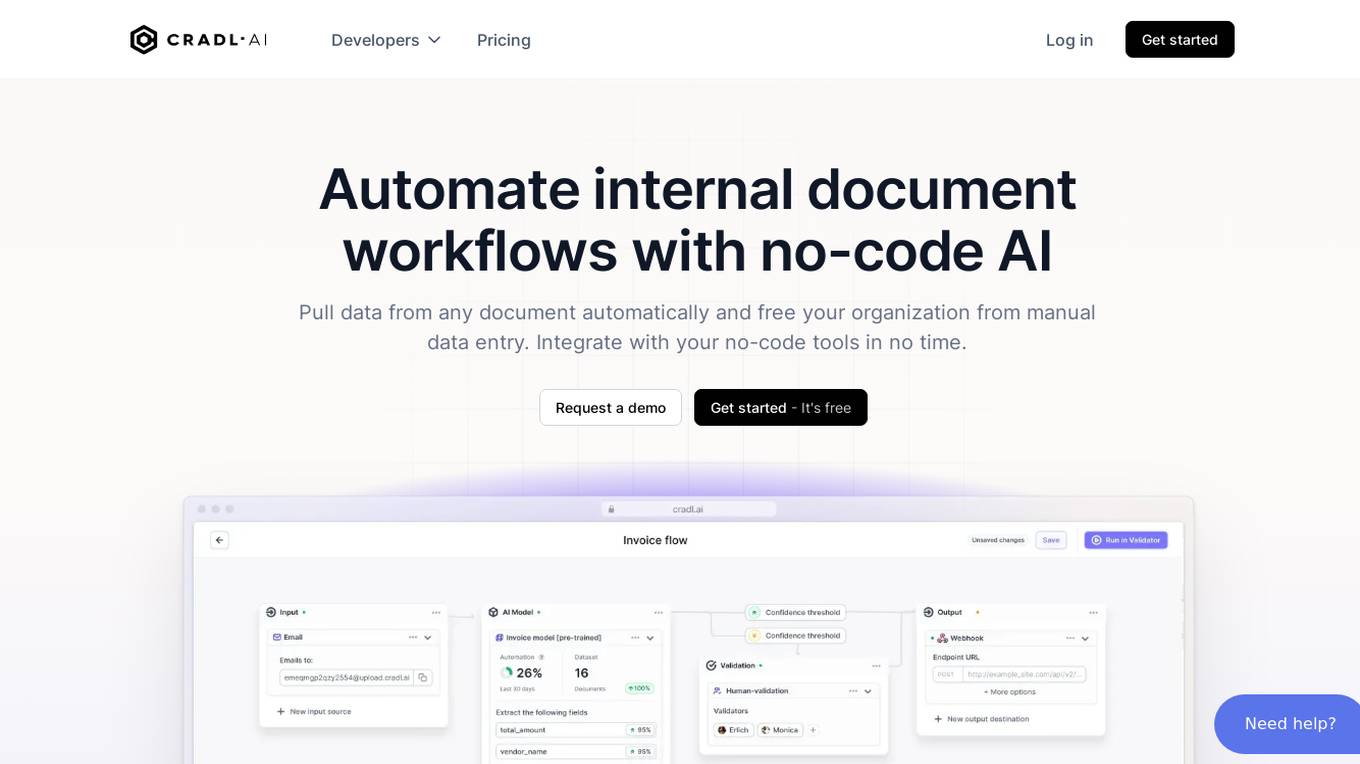

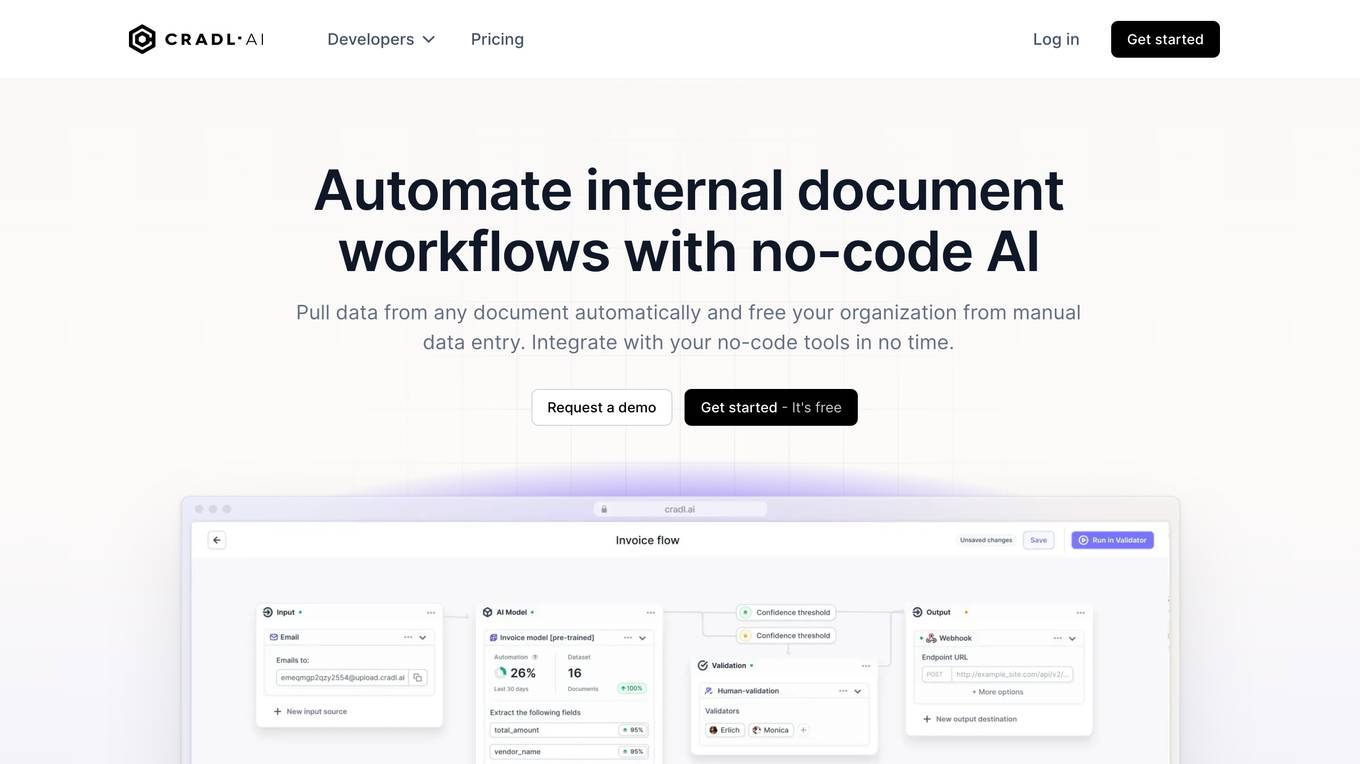

Cradl AI

Cradl AI is an AI-powered tool designed to automate document workflows with no-code AI. It enables users to extract data from any document automatically, integrate with no-code tools, and build custom AI models through an easy-to-use interface. The tool empowers automation teams across industries by extracting data from complex document layouts, regardless of language or structure. Cradl AI offers features such as line item extraction, fine-tuning AI models, human-in-the-loop validation, and seamless integration with automation tools. It is trusted by organizations for business-critical document automation, providing enterprise-level features like encrypted transmission, GDPR compliance, secure data handling, and auto-scaling.

Cradl AI

Cradl AI is a no-code, AI-powered document workflow automation tool that helps organizations automate document-related tasks such as data extraction, processing, and validation. It uses AI to automatically extract data from complex documents, regardless of structure or language. Cradl AI also integrates with other no-code tools, making it easy to build and deploy custom AI models.

GapTrail

GapTrail is an AI-powered competitive intelligence tool designed for business teams to monitor competitor websites, extract pricing and feature data, detect changes, and deliver actionable insights. It automates the entire process from data collection to providing structured, evidence-backed competitive intelligence, enabling teams to make faster, better-informed decisions. With features like automated crawls, AI insights, side-by-side comparisons, and real-time alerts, GapTrail helps businesses stay ahead of market changes and competitors. The tool is built for founders, executives, product managers, sales teams, and growth teams who want to keep their competitive data structured and current.