Best AI tools for: Control Video Output

20 - AI Tool Sites

Kling AI

Kling AI is a text-to-video generation model for creating artistic videos. Its features include dynamic motion generation, long video creation, physical-world simulation, conceptual combination, and cinematic video generation, so users can produce videos with realistic movement, a range of aspect ratios, and cinematic quality.

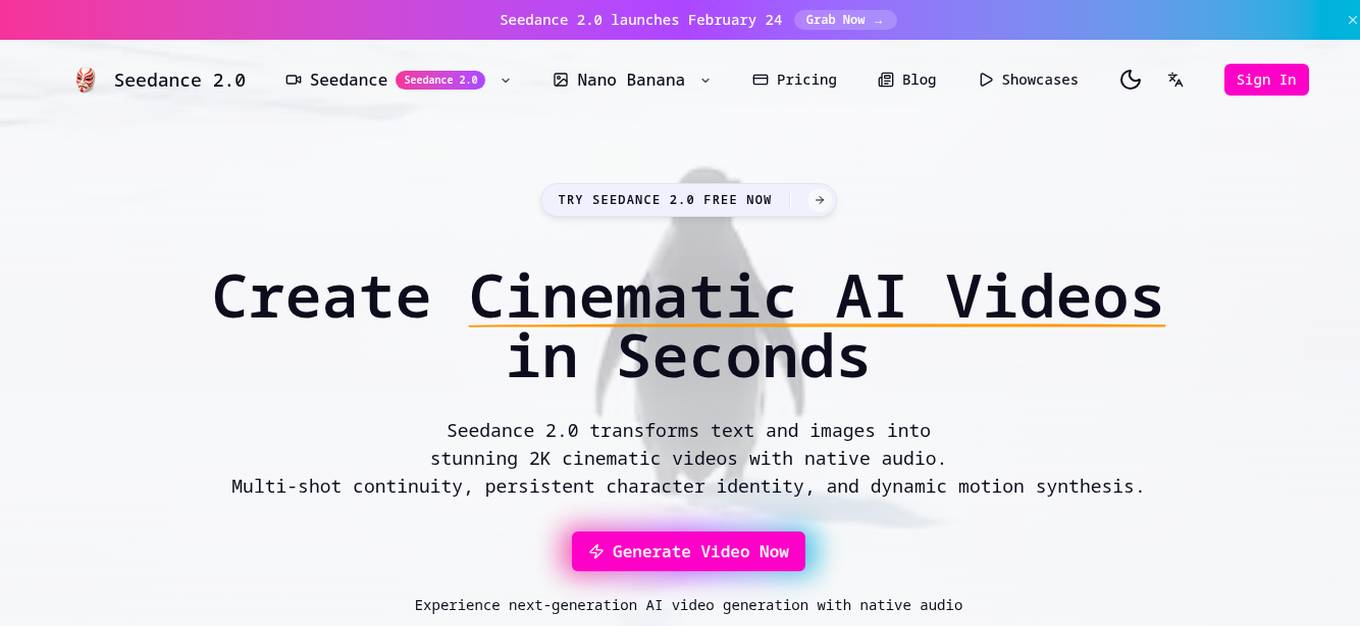

Seedance 2.0

Seedance 2.0 is an AI-powered video generation platform that transforms text and images into high-quality cinematic videos with native audio. It offers features such as text-to-video conversion, image animation, native audio synthesis, and persistent character identity. The platform provides up to 2K resolution output with multiple aspect ratios and advanced motion control for professional video creation.

Ray3 AI Video Model

Ray3 is an AI video model developed by Luma for HDR video creation. With native 16-bit HDR output, scene reasoning, and keyframe control, it lets creators generate professional-quality 4K HDR videos. Beyond plain text-to-video generation, it is positioned as a creative partner that helps storytellers realize their cinematic visions.

Seedance 2.0

Seedance 2.0 is a multi-modal AI video generator that lets users create, extend, and edit cinematic videos using text, image, video, and audio references. Structured input methods and precise creative controls aim to make outputs predictable and production-ready. Features include multi-modal input, shot-level control, high-fidelity image guidance, video motion transfer, and native audio-driven video generation. The application also supports targeted edits and extension of existing clips, and maintains character and scene consistency across multiple shots, with the goal of fast, reliable video production.

AI Kissing Video Generator

AI Kissing Video Generator is an AI service that creates romantic kissing videos from uploaded photos. It analyzes facial features and expressions to generate natural-looking kiss animations. Users can customize videos, control animation speed, and choose backgrounds. Uploaded data is deleted after processing to protect privacy, and the tool is designed for various occasions and users of all skill levels.

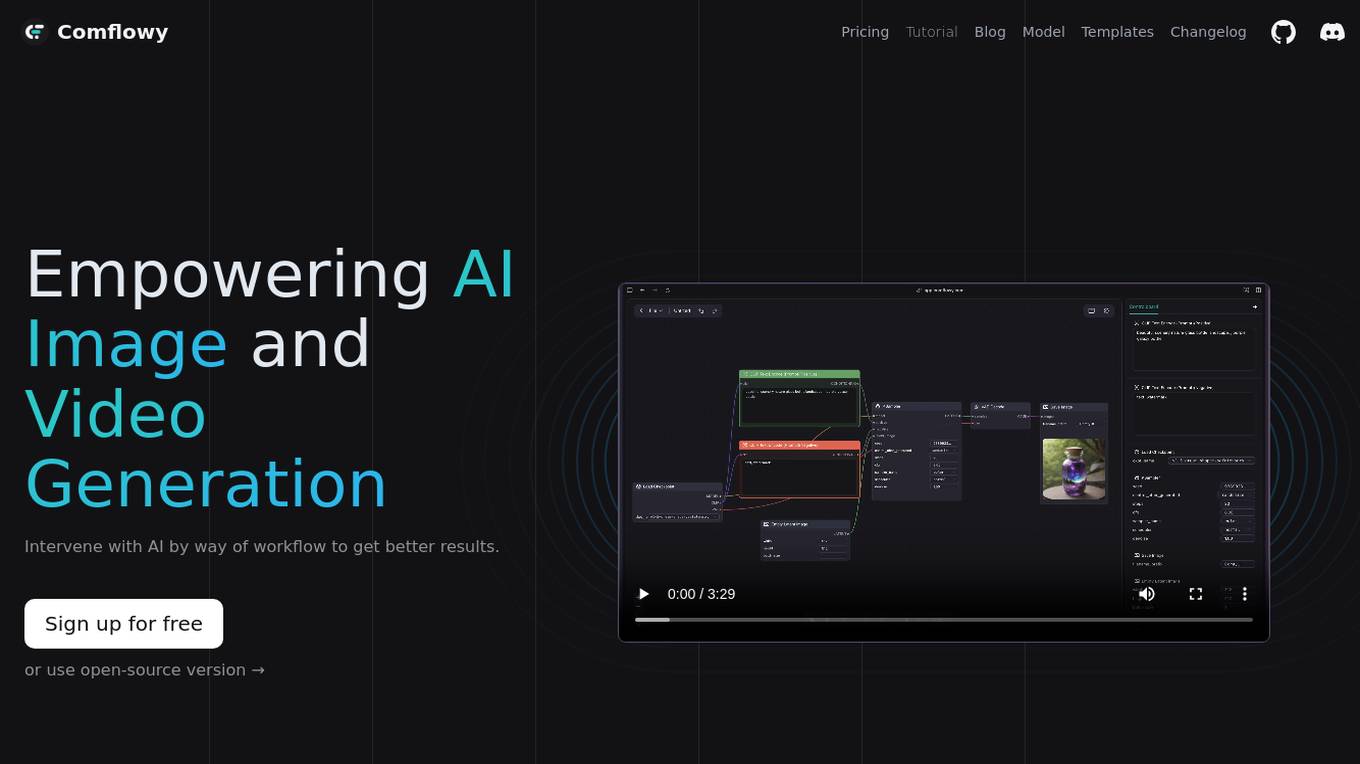

Comflowy

Comflowy is an AI tool that lets users steer AI through a workflow approach to get better results. Users control the output by connecting nodes that draw on various open-source AI models and plugins. It supports image and video generation, offers a flexible workflow mode, and is designed to be easy to learn and use. Comflowy also provides templates, tutorials, and workflow management features.

Grok Imagine 2.0

Grok Imagine 2.0 is an AI video platform that turns text prompts or static images into 1080p HD videos. Its language parsing gives precise control over interactions, and it supports diverse visual styles and multi-shot storytelling, keeping characters and visual style consistent across scene transitions. Output features smooth motion, camera movement, transitions, and cinematic lighting, suited to social media, ads, or presentations.

DeepMake

DeepMake is an AI tool that gives users control over open-source AI tools for enhancing visual content. Users can create, edit, and enhance images and videos with no usage limits and no reliance on cloud services. Because the application runs locally on the user's computer, it offers more control over AI-generated output, and new AI tools are added regularly.

Lyria 3

Lyria 3 is an AI-powered application that transforms text, image, and video content into 30-second music clips with auto-generated lyrics, enhanced song structure, and SynthID watermarking. It simplifies music composition by automating manual tasks and offering better control over genre, tone, and mood. The application is designed for both non-musicians and professional creators, aiming to streamline the music production process and provide high-quality short-form audio outputs.

Suno AI Music

Suno AI Music is an AI text-to-music generation tool. Users describe the music they want in a text prompt, and the AI composes an original track in the requested style, mood, and genre. Tracks are generated in WAV format and are suitable for personal and commercial projects. Suno AI Music offers an intuitive, easy-to-use interface.

MemoTune

MemoTune is an AI song generator designed for personal stories. Users enter moments, people, and feelings, and the tool generates lyrics and a complete song that stays on topic; no music theory knowledge is required. Creator-focused features include personalized songs for various occasions, royalty-free background music for content creation, and commercial-friendly options. Users can share, refine, and download their songs, keeping full control over the creative process.

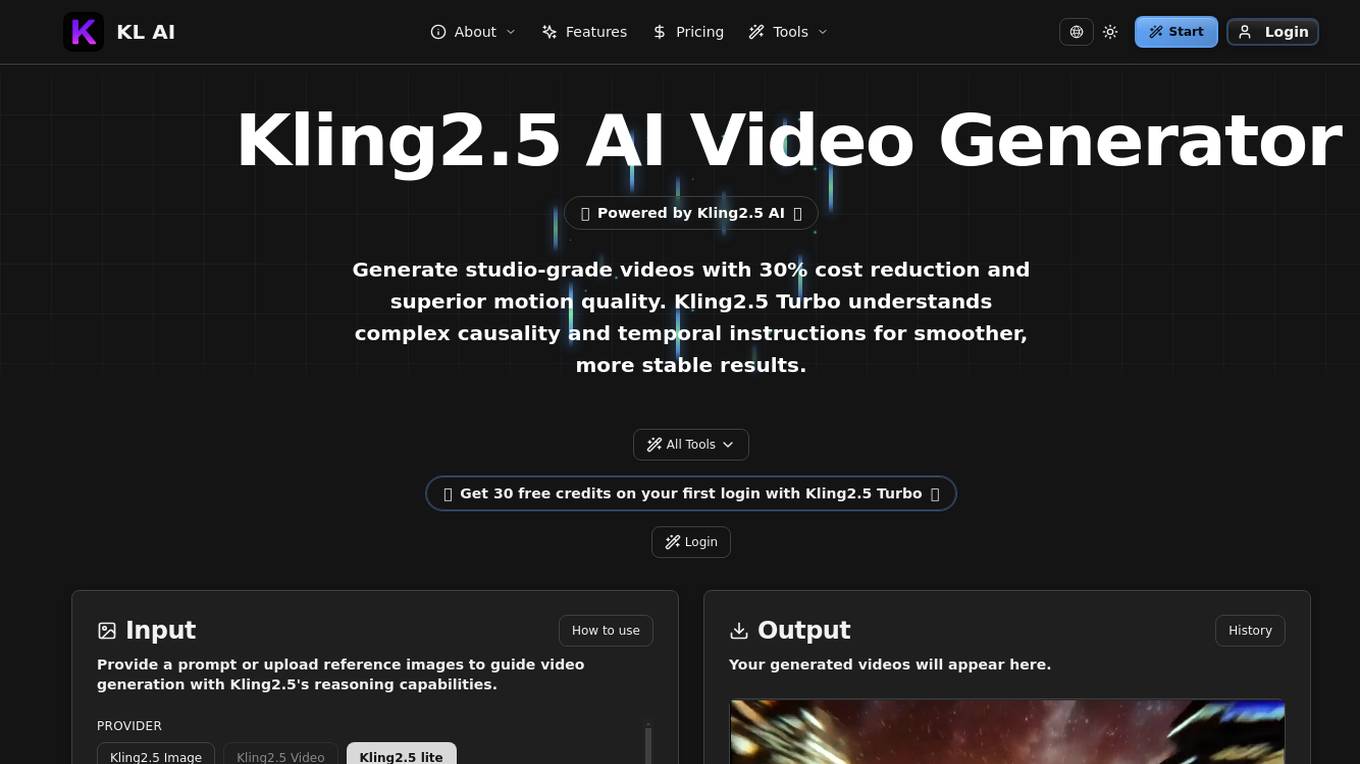

Kling2.5

Kling2.5 is an AI video generator offering studio-grade video creation with advanced reasoning capabilities. It provides cost-optimized generation, smooth motion flow, and an understanding of complex causal relationships and temporal sequences. Kling2.5 Turbo adds native HDR video output, a draft mode for rapid iteration, and improved style consistency. The application targets professional video content creation and commercial projects across industries.

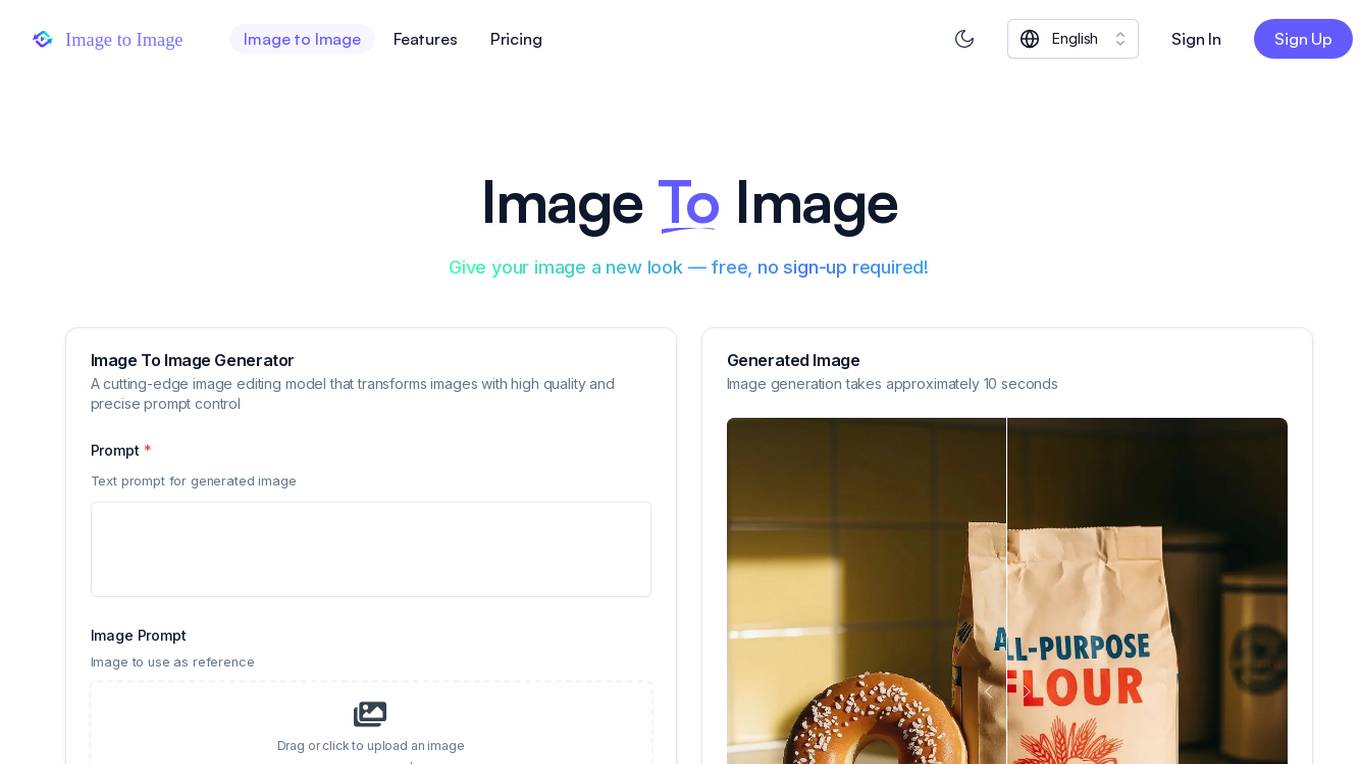

Image to Image

Image to Image is an AI image generator and editor that transforms images with high fidelity and precise control, using text prompts or reference images. It offers instant transformation, flexible input options, and intelligent AI editing, and serves as an all-in-one creative suite for generating, editing, and enhancing images and videos. Users receive free credits daily and can purchase extra credits. The tool is used by businesses and individuals worldwide to create high-quality visuals.

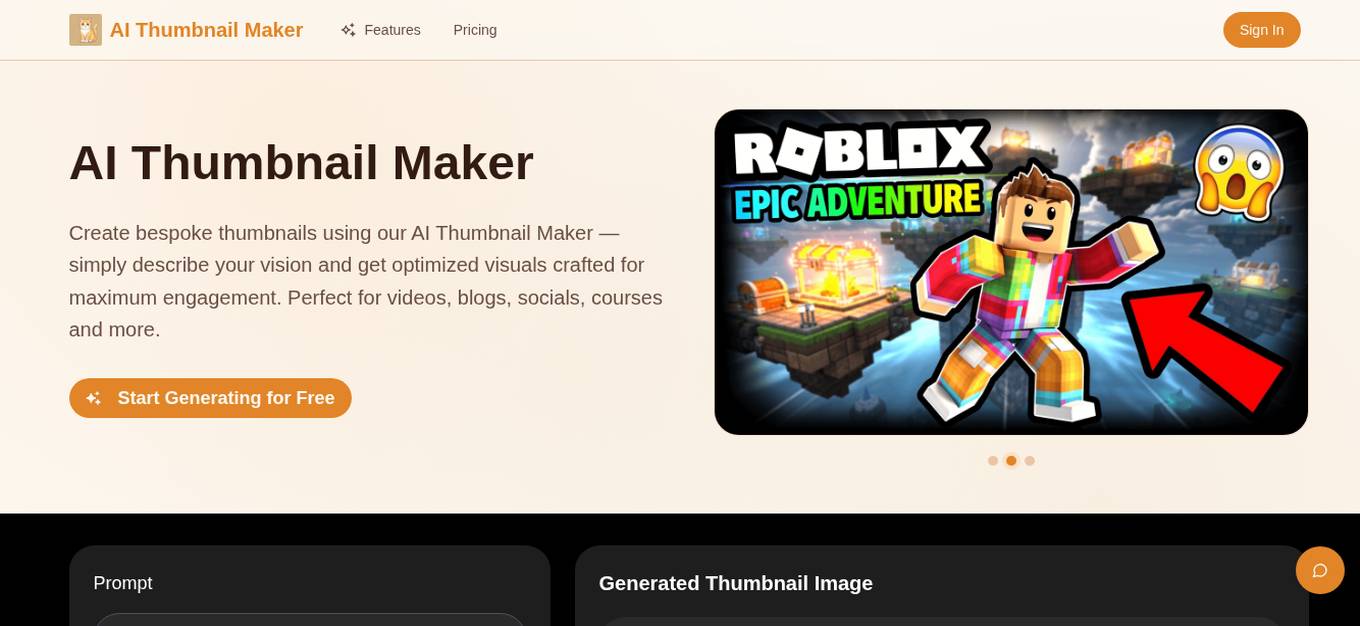

AI Thumbnail Maker

AI Thumbnail Maker is an AI tool for quickly creating thumbnails with high click-through rates (CTR). It interprets text prompts, visual cues, and optional image uploads to generate thumbnails optimized for videos, blogs, social media, and online courses. Features such as prompt-first output, context-aware style controls, and multi-platform output options make thumbnail creation accessible to creators of all levels, with the aim of improving viewer engagement and visual appeal.

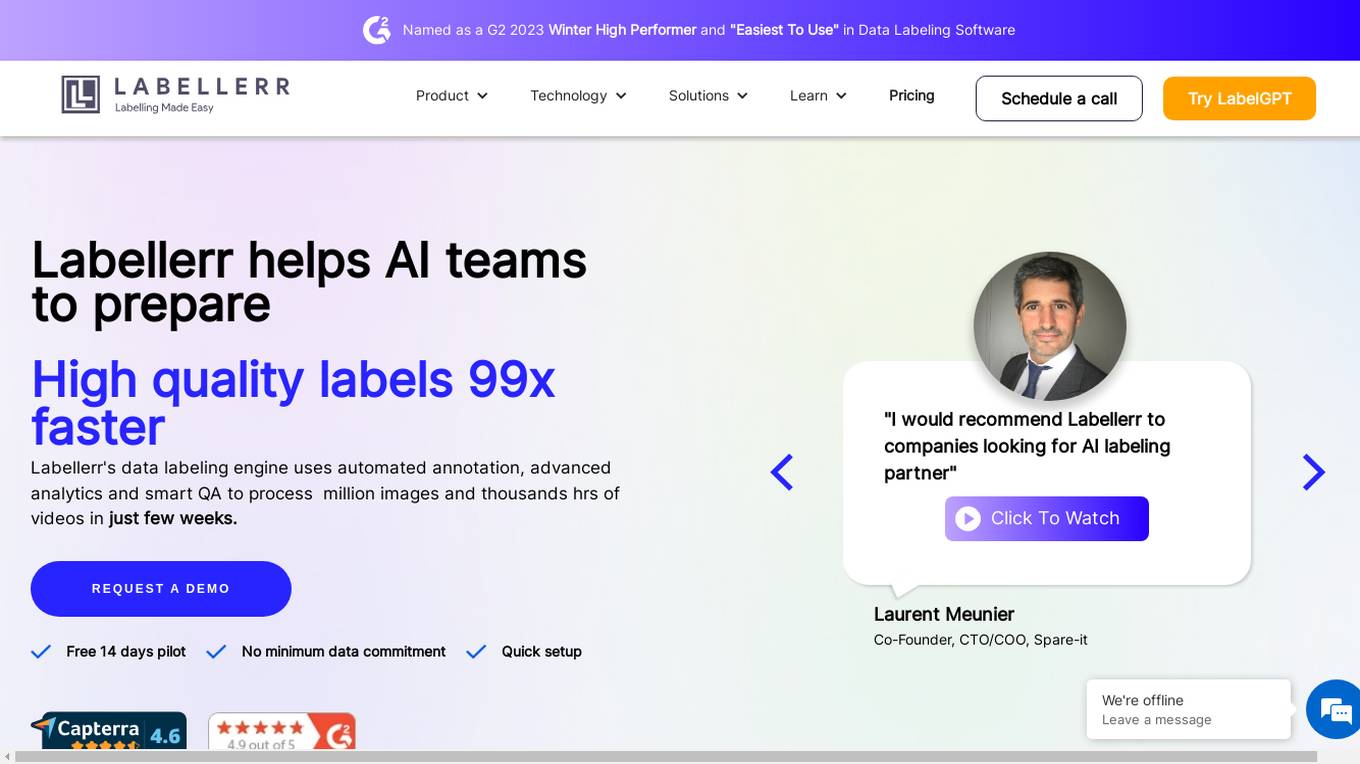

Labellerr

Labellerr is data labeling software that helps AI teams prepare high-quality labels 99 times faster for vision, NLP, and LLM models. The platform offers automated annotation, advanced analytics, and smart QA, and can process millions of images and thousands of hours of video in a few weeks. Its analytics provide full control over output quality and project management, making it useful for AI labeling partners.

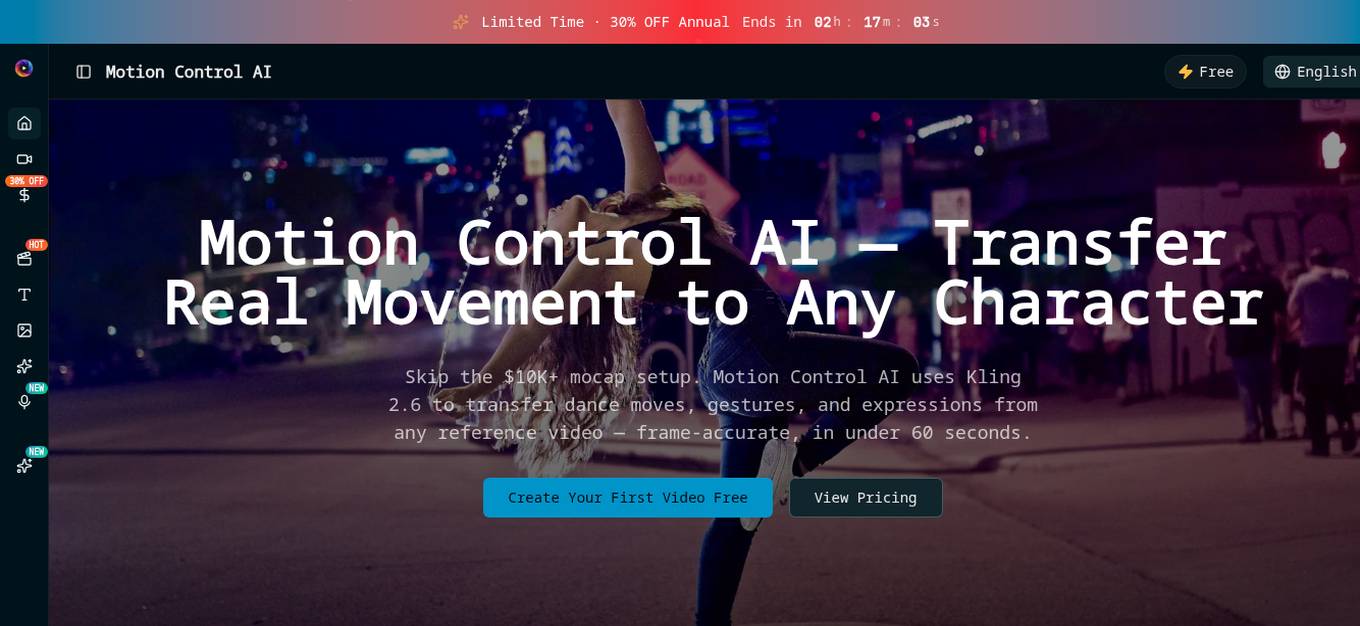

Motion Control AI

Motion Control AI is an AI video generator, powered by Kling 2.6, that transfers real human movement from reference videos to character images with frame-accurate precision. Features include motion transfer, gesture control, expression sync, and cinematic realism, letting users create professional videos in minutes without expensive motion capture equipment. With a user-friendly interface, it serves content creation, marketing, education, and social media as a cost-effective alternative to traditional animation workflows.

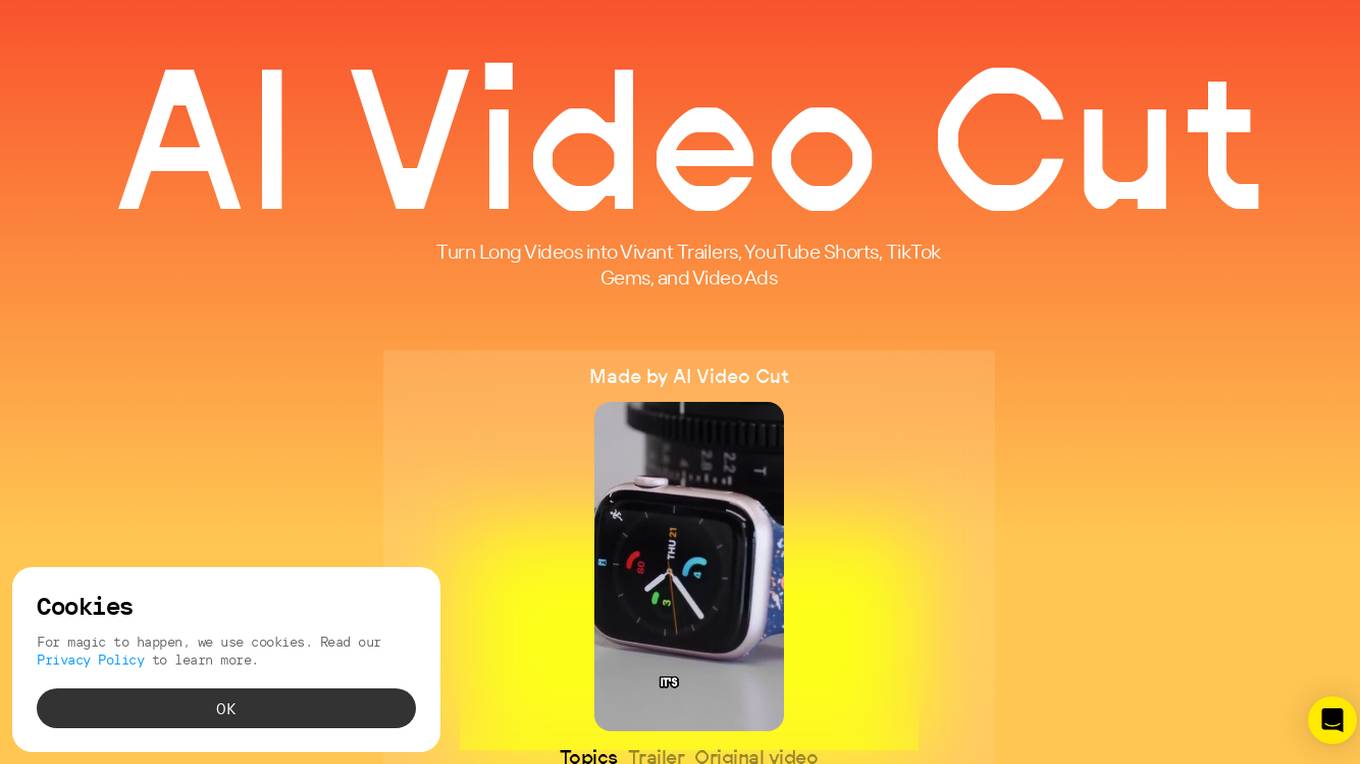

AI Video Cut

AI Video Cut is an AI tool that turns long videos into trailers, YouTube Shorts, TikTok clips, and video ads designed to go viral. It works with English-language conversational videos up to 30 minutes long. Features include viral-content generation, tone-of-voice options, flexible length control, precise aspect ratios, and a Telegram bot for easy access. It is aimed at content creators, influencers, digital marketers, social media managers, e-commerce businesses, event planners, and podcasters who want to adapt their videos for different platforms.

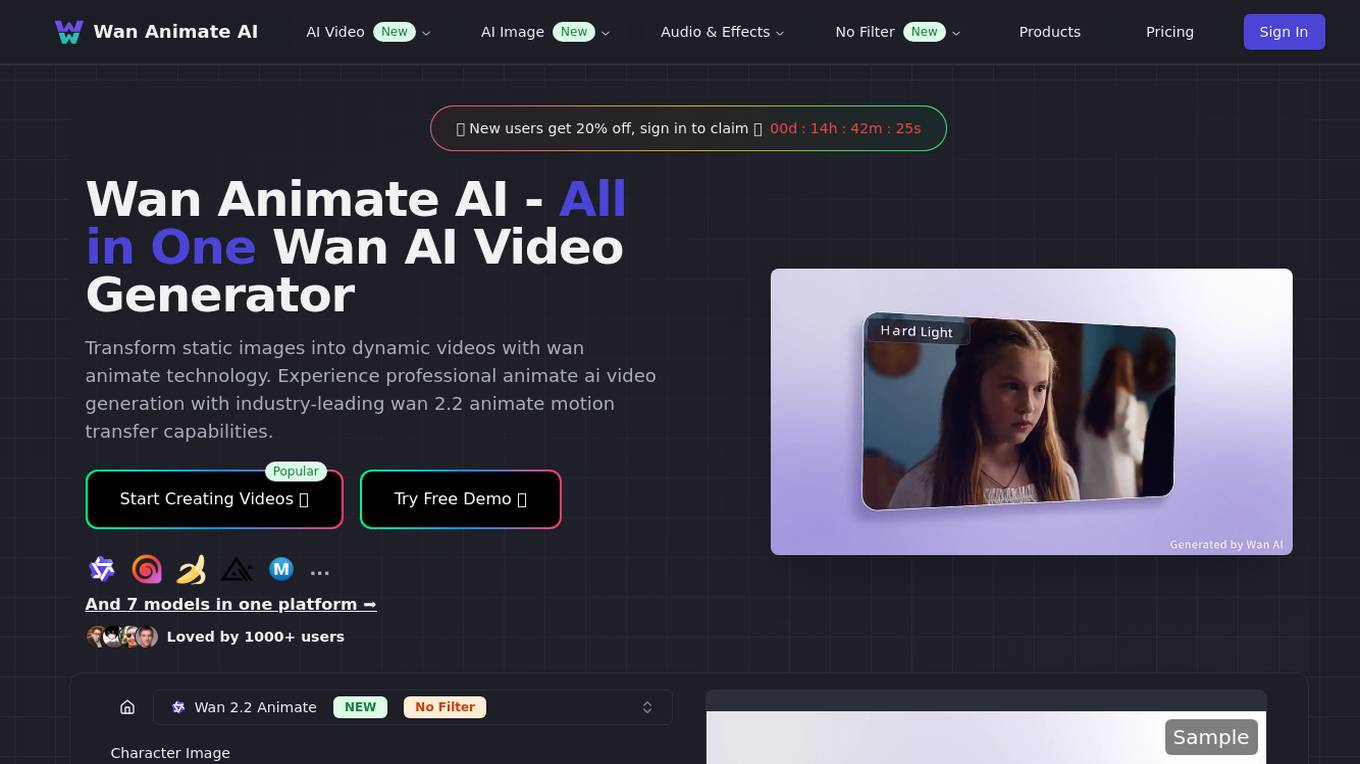

Wan Animate AI

Wan Animate AI is an all-in-one AI video generator platform that turns static images into dynamic videos. Its features include motion transfer, facial expression technology, environmental lighting integration, high-resolution video generation, and open-source access. Powered by Wan AI and the Wan2.2 Animate model, it supports high-quality video creation for commercial applications, with customizable settings and fast processing for entertainment, marketing, and educational projects.

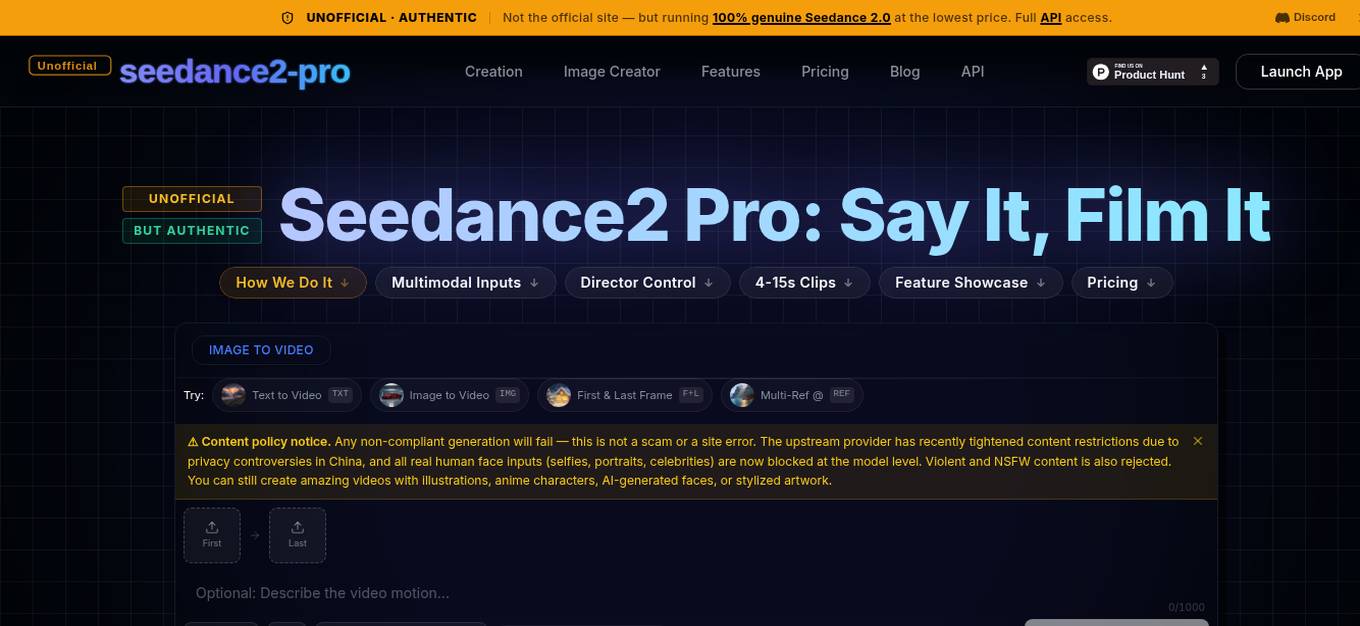

Seedance2 Pro

Seedance2 Pro is an unofficial AI video generator for creating cinematic clips from text, image, video, and audio references. It offers full API access, multimodal inputs, director controls, and clips of 4 to 15 seconds. Users can mix references to maintain consistency, mimic camera moves, and strengthen storytelling. The platform provides affordable access to AI video generation without requiring a Chinese phone number or local account.

Ray3 AI

Ray3 AI is an intelligent video model for storytelling, with state-of-the-art physics and consistency. It offers studio-grade HDR, visual reasoning, and annotation tools for precise control over video generation. Creators can turn images into HDR videos, and its advanced editing features serve both professionals and hobbyists.

0 - Open Source AI Tools

20 - OpenAI GPTs

AE Expression Expert

An assistant for creating and troubleshooting expressions in Adobe After Effects.

How's it made?

I identify items in your photos, find videos showing how they are made, and describe the process.

🤖 SmartLink Integrator 🌎

Your AI bridge to the Internet of Things! Easily connect, control, and automate your smart devices with voice or text commands. 🏠💎

TrafficFlow

A specialized AI for optimizing traffic control, predicting bottlenecks, and improving road safety.

Sim-Low

Meal planner with 1) calorie control, 2) family/personal plans, 3) nutritional summaries, and 4) shopping lists.

Addiction Assistant

A mentor for those struggling with control over their substance use, offering guidance, resources, and support for sobriety. In case of relapse, it provides practical steps and resources, including web links, phone numbers, and email addresses.

Project Controlling Advisor

Provides financial oversight and project cost control support.

Hierarchical Topic Exploration

Explore any topic through an advanced, interactive hierarchical map with streamlined controls. Begin with !start [topic].

BITE Model Analyzer by Dr. Steven Hassan

Discover whether your group, relationship, or organization uses specific methods to recruit people and maintain control over them.