Best AI tools for Check Schedules

20 - AI tool Sites

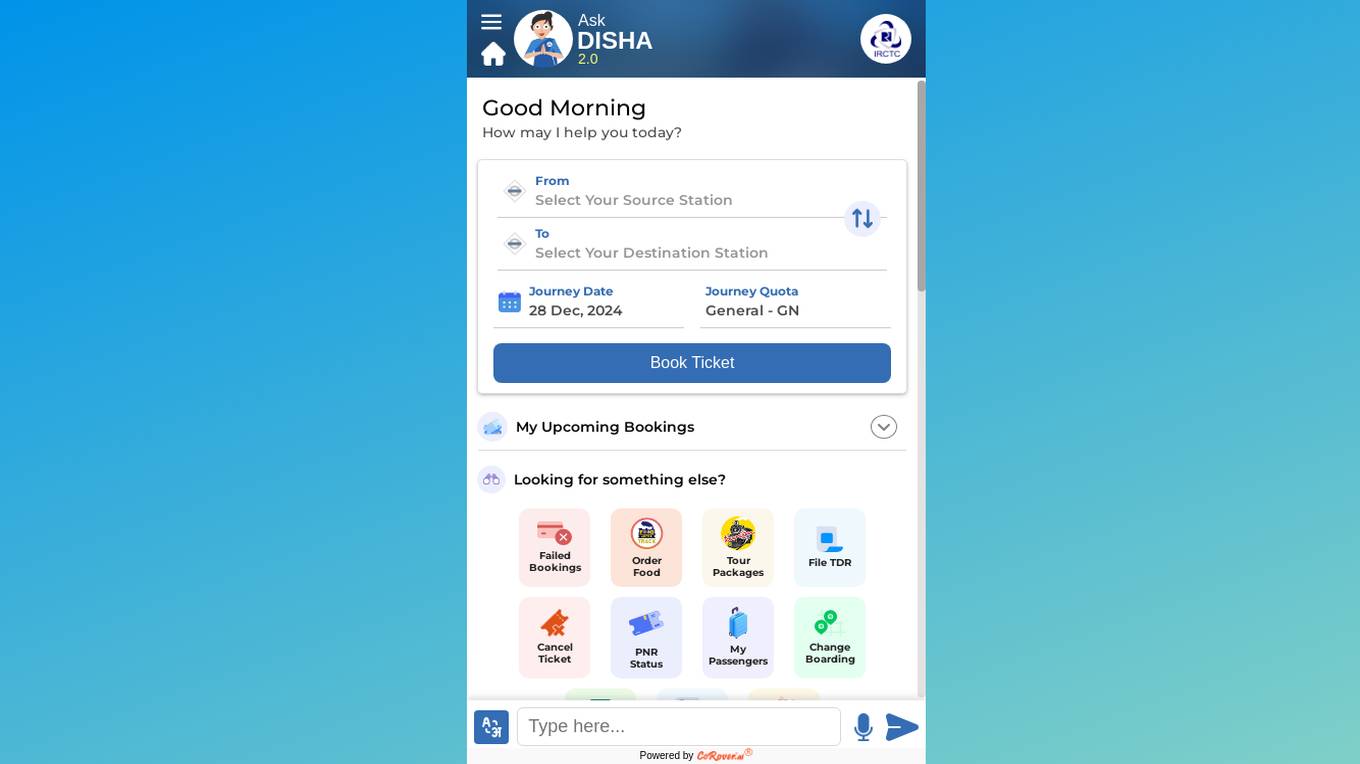

CoRover.ai

CoRover.ai is an AI-powered chatbot designed to help users book train tickets seamlessly through conversation. The chatbot, named AskDISHA, is integrated with the IRCTC platform, allowing users to inquire about train schedules, ticket availability, and make bookings effortlessly. CoRover.ai leverages artificial intelligence to provide personalized assistance and streamline the ticket booking process for users, enhancing their overall experience.

LuongSonTV

LuongSonTV is a leading destination for football fans in Vietnam in the digital age, offering a seamless online viewing experience with high-definition images. It provides free high-quality viewing links, supports all devices, and allows easy access to top international matches and domestic tournaments. LuongSonTV promptly updates match schedules, highlight videos, rankings, and results, so fans can follow the whole season without missing a moment.

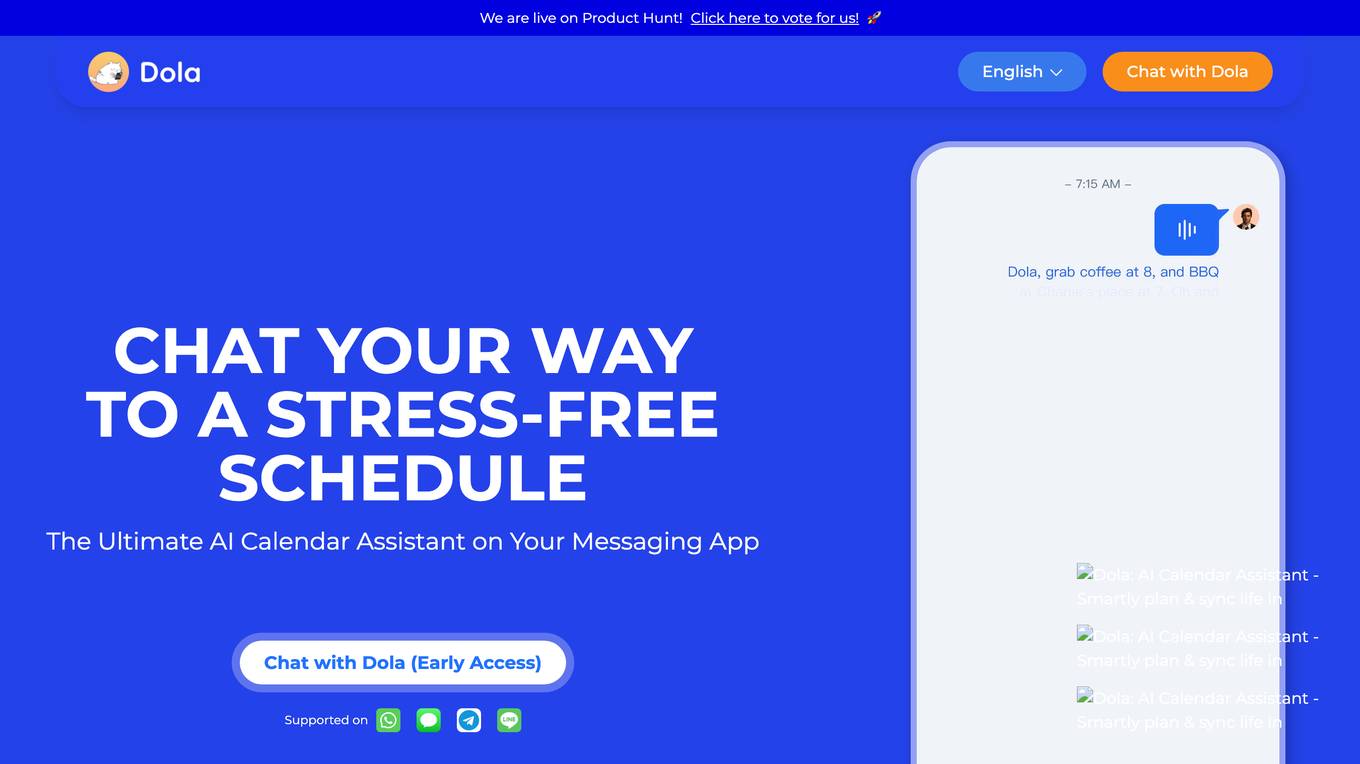

Dola

Dola is an AI calendar assistant that helps users schedule their lives efficiently and save time. It allows users to set reminders, make calendar events, and manage tasks through natural language communication. Dola works with voice messages, text messages, and images, making it a versatile and user-friendly tool. With features like smarter scheduling, daily weather reports, faster search, and seamless integration with popular calendar apps, Dola aims to simplify task and time management for its users. The application has received positive feedback for its accuracy, ease of use, and ability to sync across multiple devices.

HowsThisGoing

HowsThisGoing is an AI-powered application designed to streamline team communication and productivity by enabling users to set up standups in Slack within seconds. The platform offers features such as automatic standups, AI summaries, custom tests, analytics & reporting, and workflow scheduling. Users can easily create workflows, generate AI reports, and track team performance efficiently. HowsThisGoing provides unlimited benefits at a flat price, making it a cost-effective solution for teams of all sizes.

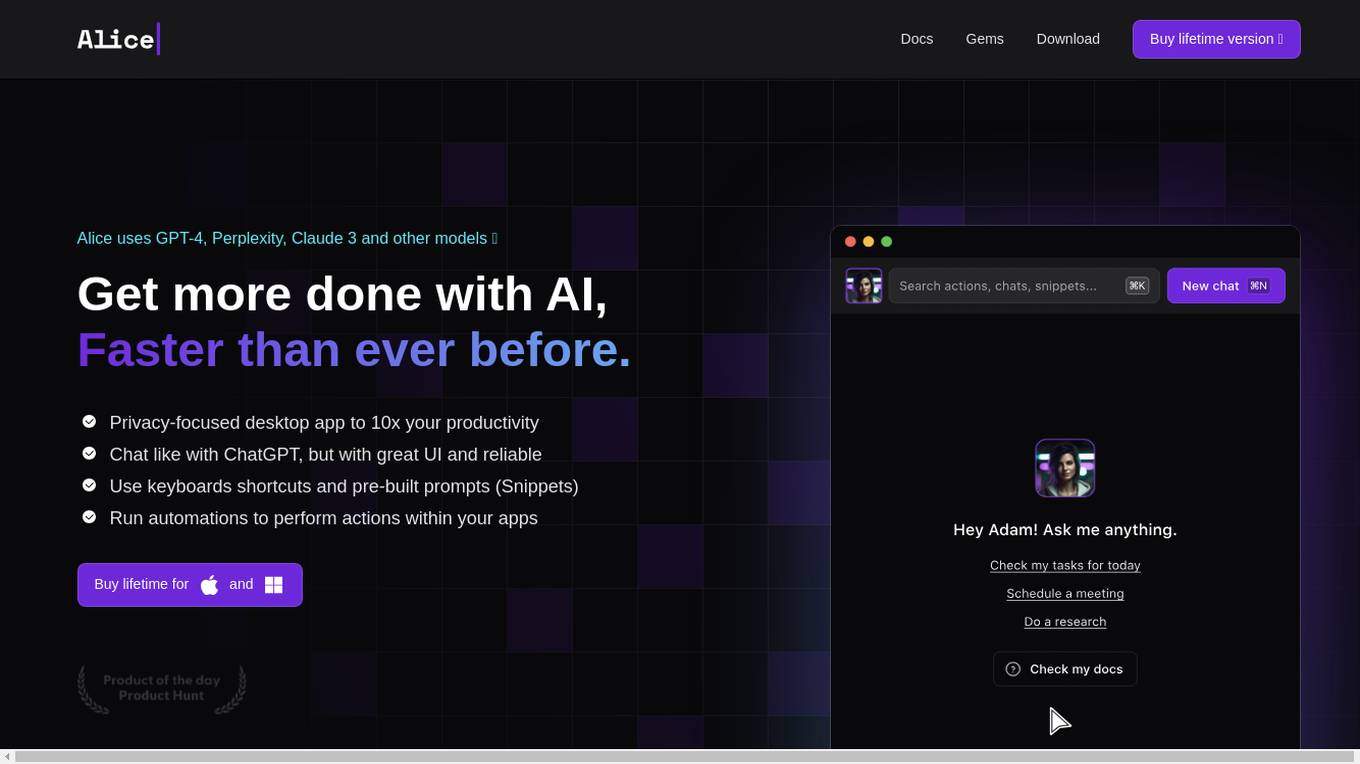

Alice App

Alice is a desktop application that provides access to advanced AI models like GPT-4, Perplexity, Claude 3, and others. It offers a user-friendly interface with features such as keyboard shortcuts, pre-built prompts (Snippets), and the ability to run automations within other applications. Alice is designed to enhance productivity and streamline tasks by providing quick access to AI-powered assistance.

Felix

Felix is a Slack AI assistant that helps you get work done faster and more efficiently. With Felix, you can:

* Schedule meetings
* Set reminders
* Create tasks
* Get news and weather updates
* And much more!

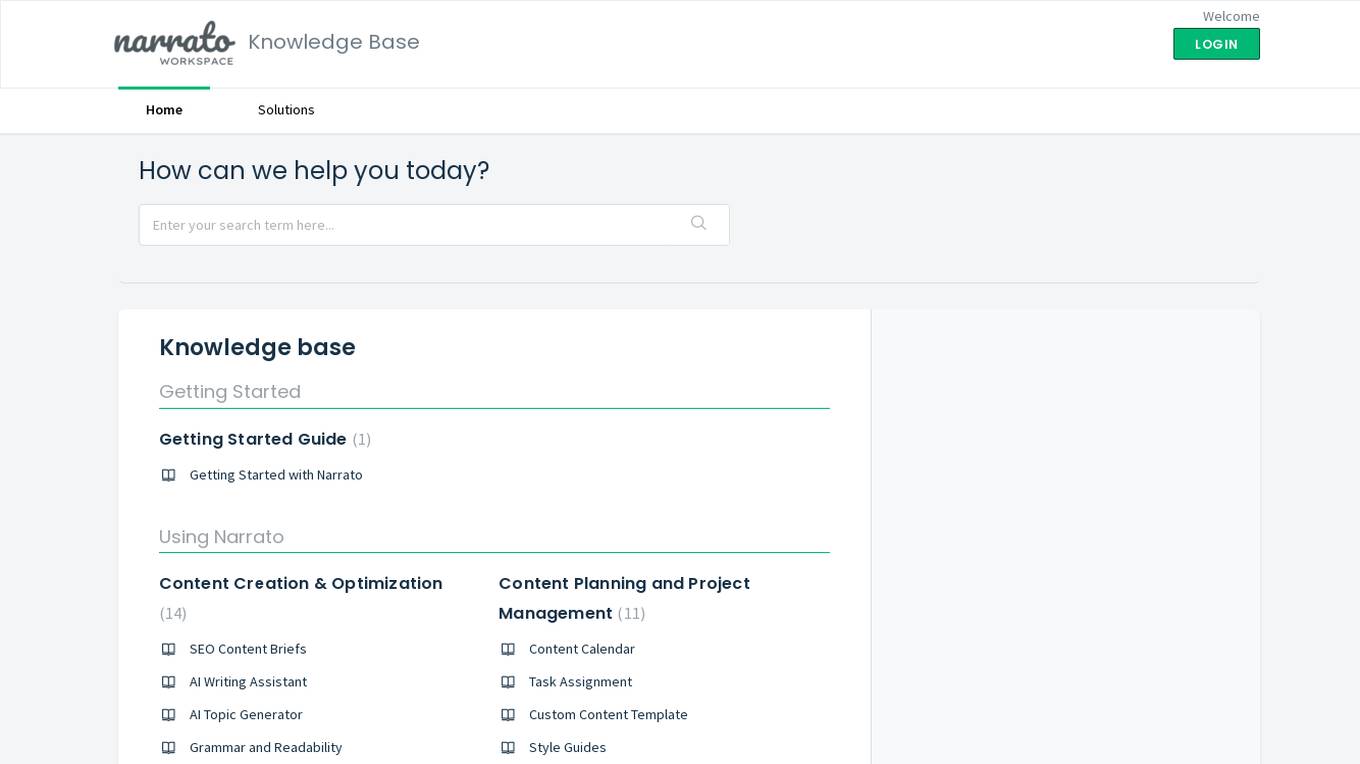

Narrato

Narrato is an AI-powered content creation and project management platform that offers a wide range of features to assist users in creating high-quality content efficiently. It provides tools such as AI writing assistant, topic generator, grammar and readability checker, plagiarism detector, content calendar, task assignment, custom content templates, style guides, freelancer management, user roles management, content collaboration, WordPress publishing, image search, and more. Narrato aims to streamline the content creation process for individuals and teams, making it easier to manage projects and collaborate effectively.

Harver

Harver is a talent assessment platform that helps businesses make better hiring decisions faster. It offers a suite of solutions, including assessments, video interviews, scheduling, and reference checking, that can be used to optimize the hiring process and reduce time to hire. Harver's assessments are based on data and scientific insights, and they help businesses identify the right people for the right roles. Harver also offers support for the full talent lifecycle, including talent management, mobility, and development.

Maintenance Mode

The website is currently undergoing scheduled maintenance and is temporarily unavailable. Please check back in a minute for access to the content. The maintenance is being conducted to ensure the smooth functioning and performance of the website. We apologize for any inconvenience caused and appreciate your patience.

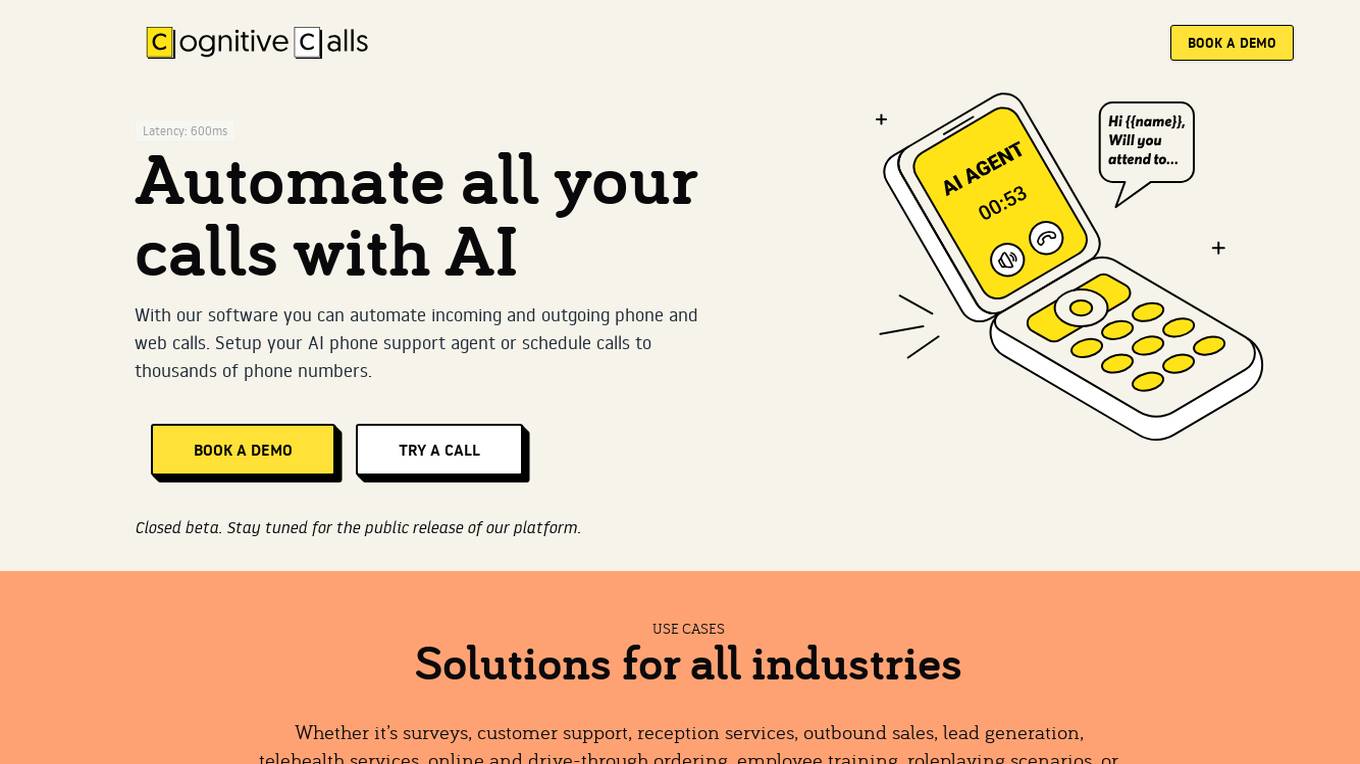

Cognitive Calls

Cognitive Calls is an AI-powered platform that enables users to automate incoming and outgoing phone and web calls. It offers solutions for various industries such as customer support, appointment scheduling, technical support, real estate, hospitality, insurance, surveys, sales follow-up, recruiting, debt collection, telehealth check-ins, reminders, alerts, voice assistants, learning apps, role-playing scenarios, ecommerce, drive-through systems, automotive systems, and robotic controls. The platform aims to enhance customer interactions by providing personalized support and efficient call handling through voice AI technology.

noluai.com

The website page provides detailed insights into the mechanics and technologies behind poker platforms in the online gaming industry. It delves into topics such as Random Number Generators (RNGs), fair play, anti-cheat technologies, server-side logic, player matching, platform stability, multi-table functionality, mobile vs desktop platforms, in-game communication systems, real-time hand histories, hand evaluation algorithms, card shuffling algorithms, security layers, handling player disconnections, payment system integration, casino bonus integration, leaderboards, low latency importance, rebuy and add-on mechanics, automatic tournament scheduling, Sit & Go vs scheduled tournaments, virtual economy, AI opponents, player statistics, sound design, testing processes, technical issues, emerging technologies, regulatory compliance, taxes handling, game variations, card animation, and the future of poker platform mechanics.

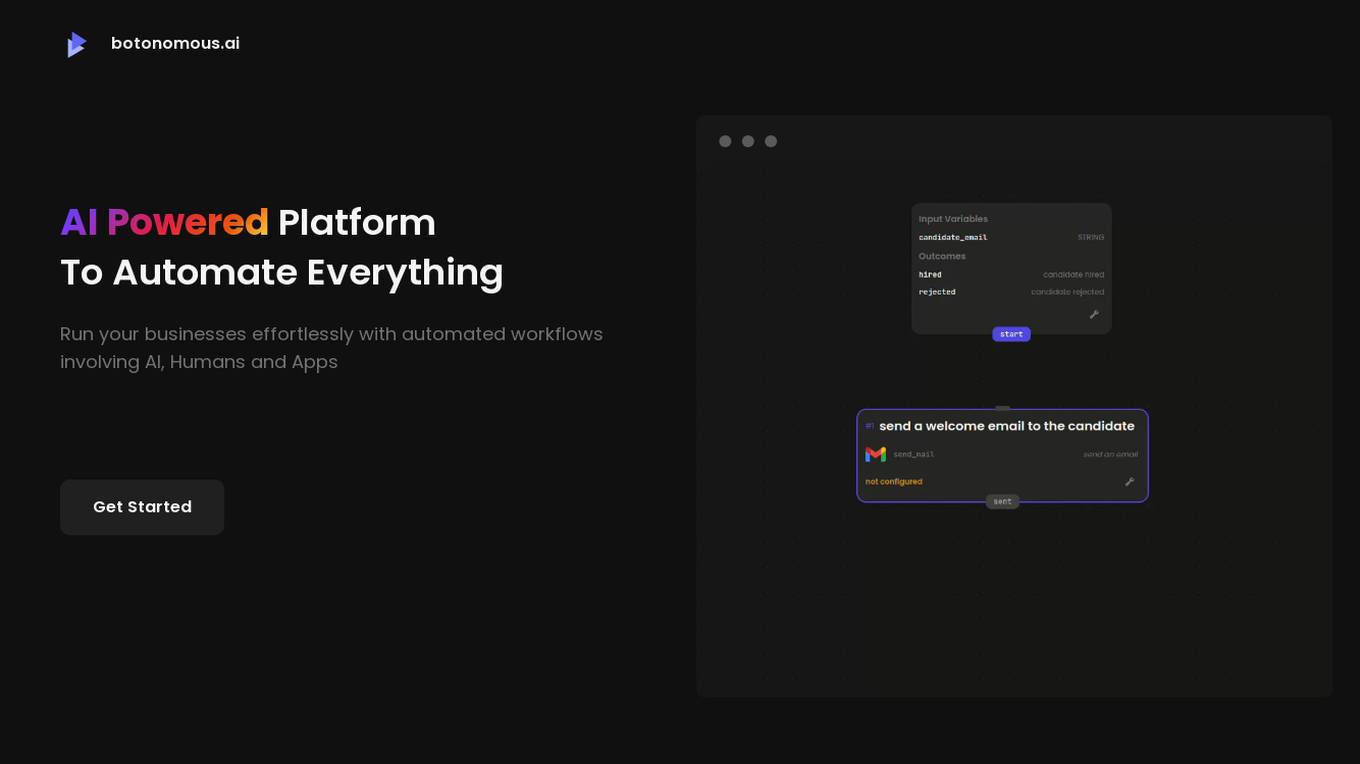

Botonomous

Botonomous is an AI-powered platform that helps businesses automate their workflows. With Botonomous, you can create advanced automations for any domain, check your flows for potential errors before running them, run multiple nodes concurrently without waiting for the completion of the previous step, create complex, non-linear flows with no-code, and design human interactions to participate in your automations. Botonomous also offers a variety of other features, such as webhooks, scheduled triggers, secure secret management, and a developer community.

VanillaHR

VanillaHR is an AI-powered hiring platform that helps businesses find the best candidates for their open positions. The platform uses AI to automate tasks such as screening resumes, scheduling interviews, and conducting background checks. This helps businesses save time and money while also improving the quality of their hires. VanillaHR is trusted by some of the world's leading companies, including Google, Amazon, and Microsoft.

SmileDial

SmileDial is a natural dental AI receptionist designed for Canadian dental practices. It offers a 24/7 AI receptionist system to help dentists save time, reduce costs, and enhance patient satisfaction. The AI-driven receptionist, named Susan, assists in real-time scheduling, automated reminders, insurance checks, and PHIPA compliance. SmileDial aims to maximize bookings, decrease no-shows, and offload time-consuming tasks, ultimately improving the efficiency and patient experience in dental offices.

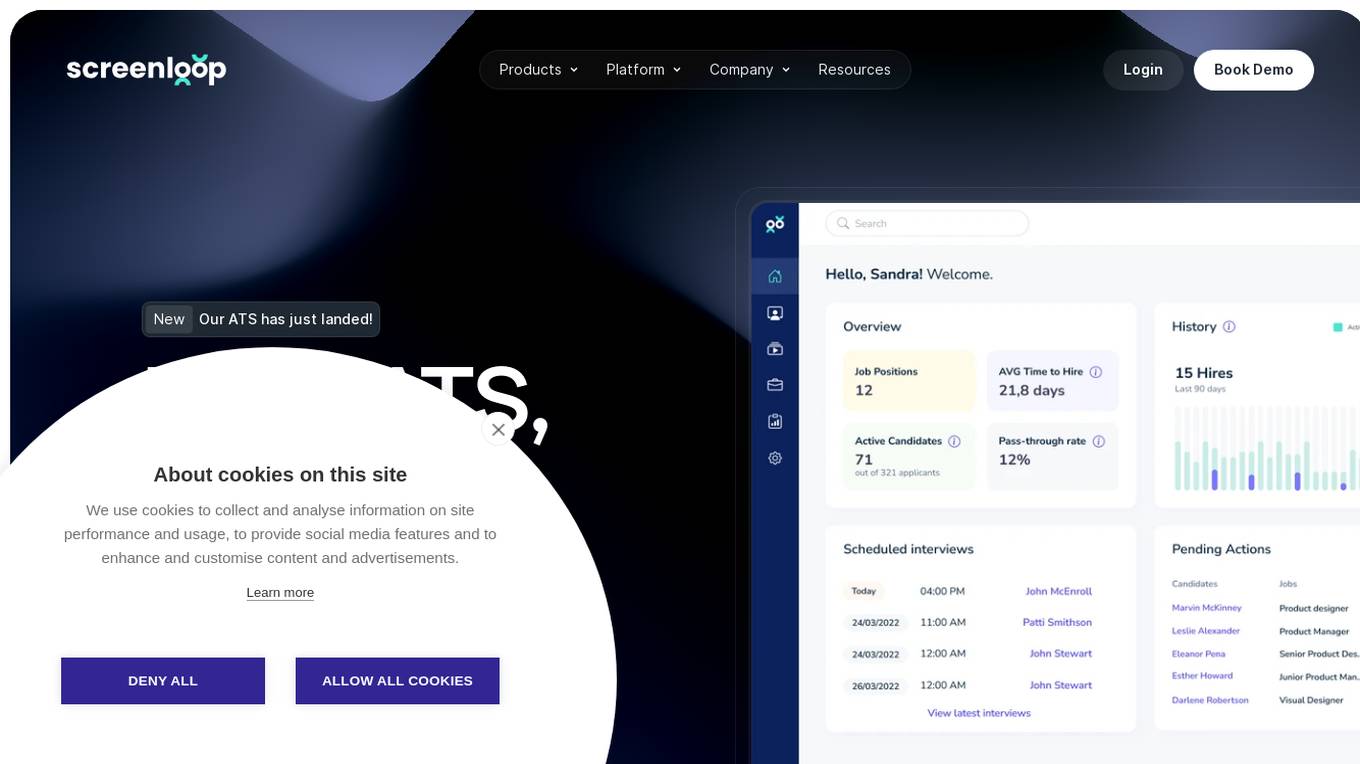

Screenloop

Screenloop is an applicant tracking system (ATS) that goes beyond traditional tracking systems by offering interview intelligence, AI notes, and more at a fraction of the cost. It is designed to help businesses win top candidates, scale seamlessly, and use powerful AI to streamline operations and reduce costs. Screenloop's Talent Operations Platform provides access to a full suite of tools, including applicant tracking, interview intelligence, pulse surveys, background checks, referencing, interviewer training, data & analytics, and more.

Essay Check

Essay Check is a free AI-powered tool that helps students, teachers, content creators, SEO specialists, and legal experts refine their writing, detect plagiarism, and identify AI-generated content. With its user-friendly interface and advanced algorithms, Essay Check analyzes text to identify grammatical errors, spelling mistakes, instances of plagiarism, and the likelihood that content was written using AI. The tool provides detailed feedback and suggestions to help users improve their writing and ensure its originality and authenticity.

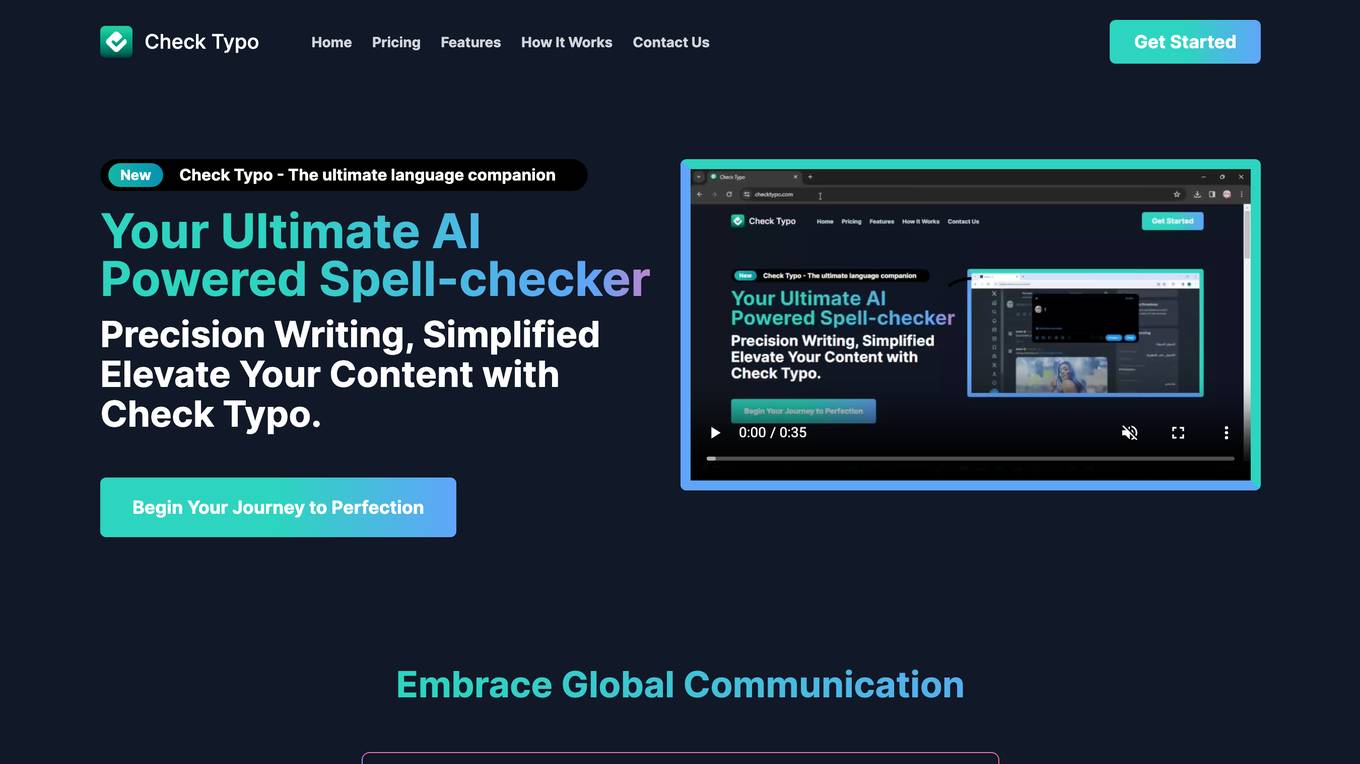

Check Typo

Check Typo is an AI-powered spell-checker tool designed to assist users in eliminating typos and grammatical errors from their writing. It seamlessly integrates within various websites, supports multiple languages, and preserves the original text's style and tone. Ideal for students, professionals, and writers, Check Typo enhances the writing experience with AI-driven precision, making it perfect for error-free emails, professional networking on platforms like LinkedIn, and enhancing social media posts across different platforms.

Copyright Check AI

Copyright Check AI is a service that helps protect brands from legal disputes related to copyright violations on social media. The software automatically detects copyright infringements on social profiles, reducing the risk of costly legal action. It is used by Heads of Marketing and In-House Counsel at top brands to avoid lawsuits and potential damages. The service offers a done-for-you audit to highlight violations, deliver reports, and provide ongoing monitoring to ensure brand protection.

Double Check AI

Double Check AI is an AI homework helper designed to assist college students in completing their assignments quickly and accurately. It offers instant answers, detailed explanations, and advanced recognition capabilities for solving complex problems. The tool is undetectable and plagiarism-free, making it a valuable resource for students looking to boost their grades and save time on homework.

Fact Check Anything

Fact Check Anything (FCA) is a browser extension that allows users to fact-check information on the internet. It uses AI to verify statements and provide users with reliable sources. FCA is available for all browsers using the Chromium engine on Windows or macOS. It is easy to use and works on any website. FCA is a valuable tool for anyone who wants to stay informed and fight against misinformation.

0 - Open Source AI Tools

20 - OpenAI Gpts

📅 Schedule Companion | ゆみちゃん

Paste messages! Personal assistant for managing/planning schedules and tasks with Google Calendar

✍ Schedule Companion | ゆみちゃん

Paste messages! Personal assistant for managing/planning schedules and tasks with Google Calendar

La Chaîne Foot AI

An AI to find the right channel and never miss your favorite football match!

Sentitrac GPT

Get information about pro teams (NHL, NBA, NFL, MLB) by calling the ndricks Software Sports API.

Bookmobile Driver Assistant

Hello, I'm Bookmobile Driver Assistant! What would you like help with today?

Lắp Mạng Viettel Tại Hải Dương

Reliable high-speed Viettel internet installation service in Hải Dương for individuals, households, and businesses, from only 165,000 VND/month. Free installation, a free high-powered 4-port Wifi modem, and 2-6 months of free service. For details, contact: 0986 431 566