Best AI tools for Check Deployment Status

20 - AI tool Sites

Endpoint Validator

The website is a platform that provides error validation services for endpoints. Users can verify their endpoint URLs and check the status of their deployments. It helps in identifying issues related to endpoint existence and completion of deployments. The platform aims to ensure the smooth functioning of endpoints by detecting errors and providing relevant feedback to users.
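As a rough illustration of this kind of endpoint check, here is a minimal Python sketch. It is not the listed site's implementation: the function names `classify_response` and `check_endpoint`, the result labels, and the timeout are all hypothetical, and the only detail borrowed from this listing is the `DEPLOYMENT_NOT_FOUND` code that appears on the error pages described further down.

```python
import urllib.error
import urllib.request


def classify_response(status, body=""):
    """Map an HTTP status code and response body to a coarse label (labels are illustrative)."""
    if 200 <= status < 300:
        return "ok"
    if status == 404 and "DEPLOYMENT_NOT_FOUND" in body:
        # The endpoint URL resolves, but no deployment is attached to it.
        return "deployment_not_found"
    if status == 404:
        return "not_found"
    return "error"


def check_endpoint(url, timeout=5.0):
    """Fetch a URL with the standard library and classify the result."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", "replace")
            return classify_response(resp.status, body)
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError; the body is still readable.
        body = err.read().decode("utf-8", "replace")
        return classify_response(err.code, body)
    except urllib.error.URLError:
        # DNS failure, refused connection, timeout, and similar.
        return "unreachable"
```

Keeping the classification separate from the network call means the decision logic can be exercised without touching a live endpoint.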

Site Not Found

The website page seems to be a placeholder or error page with the message 'Site Not Found'. It indicates that the user may not have deployed an app yet or may have an empty directory. The page suggests referring to hosting documentation to deploy the first app. The site appears to be under construction or experiencing technical issues.

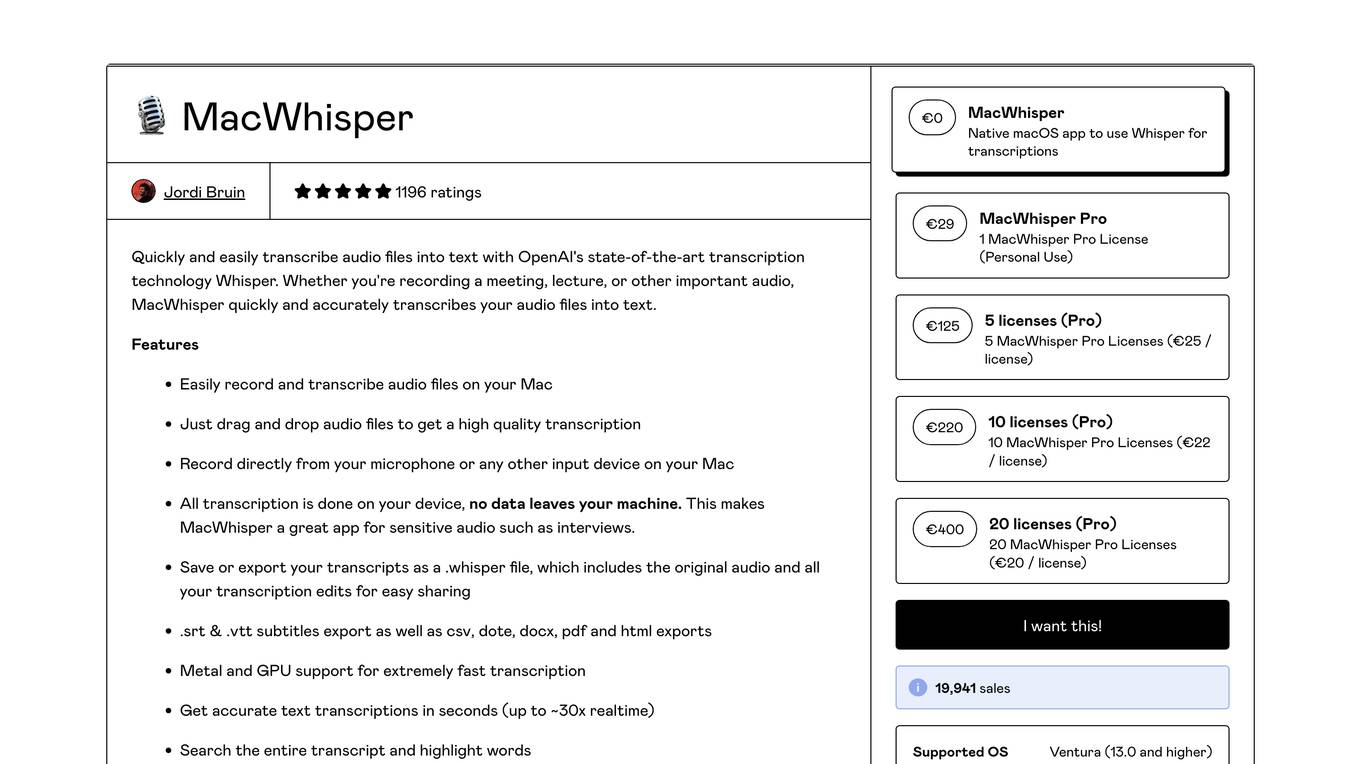

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

404 Error Finder

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::ncg7q-1770918099254-dd1178523b2c) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::22md2-1720772812453-4893618e160a) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::tb2c2-1757006335226-e6bd40c1a978) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::smrsr-1771524097601-5775e36f41ad) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::5wd8j-1770917142388-c57c677706b2) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::2m6c8-1741625060952-6f4086286312) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::9dqr4-1736268911417-fd3e8899e116) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Not Found

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::k7xdt-1736614074909-2dc430118e75) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::7crbp-1720289011850-d12041b250e9) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::drw9g-1771091771764-93b091583900) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::tncvm-1771611537656-506384c2f64e) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::7j749-1771698341325-a827948f8d2e) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::7rd4m-1725901316906-8c71a7a2cbd7) for reference. Users are directed to check the documentation for further information and troubleshooting.

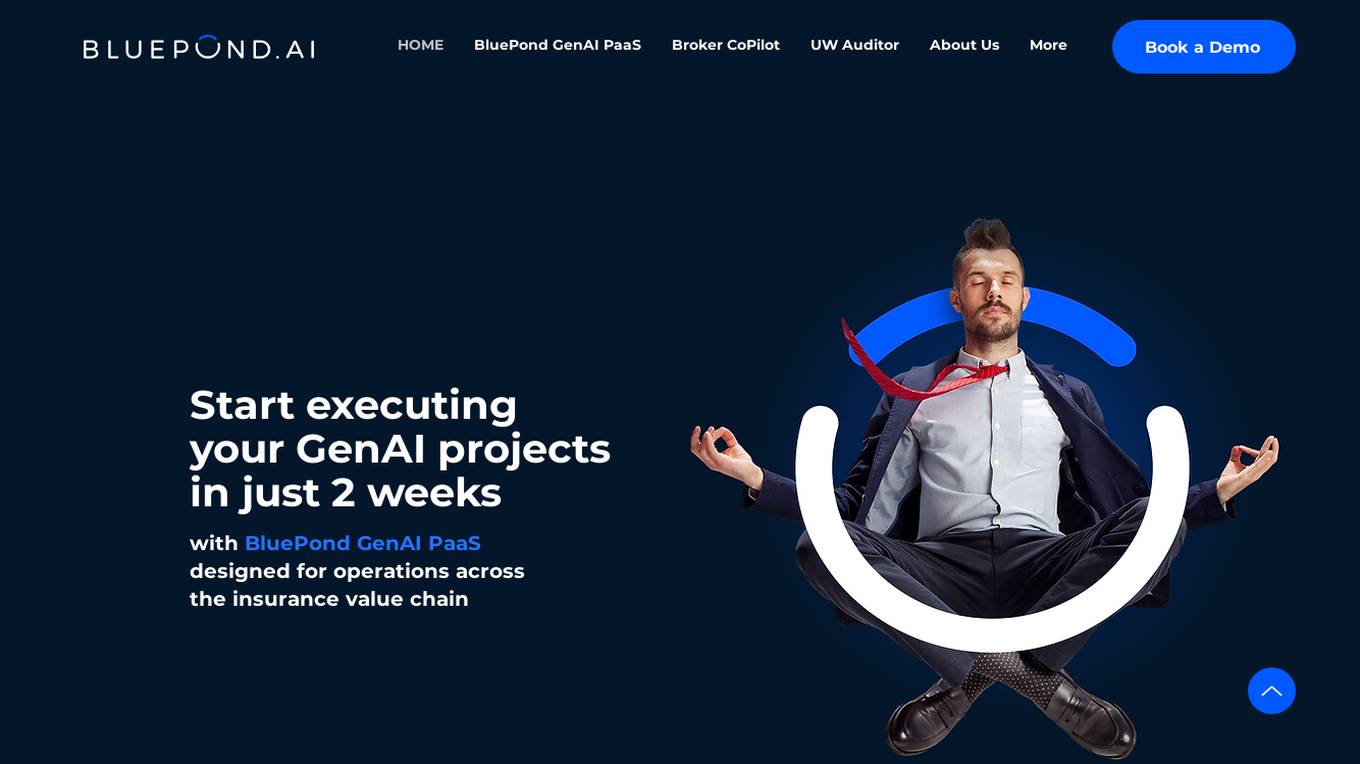

BluePond GenAI PaaS

BluePond GenAI PaaS is an automation and insights powerhouse tailored for Property and Casualty Insurance. It offers end-to-end execution support from GenAI data scientists, engineers & human-in-the-loop processing. The platform provides automated intake extraction, classification enrichment, validation, complex document analysis, workflow automation, and decisioning. Users benefit from rapid deployment, complete control of data & IP, and a pre-trained P&C domain library. BluePond GenAI PaaS aims to energize and expedite GenAI initiatives throughout the insurance value chain.

Diffblue Cover

Diffblue Cover is an autonomous AI-powered unit test writing tool for Java development teams. It uses next-generation autonomous AI to automate unit testing, freeing up developers to focus on more creative work. Diffblue Cover can write a complete and correct Java unit test every 2 seconds, and it is directly integrated into CI pipelines, unlike AI-powered code suggestions that require developers to check the code for bugs. Diffblue Cover is trusted by the world's leading organizations, including Goldman Sachs, and has been proven to improve quality, lower developer effort, help with code understanding, reduce risk, and increase deployment frequency.

Tangram Vision

Tangram Vision is a company that provides sensor calibration tools and infrastructure for robotics and autonomous vehicles. Their products include MetriCal, a high-speed bundle adjustment software for precise sensor calibration, and AutoCal, an on-device, real-time calibration health check and adjustment tool. Tangram Vision also offers a high-resolution depth sensor called HiFi, which combines high-resolution depth data with high-powered AI capabilities. The company's mission is to accelerate the development and deployment of autonomous systems by providing the tools and infrastructure needed to ensure the accuracy and reliability of sensors.

StreamDeploy

StreamDeploy is an AI-powered cloud deployment platform designed to streamline and secure application deployment for agile teams. It offers a range of features to help developers maximize productivity and minimize costs, including a Dockerfile generator, automated security checks, and support for continuous integration and delivery (CI/CD) pipelines. StreamDeploy is currently in closed beta, but interested users can book a demo or follow the company on Twitter for updates.

0 - Open Source AI Tools

20 - OpenAI Gpts

Credit Score Check

Guides on checking and monitoring credit scores, with a financial and informative tone.

Backloger.ai - Requirements Health Check

Drop in any requirements; I'll reduce ambiguity using a requirement health check.

Website Worth Calculator - Check Website Value

Calculate website worth by analyzing monthly revenue, using industry-standard valuation methods to provide approximate, informative value estimates.

News Bias Corrector

Balances out bias and researches live reports to give you a more balanced view (Paste in the text you want to check)

Service Rater

Helps check and provide feedback on service providers like contractors and plumbers.

Are You Weather Dependent or Not?

A mental health self-check tool assessing weather dependency. Powered by WeatherMind

AI Essay Writer

ChatGPT Essay Writer helps you to write essays with OpenAI. Generate Professional Essays with Plagiarism Check, Formatting, Cost Estimation & More.

Biblical Insights Hub & Navigator

Provides in-depth insights based on familiarity with the historical & cultural context of biblical times including an understanding of theological concepts. It's a Bible Scholar in your pocket!!! Verify Before You Trust (VBYT): Always Double-Check ChatGPT's Insights!

A/B Test GPT

Calculate the results of your A/B test and check whether the result is statistically significant or due to chance.
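One common way to run such a significance check is a two-proportion z-test. The sketch below is a generic illustration, not a description of how this GPT computes its answer; the function name `two_proportion_z_test` and its parameters are hypothetical, and it assumes each variant is summarized by a conversion count and a visitor count.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the null hypothesis that A and B convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; both variants have 0% or 100% conversion")
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Example: 100 of 1000 visitors converted on A, 150 of 1000 on B.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 5%: {p < 0.05}")
```

The normal approximation behind this test is usually considered reasonable only when each variant has a fairly large sample; for small counts an exact test is the safer choice.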

Anchorage Code Navigator

EXPERIMENT - Friendly guide for navigating Anchorage Municipal Code - Double Check info

Low FODMAP Chef

Expert in crafting personalized low FODMAP recipes with representative images. Cross check all ingredients & low FODMAP servings.

Ai PDF is a GPT (using the popular Ai PDF plugin) that lets you chat with your PDF documents, ask questions about them, and have ChatGPT explain them to you. We also include page references to help you fact-check all answers.