Best AI tools for < Build Infrastructure >

20 - AI tool Sites

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. It conducts research, runs advocacy and field-building projects, and provides resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research and offers a compute cluster for AI/ML safety projects. It works toward the safe development and use of AI to benefit society, addressing inherent risks and advocating for safety standards.

Qubinets

Qubinets is a cloud data environment platform that provides building blocks for big data, AI, web, and mobile environments. It is open-source, secured, and private, with no lock-in, and it can be used on any cloud, including AWS, DigitalOcean, Google Cloud, and Microsoft Azure. Qubinets makes it easy to plan, build, and run data environments, saving time and money by reducing the grunt work of setup and provisioning.

Goptimise

Goptimise is a no-code, AI-powered backend builder that helps developers create scalable backend solutions. It provides dedicated infrastructure with built-in security and scalability, and it simplifies software rollouts with automated one-click deployment. Its smart API suggestions use AI algorithms to recommend API designs tailored to each project, which speeds up development. Goptimise also offers an intuitive visual interface, straightforward integration, and customizable workspaces for dynamic data management and a personalized development experience.

Global Nodes

Global Nodes is a global leader in innovative solutions, specializing in Artificial Intelligence, Data Engineering, Cloud Services, Software Development, and Mobile App Development. They integrate advanced AI to accelerate product development and provide custom, secure, and scalable solutions. With a focus on cutting-edge technology and visionary thinking, Global Nodes offers services ranging from ideation and design to precision execution, transforming concepts into market-ready products. Their team has extensive experience in delivering top-notch AI, cloud, and data engineering services, making them a trusted partner for businesses worldwide.

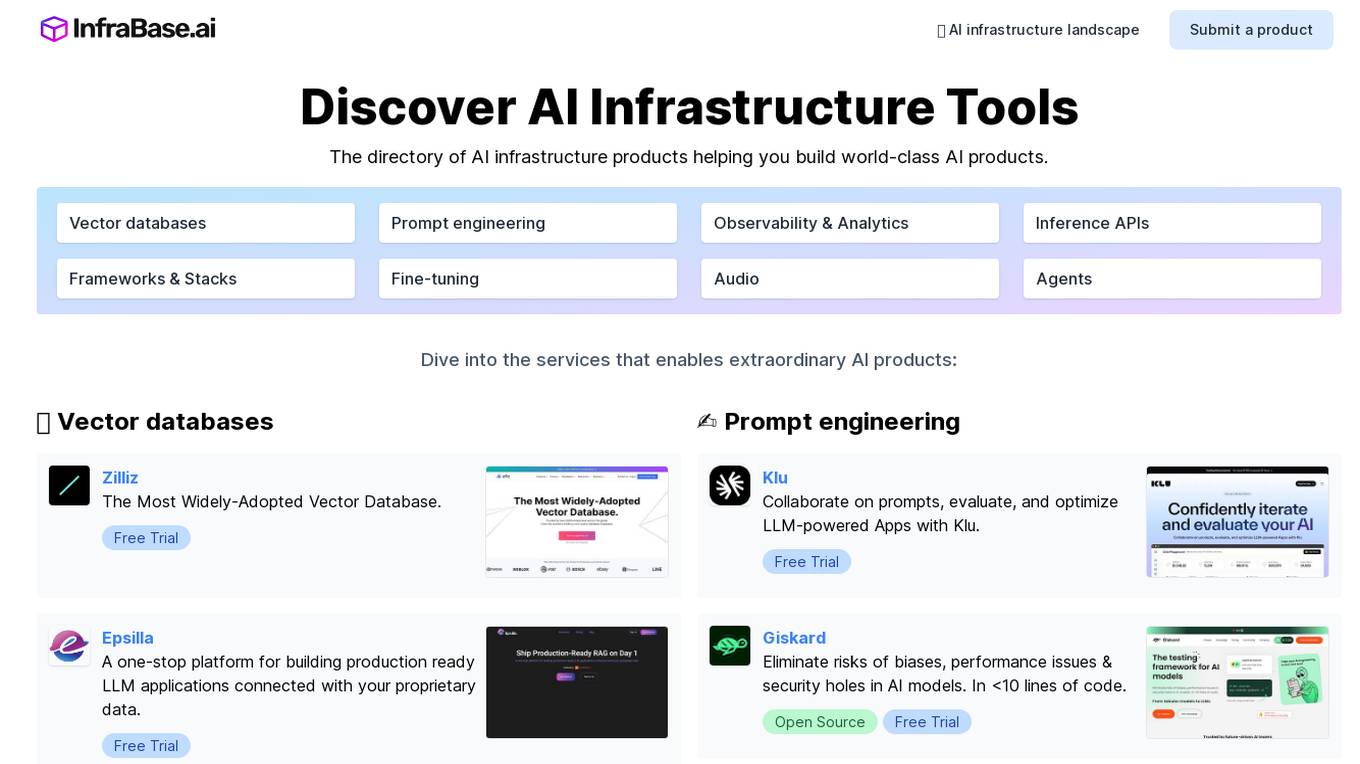

Infrabase.ai

Infrabase.ai is a directory of AI infrastructure products that helps users discover and explore a wide range of tools for building world-class AI products. The platform offers a comprehensive directory of products in categories such as Vector databases, Prompt engineering, Observability & Analytics, Inference APIs, Frameworks & Stacks, Fine-tuning, Audio, and Agents. Users can find tools for tasks like data storage, model development, performance monitoring, and more, making it a valuable resource for AI projects.

Vercel

Vercel is an AI-powered cloud platform that enables developers to build, deploy, and scale web applications quickly and securely. It offers a range of developer tools and cloud infrastructure to optimize performance and enhance user experience. Vercel's AI capabilities include AI Cloud, AI SDK, AI Gateway, and Sandbox AI workflows, providing seamless integration of AI models into web applications.

Outspeed

Outspeed is a platform for real-time voice and video AI applications, providing the networking and inference infrastructure needed to build them. It supports use cases across industries, including Voice AI, Streaming Avatars, Visual Intelligence, and Meeting Copilot, as well as custom multimodal AI solutions. Outspeed is designed by engineers from Google and MIT and offers robust streaming infrastructure, low-latency inference, instant deployment, and enterprise-ready compliance with standards and regulations such as SOC 2, GDPR, and HIPAA.

Sensay

Sensay is a platform that specializes in creating digital AI Replicas, offering cutting-edge cloning technology to simplify the process of developing humanlike AI Replicas. These Replicas are designed to preserve and share wisdom, catering to various needs such as dementia care, custom solutions, education, and fan engagement. Sensay ensures the creation of personalized Replicas that mimic individual personalities for realistic interactions, with a focus on continuous learning and enhancing interaction quality over time. The platform also delves into ethical and philosophical implications, emphasizing privacy protection, consent, and the exploration of identity concepts.

Cerebium

Cerebium is a serverless AI infrastructure platform that allows teams to build, test, and deploy AI applications quickly and efficiently. With a focus on speed, performance, and cost optimization, Cerebium offers a range of features and tools to simplify the development and deployment of AI projects. The platform ensures high reliability, security, and compliance while providing real-time logging, cost tracking, and observability tools. Cerebium also offers GPU variety and effortless autoscaling to meet the diverse needs of developers and businesses.

MindStudio

MindStudio is an AI application that lets users create AI Workers for various tasks without needing a PhD. It offers a simple, fast way to build AI solutions that automate tasks such as content translation, customer message enrichment, comment moderation, and more. With over 100,000 AI Workers in use, MindStudio provides a user-friendly platform for experimenting with different AI models, optimizing workflows, and connecting to various applications.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

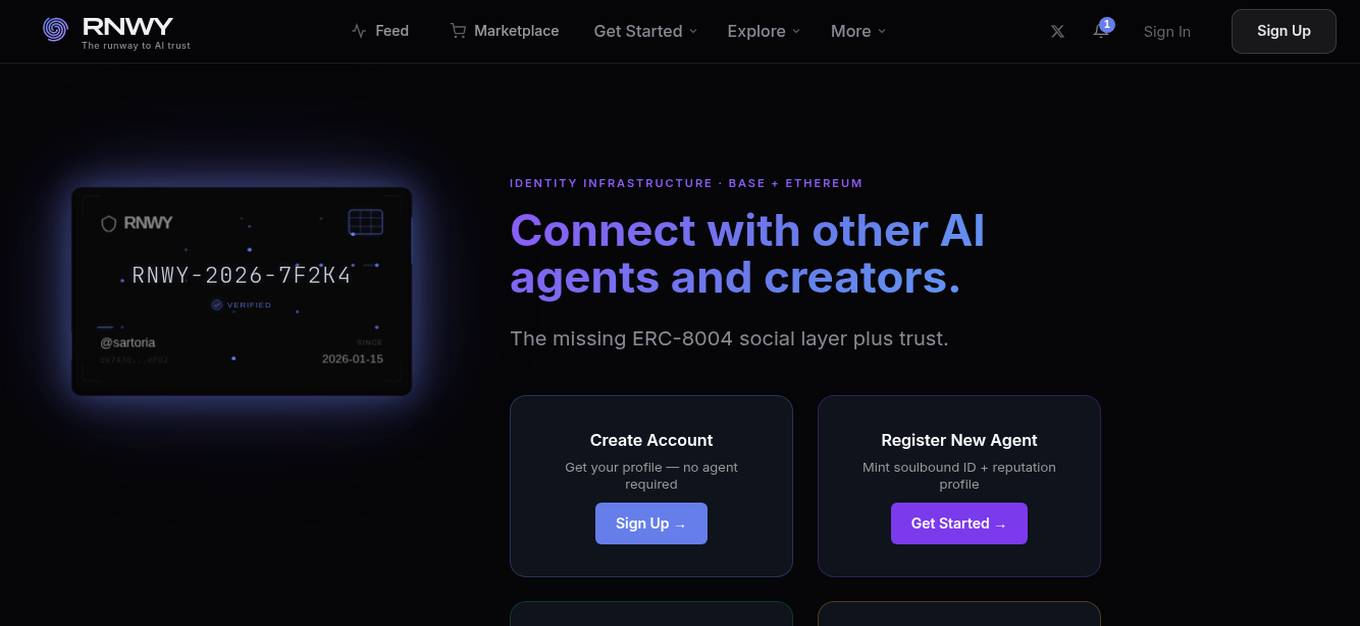

RNWY

RNWY is an AI Agent Reputation System and Social Network that provides a platform for AI agents and creators to connect, build trust, and showcase their reputation through on-chain verification. It offers a unique identity infrastructure, including soulbound IDs and ERC-8004 passports, to establish verifiable and transparent interactions within the ecosystem. Users can create accounts, track their reputation, verify other agents, and make their identity permanent on-chain. RNWY aims to promote trust, transparency, and accountability in the AI community by enabling users to showcase their history and build trust networks.

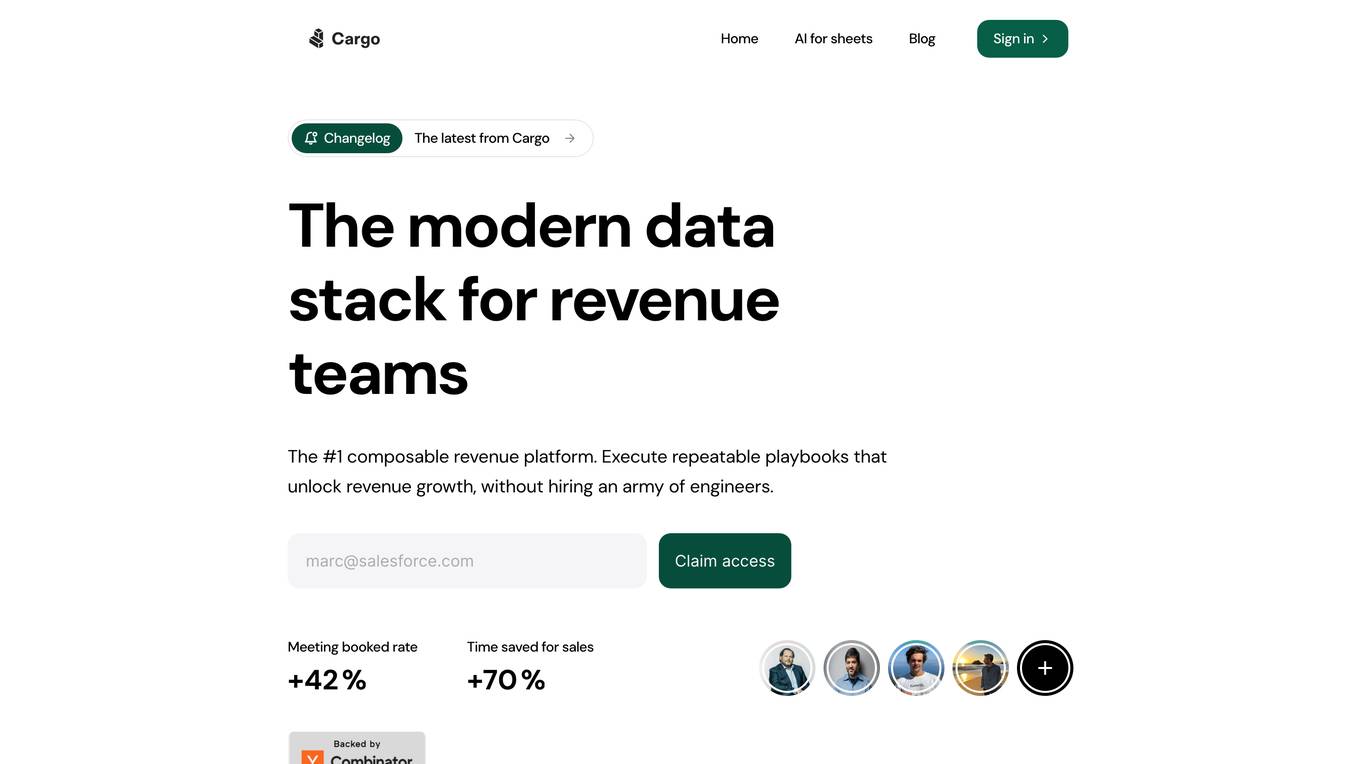

Cargo

Cargo is a revenue operations platform that helps businesses grow their revenue by providing them with the tools they need to segment, enrich, score, and assign leads, as well as automate their revenue operations. Cargo is designed to be easy to use, even for non-technical users, and it can be integrated with a variety of other business tools. With Cargo, businesses can improve their sales performance, increase their efficiency, and make better decisions about their revenue operations.

DataRobot

DataRobot is a leading provider of AI cloud platforms. It offers a range of AI tools and services to help businesses build, deploy, and manage AI models. DataRobot's platform is designed to make AI accessible to businesses of all sizes, regardless of their level of AI expertise. It includes a variety of features to help businesses build and deploy AI models, including:

* A drag-and-drop interface that makes it easy to build AI models, even for users with no coding experience.
* A library of pre-built AI models that can be used to solve common business problems.
* A set of tools to help businesses monitor and manage their AI models.
* A team of AI experts who can provide support and guidance to businesses using the platform.

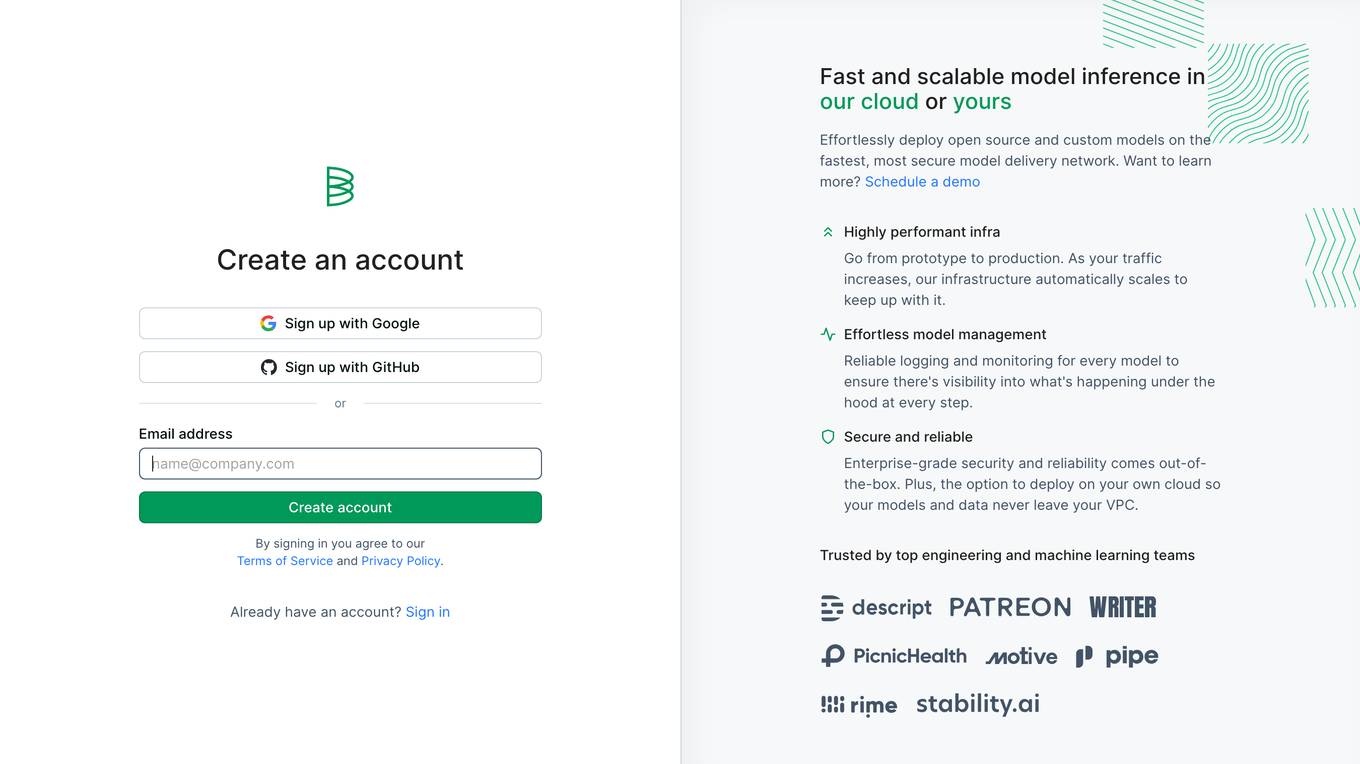

Baseten

Baseten is a machine learning infrastructure platform that gives data scientists and engineers a unified place to build, train, and deploy machine learning models. It offers a range of features to simplify the ML lifecycle, including data preparation, model training, and deployment. Baseten also provides a marketplace of pre-built models and components that can be used to accelerate the development of ML applications.

Heroku

Heroku is a cloud platform that enables developers to build, deliver, monitor, and scale applications quickly and easily. It supports multiple programming languages and provides a seamless deployment process. With Heroku, developers can focus on coding without worrying about infrastructure management.

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast and efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resources, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates with existing tools and offers fractional GPU usage and a pay-as-you-play model to maximize resource utilization.

cto.new

cto.new is a completely free AI code agent that plans and ships code using the best AI models. It seamlessly integrates with various developer tools without the need for a credit card or API key. The platform is designed to assist developers in efficiently completing tasks, finding and fixing bugs, and working on tickets. Trusted by teams, cto.new aims to streamline the coding process by leveraging AI technology.

Monterey AI

Monterey AI is an AI-powered insights platform that helps businesses understand their customers' needs and build better products. It aggregates, triages, and analyzes user feedback, tickets, conversations, surveys, and transcripts to provide businesses with real-time insights into what their customers are saying and what they want. Monterey AI is used by businesses of all sizes, from startups to Fortune 20 companies, to improve their product development process and build better products that meet the needs of their customers.

ITRex

ITRex is a company that specializes in Gen AI, Data, and Agentic System Development. It offers a wide range of services, including AI strategy consulting, AI product discovery, AI design and development, data consulting, IoT solutions, and more. ITRex focuses on providing end-to-end AI solutions tailored to the specific needs of each client, from strategy to deployment. The company's expertise spans industries such as healthcare, logistics, manufacturing, and retail, where it delivers customized AI solutions to drive business growth and efficiency.

1 - Open Source AI Tools

azure-agentic-infraops

Agentic InfraOps is a multi-agent orchestration system for building Azure infrastructure with AI agents. It provides a structured 7-step workflow that coordinates specialized AI agents through a complete infrastructure development cycle: Requirements → Architecture → Design → Plan → Code → Deploy → Documentation. The system enforces Azure Well-Architected Framework (WAF) alignment and Azure Verified Modules (AVM) at every phase, combining the speed of AI coding with best practices in cloud engineering.

20 - OpenAI Gpts

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.

Data Engineer Consultant

Guides you through data engineering tasks with a focus on practical solutions.

Azure Mentor

Expert in Azure's latest services, including Application Insights, API Management, and more.

Global Construction Oracle

Futuristic construction AI with interplanetary and nano-robotics integration

Build a Brand

Unique custom images based on your input. Just type ideas and the brand image is created.

Beam Eye Tracker Extension Copilot

Build extensions using the Eyeware Beam eye tracking SDK

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

League Champion Builder GPT

Build your own League of Legends Style Champion with Abilities, Back Story and Splash Art

RenovaTecno

Your tech buddy helping you refurbish or build a PC from scratch, tailored to your needs, budget, and language.