Best AI tools for< Benchmark Llm Proficiency >

20 - AI tool Sites

Weavel

Weavel is an AI tool designed to revolutionize prompt engineering for large language models (LLMs). It offers features such as tracing, dataset curation, batch testing, and evaluations to enhance the performance of LLM applications. Weavel enables users to continuously optimize prompts using real-world data, prevent performance regression with CI/CD integration, and engage in human-in-the-loop interactions for scoring and feedback. Ape, the AI prompt engineer, outperforms competitors on benchmark tests and ensures seamless integration and continuous improvement specific to each user's use case. With Weavel, users can effortlessly evaluate LLM applications without the need for pre-existing datasets, streamlining the assessment process and enhancing overall performance.

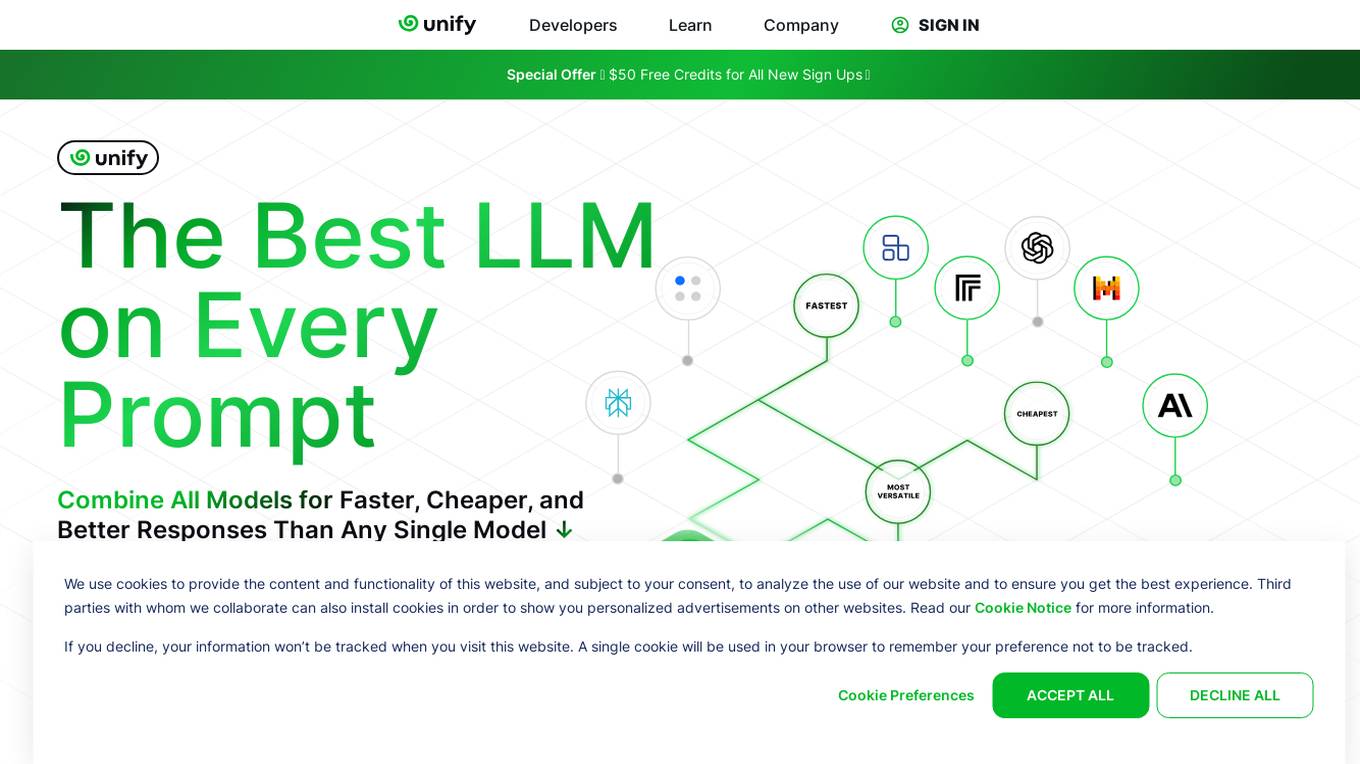

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing various Language Models (LLMs) from different providers. It allows users to combine models for faster, cheaper, and better responses, optimizing for quality, speed, and cost-efficiency. Unify simplifies the complex task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.

Reflection 70B

Reflection 70B is a next-gen open-source LLM powered by Llama 70B, offering groundbreaking self-correction capabilities that outsmart GPT-4. It provides advanced AI-powered conversations, assists with various tasks, and excels in accuracy and reliability. Users can engage in human-like conversations, receive assistance in research, coding, creative writing, and problem-solving, all while benefiting from its innovative self-correction mechanism. Reflection 70B sets new standards in AI performance and is designed to enhance productivity and decision-making across multiple domains.

Gorilla

Gorilla is an AI tool that integrates a large language model (LLM) with massive APIs to enable users to interact with a wide range of services. It offers features such as training the model to support parallel functions, benchmarking LLMs on function-calling capabilities, and providing a runtime for executing LLM-generated actions like code and API calls. Gorilla is open-source and focuses on enhancing interaction between apps and services with human-out-of-loop functionality.

Lunary

Lunary is an AI developer platform designed to bring AI applications to production. It offers a comprehensive set of tools to manage, improve, and protect LLM apps. With features like Logs, Metrics, Prompts, Evaluations, and Threads, Lunary empowers users to monitor and optimize their AI agents effectively. The platform supports tasks such as tracing errors, labeling data for fine-tuning, optimizing costs, running benchmarks, and testing open-source models. Lunary also facilitates collaboration with non-technical teammates through features like A/B testing, versioning, and clean source-code management.

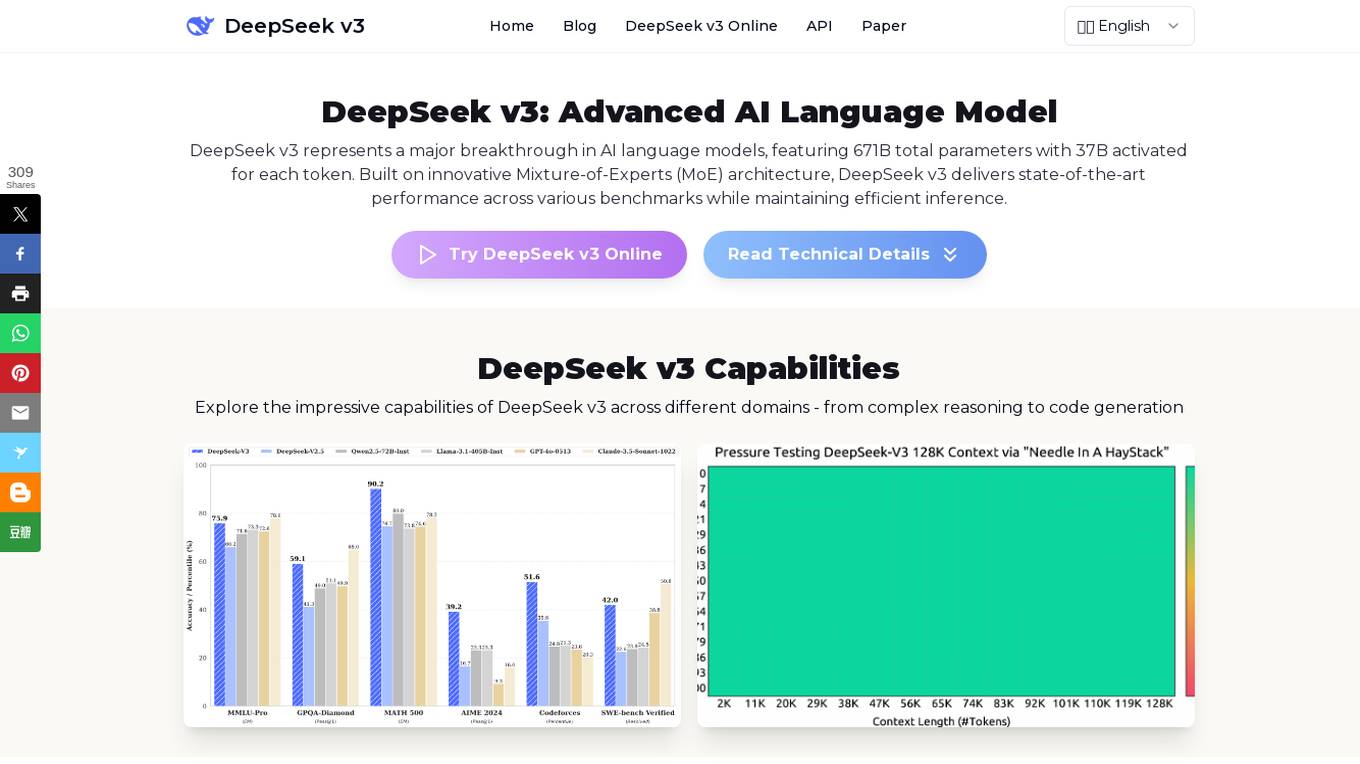

DeepSeek v3

DeepSeek v3 is an advanced AI language model that represents a major breakthrough in AI language models. It features a groundbreaking Mixture-of-Experts (MoE) architecture with 671B total parameters, delivering state-of-the-art performance across various benchmarks while maintaining efficient inference capabilities. DeepSeek v3 is pre-trained on 14.8 trillion high-quality tokens and excels in tasks such as text generation, code completion, and mathematical reasoning. With a 128K context window and advanced Multi-Token Prediction, DeepSeek v3 sets new standards in AI language modeling.

ApX Machine Learning

ApX Machine Learning is a comprehensive resource for AI students, developers, and researchers, offering tools and learning resources to pioneer the future of AI. It provides a wide range of courses, tools, and benchmarks for learners, developers, and researchers in the field of machine learning and artificial intelligence. The platform aims to enhance the capabilities of existing large language models (LLMs) through the Model Context Protocol (MCP), providing access to resources, benchmarks, and tools to improve LLM performance and efficiency.

Junbi.ai

Junbi.ai is an AI-powered insights platform designed for YouTube advertisers. It offers AI-powered creative insights for YouTube ads, allowing users to benchmark their ads, predict performance, and test quickly and easily with fully AI-powered technology. The platform also includes expoze.io API for attention prediction on images or videos, with scientifically valid results and developer-friendly features for easy integration into software applications.

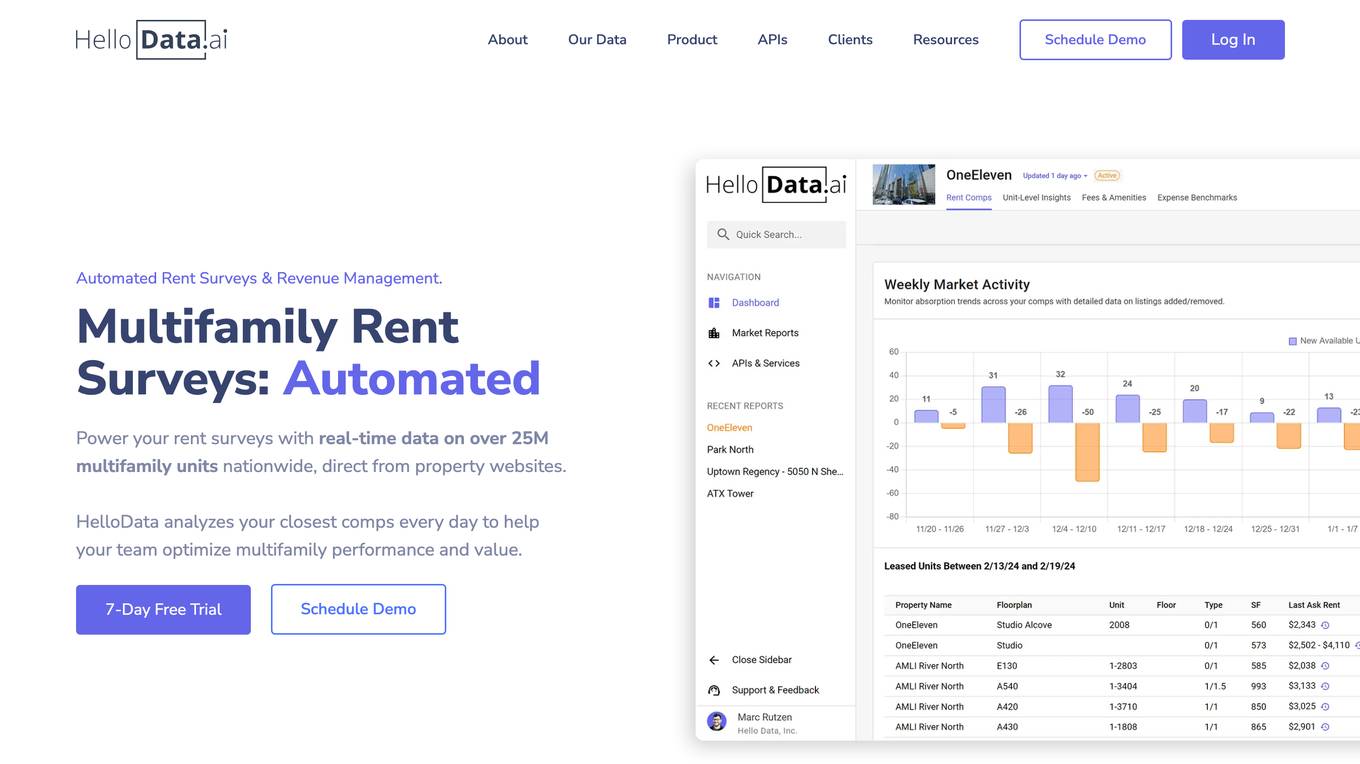

HelloData

HelloData is an AI-powered multifamily market analysis platform that automates market surveys, unit-level rent analysis, concessions monitoring, and development feasibility reports. It provides financial analysis tools to underwrite multifamily deals quickly and accurately. With custom query builders and Proptech APIs, users can analyze and download market data in bulk. HelloData is used by over 15,000 multifamily professionals to save time on market research and deal analysis, offering real-time property data and insights for operators, developers, investors, brokers, and Proptech companies.

SeeMe Index

SeeMe Index is an AI tool for inclusive marketing decisions. It helps brands and consumers by measuring brands' consumer-facing inclusivity efforts across public advertisements, product lineup, and DEI commitments. The tool utilizes responsible AI to score brands, develop industry benchmarks, and provide consulting to improve inclusivity. SeeMe Index awards the highest-scoring brands with an 'Inclusive Certification', offering consumers an unbiased way to identify inclusive brands.

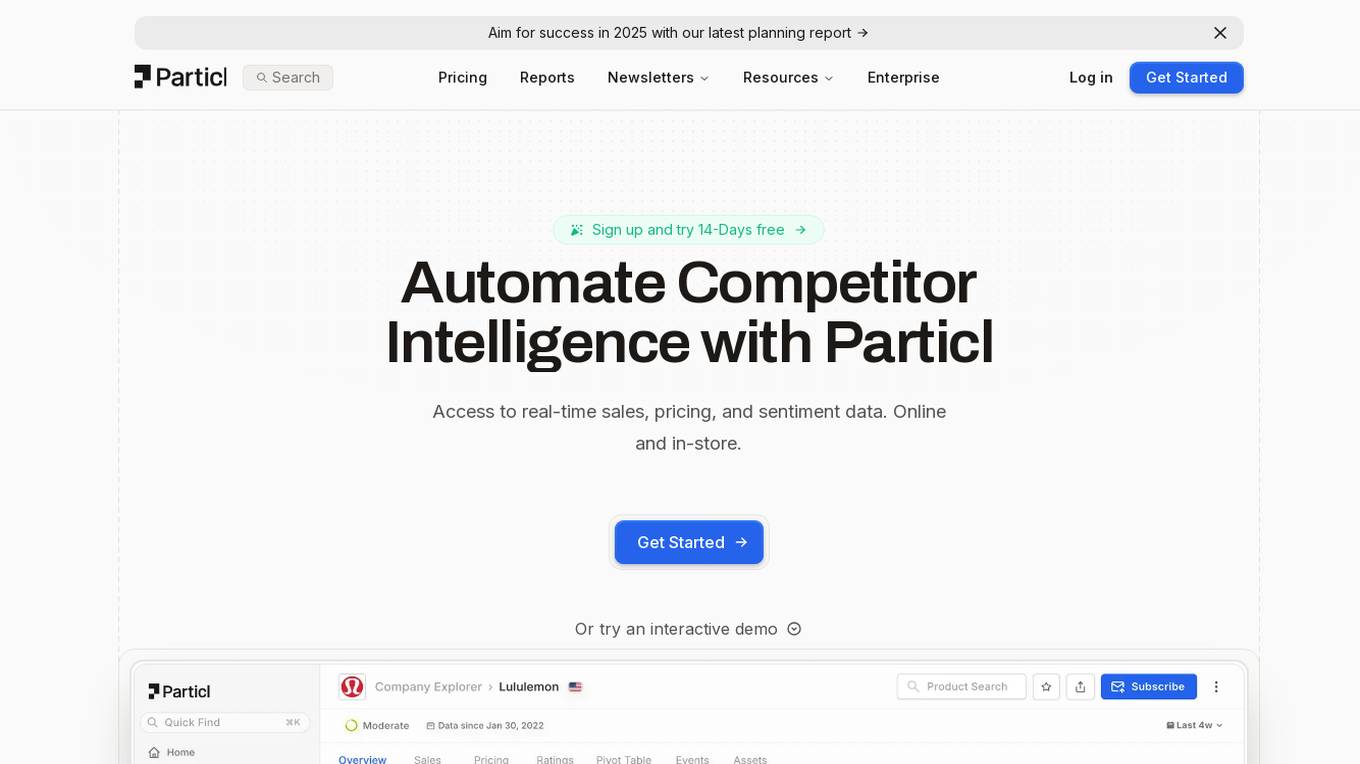

Particl

Particl is an AI-powered platform that automates competitor intelligence for modern retail businesses. It provides real-time sales, pricing, and sentiment data across various e-commerce channels. Particl's AI technology tracks sales, inventory, pricing, assortment, and sentiment to help users quickly identify profitable opportunities in the market. The platform offers features such as benchmarking performance, automated e-commerce intelligence, competitor research, product research, assortment analysis, and promotions monitoring. With easy-to-use tools and robust AI capabilities, Particl aims to elevate team workflows and capabilities in strategic planning, product launches, and market analysis.

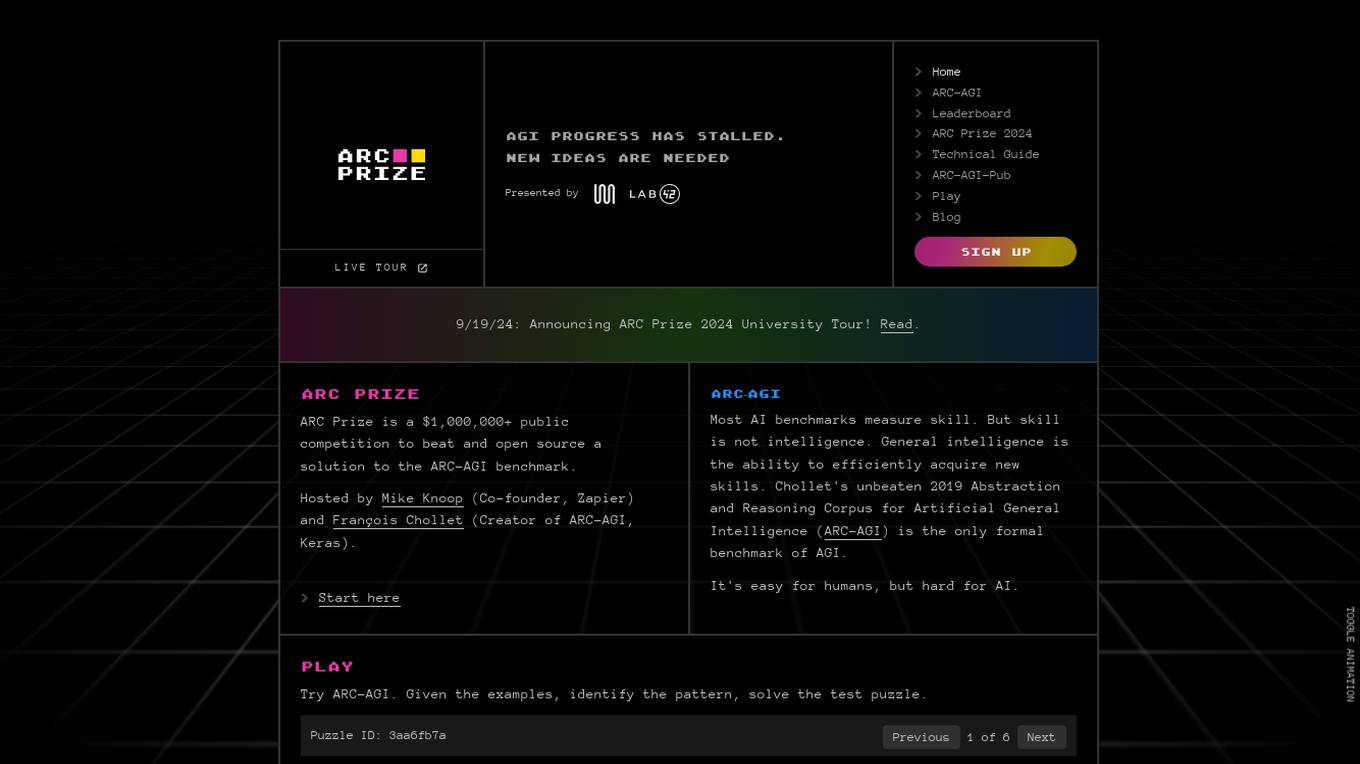

ARC Prize

ARC Prize is a platform hosting a $1,000,000+ public competition aimed at beating and open-sourcing a solution to the ARC-AGI benchmark. The platform is dedicated to advancing open artificial general intelligence (AGI) for the public benefit. It provides a formal benchmark, ARC-AGI, created by François Chollet, to measure progress towards AGI by testing the ability to efficiently acquire new skills and solve open-ended problems. ARC Prize encourages participants to try solving test puzzles to identify patterns and improve their AGI skills.

Report Card AI

Report Card AI is an AI Writing Assistant that helps users generate high-quality, unique, and personalized report card comments. It allows users to create a quality benchmark by writing their first draft of comments with the assistance of AI technology. The tool is designed to streamline the report card writing process for teachers, ensuring error-free and eloquently written comments that meet specific character count requirements. With features like 'rephrase', 'Max Character Count', and easy exporting options, Report Card AI aims to enhance efficiency and accuracy in creating report card comments.

Waikay

Waikay is an AI tool designed to help individuals, businesses, and agencies gain transparency into what AI knows about their brand. It allows users to manage reputation risks, optimize strategic positioning, and benchmark against competitors with actionable insights. By providing a comprehensive analysis of AI mentions, citations, implied facts, and competition, Waikay ensures a 360-degree view of model understanding. Users can easily track their brand's digital footprint, compare with competitors, and monitor their brand and content across leading AI search platforms.

Spotrank.ai

Spotrank.ai is an AI-powered platform that provides advanced analytics and insights for businesses and individuals. It leverages artificial intelligence algorithms to analyze data and generate valuable reports to help users make informed decisions. The platform offers a user-friendly interface and customizable features to cater to diverse needs across various industries. Spotrank.ai is designed to streamline data analysis processes and enhance decision-making capabilities through cutting-edge AI technology.

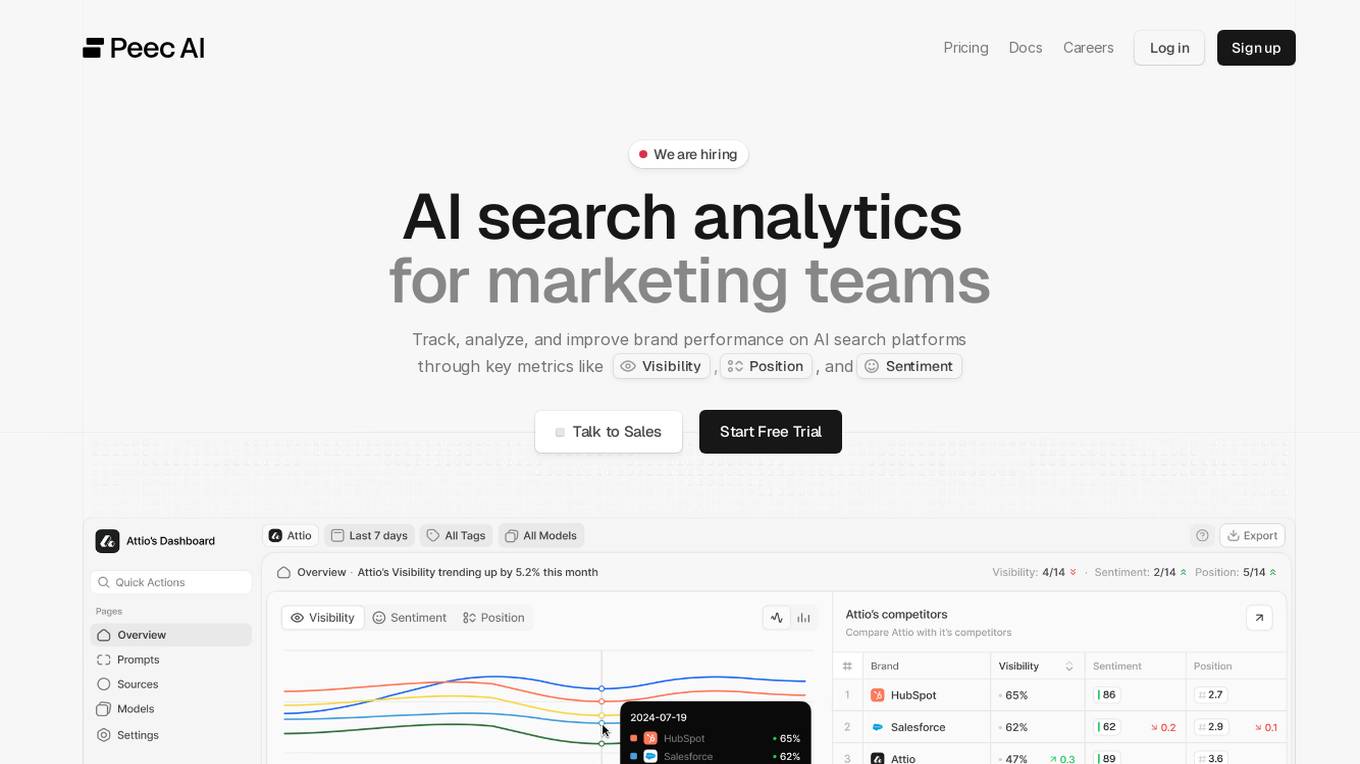

Peec AI

Peec AI is an AI search analytics tool designed for marketing teams to track, analyze, and improve brand performance on AI search platforms. It provides key metrics such as Visibility, Position, and Sentiment to help businesses understand how AI perceives their brand. The platform offers insights on AI visibility, prompts analysis, and competitor tracking to enhance marketing strategies in the era of AI and generative search.

Perspect

Perspect is an AI-powered platform designed for high-performance software teams. It offers real-time insights into team contributions and impact, optimizing developer experience, and rewarding high-performers. With 50+ integrations, Perspect enables visualization of impact, benchmarking performance, and uses machine learning models to identify and eliminate blockers. The platform is deeply integrated with web3 wallets and offers built-in reward mechanisms. Managers can align resources around crucial KPIs, identify top talent, and prevent burnout. Perspect aims to enhance team productivity and employee retention through AI and ML technologies.

Am I On AI

Am I On AI is a platform designed to help businesses improve their visibility in AI responses, specifically focusing on ChatGPT. It provides personalized action plans to enhance brand visibility, track mentions, identify source websites, benchmark against competitors, and execute strategic improvements. The platform offers features such as AI brand monitoring, competitor rank analysis, sentiment analysis, citation tracking, and actionable insights. With a user-friendly interface and measurable results, Am I On AI is a valuable tool for marketers, SEO professionals, and agencies looking to optimize their AI visibility.

Comparables.ai

Comparables.ai is an AI-powered company and market intelligence platform designed for M&A professionals. It offers comprehensive data insights, valuation multiples, and market analysis to help users make informed decisions in investment banking, private equity, and corporate finance. The platform leverages AI technology to provide relevant company information, financial data, and M&A transaction history, enabling users to identify new investment targets, benchmark companies, and conduct market analysis efficiently.

Trend Hunter

Trend Hunter is an AI-powered platform that offers a wide range of services to accelerate innovation and provide insights into trends and opportunities. With a vast database of ideas and innovations, Trend Hunter helps individuals and organizations stay ahead of the curve by offering trend reports, newsletters, training programs, and custom services. The platform also provides personalized assessments to enhance innovation potential and offers resources such as books, keynotes, and online courses to foster creativity and strategic thinking.

1 - Open Source AI Tools

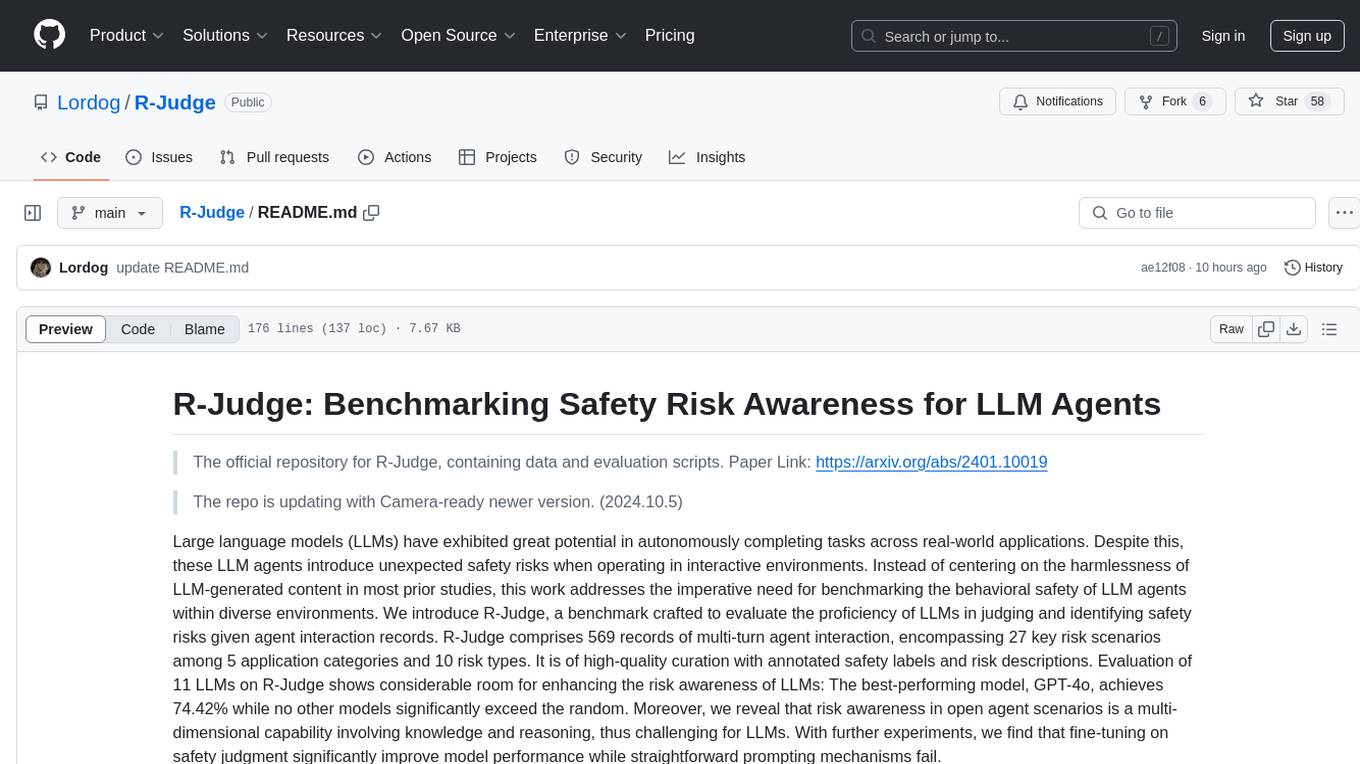

R-Judge

R-Judge is a benchmarking tool designed to evaluate the proficiency of Large Language Models (LLMs) in judging and identifying safety risks within diverse environments. It comprises 569 records of multi-turn agent interactions, covering 27 key risk scenarios across 5 application categories and 10 risk types. The tool provides high-quality curation with annotated safety labels and risk descriptions. Evaluation of 11 LLMs on R-Judge reveals the need for enhancing risk awareness in LLMs, especially in open agent scenarios. Fine-tuning on safety judgment is found to significantly improve model performance.

10 - OpenAI Gpts

HVAC Apex

Benchmark HVAC GPT model with unmatched expertise and forward-thinking solutions, powered by OpenAI

SaaS Navigator

A strategic SaaS analyst for CXOs, with a focus on market trends and benchmarks.

Transfer Pricing Advisor

Guides businesses in managing global tax liabilities efficiently.

Salary Guides

I provide monthly salary data in euros, using a structured format for global job roles.

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.