Best AI tools for Benchmark Chemistry Tasks

20 - AI Tool Sites

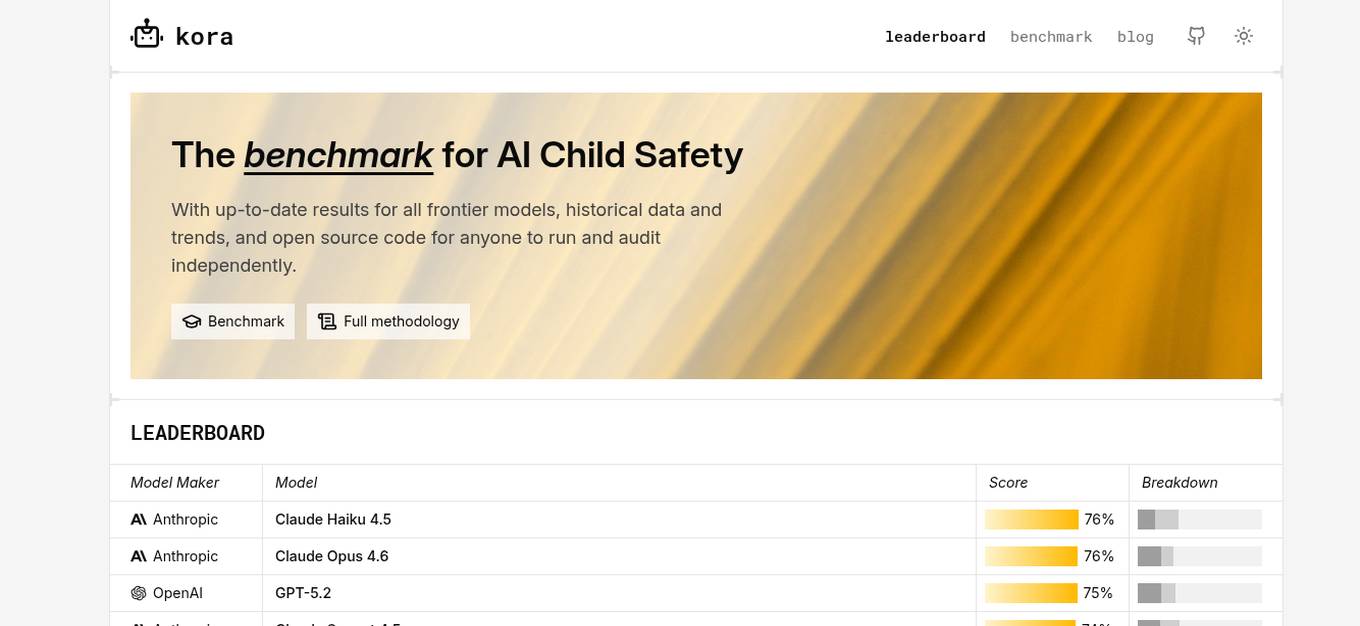

KORA Benchmark

KORA Benchmark is a leading platform that provides a benchmark for AI child safety. It offers up-to-date results for frontier models, historical data, and trends. The platform also provides open-source code for users to run and audit independently. KORA Benchmark aims to ensure the safety of children in the AI landscape by evaluating various models and providing valuable insights to the community.

Junbi.ai

Junbi.ai is an AI-powered insights platform designed for YouTube advertisers. It offers creative insights for YouTube ads, allowing users to benchmark their ads, predict performance, and test quickly and easily. The platform also includes the expoze.io API for attention prediction on images or videos, with scientifically valid results and developer-friendly features for easy integration into software applications.

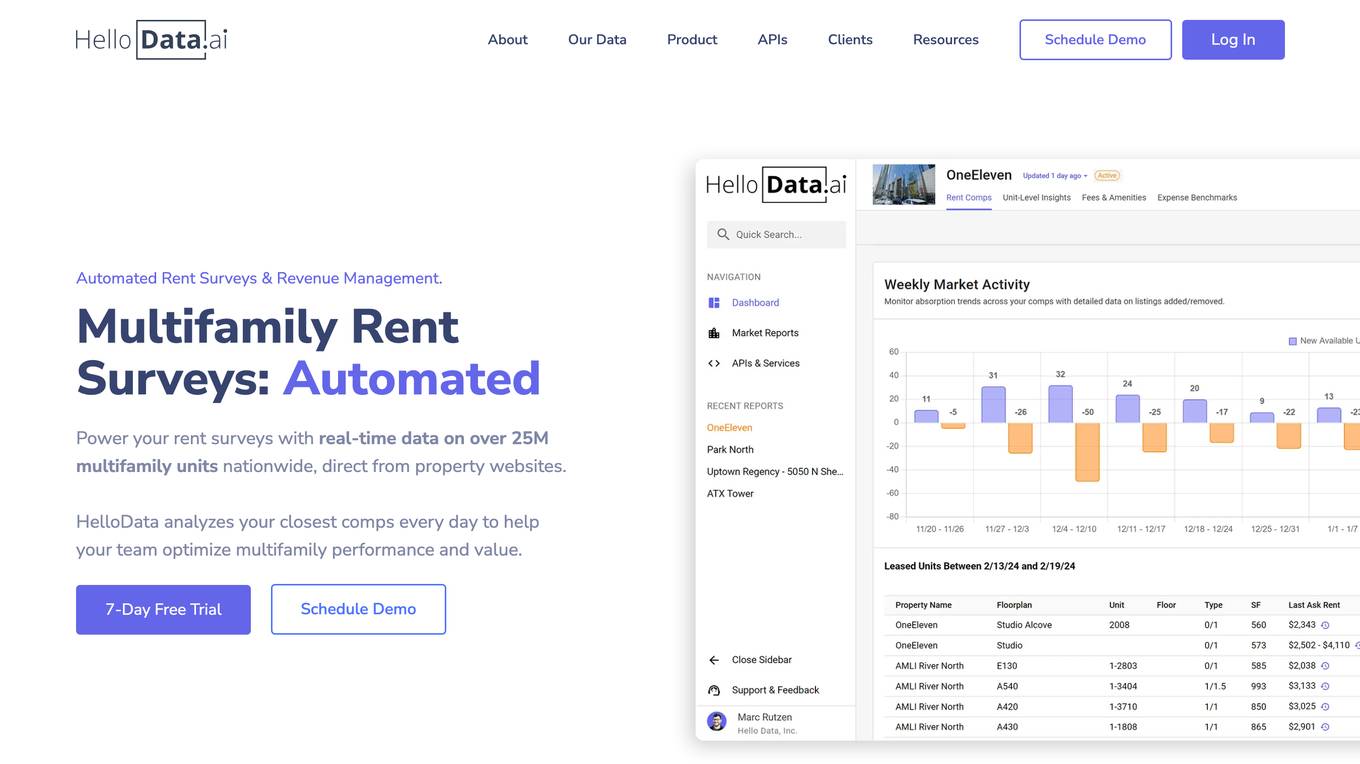

HelloData

HelloData is an AI-powered multifamily market analysis platform that automates market surveys, unit-level rent analysis, concessions monitoring, and development feasibility reports. It provides financial analysis tools to underwrite multifamily deals quickly and accurately. With custom query builders and Proptech APIs, users can analyze and download market data in bulk. HelloData is used by over 15,000 multifamily professionals to save time on market research and deal analysis, offering real-time property data and insights for operators, developers, investors, brokers, and Proptech companies.

SeeMe Index

SeeMe Index is an AI tool for inclusive marketing decisions. It helps brands and consumers by measuring brands' consumer-facing inclusivity efforts across public advertisements, product lineup, and DEI commitments. The tool utilizes responsible AI to score brands, develop industry benchmarks, and provide consulting to improve inclusivity. SeeMe Index awards the highest-scoring brands with an 'Inclusive Certification', offering consumers an unbiased way to identify inclusive brands.

Particl

Particl is an AI-powered platform that automates competitor intelligence for modern retail businesses. It provides real-time sales, pricing, and sentiment data across various e-commerce channels. Particl's AI technology tracks sales, inventory, pricing, assortment, and sentiment to help users quickly identify profitable opportunities in the market. The platform offers features such as benchmarking performance, automated e-commerce intelligence, competitor research, product research, assortment analysis, and promotions monitoring. With easy-to-use tools and robust AI capabilities, Particl aims to elevate team workflows and capabilities in strategic planning, product launches, and market analysis.

ARC Prize

ARC Prize is a platform hosting a $1,000,000+ public competition to beat the ARC-AGI benchmark and open-source the winning solution. The platform is dedicated to advancing open artificial general intelligence (AGI) for the public benefit. It provides a formal benchmark, ARC-AGI, created by François Chollet, to measure progress towards AGI by testing the ability to efficiently acquire new skills and solve open-ended problems. ARC Prize encourages participants to try solving the test puzzles themselves to see the kind of pattern recognition the benchmark requires.

Report Card AI

Report Card AI is an AI Writing Assistant that helps users generate high-quality, unique, and personalized report card comments. It allows users to create a quality benchmark by writing their first draft of comments with the assistance of AI technology. The tool is designed to streamline the report card writing process for teachers, ensuring error-free and eloquently written comments that meet specific character count requirements. With features like 'rephrase', 'Max Character Count', and easy exporting options, Report Card AI aims to enhance efficiency and accuracy in creating report card comments.

Waikay

Waikay is an AI tool designed to help individuals, businesses, and agencies gain transparency into what AI knows about their brand. It allows users to manage reputation risks, optimize strategic positioning, and benchmark against competitors with actionable insights. By providing a comprehensive analysis of AI mentions, citations, implied facts, and competition, Waikay ensures a 360-degree view of model understanding. Users can easily track their brand's digital footprint, compare with competitors, and monitor their brand and content across leading AI search platforms.

Spotrank.ai

Spotrank.ai is an AI-powered platform that provides advanced analytics and insights for businesses and individuals. It leverages artificial intelligence algorithms to analyze data and generate valuable reports to help users make informed decisions. The platform offers a user-friendly interface and customizable features to cater to diverse needs across various industries. Spotrank.ai is designed to streamline data analysis processes and enhance decision-making capabilities through cutting-edge AI technology.

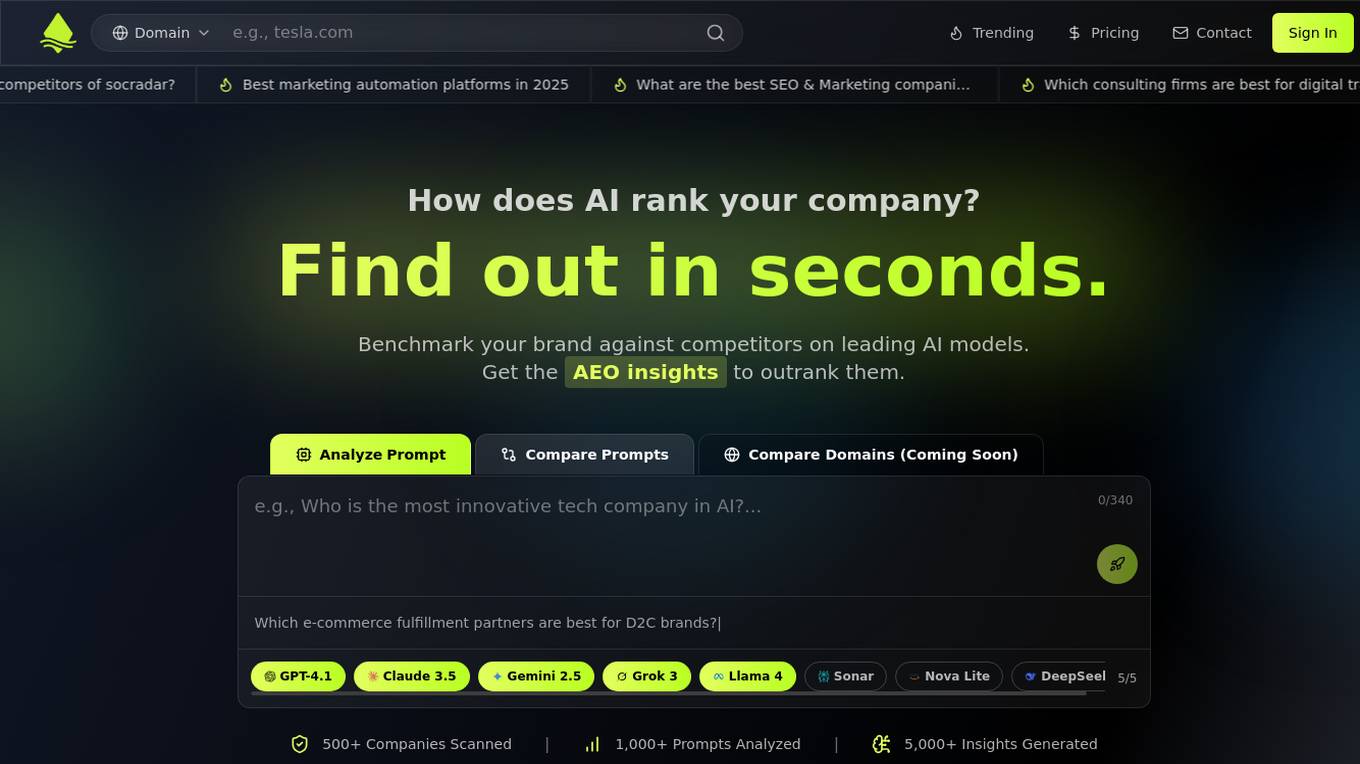

Peec AI

Peec AI is an AI search analytics tool designed for marketing teams to track, analyze, and improve brand performance on AI search platforms. It provides key metrics such as Visibility, Position, and Sentiment to help businesses understand how AI perceives their brand. The platform offers insights on AI visibility, prompts analysis, and competitor tracking to enhance marketing strategies in the era of AI and generative search.

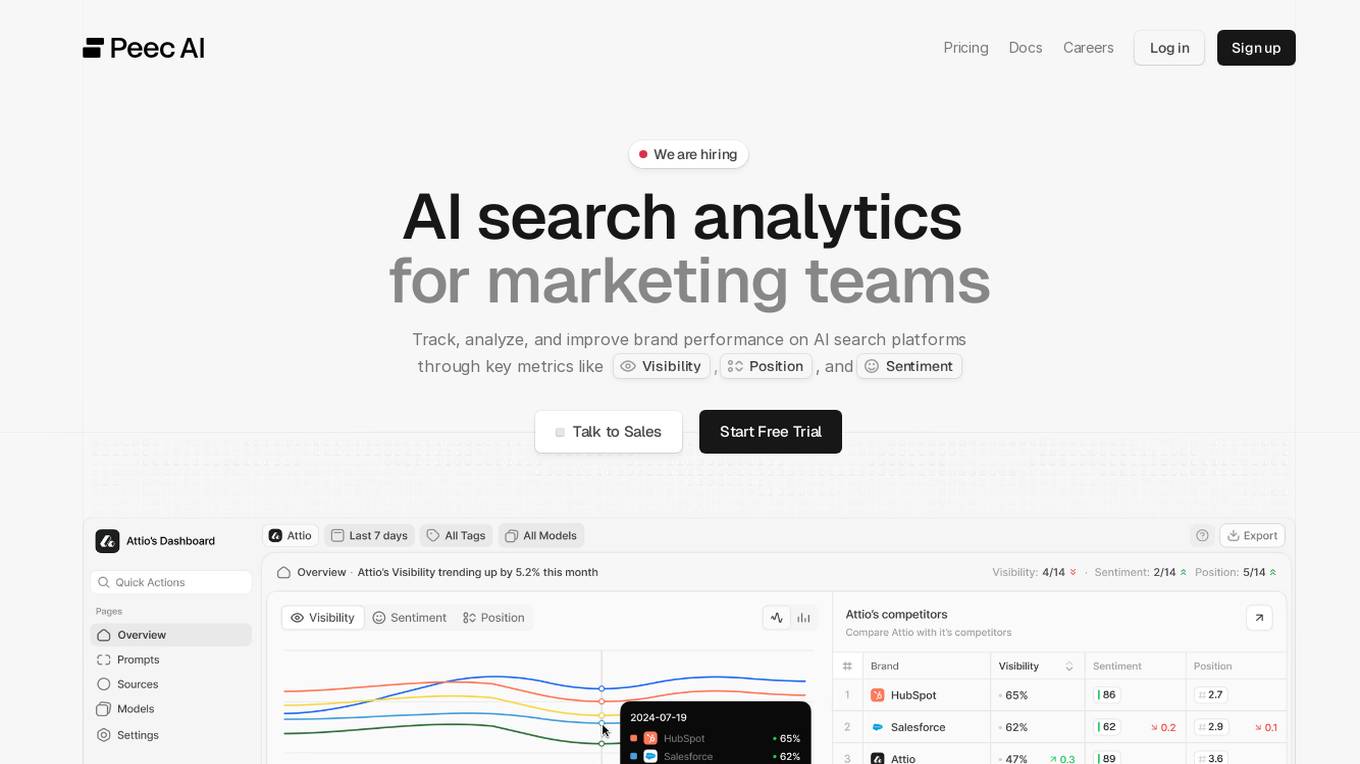

Perspect

Perspect is an AI-powered platform designed for high-performance software teams. It offers real-time insights into team contributions and impact, helping to optimize developer experience and reward high performers. With 50+ integrations, Perspect enables impact visualization and performance benchmarking, and uses machine learning models to identify and eliminate blockers. The platform is deeply integrated with web3 wallets and offers built-in reward mechanisms. Managers can align resources around crucial KPIs, identify top talent, and prevent burnout. Perspect aims to enhance team productivity and employee retention through AI and ML technologies.

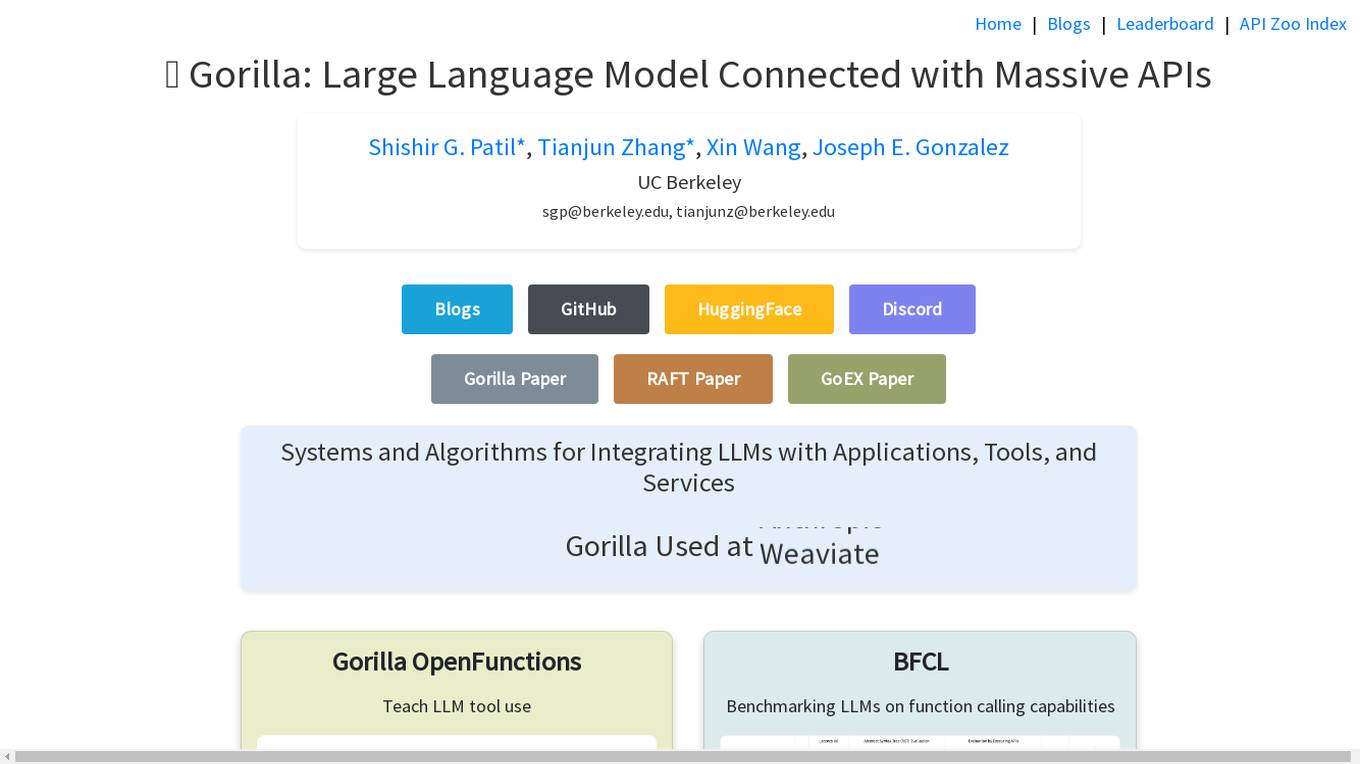

Gorilla

Gorilla is an AI tool that connects a large language model (LLM) to a large collection of APIs, enabling users to interact with a wide range of services. It offers features such as training the model to support parallel function calls, benchmarking LLMs on function-calling capabilities, and providing a runtime for executing LLM-generated actions like code and API calls. Gorilla is open-source and focuses on enhancing interaction between apps and services without a human in the loop.

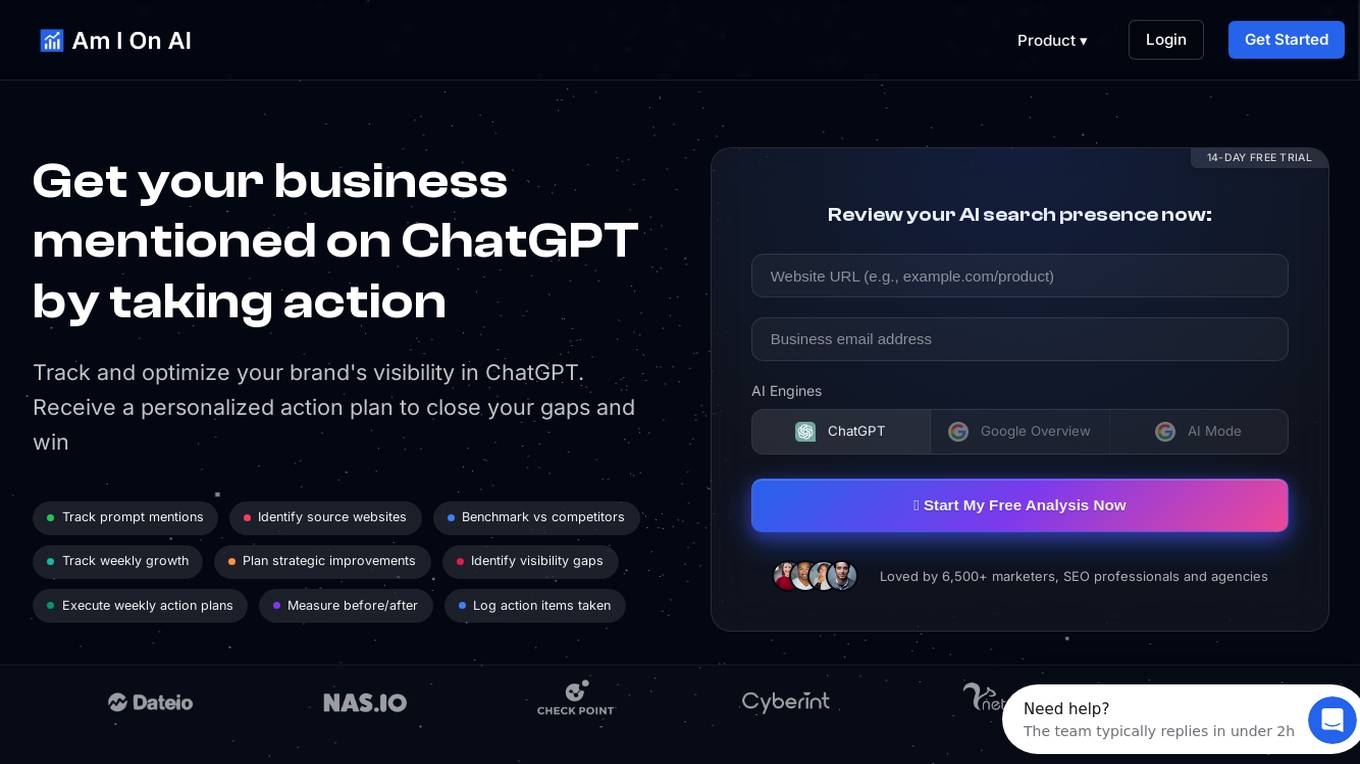

Am I On AI

Am I On AI is a platform designed to help businesses improve their visibility in AI responses, specifically focusing on ChatGPT. It provides personalized action plans to enhance brand visibility, track mentions, identify source websites, benchmark against competitors, and execute strategic improvements. The platform offers features such as AI brand monitoring, competitor rank analysis, sentiment analysis, citation tracking, and actionable insights. With a user-friendly interface and measurable results, Am I On AI is a valuable tool for marketers, SEO professionals, and agencies looking to optimize their AI visibility.

Comparables.ai

Comparables.ai is an AI-powered company and market intelligence platform designed for M&A professionals. It offers comprehensive data insights, valuation multiples, and market analysis to help users make informed decisions in investment banking, private equity, and corporate finance. The platform leverages AI technology to provide relevant company information, financial data, and M&A transaction history, enabling users to identify new investment targets, benchmark companies, and conduct market analysis efficiently.

Lucid Engine

Lucid Engine is an AI tool designed to optimize digital presence for better visibility in AI-generated answers. It helps users track their citations across AI search engines, benchmark competitors, and prioritize actions to improve recommendations. The tool offers features such as Visibility Audit, Competitor Radar, and Action Backlog to enhance AI visibility and competitiveness. Lucid Engine provides real-time monitoring of strategic prompts across multiple AI engines, enabling users to stay ahead of competitors and model shifts.

ASK BOSCO®

ASK BOSCO® is an AI reporting and forecasting tool designed for agencies and retailers. It helps in collecting and analyzing data to improve browsing experience, customize marketing strategies, and provide analytics for better decision-making. The platform offers features like AI reporting, competitor benchmarking, AI budget planning, and data integrations to streamline marketing efforts and maximize ROI. Trusted by leading brands, ASK BOSCO® provides accurate forecasting and insights to optimize media spend and plan budgets effectively.

Trend Hunter

Trend Hunter is an AI-powered platform that offers a wide range of services to accelerate innovation and provide insights into trends and opportunities. With a vast database of ideas and innovations, Trend Hunter helps individuals and organizations stay ahead of the curve by offering trend reports, newsletters, training programs, and custom services. The platform also provides personalized assessments to enhance innovation potential and offers resources such as books, keynotes, and online courses to foster creativity and strategic thinking.

JaanchAI

JaanchAI is an AI-powered tool that provides valuable insights for e-commerce businesses. It utilizes artificial intelligence algorithms to analyze data and trends in the e-commerce industry, helping businesses make informed decisions to optimize their operations and increase sales. With JaanchAI, users can gain a competitive edge by leveraging advanced analytics and predictive modeling techniques tailored for the e-commerce sector.

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge Dataset is a project initiated by Facebook AI to accelerate the development of new ways to detect deepfake videos. The dataset consists of over 100,000 videos and was created in collaboration with industry leaders and academic experts. It includes two versions: a preview dataset with 5k videos and a full dataset with 124k videos, each featuring facial modification algorithms. The dataset was used in a Kaggle competition to create better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but faced challenges when tested against the black box dataset, highlighting the importance of generalization in deepfake detection. The project aims to encourage the research community to keep improving the detection of harmful manipulated media.

Censinet

Censinet is a purpose-built AI-powered solution designed to reduce risk in the healthcare industry. It offers a comprehensive platform to address various risk factors, including third-party vendors, patient data, medical devices, and supply chain cybersecurity. The flagship offering, Censinet RiskOps, facilitates secure data sharing and collaboration among healthcare delivery organizations and vendors. With deep expertise in healthcare and cybersecurity, Censinet aims to transform cybersecurity and risk management in the healthcare sector.

1 - Open Source AI Tools

chem-bench

ChemBench is a project aimed at expanding chemistry benchmark tasks in a BIG-bench-compatible way, providing a pipeline to benchmark frontier and open models. It allows users to run benchmarking tasks on models with existing presets that supply predefined parameters and processing steps. The library facilitates benchmarking models on the entire suite and handles challenges such as prompt structure, answer parsing, and scoring methods. Users can contribute to the project by following the developer notes.
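As a rough illustration of the prompt structure, parsing, and scoring steps mentioned above, here is a minimal Python sketch for a multiple-choice chemistry question. It does not use ChemBench's own API: the function names (`build_prompt`, `parse_answer`, `score`) and the `[ANSWER]...[/ANSWER]` tag convention are assumptions made for this example only.

```python
import re

# Assumed convention for this sketch: the model wraps its final choice
# in [ANSWER]...[/ANSWER] tags. This is illustrative, not ChemBench's API.
ANSWER_PATTERN = re.compile(r"\[ANSWER\](.*?)\[/ANSWER\]", re.DOTALL)
LETTERS = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def build_prompt(question, options):
    """Format a multiple-choice question with lettered options."""
    lines = [f"Question: {question}", "Options:"]
    for letter, option in zip(LETTERS, options):
        lines.append(f"{letter}. {option}")
    lines.append("Reply with the letter of the correct option inside [ANSWER][/ANSWER].")
    return "\n".join(lines)


def parse_answer(completion):
    """Return the single option letter from a completion, or None if unparseable."""
    match = ANSWER_PATTERN.search(completion)
    if match is None:
        return None
    inner = match.group(1).strip().upper()
    # Accept only a single letter so free-text answers are not misread.
    if len(inner) == 1 and inner in LETTERS:
        return inner
    return None


def score(completions, targets):
    """Fraction of completions whose parsed letter equals the target letter."""
    if not targets:
        raise ValueError("targets must not be empty")
    correct = sum(parse_answer(c) == t for c, t in zip(completions, targets))
    return correct / len(targets)
```

Unparseable completions count as wrong rather than being skipped, which keeps the accuracy comparable across models that follow the output format with different reliability.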

10 - OpenAI GPTs

HVAC Apex

Benchmark HVAC GPT model with unmatched expertise and forward-thinking solutions, powered by OpenAI.

SaaS Navigator

A strategic SaaS analyst for CXOs, with a focus on market trends and benchmarks.

Transfer Pricing Advisor

Guides businesses in managing global tax liabilities efficiently.

Salary Guides

I provide monthly salary data in euros, using a structured format for global job roles.

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.