Best AI tools for < Analyze Video Data >

20 - AI Tool Sites

BlurOn

BlurOn is an AI tool for automatic mosaic insertion in video editing. It detects faces, heads, and license plates with high accuracy, supporting compliance with regulations such as GDPR. The tool anonymizes personal information in videos, supports automatic processing when footage arrives on the server, and provides cost-effective video editing services. BlurOn has received industry awards and is used for video data processing in sectors such as the automotive and insurance industries, as well as overseas.

Maekersuite

Maekersuite is an AI-powered platform designed to assist users in researching and scripting videos. It offers a wide range of tools and features to streamline the video creation process, from generating video ideas to optimizing scripts using data and AI. The platform aims to help users create engaging and data-driven video content for various purposes such as marketing, social media, education, and business.

Mixpeek Solutions

Mixpeek Solutions offers a Multimodal Data Warehouse for Developers, providing a Developer-First API for AI-native Content Understanding. The platform allows users to search, monitor, classify, and cluster unstructured data like video, audio, images, and documents. Mixpeek Solutions offers a range of features including Unified Search, Automated Classification, Unsupervised Clustering, Feature Extractors for Every Data Type, and various specialized extraction models for different data types. The platform caters to a wide range of industries and provides seamless model upgrades, cross-model compatibility, A/B testing infrastructure, and simplified model management.

Vidrovr

Vidrovr is a video analysis platform that uses machine learning to process unstructured video, image, or audio data. It provides business insights to help drive revenue, make strategic decisions, and automate monotonous processes within a business. Vidrovr's technology can be used to minimize equipment downtime, proactively plan for equipment replacement, leverage AI to empower mission objectives and decision making, monitor persons or topics of interest across various media sources, ensure critical infrastructure is monitored 24/7/365, and protect ecological assets.

VeedoAI

VeedoAI is an advanced AI tool that helps users create compelling video content, derive great insights, and make video content searchable, actionable, and intelligent. It offers features such as transforming videos into AI-generated slides, contextual search, AI chat for multi-turn conversations, frame explanation, transcription, smart scenes, and more. VeedoAI is trusted by a growing community of creators and businesses for various use cases like telemedicine, e-learning, law, sports, sales, and videography.

VideoSage

VideoSage is an AI-powered platform that allows users to ask questions and gain insights about videos. Powered by Moonshot Kimi AI, VideoSage provides summaries, insights, timestamps, and accurate information based on video content. Users can engage in conversations with the AI while watching videos, fostering a collaborative environment. The platform aims to improve the viewing experience by offering tools to customize it.

Qortex

Qortex is a video intelligence platform that offers advanced AI technology to optimize advertising, monetization, and analytics for video content. The platform analyzes video frames in real-time to provide deep insights for media investment decisions. With features like On-Stream ad experiences and in-video ad units, Qortex helps brands achieve higher audience attention, revenue per stream, and fill rates. The platform is designed to enhance brand metrics and improve advertising performance through contextual targeting.

Vatis Tech

Vatis Tech is an AI-powered speech-to-text infrastructure that offers transcription software to help teams and individuals streamline their workflow. The platform provides accurate, accessible, and affordable speech-to-text API, caption generator, and audio intelligence solutions. It caters to various industries such as contact centers, broadcasting, medical, legal, media, newsrooms, and more. Vatis Tech's technology is powered by state-of-the-art AI, enabling near-human accuracy in transcribing speech with fast turnaround times. The platform also offers features like real-time transcription, custom AI models, and support for multiple languages.

Izwe.ai

Izwe.ai is a multi-lingual technology platform that transcribes speech to text in local languages. It is trusted by companies of all sizes, from startups to enterprises. Izwe.ai offers a range of solutions for businesses, including customer experience, developer automation, and personal transcription. The platform's features include automatic agent assessments, support from an internal knowledge base, and recommendations for actions and additional professional services.

Outspeed

Outspeed is a platform for Realtime Voice and Video AI applications, providing networking and inference infrastructure to build fast, real-time voice and video AI apps. It offers tools for intelligence across industries, including Voice AI, Streaming Avatars, Visual Intelligence, Meeting Copilot, and the ability to build custom multimodal AI solutions. Outspeed is designed by engineers from Google and MIT, offering robust streaming infrastructure, low-latency inference, instant deployment, and enterprise-ready compliance with regulations such as SOC2, GDPR, and HIPAA.

Clickworker GmbH

Clickworker GmbH is an AI training data and data management services platform that leverages a global crowd of Clickworkers to generate, validate, and label data for AI systems. The platform offers a range of AI datasets for machine learning, including audio, image, and video datasets, as well as services like image annotation, content editing, and content creation. Clickworkers participate in projects on a freelance basis, performing micro-tasks to create high-quality training data tailored to the requirements of AI systems. The platform also provides solutions for industries such as AI and data science research, eCommerce, fashion, retail, and digital marketing.

Recognito

Recognito is a leading facial recognition technology provider, offering the NIST FRVT Top 1 Face Recognition Algorithm. Their high-performance biometric technology is used by police forces and security services to enhance public safety, manage individual movements, and improve audience analytics for businesses. Recognito's software goes beyond object detection to provide detailed user role descriptions and develop user flows. The application enables rapid face and body attribute recognition, video analytics, and artificial intelligence analysis. With a focus on security, living, and business improvements, Recognito helps create safer and more prosperous cities.

Vid2txt

Vid2txt is an offline transcription application that revolutionizes the transcription process by providing fast, accurate, and affordable transcription services for both video and audio files. It eliminates the need for costly subscriptions and data sharing, offering users the freedom of lightning-fast and secure transcription. Vid2txt supports a wide range of file formats and generates .txt, .srt, and .vtt files 100% offline. The application is designed to be simple, useful, and affordable, with a one-time investment unlocking a lifetime of effortless transcription power.

OpusClip

OpusClip is a generative AI video tool that repurposes long videos into shorts in one click. It uses AI to analyze your video content in relation to the latest social and marketing trends from major platforms, building a comprehensive understanding of the video to support data-driven decisions on content repurposing. It then picks the highlight moments of your long video, rearranges them into a viral-worthy short, and polishes it with dynamic captions, AI relayout, and smooth transitions so that the clip is coherent and attention-grabbing and ends with a strong call to action.

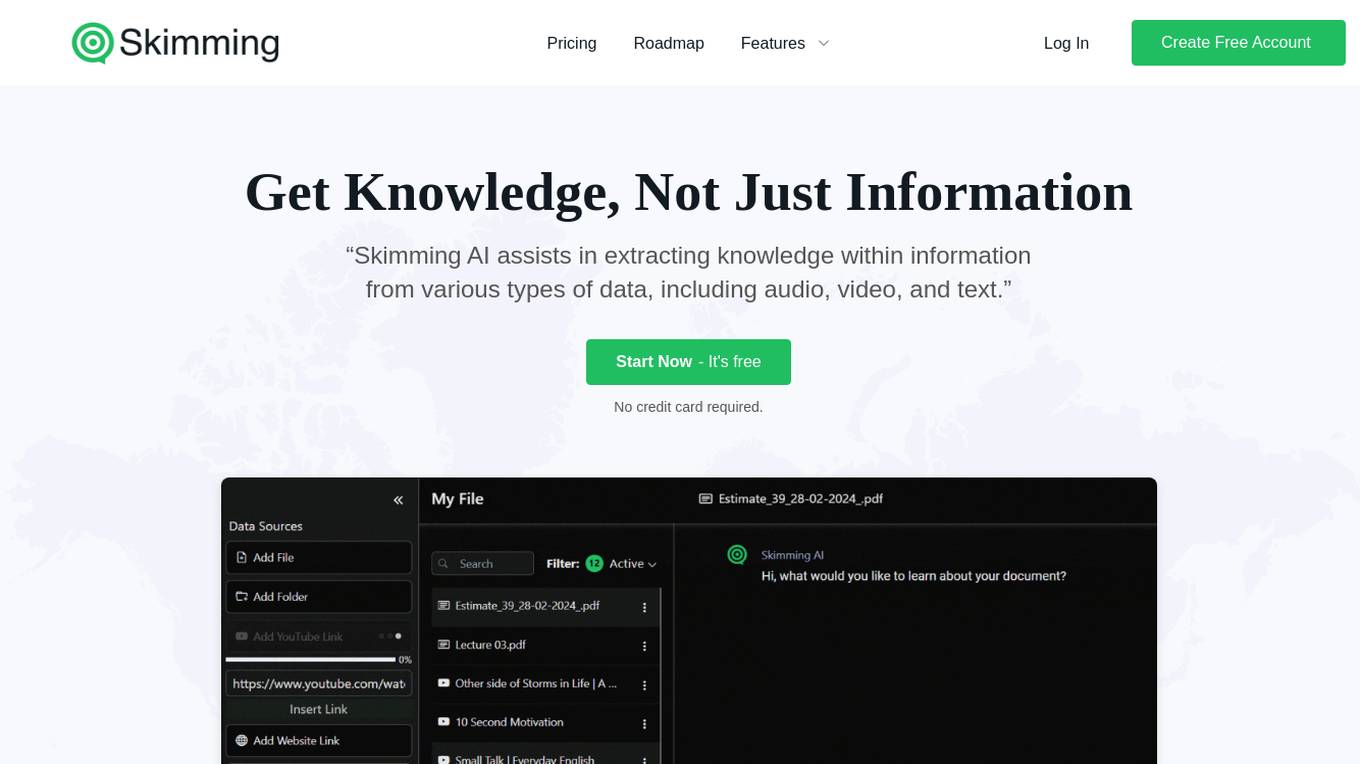

Skimming

Skimming is an AI tool that enables users to interact with various types of data, including audio, video, and text, to extract knowledge. It offers features like chatting with documents, YouTube videos, websites, audio, and video, as well as custom prompts and multilingual support. Skimming is trusted by over 100,000 users and is designed to save time and enhance information extraction. The tool caters to a diverse audience, including teachers, students, businesses, researchers, scholars, lawyers, HR professionals, YouTubers, and podcasters.

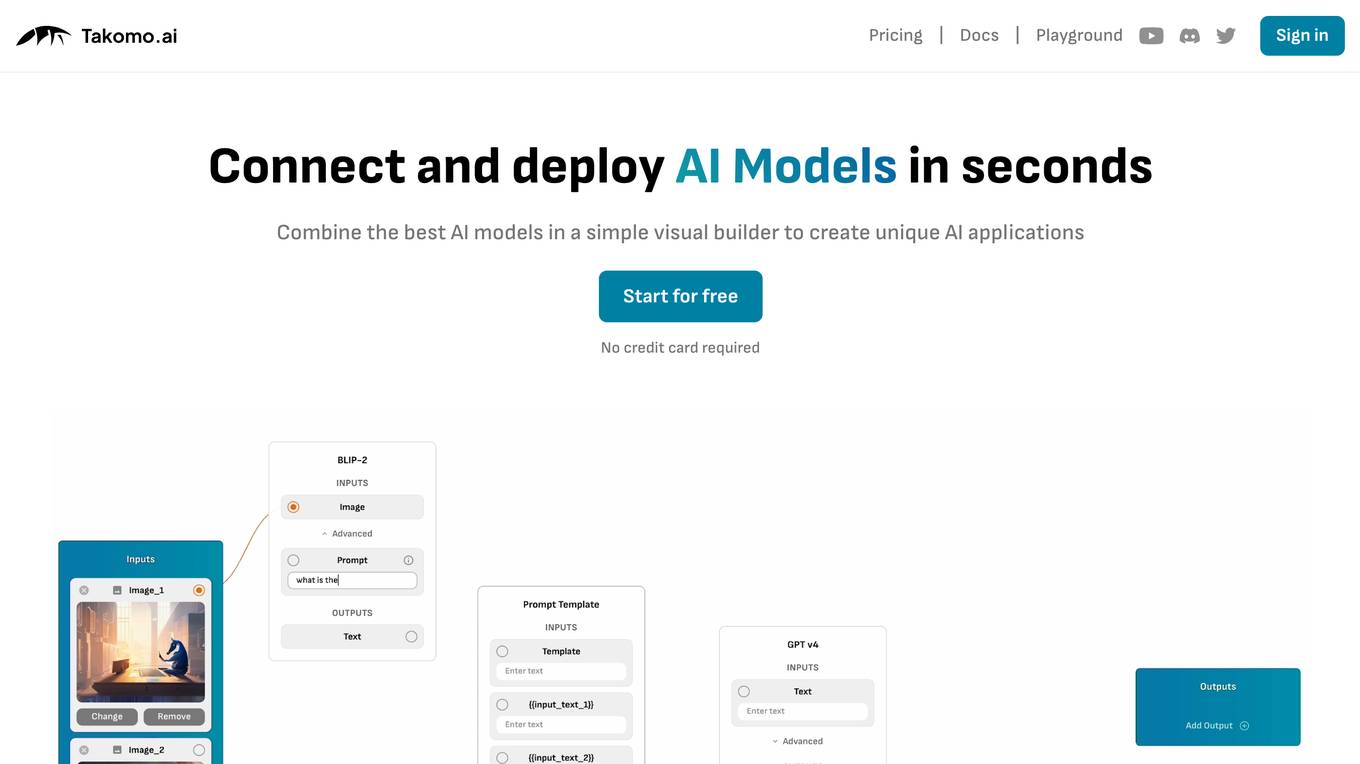

Takomo.ai

Takomo.ai is a no-code AI builder that allows users to connect and deploy AI models in seconds. With Takomo.ai, users can combine the best AI models in a simple visual builder to create unique AI applications. Takomo.ai offers a variety of features, including a drag-and-drop builder, pre-trained ML models, and a single API call for accessing multi-model pipelines.

MTS AI

MTS AI is a platform offering AI-based products and solutions, leveraging artificial intelligence technologies to create voice assistants, chatbots, video analysis solutions, and more. They develop AI solutions using natural language processing, computer vision, and edge computing technologies, collaborating with leading tech companies and global experts. MTS AI aims to find the most viable AI applications for the benefit of society, providing automation for customer service systems, security control, and voice and video data analysis.

Fyne AI

Fyne AI is an AI application that applies AI research in computer vision, generative AI, and machine learning to develop innovative products. The focus of the application is on automating analysis, generating insights from image and video datasets, enhancing creativity and productivity, and building prediction models. Users can subscribe to the Fyne AI newsletter to stay updated on product news and updates.

ArcadianAI

ArcadianAI is a modern security monitoring platform that offers easy and affordable solutions for businesses and individuals. The platform provides security cameras, crime maps, and a 30-day free trial for users to experience its features. ArcadianAI uses AI technology for intrusion detection, smart alerts analytics, AI heatmaps search, and AI detection of people. The platform aims to enhance security measures by intelligently contextualizing, analyzing, and safeguarding premises in real-time using existing CCTV footage.

FileGPT

FileGPT is a powerful GPT-AI application designed to enhance your workflow by providing quick and accurate responses to your queries across various file formats. It allows users to interact with different types of files, extract text from handwritten documents, and analyze audio and video content. With FileGPT, users can say goodbye to endless scrolling and searching, and hello to a smarter, more intuitive way of working with their documents.

0 - Open Source AI Tools

20 - OpenAI GPTs

Ai Marketing & Video Innovations

GPT expert in AI-driven marketing and video technologies

Social Media Assistant - videos & trends

Explore TikTok & social media trends, make effective videos, and optimize your content for virality. Previously called "Viral Video Generator by trendup".

Horea Mihai Badau

AI & Social Media Expert, Academically Acclaimed in Multimedia & Internet

Surf Coach AI: Surfing Video Analysis

Personalized surf tips from your surfing photos and videos

The Video Content Creator Coach

A content creator coach aiding in YouTube video content creation, analysis, script writing and storytelling. Designed by a successful YouTuber to help other YouTubers grow their channels.

Video Insights: Summaries/Transcription/Vision

Chat with any video or audio. High-quality search, summarization, insights, multi-language transcriptions, and more. We currently support YouTube and files uploaded on our website.