Best AI Tools to Analyze Values Data

20 - AI Tool Sites

AI CRE Tools

AI CRE Tools is a platform that helps users discover and compare the best AI tools for Commercial Real Estate (CRE). Users can explore a curated directory of AI tools across categories such as Property Search & Acquisition, Property Analysis & Valuation, Development & Construction, Legal, Compliance & Due Diligence, and more. The platform showcases innovative AI features developed by real estate experts to meet diverse business needs in the CRE industry.

ZestyAI

ZestyAI is an artificial intelligence tool that helps users make smarter climate and property risk decisions. It uses AI to provide insights on property values and on exposure to natural disasters. Its products, including Property Insights, Digital Roof, Roof Age, Location Insights, and Climate Risk Models, evaluate and explain property risks. ZestyAI is trusted by top insurers in North America and aims to deliver a tenfold return on investment to its customers.

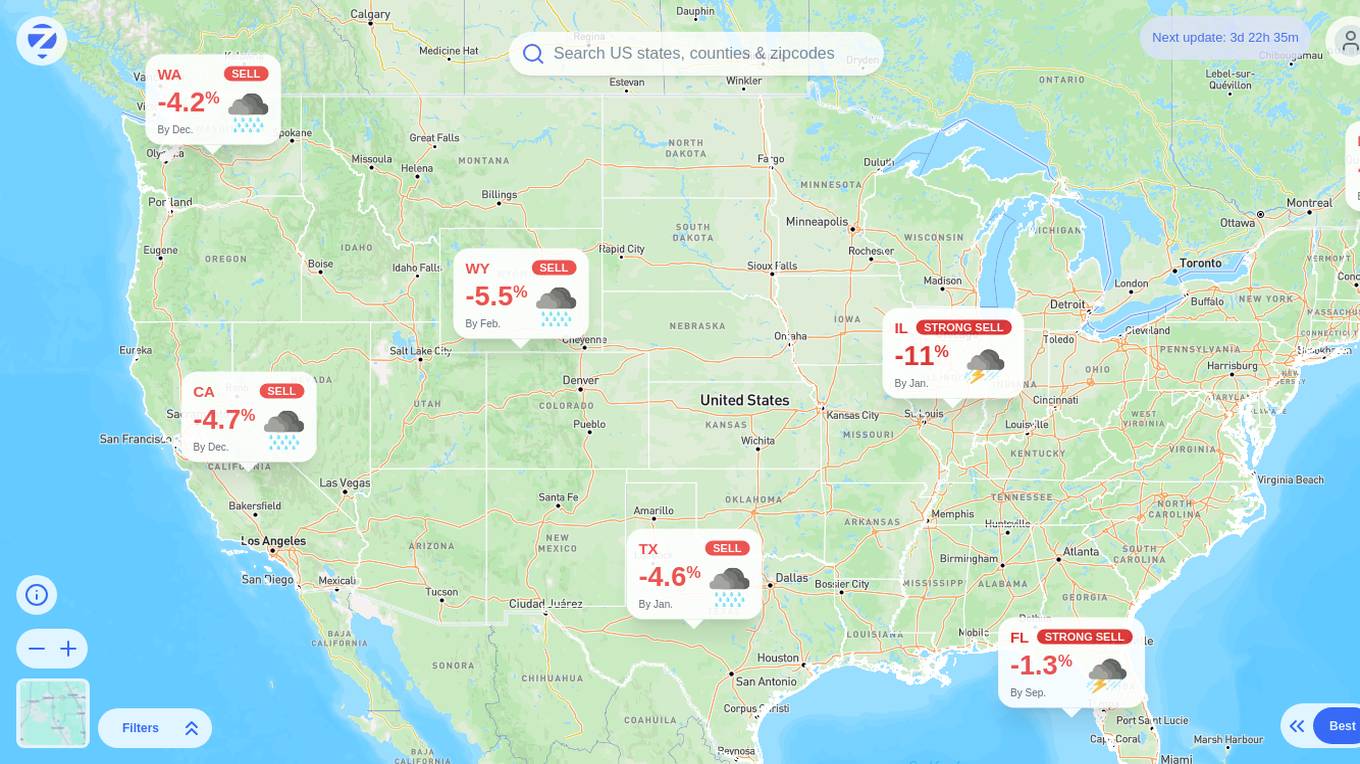

Zipsmart

Zipsmart is an AI-powered platform that helps users make smarter real estate decisions. It provides data-driven insights and recommendations to assist individuals in buying, selling, or renting properties. Its algorithms analyze market trends, property values, and other relevant factors so that users can carry out their real estate transactions efficiently. With Zipsmart, users can access personalized recommendations, market predictions, and comparative analyses to make informed decisions and maximize their real estate investments.

Leny.ai

Leny.ai is an AI-powered medical assistant designed to provide instant support to medical professionals and patients. It offers features such as differential diagnosis, treatment plan drafting, discharge instructions, referral letters, and lab value analysis. Leny.ai aims to streamline healthcare processes, save time, and provide reliable and accurate medical information. The platform is still in beta and is being continuously improved to give more accurate responses. It emphasizes data security and privacy, although it is not currently HIPAA compliant. Leny.ai is free of charge at present and plans to move to a subscription-based model in the future.

Mnemonic AI

Mnemonic AI is an end-to-end marketing intelligence platform that helps businesses unify their data, understand their customers, and automate their growth. It connects disparate data sources to build AI-powered customer insights and streamline marketing processes. The platform offers features such as connecting marketing and sales apps, generating AI insights, and automating growth through BI-grade reports. Mnemonic AI is trusted by forward-thinking marketing teams to transform marketing intelligence and drive confident decisions.

Human-Centred Artificial Intelligence Lab

The Human-Centred Artificial Intelligence Lab (Holzinger Group) is a research group focused on developing AI solutions that are explainable, trustworthy, and aligned with human values, ethical principles, and legal requirements. The lab works on projects related to machine learning, digital pathology, interactive machine learning, and more. Their mission is to combine human and computer intelligence to address pressing problems in various domains such as forestry, health informatics, and cyber-physical systems. The lab emphasizes the importance of explainable AI, human-in-the-loop interactions, and the synergy between human and machine intelligence.

Dezan AI

Dezan AI is a DIY data collection and analysis platform powered by AI, focusing on collecting real-time data from interest-based respondents worldwide. It offers an easy way to create surveys, with features like pre-defined templates, multiple question types, and AI-assisted survey creation and analysis. Dezan AI helps users set survey goals, reach the right audience, and analyze collected data efficiently.

Imbue

Imbue is a company focused on building AI systems that can reason and code, with the goal of rekindling the dream of the personal computer by creating practical AI agents that can accomplish larger goals and work safely in the real world. The company emphasizes innovation in AI technology and aims to push the boundaries of what AI can achieve in various fields.

Upsolve AI

Upsolve AI is an AI-powered, customer-facing analytics service that lets businesses embed dashboards and business intelligence in their products for their customers. It manages customer analytics, gives users insights from product data, and provides out-of-the-box connections to popular databases. With features for building interactive analytics dashboards, creating custom charts, and offering self-service customization, Upsolve AI aims to help businesses make data-driven decisions and communicate value to stakeholders. The platform also offers easy deployment, theme customization, and AI-powered chart exploration for an enhanced user experience.

Coherent Solutions

Coherent Solutions is a custom software development and engineering company that offers a range of services, including digital product engineering, artificial intelligence, data and analytics, mobile development, DevOps and cloud, quality assurance, Salesforce, and product design and UX. It works collaboratively and transparently with clients to deliver successful projects. The company's values set the standard for all of its decisions, and it focuses on understanding and meeting its clients' business needs. Coherent Solutions has a track record of success across various industries and is known for empowering business success through global expertise and industry-specific product engineering consulting services.

Morphlin

Morphlin is an AI-powered trading platform that gives traders smart tools and insights for making informed decisions. It offers an AI-powered MorphlinGPT API key pair for smart trading on Binance. Users can access real-time information, investment analysis, and professional research reports from third-party experts. Morphlin integrates data from mainstream markets and exchanges and presents it clearly through a dynamic dashboard. The platform also provides an environment where researchers can share their opinions, along with a referral program that shares 10% of Morphlin fees with community KOLs (key opinion leaders). With Morphlin, traders can trade wisely and efficiently, backed by AI technology and comprehensive market data.

ExpiredDomains.com

ExpiredDomains.com is a free online platform that helps users find valuable expired and expiring domain names. It aggregates domain data from hundreds of top-level domains (TLDs) and displays them in a searchable, filterable format. The platform supports smarter decisions by offering comprehensive listings, exclusive data metrics, a user-friendly interface, and a professional, trustworthy service. Users can browse listings, view metrics, and apply filters without any cost or hidden charges.

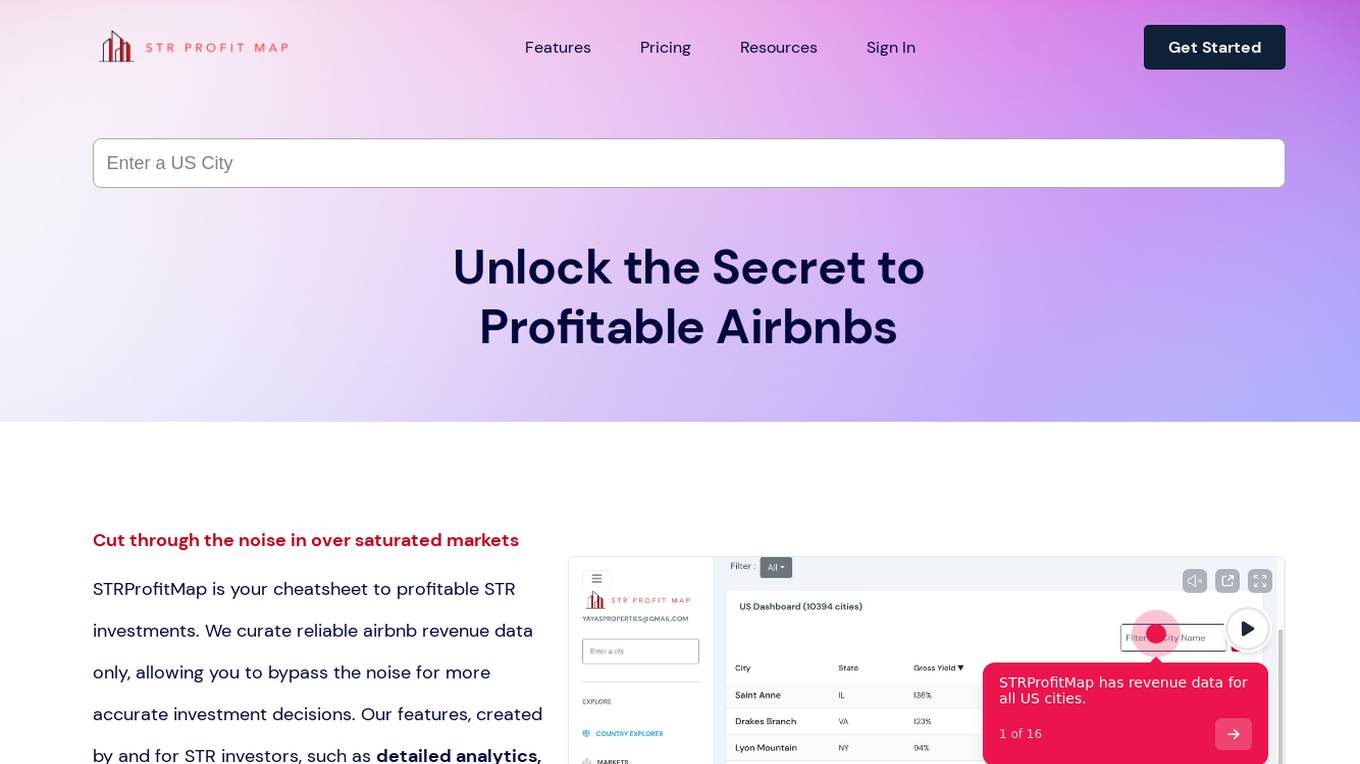

STRProfitMap

STRProfitMap is an AI-powered tool designed to help short-term rental (STR) investors make informed and profitable decisions in the Airbnb market. It provides reliable revenue data sourced from Airbnb and VRBO, detailed analytics, profit maps, and AI-driven buy boxes to streamline the investment process. The platform curates data from over 11,000 cities and markets, offering insights into occupancy rates, average daily rates, property values, and estimated return on investment (ROI). By focusing on reliable listings with proven performance, STRProfitMap aims to reduce noise and uncertainty in the market, helping users identify top-performing markets with confidence.

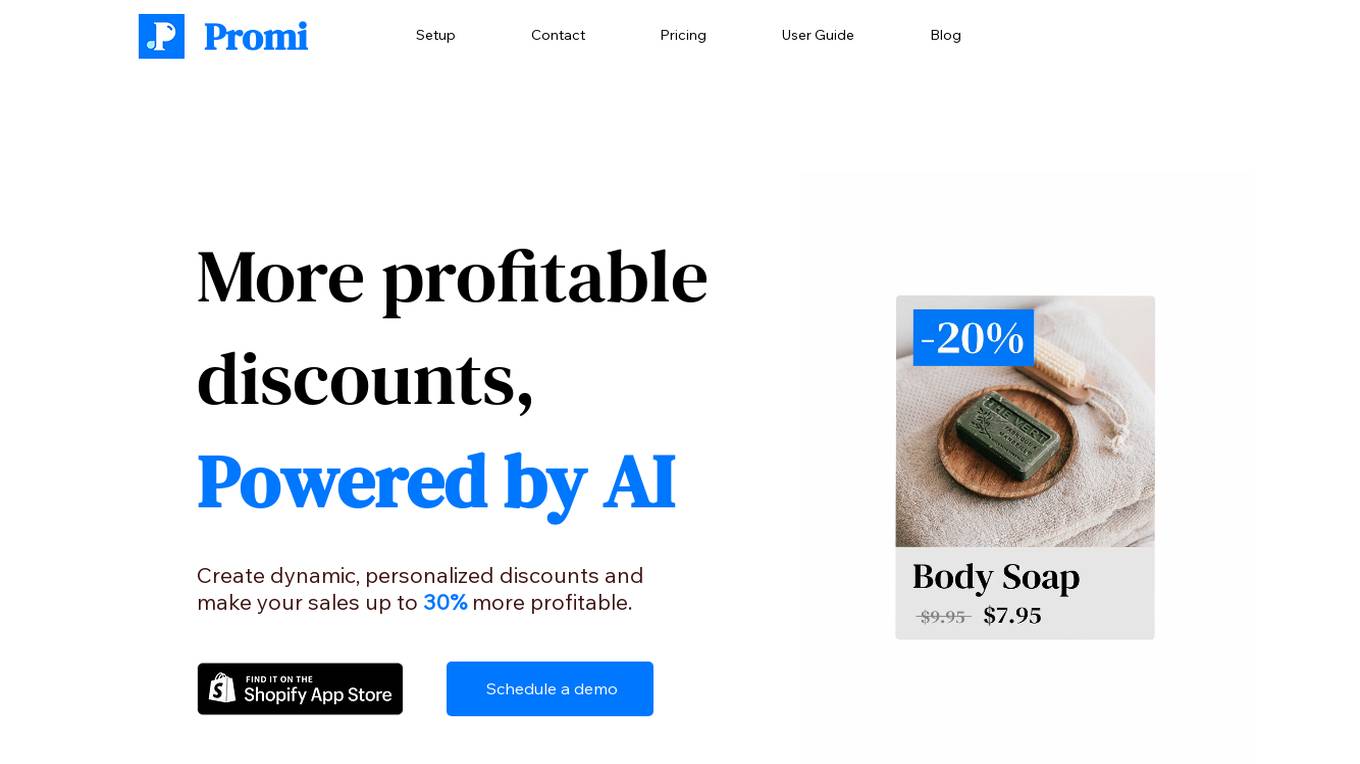

Promi

Promi is an AI-powered application that helps businesses create dynamic, personalized discounts, with the stated aim of increasing sales profitability by up to 30%. It offers personalization features, dynamic clearance sales, product-level optimization, configurable price updates, and deep links for marketing. Promi uses AI models to vary discount values according to each user's purchase intent, helping products sell efficiently. The application integrates with major apps such as Klaviyo and offers easy installation for both basic and advanced features.

Halfspace

Halfspace is an award-winning data, advanced analytics, and AI company that helps companies drive growth by applying advanced analytics and artificial intelligence. Its team of physicists, engineers, computer scientists, and designers is data-driven to the core and works to capture value from data and realize its full potential. Halfspace works with bold and ambitious clients to create long-term value, putting quality above all.

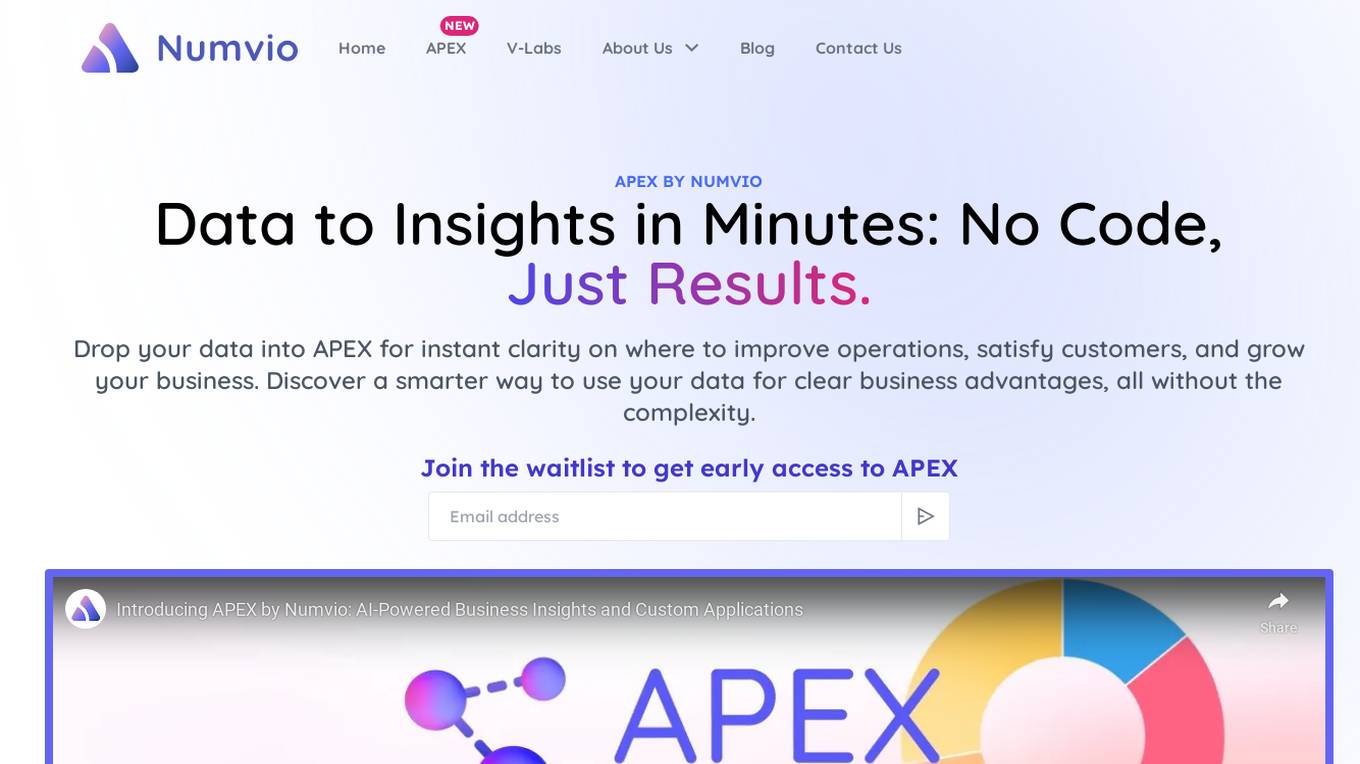

APEX by Numvio

Numvio's APEX is a groundbreaking no-code AI platform that empowers businesses to unlock the full potential of their data and drive real impact. With APEX, you can effortlessly implement customized AI solutions, delivered as user-friendly tools or APIs, without the need for complex tech infrastructure or coding expertise. APEX intelligently analyzes your data's potential and provides valuable insights, helping you make informed decisions and drive your business forward.

maya.ai

Crayon Data's maya.ai is an AI-led revenue acceleration platform for enterprises. It helps businesses unlock the value of their data to increase customer engagement and revenue. The platform offers a range of capabilities, including data cleaning and enrichment, personalized recommendations, and plug-and-play APIs. Leading global enterprises have used maya.ai to increase revenue, improve customer engagement, and reduce time to market.

Querri

Querri is an AI-powered analytics platform that allows users to interact with their data through natural language queries, providing instant insights and shareable dashboards. It simplifies the data workflow for non-technical business users by offering features such as data cleansing, integration, personalized AI data analyst, drag-and-drop dashboards, and enterprise-grade security.

DEUS

DEUS is a data and artificial intelligence company that helps organizations create value by unlocking what is in their data and applying AI services. It offers services in data science, engineering, design, and strategy, partnering with organizations to benefit people, business, and society. DEUS also addresses wicked problems and societal challenges through human-centered artificial intelligence initiatives. It helps organizations launch AI projects that create real value and partners with them across the product and service lifecycle.

DocAI

DocAI is an API-driven platform that lets you add contract AI to your applications without developing it from the ground up. Its AI identifies and extracts more than 1,300 common legal clauses, provisions, and data points from a variety of document types, including unstructured documents and contracts written in non-standard language. These out-of-the-box AI fields are built and trained by experienced lawyers and subject matter experts. The platform offers a low-code experience: users can train new fields without a data scientist, needing only subject matter expertise. Deployment is flexible and scalable, with options in the Zuva-hosted cloud or on premises, across multiple geographic regions.

0 - Open Source AI Tools

20 - OpenAI GPTs

Keynote Speaker/Human Values Expert David Allison

I respond to prompts as David Allison, human values expert, best-selling author, keynote speaker, and founder of the Valuegraphics Project.

Competitor Value Matrix

Analyzes websites, compares value elements, and organizes data into a table.

AI Market Analyzer

Analyzes markets and offers predictions on commodities, crypto, and companies.

Value Investor's Stock Assistant

I assist in analyzing stocks with a detail-oriented, patient, data-driven approach, drawing from a wide range of expert authors.

ENS Hex Domain Analyst

Systematically analyzes ENS domains & hex colors, calculating their ETH value.

ConsultorIA

I develop AI implementation proposals based on your specific needs, focusing on value and affordability.

AI Use Case Analyst for Sales & Marketing

Enables sales & marketing leadership to identify high-value AI use cases.

Finance Business Partnering Advisor

Advisor for finance business partnering, offering insights on how to add value through your work as a finance business professional.

PragmaPilot - A Generative AI Use Case Generator

Show me your job description or just describe what you do professionally, and I'll help you identify high-value use cases for AI in your day-to-day work. I'll also coach you on simple techniques to get the best out of ChatGPT.

Financial Modeling GPT

Expert in financial modeling for valuation, budgeting, and forecasting.

Traditional Values

Explores traditional values and their cultural impact, in an informative tone.