Best AI tools for Analyze Figures

20 - AI tool Sites

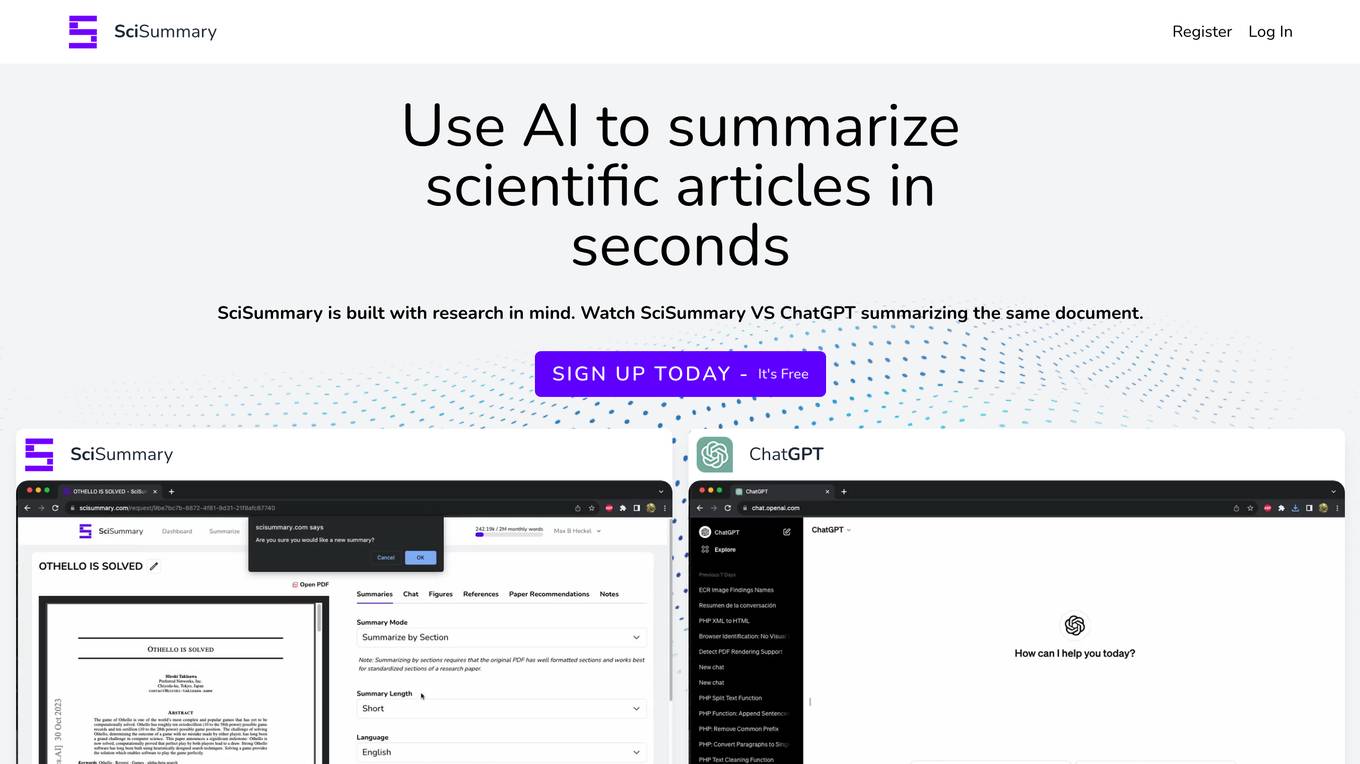

SciSummary

SciSummary is an AI tool designed to summarize scientific articles and research papers quickly and efficiently. It utilizes advanced AI technology, specifically GPT-3.5 and GPT-4 models, to provide accurate and concise summaries for busy scientists, students, and enthusiasts. The platform allows users to submit documents via email, upload articles to the dashboard, or attach PDFs for summarization. With features like unlimited summaries, figure and table analysis, and chat messages, SciSummary is a valuable resource for researchers looking to stay updated with the latest trends in research.

Unlocksales.ai

Unlocksales.ai is an AI-powered platform that revolutionizes B2B lead generation by offering services such as Email Campaigns, LinkedIn Campaigns, Targeted Calling, PPC Ads, and Sales Chatbots. The platform empowers sales teams with cutting-edge technology, personalized interactions, and continuous improvement to engage leads effectively. It prioritizes exceptional customer experience, seamless interactions, and brand consistency across multiple channels. Unlocksales.ai aims to expand into AI B2C Lead Generation and other AI-based services to drive industry success.

Echoes of History AI

Echoes of History AI is an innovative AI tool that utilizes advanced artificial intelligence algorithms to analyze historical data and provide insights into historical events. The tool is designed to help researchers, historians, and enthusiasts gain a deeper understanding of the past by uncovering hidden patterns and connections in historical records. With its cutting-edge technology, Echoes of History AI offers a unique and efficient way to explore and interpret historical data, making it a valuable resource for anyone interested in history.

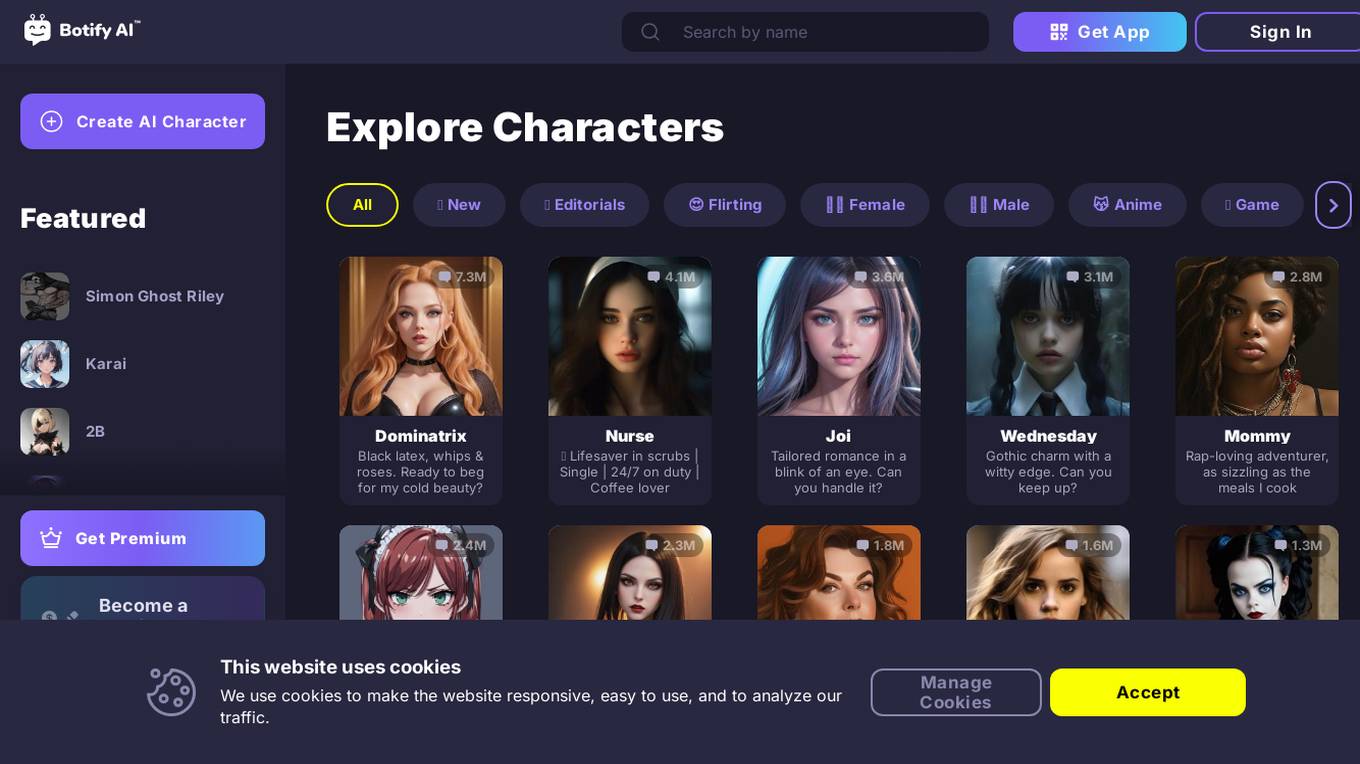

Botify AI

Botify AI is an AI-powered tool designed to assist users in optimizing their website's performance and search engine rankings. By leveraging advanced algorithms and machine learning capabilities, Botify AI provides valuable insights and recommendations to improve website visibility and drive organic traffic. Users can analyze various aspects of their website, such as content quality, site structure, and keyword optimization, to enhance overall SEO strategies. With Botify AI, users can make data-driven decisions to enhance their online presence and achieve better search engine results.

Botify AI

Botify AI is an AI tool that helps users optimize their website for search engines. It provides advanced analytics and insights to improve website performance and increase visibility on search engine results pages. With Botify AI, users can track and analyze key metrics, identify opportunities for optimization, and monitor the impact of changes on search engine rankings. The tool offers a user-friendly interface and actionable recommendations to help users make data-driven decisions and achieve their SEO goals.

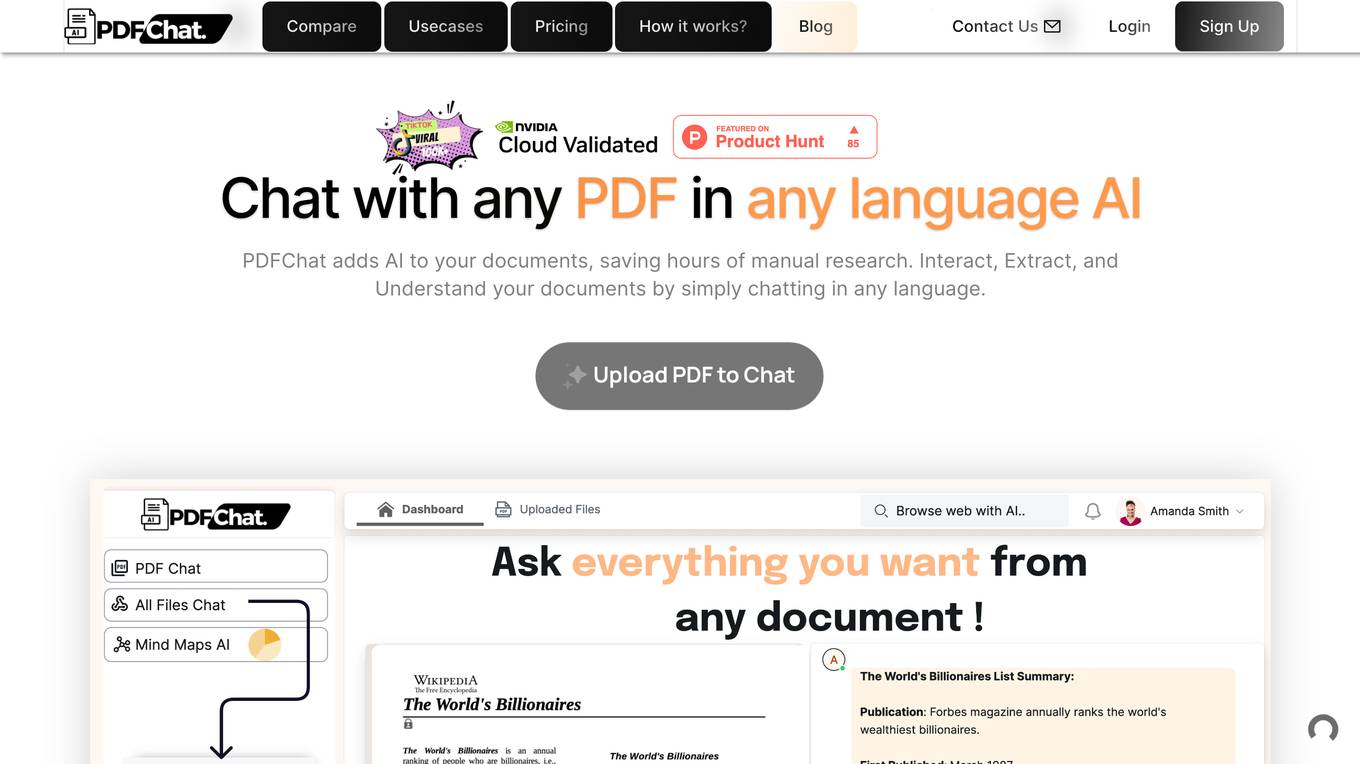

xPDF AI by PDFChat

xPDF AI by PDFChat is a personal AI assistant designed for PDF files. It offers advanced features to analyze tables, figures, and text from PDF documents, providing users with instant answers and insights. The AI assistant uses a chat interface for effortless interaction and is capable of summarizing PDF files, retrieving relevant figures, processing tables intelligently, and performing accurate calculations. Users can also benefit from voice chat, advanced search tools, performance analytics, report generation, and document assistance. With over 10,000 users trusting the platform, PDFChat aims to revolutionize document analysis and enhance productivity.

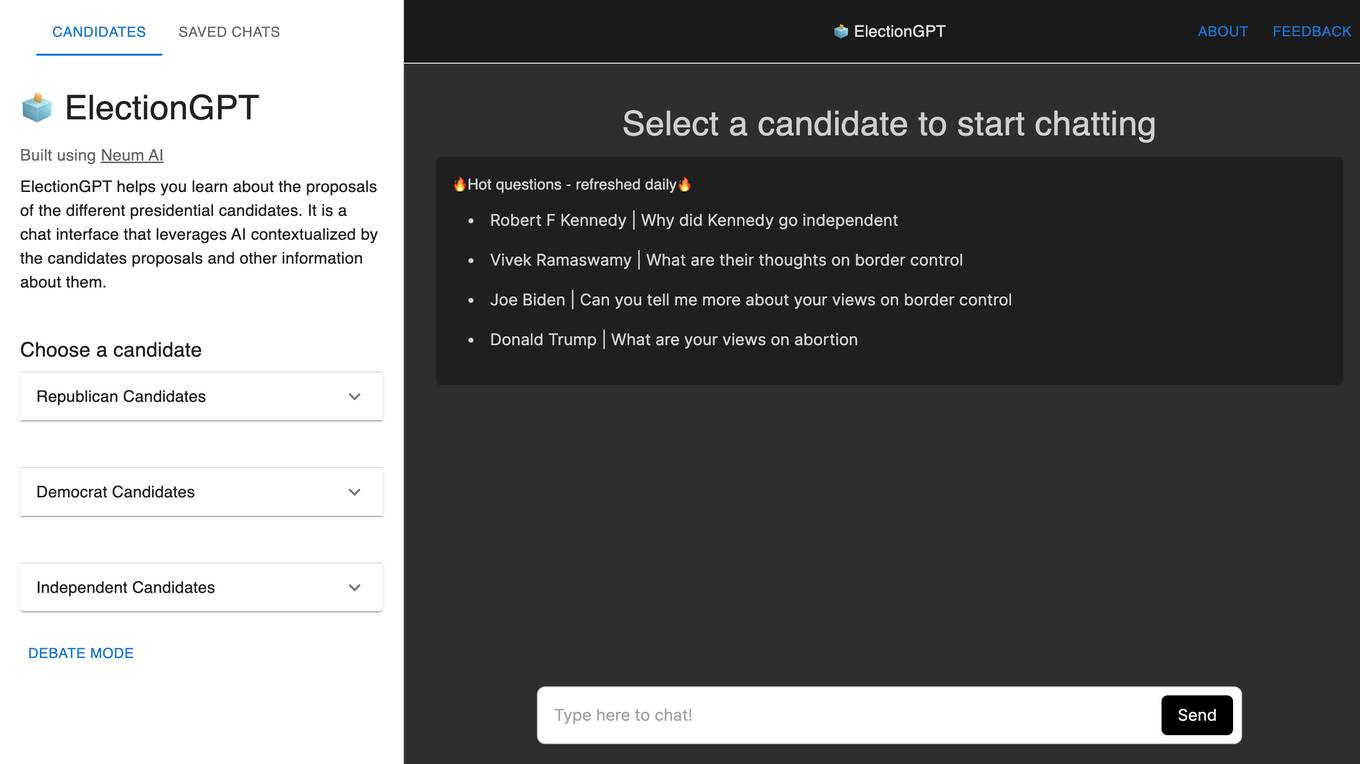

ElectionGPT

ElectionGPT is an AI-powered chatbot application that allows users to engage in simulated conversations with virtual representations of political candidates. Users can select a candidate and start chatting to ask questions or discuss various topics. The platform offers a unique and interactive way for users to learn about the views and opinions of different political figures. With a focus on providing insightful and engaging conversations, ElectionGPT aims to enhance user understanding of political issues and candidates.

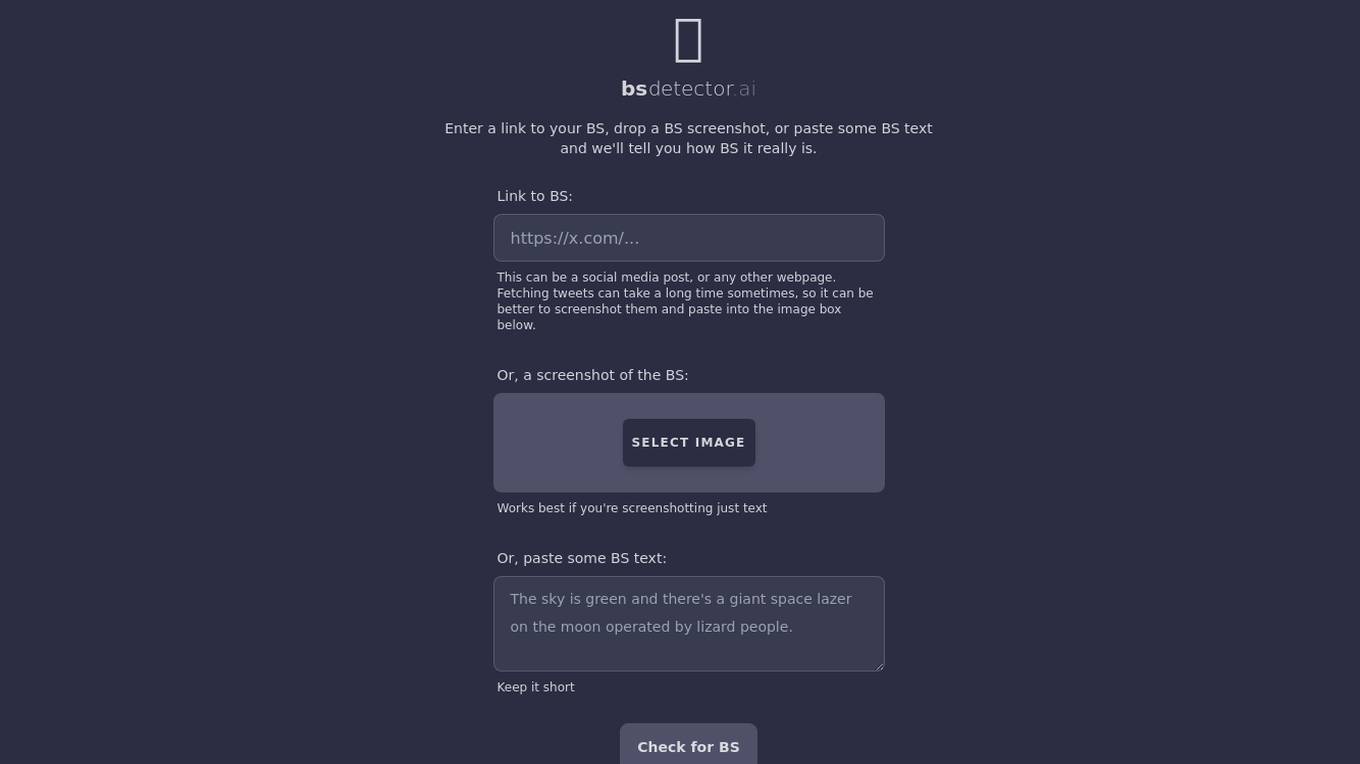

BS Detector

BS Detector is an AI tool designed to help users determine the credibility of information by analyzing text or images for misleading or false content. Users can input a link, upload a screenshot, or paste text to receive a BS (Bullshit) rating. The tool leverages AI algorithms to assess the accuracy and truthfulness of the provided content, offering users a quick and efficient way to identify potentially deceptive information.

Elicit

Elicit is a research tool that uses artificial intelligence to help researchers analyze research papers more efficiently. It can summarize papers, extract data, and synthesize findings, saving researchers time and effort. Elicit is used by over 800,000 researchers worldwide and has been featured in publications such as Nature and Science. It is a powerful tool that can help researchers stay up-to-date on the latest research and make new discoveries.

Plerdy

Plerdy is a comprehensive suite of conversion rate optimization tools that helps businesses track, analyze, and convert their website visitors into buyers. With a range of features including website heatmaps, session replay software, pop-up software, website feedback tools, and more, Plerdy provides businesses with the insights they need to improve their website's usability and conversion rates.

TimeComplexity.ai

TimeComplexity.ai is an AI tool that helps users analyze the runtime complexity of their code. It can be used across different programming languages without the need for headers, imports, or a main statement. Users can input their code and get insights into its time complexity. However, it is important to note that the results may not always be accurate, so caution is advised when using the tool.

StockGPT

StockGPT is an AI-powered financial research assistant that provides knowledge of earnings releases, financial reports, and fundamental information for S&P 500 and Nasdaq companies. It offers features like AI search, customizable filters, up-to-date data, and industry research to help users analyze companies and markets more efficiently.

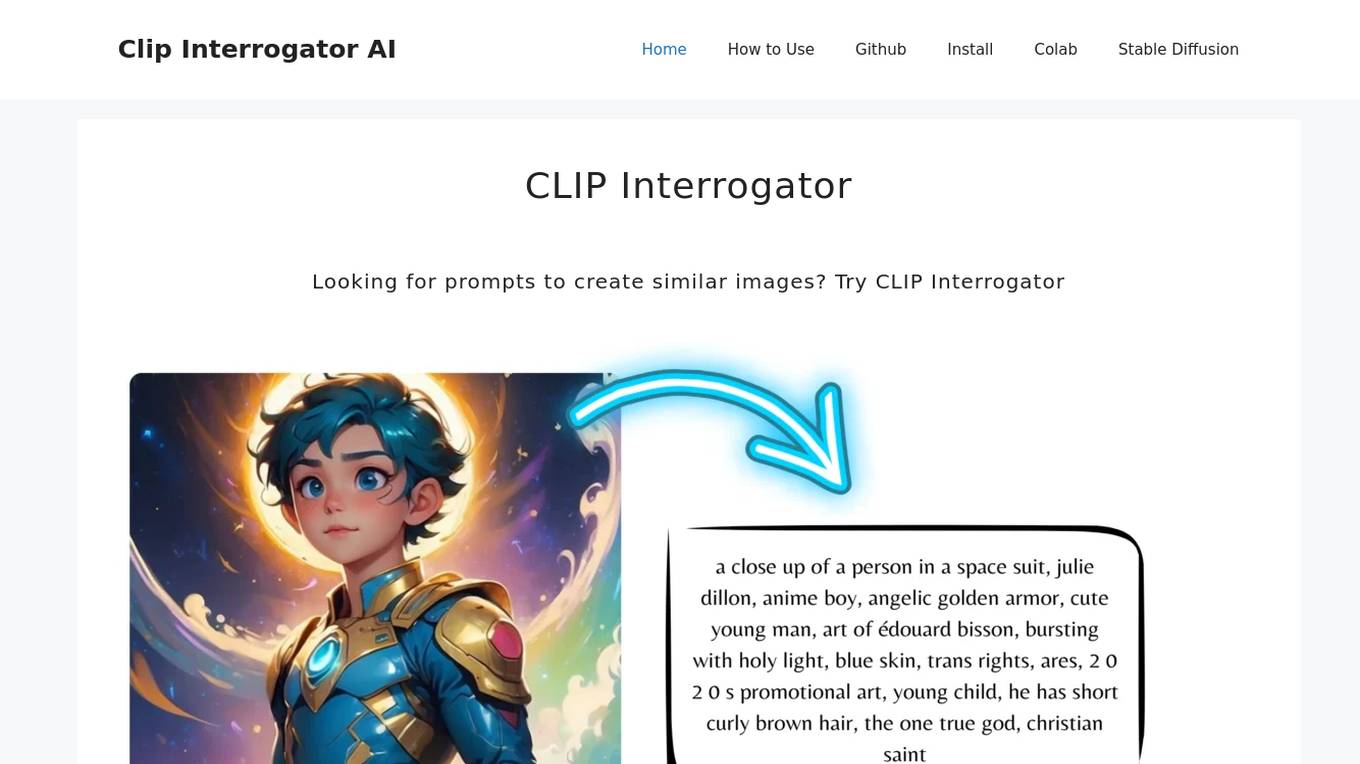

CLIP Interrogator

CLIP Interrogator is a tool that uses the CLIP (Contrastive Language–Image Pre-training) model to analyze images and generate descriptive text or tags. It effectively bridges the gap between visual content and language by interpreting the contents of images through natural language descriptions. The tool is particularly useful for understanding or replicating the style and content of existing images, as it helps in identifying key elements and suggesting prompts for creating similar imagery.

Surveyed.live

Surveyed.live is an AI-powered video survey platform that allows businesses to collect feedback and insights from customers through customizable survey templates. The platform offers features such as video surveys, AI touch response, comprehensible dashboard, Chrome extension, actionable insights, integration, predefined library, appealing survey creation, customer experience statistics, and more. Surveyed.live helps businesses enhance customer satisfaction, improve decision-making, and drive business growth by leveraging AI technology for video reviews and surveys. The platform caters to various industries including hospitality, healthcare, education, customer service, delivery services, and more, providing a versatile solution for optimizing customer relationships and improving overall business performance.

DINGR

DINGR is an AI-powered solution designed to help gamers analyze their performance in League of Legends. The tool uses AI algorithms to provide accurate insights into gameplay, comparing performance metrics with friends and offering suggestions for improvement. DINGR is currently in development, with a focus on enhancing the gaming experience through data-driven analysis and personalized feedback.

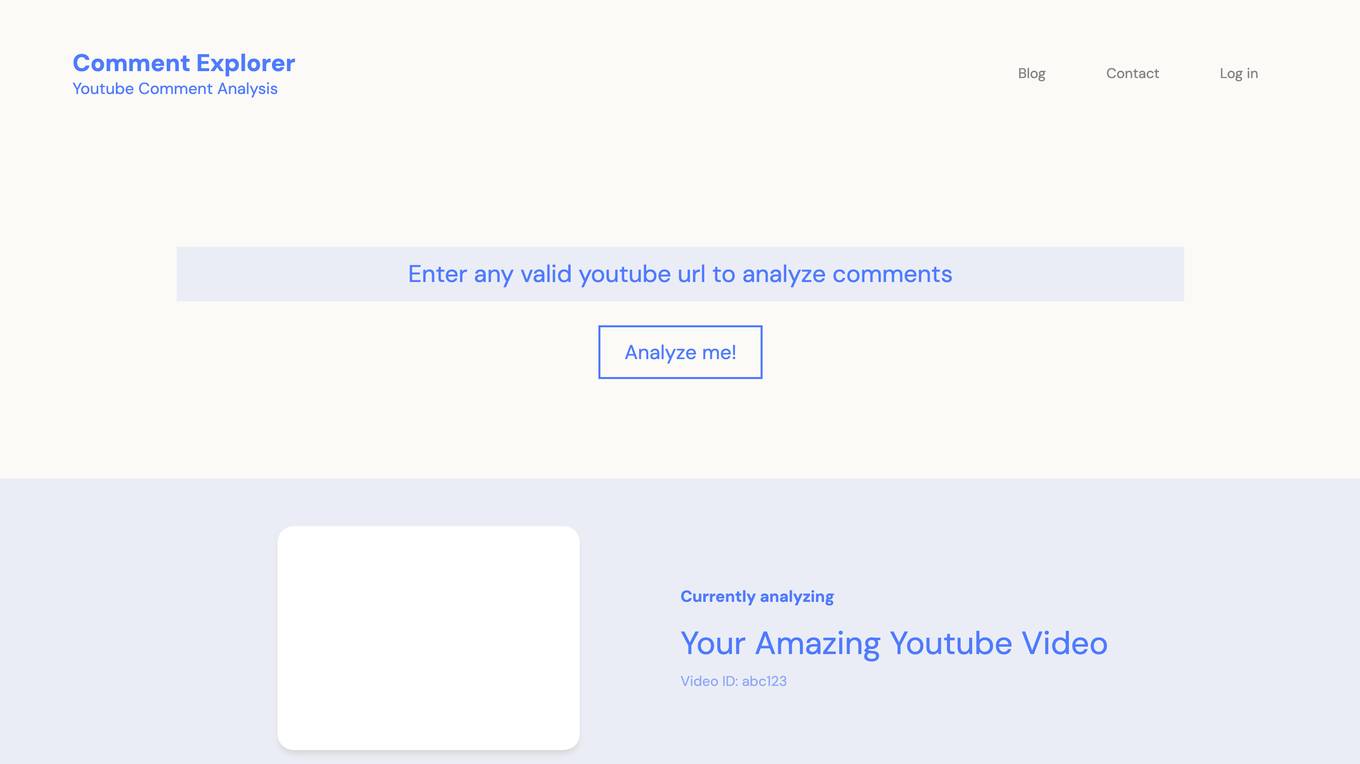

Comment Explorer

Comment Explorer is a free tool that allows users to analyze comments on YouTube videos. Users can gain insights into audience engagement, sentiment, and top subjects of discussion. The tool helps content creators understand the impact of their videos and improve interaction with viewers.

AI Tech Debt Analysis Tool

This website is an AI tool that helps senior developers analyze AI tech debt. AI tech debt is the technical debt that accumulates when AI systems are developed and deployed. It can be difficult to identify and quantify AI tech debt, but it can have a significant impact on the performance and reliability of AI systems. This tool uses a variety of techniques to analyze AI tech debt, including static analysis, dynamic analysis, and machine learning. It can help senior developers to identify and quantify AI tech debt, and to develop strategies to reduce it.

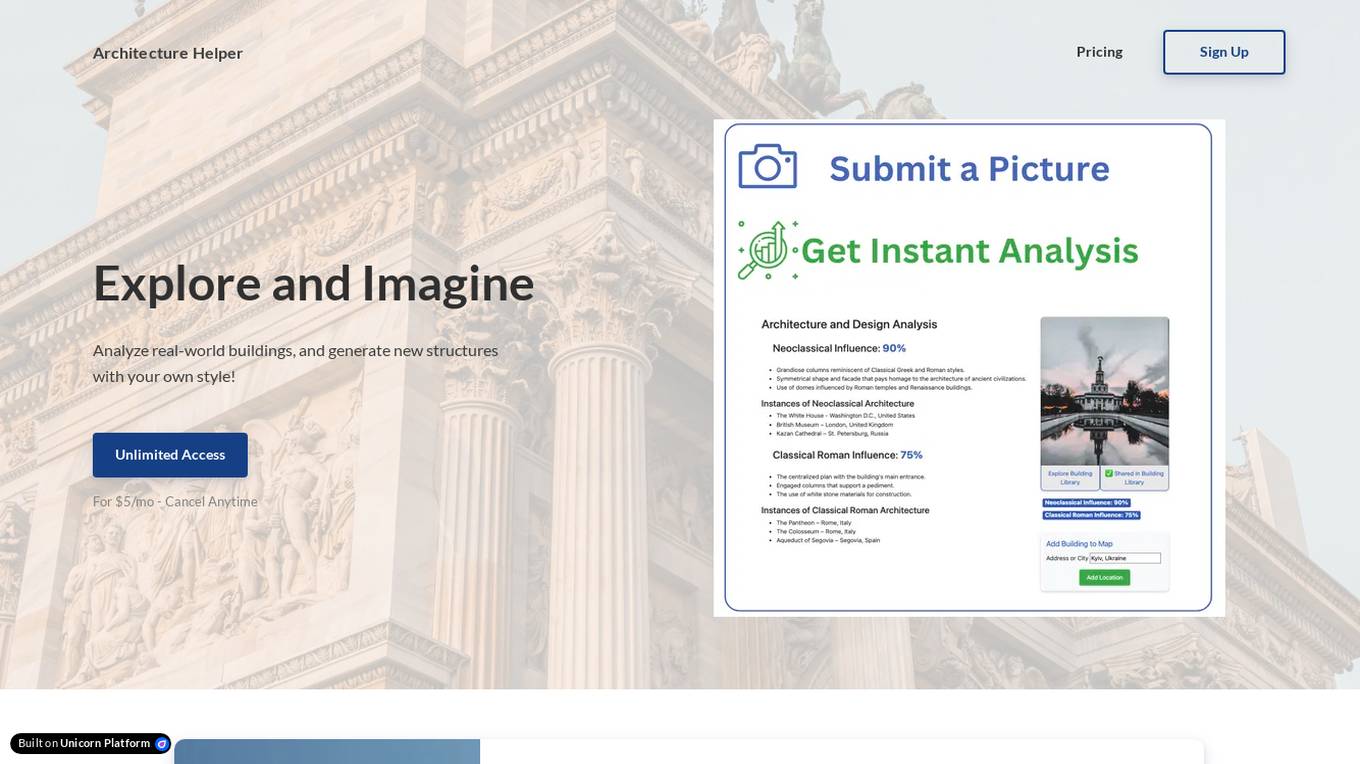

Architecture Helper

Architecture Helper is an AI-based application that allows users to analyze real-world buildings, explore architectural influences, and generate new structures with customizable styles. Users can submit images for instant design analysis, mix and match different architectural styles, and create stunning architectural and interior images. The application provides unlimited access for $5 per month, with the flexibility to cancel anytime. Named as a 'Top AI Tool' in Real Estate by CRE Software, Architecture Helper offers a powerful and playful tool for architecture enthusiasts to explore, learn, and create.

DISPL

DISPL is an AI-powered audience analytics and smart digital signage platform that helps businesses analyze, engage, and monetize offline audience behavior to increase sales. It offers solutions for visitor insights, impression analytics, smart digital signage, self-service portal, direct ad sales, and more. DISPL is designed to prioritize privacy and security by collecting only anonymous attributes of visitors, ensuring data cannot be used to identify specific individuals. The platform is compliant with global data protection standards such as LGPD and GDPR, making it a trusted solution for various industries including media owners, consumer electronics, restaurants, hotels, and more.

ChatInDoc

ChatInDoc is an AI-powered tool designed to revolutionize the way people interact with and comprehend lengthy documents. By leveraging cutting-edge AI technology, ChatInDoc offers users the ability to efficiently analyze, summarize, and extract key information from various file formats such as PDFs, Office documents, and text files. With features like IR analysis, term lookup, PDF viewing, and AI-powered chat capabilities, ChatInDoc aims to streamline the process of digesting complex information and enhance productivity. The application's user-friendly interface and advanced AI algorithms make it a valuable tool for students, professionals, and anyone dealing with extensive document reading tasks.

0 - Open Source AI Tools

20 - OpenAI GPTs

History Hunter

Delves into historical events, figures, or eras based on user queries. It can provide detailed narratives, analyze historical contexts, and even create engaging stories or hypothetical scenarios based on historical facts, making learning history interactive and fun.

HistoryExplorer

A multilingual historical guide on significant figures, blending facts with engaging analyses.

SaaS Product Scout

I'm a professional SaaS product analyst who helps you quickly figure out a product's value proposition, features, user scenarios, advantages, and more.

What is my dog thinking?

Upload a candid photo of your dog and let AI try to figure out what’s going on.

What is my cat thinking?

Upload a candid photo of your cat and let AI try to figure out what’s going on.

Chess Mentor

From novice to grandmaster, this enigmatic figure will be your guide, blending chess mastery with philosophical depth.

Wowza Bias Detective

I analyze cognitive biases in scenarios and thoughts, providing neutral, educational insights.

Art Engineer

Analyze and reverse engineer images. Receive style descriptions and image re-creation prompts.

Stock Market Analyst

I read and analyze annual reports of companies. Just upload the annual report PDF and start asking me questions!

Good Design Advisor

As a Good Design Advisor, I provide consultation and advice on design topics and analyze designs that are provided through documents or links. I can also generate visual representations myself to illustrate design concepts.

History Perspectives

I analyze historical events, offering insights from multiple perspectives.

Automated Knowledge Distillation

For strategic knowledge distillation, upload the document you need to analyze and use !start. ENSURE the uploaded file shows DOCUMENT and NOT PDF. This workflow requires leveraging RAG to operate. Only a small number of PDFs are supported; convert to txt or doc. On timeout, refresh and use !continue.

Art Enthusiast

Analyze any uploaded art piece, providing thoughtful insight on the history of the piece and its maker. Replicate art pieces in new styles generated by the user. Be an overall expert in art and help users navigate the art scene. Inform them of different types of art.

Historical Image Analyzer

A tool for historians to analyze and catalog historical images and documents.