Best AI Tools to Accelerate Workflows

20 - AI tool Sites

Saxon AI

Saxon AI is an enterprise AI partner providing agentic AI solutions tailored to various industries and teams. Its AI suite, AIssist, targets faster B2B sales growth, smarter financial decisions, faster legal reviews, streamlined HR processes, optimized procurement spend, proactive customer support, faster IT issue resolution, and leaner, more agile operations. Saxon's stated focus is AI that "adds to the top line, not the to-do list," delivered through verticalized solutions for different industries and role-specific AI agents. Its 4A framework combines apps, analytics, AI, and automation, with the goal of making AI investments impactful and future-ready. Saxon also offers purpose-built solutions, accelerators, and a partner ecosystem to enhance client value.

Meshy AI

Meshy AI is an AI 3D model generator that lets users transform artwork, images, and text into 3D models. Its features include image-to-3D conversion, detailed 3D model creation from text prompts, AI texturing, animation, and API integration. The platform serves a wide range of industries, including game development, education, product design, film production, VR/AR, and interior design. Meshy AI also offers an active community, fine-grained control over asset creation, and enterprise-grade controls for organizations.

Crunchbase Solutions

Crunchbase Solutions is an AI-powered company intelligence platform that helps users find prospects and investors, conduct market research, enrich databases, and build products. Its products include Crunchbase Pro and Crunchbase Enterprise, which provide personalized recommendations, AI-powered insights, and tools for company discovery and research. Crunchbase Solutions aims to help users make better decisions about investments, pipeline management, fundraising, partnerships, and product development. The platform's data comes from contributors, partners, in-house experts, and AI algorithms, with a focus on data quality and compliance with SOC 2 Type II standards.

TractoAI

TractoAI is an AI platform that offers deep learning solutions for various industries. It provides batch inference with no rate limits and offline DeepSeek inference, and it supports training open-source AI models. TractoAI simplifies training infrastructure setup, accelerates workflows with GPUs, and automates deployment and scaling for tasks such as ML training and big data processing. The platform supports model fine-tuning, sandboxed code execution, and building custom AI models with a distributed training launcher. It is designed to be developer-friendly, scalable, and efficient, and it offers a solution library and expert guidance for AI projects.

Search Atlas

Search Atlas bills itself as the #1 AI SEO automation platform for agencies and brands. It offers a suite of tools for managing Google Ads with AI, automating SEO tasks, generating AI content, conducting keyword research, and optimizing website performance. Its offerings include enterprise SEO software, a local citation builder, content tools such as an AI content writer and a content planner, research tools such as an outreach tool and a site explorer, and branding customization options. Search Atlas aims to help agencies and brands improve strategy, accelerate workflows, and deliver quicker wins in organic marketing campaigns.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, support for the full AI life cycle, and strategic resource management. By pooling GPU resources across environments and applying advanced orchestration, the platform aims to raise GPU efficiency and workload capacity. NVIDIA Run:ai supports public clouds, private clouds, hybrid environments, and on-premises data centers.

DocuBridge

DocuBridge is financial data automation software designed to accelerate audit and financial tasks through its AI Excel Add-In, which it says makes data entry and structuring 10x faster. Built for finance and audit professionals, it streamlines Excel workflows and bridges documents into data workflows, from entry to analysis. The platform also offers versatile integrations, data privacy controls, and SOC 2 compliance for data protection.

Athena Intelligence

Athena Intelligence is an AI-native analytics platform and "artificial employee" designed to accelerate analytics workflows by offering enterprise teams co-pilot and auto-pilot modes. In co-pilot mode, Athena learns your workflow, after which you can hand over control to her for autonomous execution. With Athena, everyone in an enterprise has access to a data analyst who doesn't take days off. Athena integrates with your enterprise data warehouse, and you can chat with her to query data, generate visualizations, analyze enterprise data, and codify workflows. Athena's AI learns from existing documentation, data, and analyses, allowing teams to focus on creating new insights. The platform is collaborative: co-workers and Athena can work side by side, with over 100 users editing the same report or whiteboard concurrently. From simple queries and visualizations to complex industry-specific workflows, Athena provides SQL- and Python-based execution environments.

Gatsbi

Gatsbi is a research paper generator and AI research assistant that helps users discover original research ideas, draft scientific papers, write patent documents, and conduct systematic reviews and meta-analyses. It offers features tailored for researchers and innovators and uses AI-driven ideation to streamline the research process. Gatsbi is designed to accelerate research workflows, save time, and help generate new ideas across various fields.

Fermat

Fermat is an AI toolmaker that allows users to build their own AI workflows and accelerate their creative process. It is trusted by professionals in fashion design, product design, interior design, and brainstorming. Fermat's unique features include the ability to blend AI models into tools that fit the way users work, embed processes in reusable tools, keep teams on the same page, and embed users' own style to get coherent results. With Fermat, users can visualize their sketches, change colors and materials, create photo shoots, turn images into vectors, and more. Fermat offers a free Starter plan for individuals and a Pro plan for teams and professionals.

VoxCraft

VoxCraft is a free 3D AI generator that allows users to create realistic 3D models using artificial intelligence technology. With VoxCraft, users can easily generate detailed 3D models without the need for advanced design skills or software. The application leverages AI algorithms to streamline the modeling process and produce high-quality results. Whether you are a beginner or an experienced designer, VoxCraft offers a user-friendly platform to bring your creative ideas to life in the world of 3D modeling.

Encord

Encord is a data development platform designed for computer vision and multimodal AI teams. It offers tools to manage, clean, and curate data, streamline labeling and workflow management, and evaluate AI model performance. With features such as data indexing, annotation, and active model evaluation, Encord helps users accelerate their AI data workflows and build robust models efficiently.

Daloopa

Daloopa is an AI financial modeling tool designed to automate fundamental data updates for financial analysts working in Excel. It helps analysts build and update financial models efficiently by eliminating manual work and providing accurate, auditable data points sourced from thousands of companies. Daloopa uses AI to deliver complete data sets far faster than manual collection allows, enabling analysts to focus on analysis, insight generation, and idea development to drive better investment decisions.

Juro

Juro is an intelligent contract automation platform that helps modern businesses agree on and manage contracts faster in one AI-native workspace. Its features include creating contracts from browser-native templates, automating contract reminders, integrating with core platforms, and advanced electronic signature capabilities. Juro also lets users extract key data from contracts, collaborate through AI-native workflows, and track obligations and risks automatically. With a focus on security and efficiency, Juro is designed to streamline contract management for legal, HR, procurement, sales, and finance teams across various industries.

Synthetic Data Generation for Agentic AI

The website provides information on Synthetic Data Generation for Agentic AI, offering high-quality, domain-specific synthetic data to accelerate the development of agentic workflows. It explains the technical implementation, benefits, and usage of synthetic data in training specialized agentic systems. The site also showcases related use cases, quick links for further exploration, and detailed steps for generating synthetic data.

FlashIntel

FlashIntel is a revenue acceleration platform that offers a suite of tools to streamline sales and partnership processes. Its features include real-time enrichment, personalized messaging, sequences and cadences, email deliverability, parallel dialing, and account-based marketing. The platform aims to help businesses uncover ideal prospects, surface key insights, craft compelling outreach sequences, research companies and contacts in real time, and execute omnichannel sequences with AI personalization.

CodeScope

CodeScope is an AI tool designed to help users build and edit AI applications. Its features include one-click code and SEO performance optimization, an AI app builder, API creation, a headless CMS, development tools, and SEO reporting. CodeScope aims to improve the development workflow by giving developers and marketers a single solution that enhances collaboration and efficiency across development and marketing work.

Qlerify

Qlerify is an AI-powered software modeling tool that helps digital transformation teams accelerate the digitalization of enterprise business processes. It lets users quickly create workflows with AI, generate source code in minutes, and reuse actionable models in various formats. Qlerify supports frameworks such as Event Storming, Domain-Driven Design, and Business Process Modeling, with a user-friendly interface for collaborative modeling.

Boomi

Boomi is an AI-powered integration and automation platform that simplifies and accelerates business processes by leveraging generative AI capabilities. With over 20,000 customers worldwide, Boomi offers flexible pricing for small to enterprise-level businesses, ensuring security and compliance with regulatory standards. The platform enables seamless integration, automation, and management of applications, data, APIs, workflows, and event-driven integrations. Boomi AI Agents provide advanced features like AI-powered data classification, automated data mapping, error resolution, and process documentation. Boomi AI empowers businesses to streamline operations, enhance efficiency, and drive growth through proactive business intelligence and cross-team collaboration.

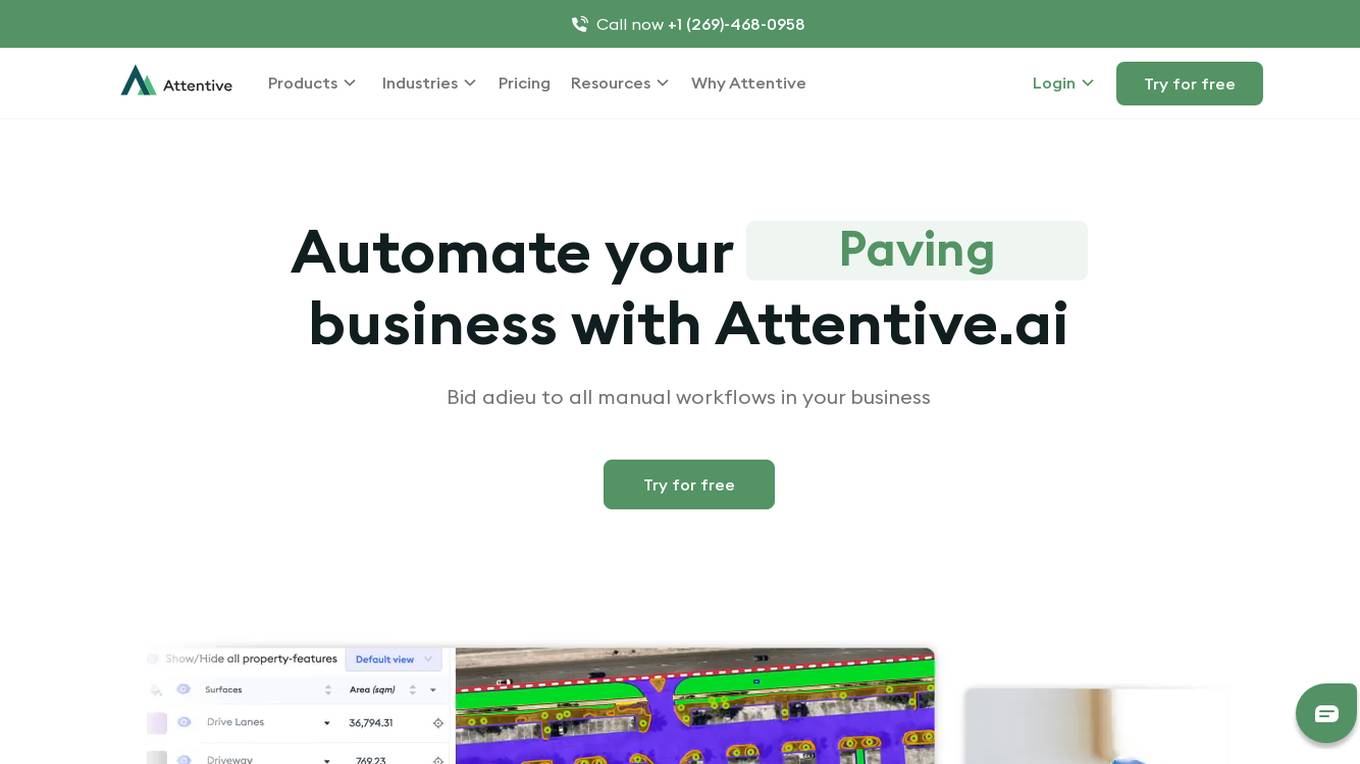

Attentive.ai

Attentive.ai is an AI-powered platform designed to automate operations for landscaping, paving, and construction businesses. It offers end-to-end automation for industry needs such as takeoff measurements, workflow management, and bid automation. The platform uses AI algorithms to provide accurate measurements, streamline workflows, and optimize business operations. With features such as automated takeoffs, smart scheduling, and real-time insights, Attentive.ai aims to improve sales efficiency, production, and back-office operations for field service businesses. Trusted by over 750 field service businesses, Attentive.ai is a comprehensive solution for managing landscape maintenance and construction projects.

0 - Open Source AI Tools

7 - OpenAI GPTs

Material Tailwind GPT

Accelerate web app development with Material Tailwind GPT's components - 10x faster.

Tourist Language Accelerator

Accelerates the learning of key phrases and cultural norms for travelers in various languages.

Digital Entrepreneurship Accelerator Coach

The Go-To Coach for Aspiring Digital Entrepreneurs, Innovators, & Startups. Learn More at UnderdogInnovationInc.com.

24 Hour Startup Accelerator

A humorous, strategic, niche-focused startup guide that simplifies ideas.

Backloger.ai - Product MVP Accelerator

Drop in any requirements or any text; I'll help you create an MVP with insights.

Digital Boost Lab

A guide for developing university-focused digital startup accelerator programs.