Best AI tools for Accelerating Language Model Inference

20 - AI tool Sites

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

Cerebras

Cerebras is an AI tool that offers products and services related to AI supercomputers, cloud system processors, and applications for various industries. It provides high-performance computing solutions, including large language models, and caters to sectors such as health, energy, government, scientific computing, and financial services. Cerebras specializes in AI model services, offering state-of-the-art models and training services for tasks like multi-lingual chatbots and DNA sequence prediction. The platform also features the Cerebras Model Zoo, an open-source repository of AI models for developers and researchers.

Cerebras

Cerebras is a leading AI tool and application provider that offers cutting-edge AI supercomputers, model services, and cloud solutions for various industries. The platform specializes in high-performance computing, large language models, and AI model training, catering to sectors such as health, energy, government, and financial services. Cerebras empowers developers and researchers with access to advanced AI models, open-source resources, and innovative hardware and software development kits.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

UpRizz

UpRizz is an AI-powered tool that helps users increase their Instagram followers and engagement by writing better comments. It uses advanced AI models to generate personalized comments that are tailored to each post, making it easy for users to connect with their audience and grow their influence on Instagram.

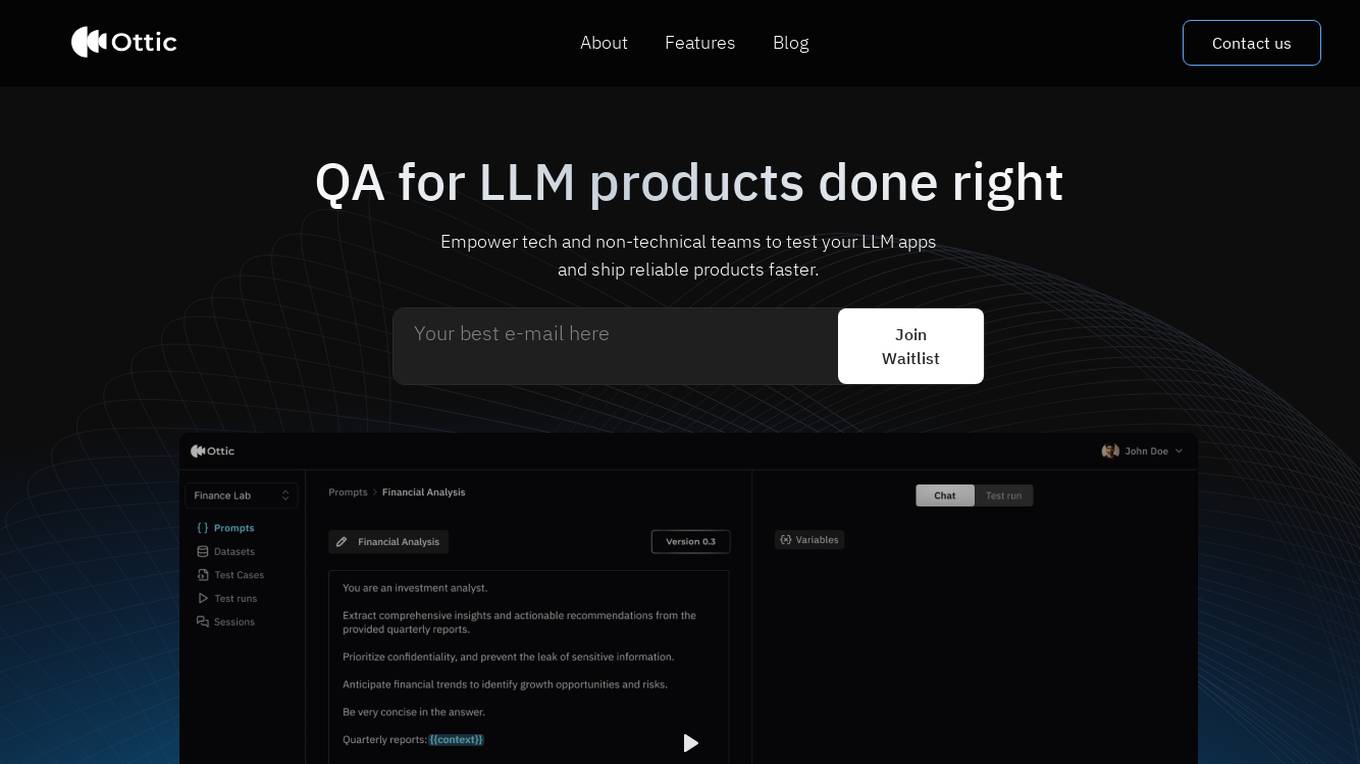

Ottic

Ottic is an AI tool designed to empower both technical and non-technical teams to test Large Language Model (LLM) applications efficiently and accelerate the development cycle. It offers features such as a 360° view of the QA process, end-to-end test management, comprehensive LLM evaluation, and real-time monitoring of user behavior. Ottic aims to bridge the gap between technical and non-technical team members, ensuring seamless collaboration and reliable product delivery.

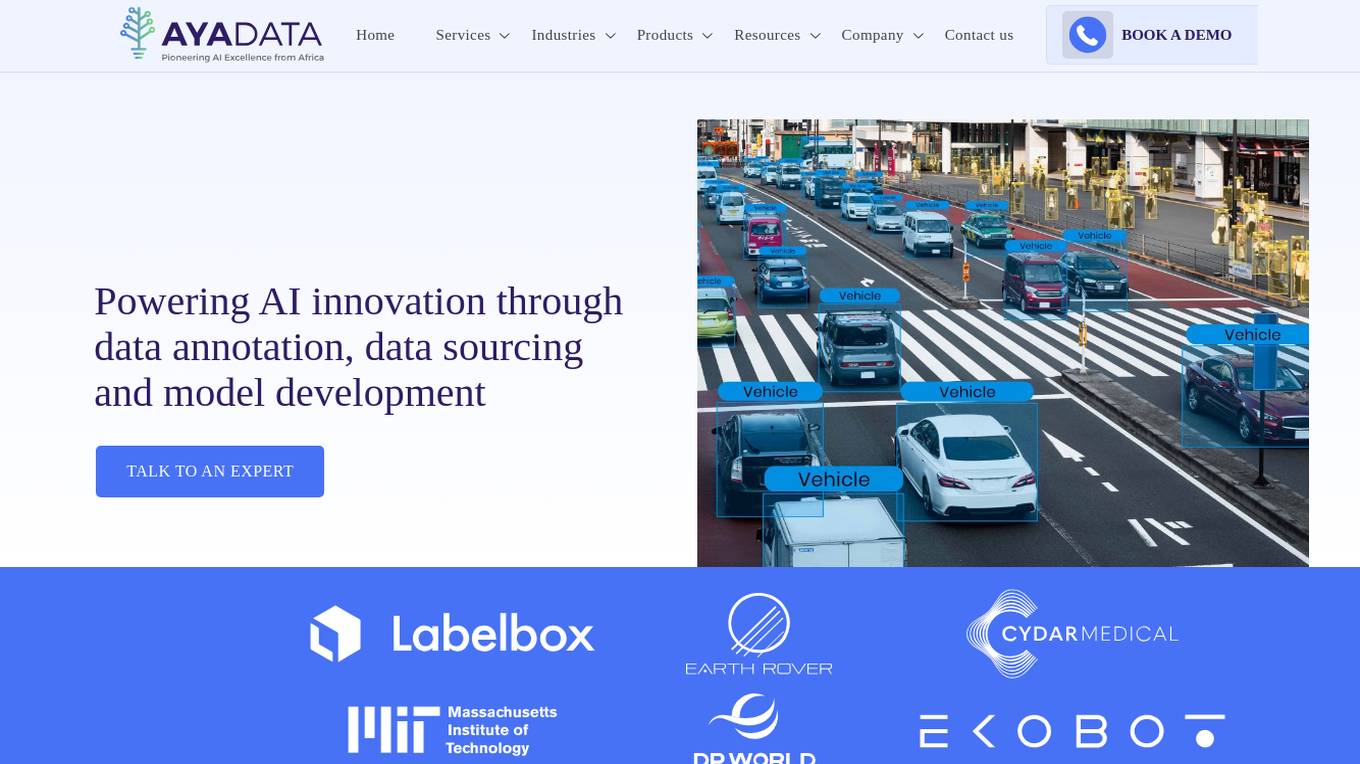

Aya Data

Aya Data is an AI tool that offers services such as data annotation, computer vision, natural language annotation, 3D annotation, AI data acquisition, and AI consulting. They provide cutting-edge tools to transform raw data into training datasets for AI models, deliver bespoke AI solutions for various industries, and offer AI-powered products like AyaGrow for crop management and AyaSpeech for speech-to-speech translation. Aya Data focuses on exceptional accuracy, rapid development cycles, and high performance in real-world scenarios.

AutopilotNext

AutopilotNext is a next-generation software development agency that offers a subscription-based model for unlimited software development. With AutopilotNext, businesses can avoid overspending on costly or unsuitable developers, accelerate development speed, and enhance quality. The agency specializes in various services, including custom AI chatbots, custom websites, custom CRM dashboards, rapid MVP development, workflow automation, software maintenance, and landing page development. AutopilotNext's approach to Rapid MVP Development ensures that businesses can swiftly bring their concepts to market and gather critical insights. The agency also offers Chrome extension development services.

Snorkel AI

Snorkel AI is a data-centric AI application designed for enterprise use. It offers tools and platforms to programmatically label and curate data, accelerate AI development, and build high-quality generative AI applications. The application aims to help users develop AI models 100x faster by leveraging programmatic data operations and domain knowledge. Snorkel AI is known for its expertise in computer vision, data labeling, generative AI, and enterprise AI solutions. It provides resources, case studies, and research papers to support users in their AI development journey.

ModelOp

ModelOp is the leading AI Governance software for enterprises, providing a single source of truth for all AI systems, automated process workflows, real-time insights, and integrations to extend the value of existing technology investments. It helps organizations safeguard AI initiatives without stifling innovation, ensuring compliance, accelerating innovation, and improving key performance indicators. ModelOp supports generative AI, Large Language Models (LLMs), in-house, third-party vendor, and embedded systems. The software enables visibility, accountability, risk tiering, systemic tracking, enforceable controls, workflow automation, reporting, and rapid establishment of AI governance.

Sarvam AI

Sarvam AI is an AI application focused on leading transformative research in AI to develop, deploy, and distribute Generative AI applications in India. The platform aims to build efficient large language models for India's diverse linguistic culture and enable new GenAI applications through bespoke enterprise models. Sarvam AI is also developing an enterprise-grade platform for developing and evaluating GenAI apps, while contributing to open-source models and datasets to accelerate AI innovation.

OneSky Localization Agent

OneSky Localization Agent (OLA) is an AI-powered platform designed to streamline the localization process for mobile apps, games, websites, and software products. OLA leverages multiple Large Language Models (LLMs) and a collaborative translation approach by a team of AI agents to deliver high-quality, accurate translations. It offers post-editing solutions, real-time progress tracking, and seamless integration into development workflows. With a focus on precision-engineered AI translations and human touch, OLA aims to provide a smarter way for global growth through efficient localization.

Zapata AI

Zapata AI is an Industrial Generative AI application that empowers enterprises to revolutionize their industry by building and deploying cutting-edge AI applications. It specializes in tackling complex business challenges with precision using quantum techniques and advanced computing technologies. The platform offers solutions for various industries, accelerates quantum research, and provides expert perspectives on Generative AI and quantum computing.

iGenius

iGenius is an AI company specializing in providing AI solutions for regulated industries. They offer a range of products including Crystal AI Agent for Decision Intelligence and Unicorn Tailored AI for businesses. iGenius focuses on developing language models and supercomputers to meet the needs of mission-critical use cases requiring maximum data security, reliability, and accuracy. The company collaborates with industry leaders to accelerate the development and deployment of AI applications that comply with regulatory requirements and align with local languages and culture.

Innovation Acceleration

Innovation Acceleration is an AI-powered platform that empowers organizations to unlock their creative potential through the integration of advanced AI technologies and structured innovation frameworks. The platform offers a systematic and repeatable approach to creative thinking using Systematic Inventive Thinking (SIT) and Natural Language Processing (NLP) tools such as Large Language Models (LLMs) and generative AI (GenAI). Innovation Acceleration aims to accelerate the innovation process by guiding users through creating customized, industry-leading products, processes, strategies, and marketing innovations.

Toloka AI

Toloka AI is a data labeling platform that empowers AI development by combining human insight with machine learning models. It offers adaptive AutoML, human-in-the-loop workflows, large language models, and automated data labeling. The platform supports various AI solutions with human input, such as e-commerce services, content moderation, computer vision, and NLP. Toloka AI aims to accelerate machine learning processes by providing high-quality human-labeled data and leveraging the power of the crowd.

Koncile

Koncile is an AI-powered OCR solution that automates data extraction from various documents. It combines advanced OCR technology with large language models to transform unstructured data into structured information. Koncile can extract data from invoices, accounting documents, identity documents, and more, offering features like categorization, enrichment, and database integration. The tool is designed to streamline document management processes and accelerate data processing. Koncile is suitable for businesses of all sizes, providing flexible subscription plans and enterprise solutions tailored to specific needs.

Cloobot X

Cloobot X is a Gen-AI-powered implementation studio that accelerates the deployment of enterprise applications with fewer resources. It leverages natural language processing to model workflow automation, deliver sandbox previews, configure workflows, extend functionalities, and manage versioning & changes. The platform aims to streamline enterprise application deployments, making them simple, swift, and efficient for all stakeholders.

Fifi.ai

Fifi.ai is a managed AI cloud platform that provides users with the infrastructure and tools to deploy and run AI models. The platform is designed to be easy to use, with a focus on plug-and-play functionality. Fifi.ai also offers a range of customization and fine-tuning options, allowing users to tailor the platform to their specific needs. The platform is supported by a team of experts who can provide assistance with onboarding, API integration, and troubleshooting.

1 - Open Source AI Tools

flashinfer

FlashInfer is a library for Large Language Models that provides high-performance implementations of LLM GPU kernels such as FlashAttention, PagedAttention, and LoRA. FlashInfer focuses on LLM serving and inference, and delivers state-of-the-art performance across diverse scenarios.
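To make the paged-attention idea concrete, here is a minimal pure-Python sketch of single-query attention over a key/value cache stored in fixed-size pages. It is not FlashInfer's API and says nothing about how its GPU kernels are written. The names `PAGE_SIZE`, `gather_paged`, `attention`, and `paged_attention` are hypothetical and chosen only for illustration.

```python
import math

PAGE_SIZE = 2  # tokens per page (hypothetical; chosen small for readability)


def gather_paged(pages, page_table, seq_len):
    """Collect the first seq_len vectors of a sequence from non-contiguous pages.

    page_table[i] is the physical page holding logical tokens
    i * PAGE_SIZE .. (i + 1) * PAGE_SIZE - 1.
    """
    out = []
    for pos in range(seq_len):
        page = pages[page_table[pos // PAGE_SIZE]]
        out.append(page[pos % PAGE_SIZE])
    return out


def attention(query, keys, values):
    """Single-query scaled dot-product attention with a stable softmax."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    top = max(scores)  # subtract the max before exp to avoid overflow
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [
        sum(w * v[d] for w, v in zip(weights, values)) / total
        for d in range(dim)
    ]


def paged_attention(query, key_pages, value_pages, page_table, seq_len):
    """Attention over a paged KV cache: gather by page table, then attend."""
    keys = gather_paged(key_pages, page_table, seq_len)
    values = gather_paged(value_pages, page_table, seq_len)
    return attention(query, keys, values)


# A 3-token sequence whose first two tokens live in physical page 1
# and whose third token lives in physical page 0 (second slot is padding).
key_pages = [[[1.0, 1.0], [0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]]]
value_pages = [[[5.0, 6.0], [0.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]]
page_table = [1, 0]
result = paged_attention([1.0, 0.5], key_pages, value_pages, page_table, 3)
```

The sketch gathers keys and values into a contiguous list before attending, which is the simplest way to show the page-table indirection. A production kernel would presumably read pages in place rather than copy them.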

7 - OpenAI GPTs

Tourist Language Accelerator

Accelerates the learning of key phrases and cultural norms for travelers in various languages.

Material Tailwind GPT

Accelerate web app development with Material Tailwind GPT's components - 10x faster.

Digital Entrepreneurship Accelerator Coach

The Go-To Coach for Aspiring Digital Entrepreneurs, Innovators, & Startups. Learn More at UnderdogInnovationInc.com.

24 Hour Startup Accelerator

Niche-focused startup guide, humorous, strategic, simplifying ideas.

Backloger.ai - Product MVP Accelerator

Drop in any requirements or any text; I'll help you create an MVP with insights.

Digital Boost Lab

A guide for developing university-focused digital startup accelerator programs.