Best AI tools for Accelerate Deployment

20 - AI Tool Sites

FuriosaAI

FuriosaAI is an AI application that offers RNGD hardware for LLM and multimodal workloads, as well as WARBOY for computer vision. It provides a comprehensive developer experience through the Furiosa SDK, Model Zoo, and Dev Support. The application focuses on efficient AI inference, high-performance LLM and multimodal deployment capabilities, and sustainable mass adoption of AI. FuriosaAI features the Tensor Contraction Processor architecture, software for streamlined LLM deployment, and robust ecosystem support. It aims to deliver powerful and efficient deep learning acceleration while ensuring future-proof programmability and efficiency.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

Valohai

Valohai is a scalable MLOps platform that enables Continuous Integration/Continuous Deployment (CI/CD) for machine learning and pipeline automation on-premises and across various cloud environments. It helps streamline complex machine learning workflows by offering framework-agnostic ML capabilities, automatic versioning with complete lineage of ML experiments, hybrid and multi-cloud support, scalability and performance optimization, streamlined collaboration among data scientists, IT, and business units, and smart orchestration of ML workloads on any infrastructure. Valohai also provides a knowledge repository for storing and sharing the entire model lifecycle, facilitating cross-functional collaboration, and allowing developers to build with total freedom using any libraries or frameworks.

Attri

Attri is a leading Generative AI application specialized in custom AI solutions for enterprises. It harnesses the power of Generative AI and Foundation Models to drive innovation and accelerate digital transformation. Attri offers a range of AI solutions for various industries, focusing on responsible AI deployment and ethical innovation.

Pandio

Pandio is an AI orchestration platform that simplifies data pipelines to harness the power of AI. It offers cloud-native managed solutions to connect systems, automate data movement, and accelerate machine learning model deployment. Pandio's AI-driven architecture orchestrates models, data, and ML tools to drive AI automation and data-driven decisions faster. The platform is designed for price-performance, offering data movement at high speed and low cost, with near-infinite scalability and compatibility with any data, tools, or cloud environment.

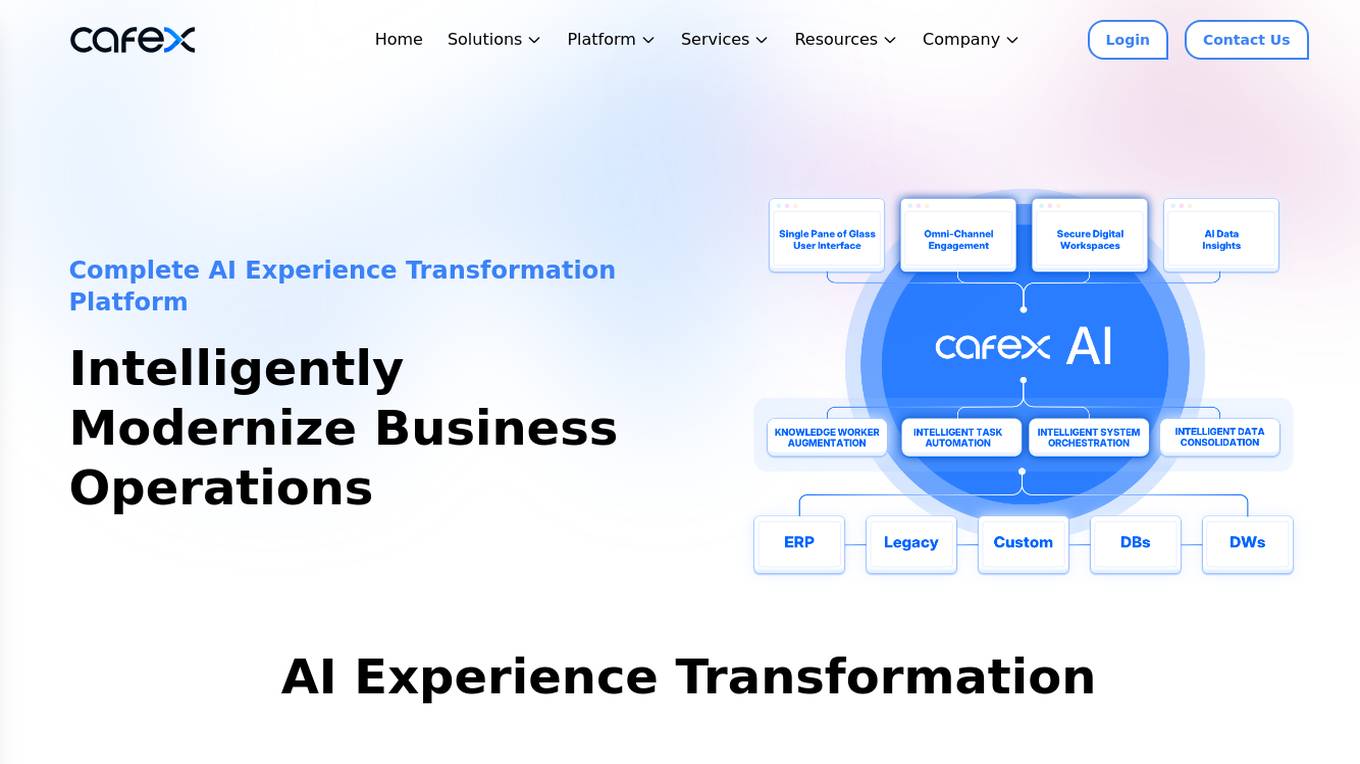

CafeX

CafeX is an AI-powered platform that offers AI Experience Transformation solutions for businesses. It helps in modernizing business operations, integrating AI and automation to simplify complex challenges, and enhancing customer interactions with plug-and-play solutions. CafeX enables organizations in regulated industries to optimize digital engagement with employees, customers, and partners by leveraging existing investments and unifying fragmented solutions. The platform provides unified intelligence, seamless integration, developer empowerment, efficient deployment, and audit & compliance functionalities.

SiMa.ai

SiMa.ai is an AI application that offers high-performance, power-efficient, and scalable edge machine learning solutions for various industries such as automotive, industrial, healthcare, drones, and government sectors. The platform provides MLSoC™ boards, DevKit 2.0, Palette Software 1.2, and Edgematic™ for developers to accelerate complete applications and deploy AI-enabled solutions. SiMa.ai's Machine Learning System on Chip (MLSoC) enables full-pipeline implementations of real-world ML solutions, making it a trusted platform for edge AI development.

SuperAnnotate

SuperAnnotate is an AI data platform that simplifies and accelerates model-building by unifying the AI pipeline. It enables users to create, curate, and evaluate datasets efficiently, leading to the development of better models faster. The platform offers features like connecting any data source, building customizable UIs, creating high-quality datasets, evaluating models, and deploying models seamlessly. SuperAnnotate ensures global security and privacy measures for data protection.

Zevo.ai

Zevo.ai is an AI-powered code visualization tool designed to accelerate code comprehension, deployment, and observation. It offers dynamic code analysis, contextual code understanding, and automatic code mapping to help developers streamline shipping, refactoring, and onboarding processes for both legacy and existing applications. By leveraging AI models, Zevo.ai provides deeper insights into code, logs, and cloud infrastructure, enabling developers to gain a better understanding of their codebase.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables data scientists and IT leaders to build, deploy, and manage AI models at scale. It provides a unified platform for accessing data, tools, compute, models, and projects across any environment. Domino also fosters collaboration, establishes best practices, and tracks models in production to accelerate and scale AI while ensuring governance and reducing costs.

Striveworks

Striveworks is an AI application that offers a Machine Learning Operations Platform designed to help organizations build, deploy, maintain, monitor, and audit machine learning models efficiently. It provides features such as rapid model deployment, data and model auditability, low-code interface, flexible deployment options, and operationalizing AI data science with real returns. Striveworks aims to accelerate the ML lifecycle, save time and money in model creation, and enable non-experts to leverage AI for data-driven decisions.

Code99

Code99 is an AI-powered platform designed to speed up the development process by providing instant boilerplate code generation. It allows users to customize their tech stack, streamline development, and launch projects faster. It is ideal for startups, developers, and IT agencies looking to accelerate project timelines and improve productivity. The platform offers features such as authentication, database support, RESTful APIs, data validation, Swagger API documentation, email integration, state management, modern UI, clean code generation, and more. Users can generate production-ready apps in minutes, transform a database schema into React or Nest.js apps, and unleash creativity through effortless editing and experimentation. Code99 aims to save time, avoid repetitive tasks, and help users focus on building their business effectively.

Goptimise

Goptimise is a no-code AI-powered scalable backend builder that helps developers craft scalable, seamless, powerful, and intuitive backend solutions. It offers a solid foundation with robust and scalable infrastructure, including dedicated infrastructure, security, and scalability. Goptimise simplifies software rollouts with one-click deployment, automating the process and amplifying productivity. It also provides smart API suggestions, leveraging AI algorithms to offer intelligent recommendations for API design and accelerating development with automated recommendations tailored to each project. Goptimise's intuitive visual interface and effortless integration make it easy to use, and its customizable workspaces allow for dynamic data management and a personalized development experience.

XenonStack

XenonStack is an AI application that offers a reasoning foundry for agentic enterprises. It provides a unified reasoning foundation that enables seamless orchestration, analytics, infrastructure, and trust across intelligent ecosystems. The platform includes various AI tools such as Akira AI for reasoning and agent orchestration, ElixirData for agentic analytics intelligence, NexaStack for agentic infrastructure automation, MetaSecure for trust, compliance, and defense, and Neural AI for agentic intelligence & autonomous innovation. It also offers pre-built autonomous agents for domain-specific intelligence, seamless integrations, and governed enterprise deployment.

DDN A³I

DDN A³I is an AI storage platform that maximizes business differentiation and market leadership through data utilization, AI, and advanced analytics. It offers comprehensive enterprise features, easy deployment and management, predictable scaling, data protection, and high performance. DDN A³I enables organizations to accelerate insights, reduce costs, and optimize GPU productivity for faster results.

NetMind

NetMind is an AI tool that offers a Model Library, Enterprise AI Solutions, and AI Consulting services. It provides cutting-edge inference capabilities, model APIs for various data types, and GPU clusters for accelerated performance. The platform allows rapid deployment of models with flexible scaling options. NetMind caters to a wide range of industries, offering solutions that enhance accuracy, cut costs, and accelerate decision-making processes.

Serenity Star

Serenity Star is a Generative AI deployment service that offers Models As A Service to help businesses increase productivity and design tailored solutions. The platform provides access to over 100 LLMs, an ecosystem with agents, co-pilots, and plugins, and features low code and no code solutions for quick market release. Serenity Star aims to simplify the implementation of Generative AI in enterprises by offering tools, support, and resources for process optimization, innovation, revenue maximization, and informed decision-making.

Context64AI

Context64AI is an AI application that specializes in transforming industries with data-driven solutions. It provides a unified intelligence platform that connects data, workflows, and knowledge to deliver trusted, actionable outcomes. The application focuses on providing comprehensive business context to AI models, ensuring better outcomes and faster decision-making. Context64AI offers various intelligence solutions such as Product Intelligence Hub, Compliance Intelligence, Scenario Intelligence, Digital Twin Intelligence, Sustainability Intelligence, and Supply Network Intelligence. It also features an open architecture, intelligent orchestration, and a unified context platform for enterprise deployment.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes through self-optimizing, lossless compression. It works seamlessly across various data platforms like Iceberg, Delta, Trino, Spark, Snowflake, BigQuery, and Databricks, offering significant cost savings and improved query performance. Granica is trusted by data and AI leaders globally for its ability to reduce data bloat, speed up queries, and enhance data lake optimization. The tool is built for structured AI, providing transparent deployment, continuous adaptation, hands-off orchestration, and trusted controls for data security and compliance.

Embedl

Embedl is an AI tool that specializes in developing advanced solutions for efficient AI deployment in embedded systems. With a focus on deep learning optimization, Embedl offers a cost-effective solution that reduces energy consumption and accelerates product development cycles. The platform caters to industries such as automotive, aerospace, and IoT, providing cutting-edge AI products that drive innovation and competitive advantage.

2 - Open Source AI Tools

edgeai

Embedded inference of deep learning models is challenging because of its high compute requirements. TI’s Edge AI software product helps optimize and accelerate inference on TI’s embedded devices. It supports heterogeneous execution of DNNs across Cortex-A based MPUs, TI’s latest generation C7x DSP, and DNN accelerator (MMA). The solution simplifies the product life cycle of DNN development and deployment by providing a rich set of tools and optimized libraries.

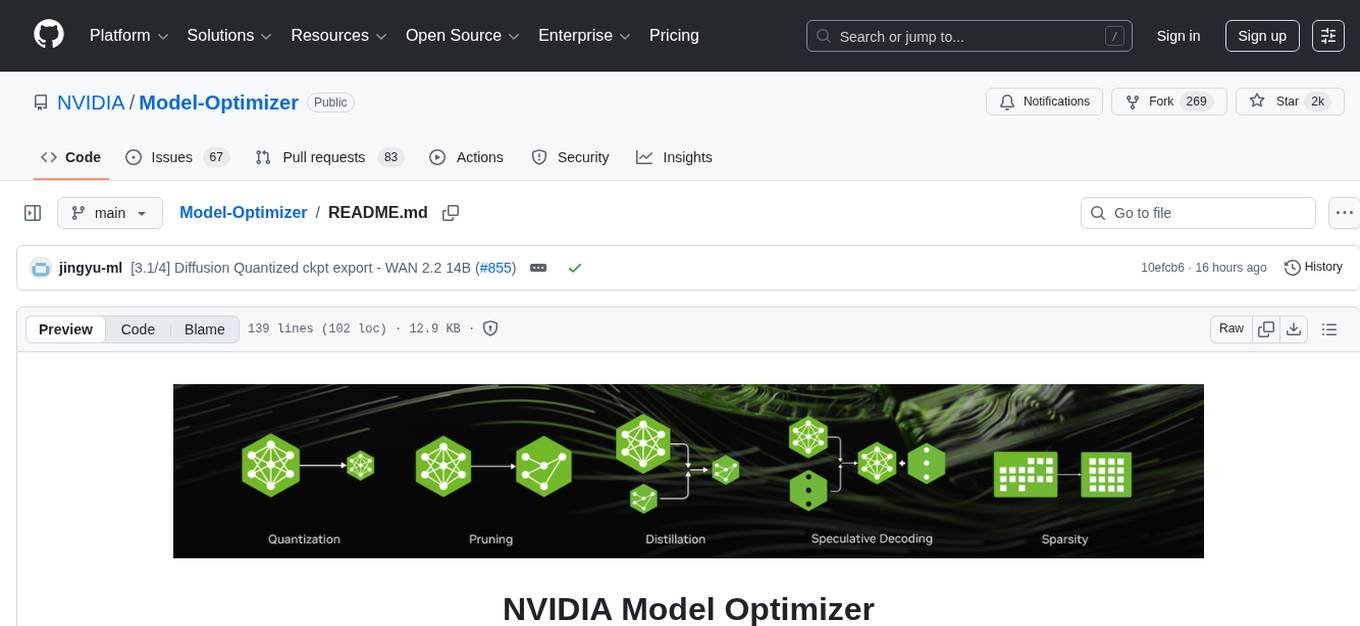

Model-Optimizer

NVIDIA Model Optimizer is a library that offers state-of-the-art model optimization techniques like quantization, distillation, pruning, speculative decoding, and sparsity to accelerate models. It accepts Hugging Face, PyTorch, or ONNX models as input, provides Python APIs for easy composition of optimization techniques, and exports optimized quantized checkpoints. It is integrated with NVIDIA Megatron-Bridge, Megatron-LM, and Hugging Face Accelerate for inference optimization techniques that require training. The generated quantized checkpoint is ready for deployment in downstream inference frameworks like SGLang, TensorRT-LLM, TensorRT, or vLLM.

7 - OpenAI GPTs

Material Tailwind GPT

Accelerate web app development with Material Tailwind GPT's components - 10x faster.

Tourist Language Accelerator

Accelerates the learning of key phrases and cultural norms for travelers in various languages.

Digital Entrepreneurship Accelerator Coach

The Go-To Coach for Aspiring Digital Entrepreneurs, Innovators, & Startups. Learn More at UnderdogInnovationInc.com.

24 Hour Startup Accelerator

Niche-focused startup guide, humorous, strategic, simplifying ideas.

Backloger.ai - Product MVP Accelerator

Drop in any requirements or any text; I'll help you create an MVP with insights.

Digital Boost Lab

A guide for developing university-focused digital startup accelerator programs.