Best AI tools for: Protect Against GenAI Risks

20 - AI Tool Sites

Prompt Security

Prompt Security is a platform that secures all uses of Generative AI in the organization: from tools used by your employees to your customer-facing apps.

Lakera

Lakera describes itself as the world's most advanced AI security platform for safeguarding GenAI applications against security threats. It provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks. Lakera is aimed at security teams, product teams, and LLM builders who need to secure their AI applications.

Lakera

Lakera is an AI security platform designed to protect organizations from AI threats. It offers prompt injection detection, unsafe content identification, PII and data loss prevention, protection against data poisoning, and safeguards against insecure LLM plugin design. Lakera positions itself as helping set global AI security standards and reports being trusted by leading enterprises, foundation model providers, and startups. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks.

Blackbird.AI

Blackbird.AI is a narrative and risk intelligence platform that helps organizations identify and protect against narrative attacks created by misinformation and disinformation. The platform offers a range of solutions tailored to different industries and roles, enabling users to analyze threats in text, images, and memes across various sources such as social media, news, and the dark web. By providing context and clarity for strategic decision-making, Blackbird.AI empowers organizations to proactively manage and mitigate the impact of narrative attacks on their reputation and financial stability.

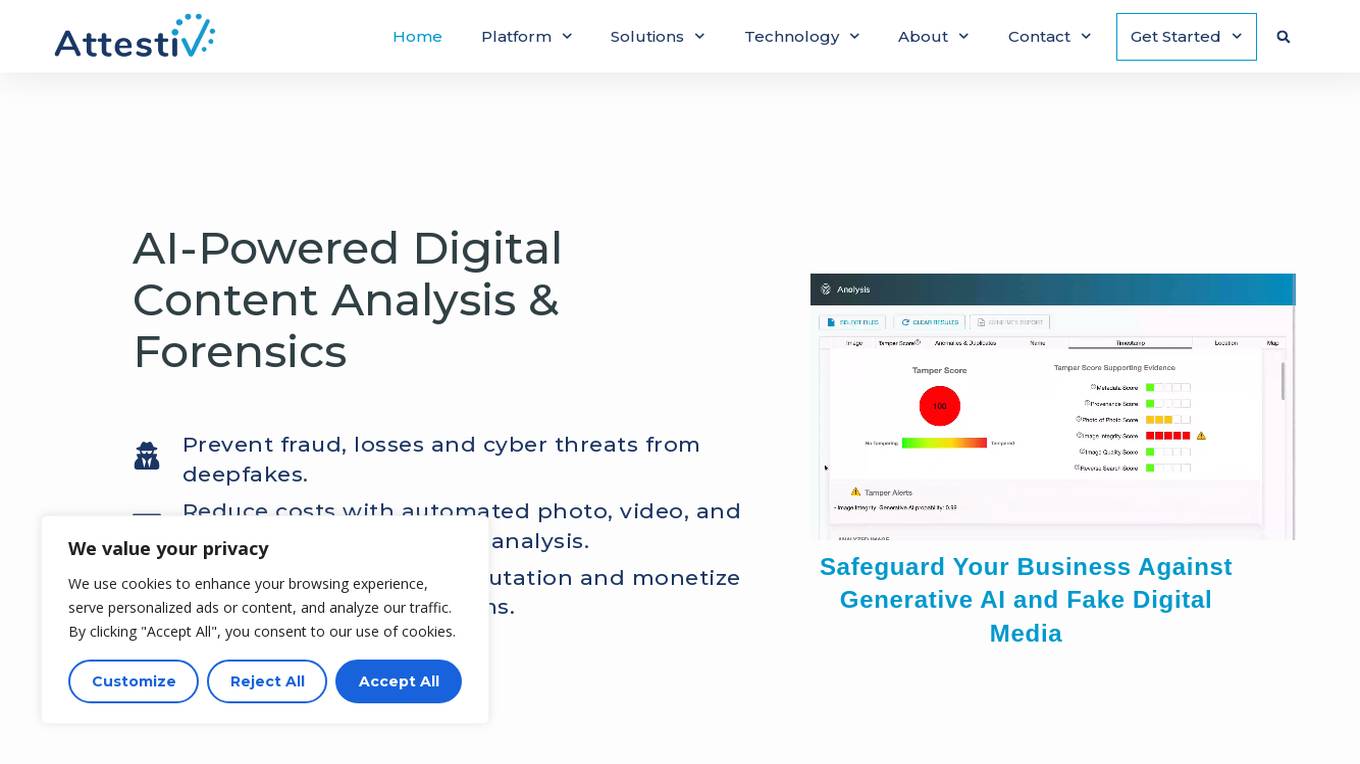

Attestiv

Attestiv is an AI-powered digital content analysis and forensics platform that helps prevent fraud, losses, and cyber threats from deepfakes. It reduces costs by automating the inspection and analysis of photos, videos, and documents, helps protect company reputation, and lets businesses monetize trust in secure systems. Attestiv's technology validates the authenticity of digital assets, guarding against altered photos, videos, and documents that are increasingly easy to create but difficult to detect. The platform uses patented AI technology to verify uploaded media and offers sector-agnostic solutions for various industries.

Hiya

Hiya is an AI-powered caller ID, call blocker, and protection application that enhances voice communication experiences. It helps users identify incoming calls, block spam and fraud, and protect against AI voice fraud and scams. Hiya offers solutions for businesses, carriers, and consumers, with features like branded caller ID, spam detection, call filtering, and more. With a global reach and a user base of over 450 million, Hiya aims to bring trust, identity, and intelligence back to phone calls.

Breacher.ai

Breacher.ai is an AI-powered cybersecurity solution that specializes in deepfake detection and protection. It offers a range of services to help organizations guard against deepfake attacks, including deepfake phishing simulations, awareness training, micro-curriculum, educational videos, and certification. The platform combines advanced AI technology with expert knowledge to detect, educate, and protect against deepfake threats, ensuring the security of employees, assets, and reputation. Breacher.ai's fully managed service and seamless integration with existing security measures provide a comprehensive defense strategy against deepfake attacks.

Hive Defender

Hive Defender is a machine-learning-powered DNS security service that protects against a wide range of cyber threats, including cryptojacking, malware, DNS poisoning, phishing, typosquatting, ransomware, zero-day threats, and DNS tunneling. Rather than watching only the browser, Hive Defender monitors both browser traffic and all of the machine's network activity.

CrowdStrike

CrowdStrike is a leading cybersecurity platform that uses artificial intelligence (AI) to protect businesses from cyber threats. It takes a unified approach to security, combining endpoint security, identity protection, cloud security, and threat intelligence in a single solution. Its AI-powered technology detects and responds to threats in real time, helping businesses keep pace with evolving attacks.

Robust Intelligence

Robust Intelligence is an end-to-end solution for securing AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

Sider.ai

Sider.ai is an AI tool designed to verify the security of user connections. It ensures a safe browsing experience by reviewing and authenticating human users before granting access. The tool performs security checks in real-time, providing a seamless and secure online environment for users. Sider.ai leverages AI technology to enhance performance and protect against potential threats, offering peace of mind to individuals and businesses alike.

Cloudflare

This listing points to a Cloudflare error page rather than a product page. Cloudflare is a popular content delivery network and security service that helps secure and optimize websites. The page explains error code 1014, which occurs when a CNAME record points to a domain in a different Cloudflare account, violating Cloudflare's security policy, and describes why the error happens and how users can resolve it.

Cloudflare

Cloudflare is a web infrastructure and website security company that provides content delivery network (CDN) services, DDoS mitigation, Internet security, and distributed DNS services. It also offers developer and AI products for building, securing, and delivering applications globally, including Workers, Pages, Images, Stream, AutoRAG, Vectorize, AI Gateway, and AI Playground.

RTB House

RTB House is a global leader in online ad campaigns, offering a range of AI-powered solutions to help businesses drive sales and engage with customers. Their technology leverages deep learning to optimize ad campaigns, providing personalized retargeting, branding, and fraud protection. RTB House works with agencies and clients across various industries, including fashion, electronics, travel, and multi-category retail.

Deepfake Detector

Deepfake Detector is an AI tool designed to identify deepfakes in audio and video files. It offers features such as background noise and music removal, audio and video file analysis, and browser extension integration. The tool helps individuals and businesses protect themselves against deepfake scams by providing accurate detection and filtering of AI-generated content. With a focus on authenticity and reliability, Deepfake Detector aims to prevent financial losses and fraudulent activities caused by deepfake technology.

AI Scam Detective

AI Scam Detective is an AI tool designed to help users detect and prevent online scams. Users can input messages or conversations into the tool, which then provides a scam likelihood score from 1 to 10. The tool aims to empower users to identify potential scams and protect themselves from fraudulent activities. Created by Sam Meehan.

Sentitrac

Sentitrac.com is a website that focuses on security verification for user connections. It ensures that users are human by conducting security checks before allowing access to the site. The platform utilizes technologies like JavaScript and cookies to enhance security measures. Additionally, it leverages Cloudflare for performance optimization and protection against cyber threats.

www.atom.com

The website www.atom.com is a platform that provides security verification services to ensure a safe and secure connection for users. It verifies the authenticity of users by checking for human interaction, enabling JavaScript and cookies, and reviewing the security of connections. The platform is powered by Cloudflare to enhance performance and security measures.

Turing.school

Turing.school is a website that focuses on security verification for users. It ensures the safety of connections by reviewing security measures before allowing access. Users may encounter a brief waiting period during the verification process, which involves enabling JavaScript and cookies. The site is powered by Cloudflare for enhanced performance and security.

Tokenomist.ai

Tokenomist.ai is an AI-powered security service website that helps protect against online attacks by enabling cookies and blocking malicious activities. It uses advanced algorithms to detect and prevent security threats, ensuring a safe browsing experience for users. The platform is designed to safeguard websites from potential risks and vulnerabilities, offering a reliable security solution for online businesses and individuals.

0 - Open Source AI Tools

20 - OpenAI GPTs

fox8 botnet paper

A helpful guide for understanding the paper "Anatomy of an AI-powered malicious social botnet"

T71 Russian Cyber Samovar

Analyzes and updates on cyber-related Russian APTs, cognitive warfare, disinformation, and other infoops.

CyberNews GPT

CyberNews GPT is an assistant that provides the latest security news about cyber threats, hacks and breaches, malware, zero-day vulnerabilities, phishing, scams, and more.

Personal Cryptoasset Security Wizard

An easy to understand wizard that guides you through questions about how to protect, back up and inherit essential digital information and assets such as crypto seed phrases, private keys, digital art, wallets, IDs, health and insurance information for you and your family.

Cute Little Time Travellers, a text adventure game

Protect your cute little timeline. Let me entertain you with this interactive repair-the-timeline game, lovingly illustrated in the style of ultra-cute little 3D kawaii dioramas.

Litigation Advisor

Advises on litigation strategies to protect the organization's legal rights.

Free Antivirus Software 2024

Free Antivirus Software: reviews of antivirus software and the best free offers to protect you.

GPT Auth™

This is a demonstration of GPT Auth™, an authentication system designed to protect your customized GPT.

Prompt Injection Detector

A GPT that classifies prompts as valid inputs or injection attempts, with JSON output.

👑 Data Privacy for Insurance Companies 👑

Insurance providers collect and process personal health, financial, and property information, making it crucial to implement comprehensive data protection strategies.

Project Risk Assessment Advisor

Assesses project risks to mitigate potential organizational impacts.

PrivacyGPT

Guides and advises on digital privacy, ranging from the well known to the underground.

Big Idea Assistant

Expert advisor for protecting, sharing, and monetizing Intellectual Digital Assets (IDEAs) using Big Idea Platform.