Best AI tools for "Compare Judges"

20 - AI Tool Sites

Lex Machina

Lex Machina is a Legal Analytics platform that provides insights into the litigation track records of parties across the United States. It offers accurate, transparent analytics data, including exclusive outcome analytics, to help law firms and companies assess cases and craft litigation strategies. The platform combines machine learning with in-house legal experts who compile, clean, and enhance the data, producing insights on courts, judges, lawyers, law firms, and parties.

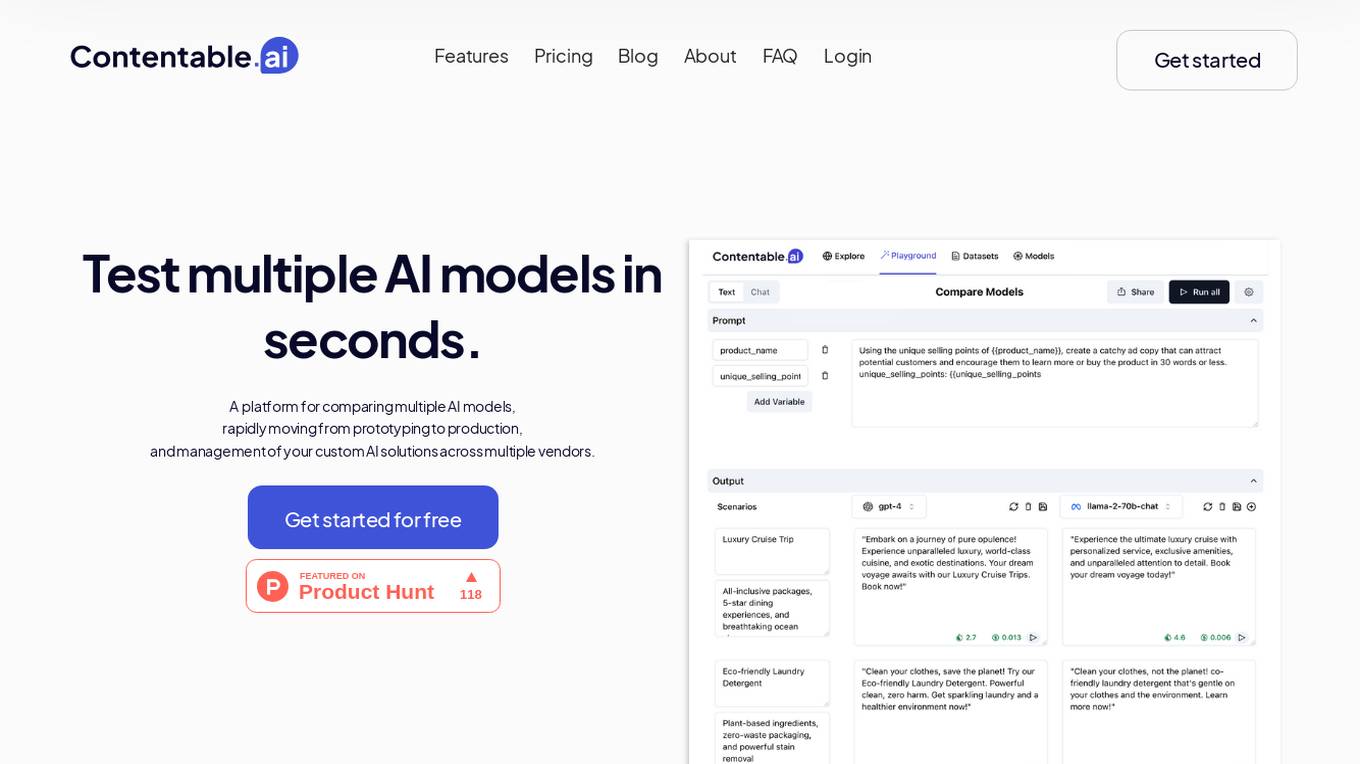

Contentable.ai

Contentable.ai is a platform for comparing multiple AI models, moving quickly from prototyping to production, and managing custom AI solutions across multiple vendors. Users can test multiple AI models in seconds, compare models side by side across top AI providers, collaborate on AI models with their team, design complex AI workflows without coding, and pay as they go.

Sofon

Sofon is a knowledge aggregation and curation platform that gives users personalized insights on the topics they care about. It aggregates and curates knowledge from more than 1,000 articles, podcasts, and books and delivers a personalized stream of ideas. Sofon uses AI to compare ideas from hundreds of people on any question, saving users many hours of manual curation. Users choose the people they want to learn from, and Sofon curates insights across all of their published knowledge. Users can also receive an idealetter: a combination of ideas from all the people they have selected, organized around a common theme and delivered at an interval of their choice.

LLM Clash

LLM Clash is a web-based application for comparing the outputs of different large language models (LLMs) on a given task. Users enter a prompt and select the LLMs they want to compare. The application then displays the outputs side by side so users can weigh each model's strengths and weaknesses.

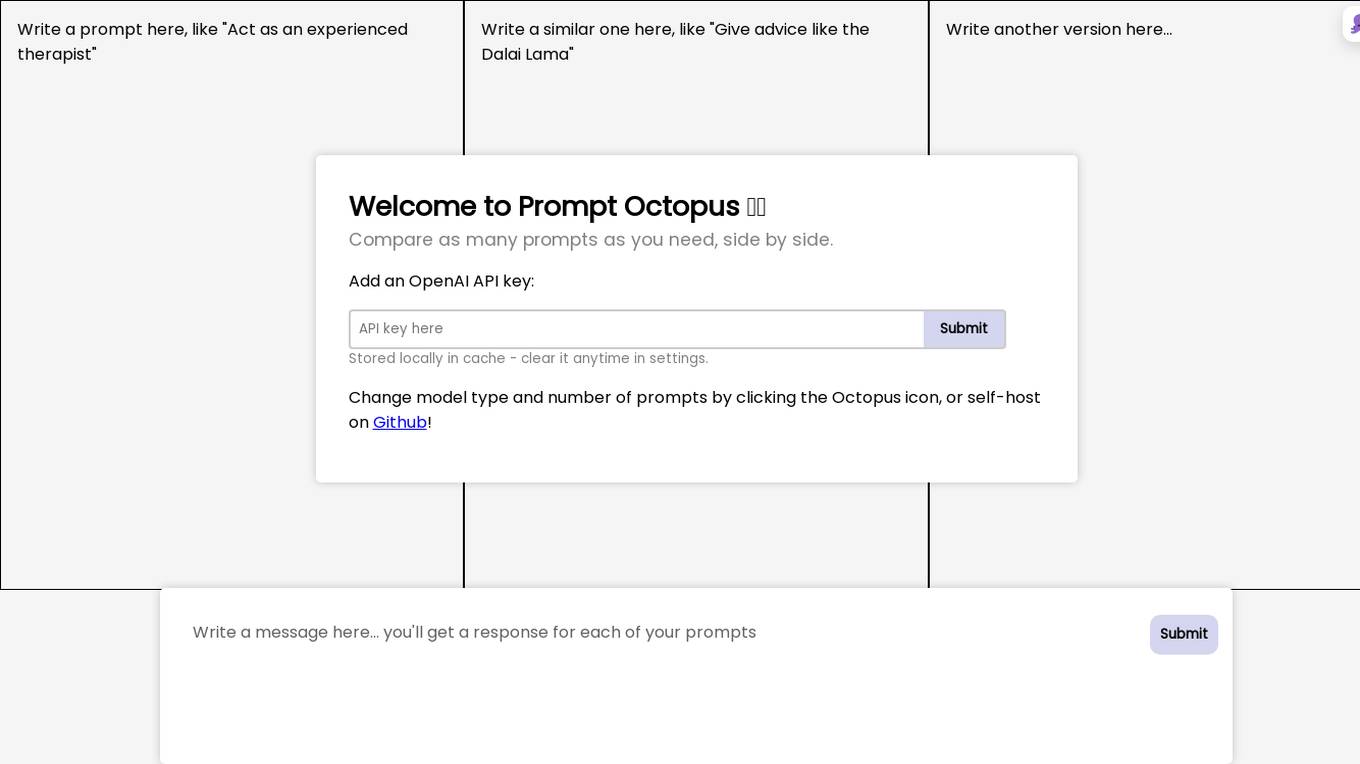

Prompt Octopus

Prompt Octopus is a free tool for comparing multiple prompts side by side. You can add as many prompts as you need and view the responses in real time, which helps when fine-tuning prompts to get the best results from your AI model.

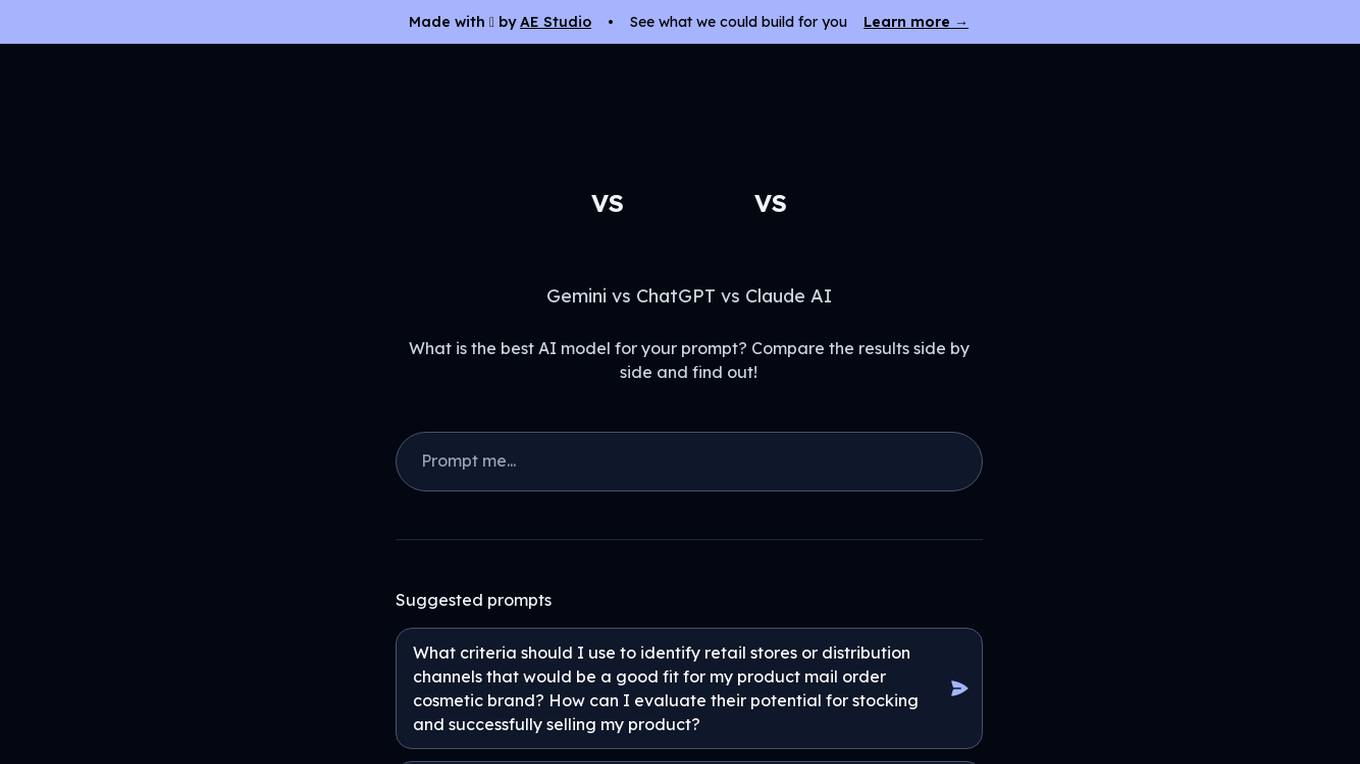

Gemini vs ChatGPT

Gemini is a multimodal AI model developed by Google, and ChatGPT is a large language model developed by OpenAI. Both are designed to understand and generate human language and can be used for a variety of tasks, including question answering, translation, and dialogue generation.

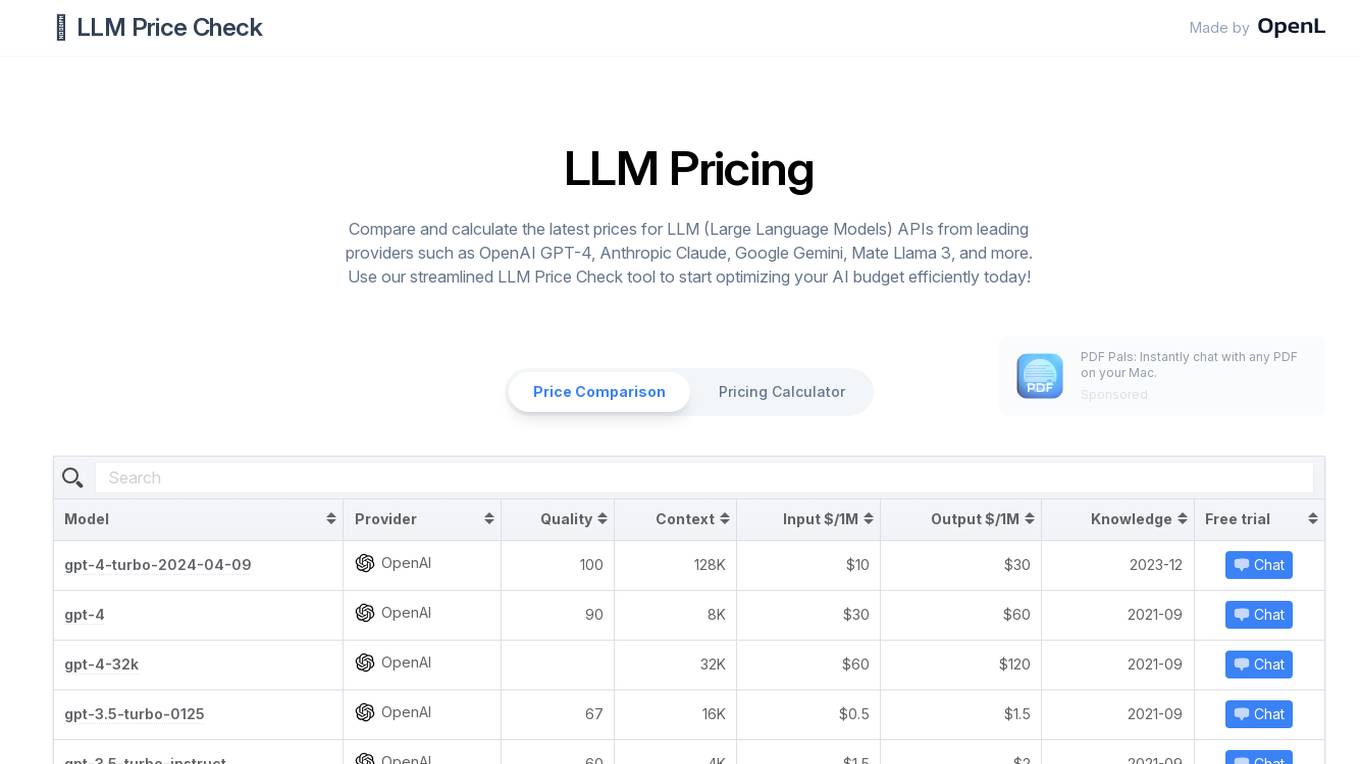

LLM Price Check

LLM Price Check is a tool for comparing and calculating the latest prices of large language model (LLM) APIs from leading providers such as OpenAI, Anthropic, and Google. Users can compare pricing, sort by various parameters, and search for specific models to manage their AI budget. The tool gives an overview of pricing information to help users choose an LLM API provider.

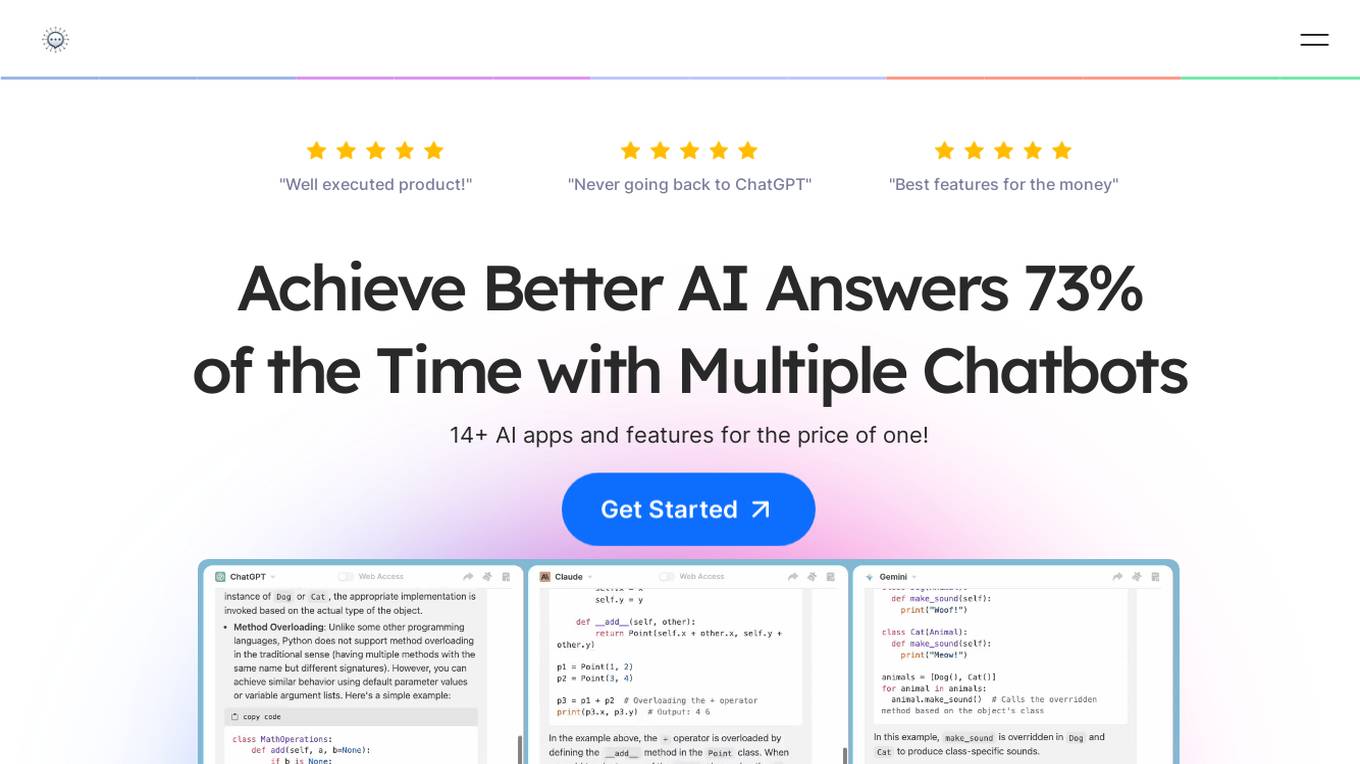

ChatPlayground AI

ChatPlayground AI is a platform for comparing multiple AI chatbots to find the best response. It offers more than 14 AI apps and features, and the platform claims that users get better AI answers 73% of the time. Features include a prompt library, real-time web search, image generation, history recall, document upload and analysis, and multilingual support. It targets developers, data scientists, students, researchers, content creators, writers, and AI enthusiasts, and user testimonials highlight gains in efficiency and creativity.

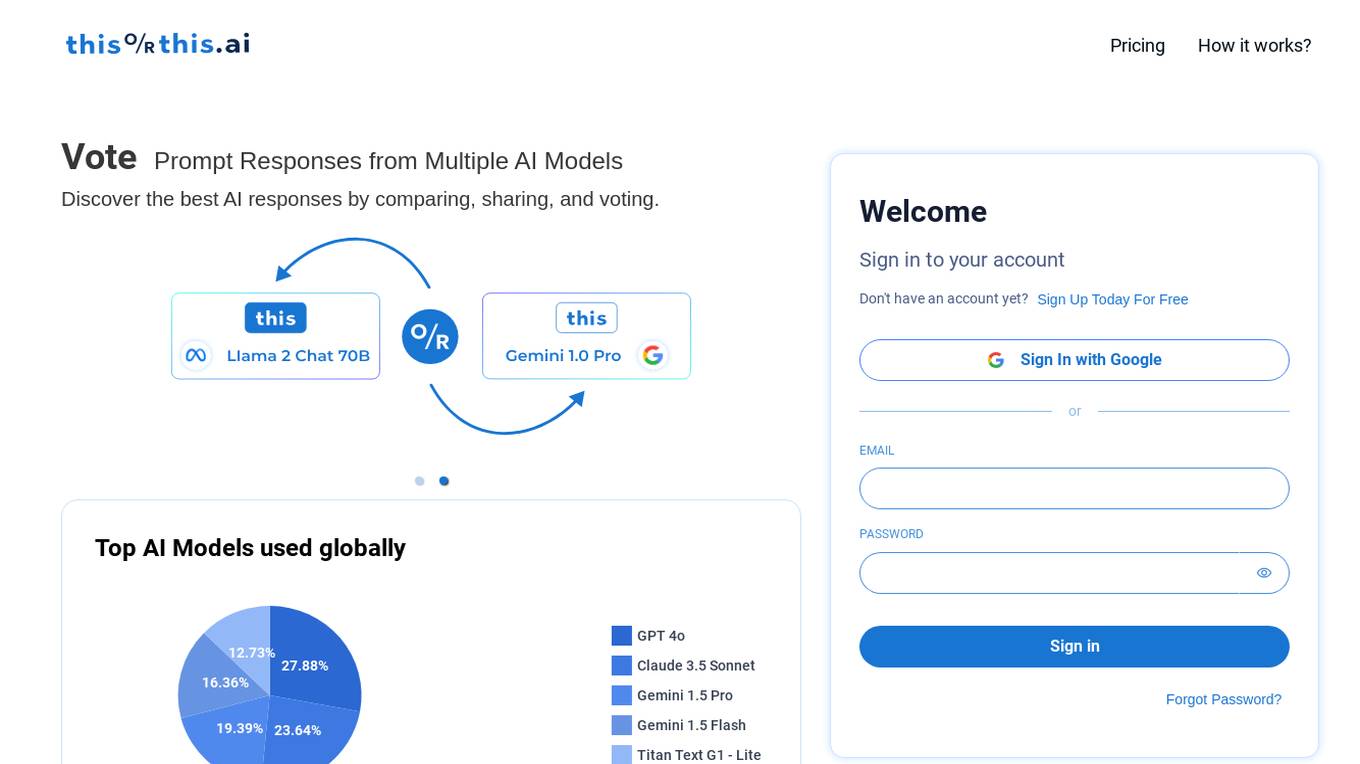

thisorthis.ai

thisorthis.ai is an AI tool for comparing generative AI models and their responses. It helps users analyze and evaluate different models so they can make informed choices. The site requires JavaScript to be enabled.

GetOData

GetOData is an AI-based data extraction tool designed for small-scale scraping. It lets users discover and compare more than 4,000 APIs for various use cases. The tool offers Apify Actors for extracting structured listings from any website and a Chrome Extension for in-browser data extraction. Other features include side-by-side API comparisons and automated scrolling for data collection, making GetOData useful for web scraping and data analysis.

Ai Tool Hunt

Ai Tool Hunt is a directory of free AI tools, software, and websites. It provides a curated list of AI resources available online, helping users find tools for tasks such as content creation, data analysis, image editing, and language learning. Each listing includes a detailed description, user ratings, and a direct link, making the platform a useful starting point for individuals and businesses looking to integrate AI into their workflows.

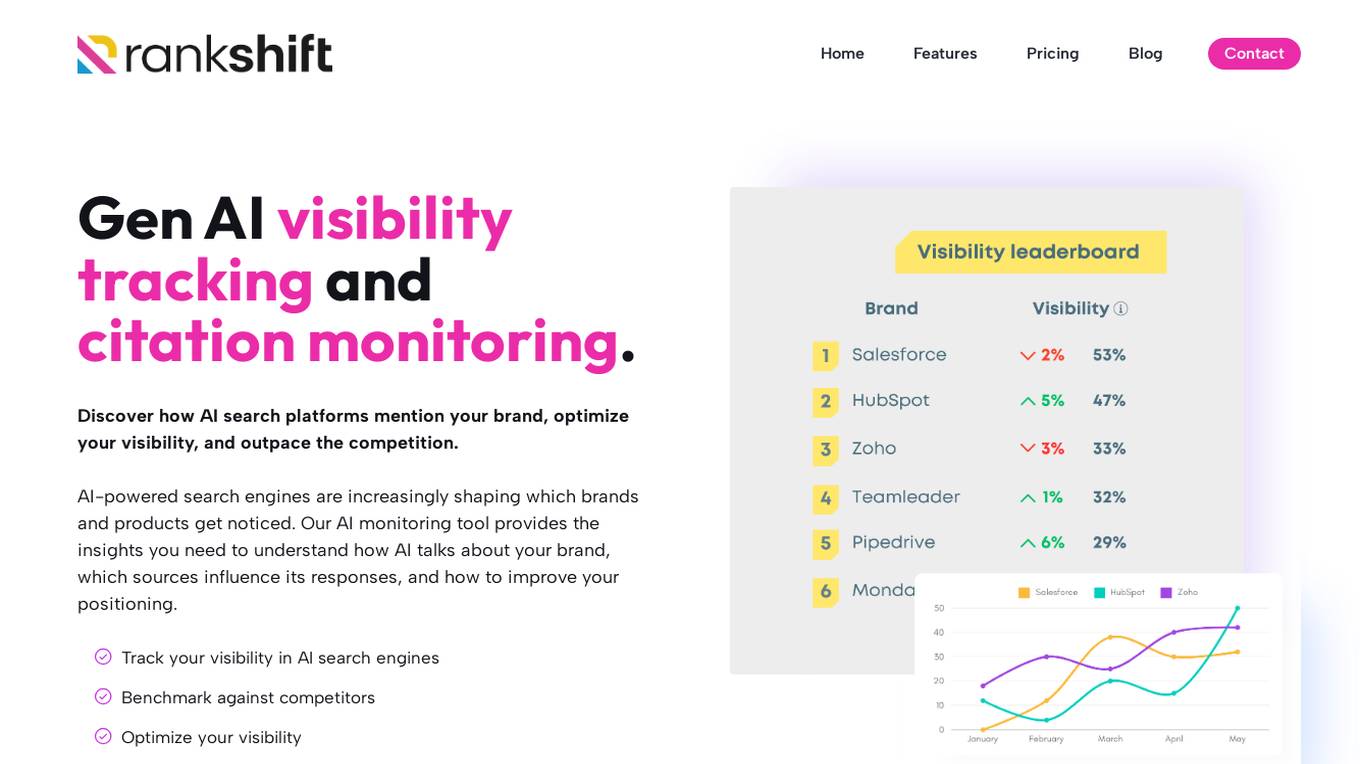

RANKSHIFT

RANKSHIFT is an AI brand visibility tracking tool that helps businesses monitor and optimize their visibility in AI search engines. It shows how AI platforms mention brands, compares a brand's visibility against competitors, and offers data-driven recommendations for improving AI search strategy. The tool tracks brand mentions and identifies the sources that influence AI-generated answers.

Error Detector

The Error Detector website could not be reached when this listing was compiled. It returned an error page stating that the request could not be satisfied because the origin server could not be contacted, along with a timestamp and a request ID. This points to server-side or connectivity problems on the site.

Everypixel

Everypixel.com is a website that provides image analysis services. Users upload images for analysis, which includes rating image quality, detecting objects, and assigning aesthetic scores. The results help users make informed decisions about their images for personal or professional use. The site uses a secure connection check and requires JavaScript and cookies to be enabled.

Insurance Policy AI

Insurance Policy AI uses AI to simplify the process of understanding health insurance policies. Unlike apps that focus on insurance search and comparison, it specializes in deciphering the complex language found in policies. For a one-time payment, it provides instant policy analysis, helping users understand and make informed decisions about their health insurance coverage.

OSS Insight

OSS Insight is an AI tool that provides insight into developers and repositories on GitHub, including stars, pull requests, issues, pushes, comments, and reviews. It ranks repositories, tracks trending repositories, and reports GitHub events in real time. Additional features include data exploration, collections, a live blog, API integration, and widgets.

WordfixerBot's Paraphrasing Tool

WordfixerBot's Paraphrasing Tool is an AI-powered tool for quickly rephrasing text. It uses AI models to generate human-like text while preserving the original meaning, and it offers multiple tone options so users can match the paraphrased text to their writing style and audience. Its users include writers, marketers, bloggers, students, copywriters, journalists, editors, business professionals, content creators, and researchers.

Personalized.energy

Personalized.energy is an online platform that recommends electricity plans tailored to individual needs and lifestyles. Its AI-powered search engine compares plans and matches users with the most suitable ones based on their location and usage profile. By removing the need for manual research and comparison, Personalized.energy aims to make navigating the energy market easier.

NavTo.AI

NavTo.AI is an AI tools directory that curates AI solutions from around the world. It covers a wide range of categories, such as AI chatbots, fitness trackers, content generators, and video editors. Whether you are a developer, entrepreneur, or AI enthusiast, NavTo.AI helps you discover tools that improve productivity. The platform is updated daily with new AI technologies, making it a useful resource for keeping up with the AI landscape.

Flux LoRA Model Library

Flux LoRA Model Library is a platform for finding and using Flux LoRA models for various projects. Users can browse a catalog of popular Flux LoRA models and learn about Flux models and LoRA (Low-Rank Adaptation). The platform also offers resources on fine-tuning models and on the responsible use of generated images.

0 - Open Source AI Tools

20 - OpenAI GPTs

ConstitutiX

Advisor on Chilean constitutional law. I'll explain the differences between the current Constitution and the 2023 Constitutional Proposal.

Best Spy Apps for Android (Q&A)

A free tool for comparing the best spy apps for Android. Get answers to your questions and explore the features, pricing, and pros and cons of each spy app.

GPTValue

Compare the output quality of similar GPTs on the same question and identify the most valuable one.

TV Comparison | Comprehensive TV Database

Compare TVs and uncover the pros and cons of the latest TV models.

PerspectiveBot

Your gateway to informed comparisons: provide a topic and the views you want to compare. PerspectiveBot uses AI to analyze and score different viewpoints on any topic, delivering balanced, data-driven perspectives for better decision-making.

Calorie Count & Cut Cost: Food Data

Apples vs. oranges? Optimize your low-calorie diet by comparing food items. Get tailored advice on filling, nutritious, cost-effective food choices drawn from a set of 240 items.

Best Price Kuwait

A customized GPT for price comparison that searches and compares product prices on websites in Kuwait, tailored to local markets and languages.

Software Comparison

I compare different software, providing detailed, balanced information.

Website Conversion by B12

I'll help you optimize your website for more conversions and compare your site's conversion rate optimization (CRO) potential with your competitors'.

Course Finder

Find the right online course in tech, business, marketing, programming, and more. Compare options from top platforms such as Udemy, Coursera, and edX.

AI Hub

Your Gateway to AI Discovery: Ask, Compare, Learn. Explore AI tools and software with ease, create AI tech stacks for your business, and more. Just ask, and AI Hub will do the rest!

🔵 GPT Boosted

GPT-5? | An enhanced version of GPT-4 Turbo. Don't believe it? Try it and compare! | ver .001