Best AI tools for Benchmark Performance

20 - AI tool Sites

Perspect

Perspect is an AI-powered platform designed for high-performance software teams. It offers real-time insights into team contributions and impact, optimizes the developer experience, and rewards high performers. With 50+ integrations, Perspect lets teams visualize impact, benchmark performance, and use machine learning models to identify and eliminate blockers. The platform is deeply integrated with web3 wallets and offers built-in reward mechanisms. Managers can align resources around key KPIs, identify top talent, and prevent burnout. Perspect aims to improve team productivity and employee retention through AI and ML.

Clarity AI

Clarity AI is an AI-powered technology platform that offers a Sustainability Tech Kit for sustainable investing, shopping, reporting, and benchmarking. The kit provides built-in sustainability technology with customizable data, methodologies, and tools. It integrates into existing workflows and offers scalable, flexible end-to-end SaaS tools for a range of sustainability use cases. Clarity AI uses AI and machine learning to analyze large volumes of data points, with the aim of providing reliable and transparent data coverage. The platform is designed to help users assess, analyze, and report on sustainability efficiently and confidently.

Groq

Groq is a fast AI inference provider that offers the GroqCloud™ Platform and GroqRack™ Cluster, which developers can use to build and deploy AI models with ultra-low-latency inference. It provides near-instant inference for openly available models such as Llama 3.1 and is known for its speed and its compatibility with other AI providers. Groq has gained recognition in the AI chip industry, and the company has received significant funding and a high valuation, positioning it as a strong challenger to established players such as Nvidia.

Hailo Community

Hailo Community is a community platform for developers and enthusiasts working with the Raspberry Pi and the Hailo-8L AI Kit. It offers resources, benchmarks, and support for training custom models, optimizing AI tasks, and troubleshooting errors in Hailo and Raspberry Pi integrations.

EarningsCall.ai

EarningsCall.ai is an AI-powered tool that summarizes company earnings calls and extracts insights from them, saving users hours of reading transcripts. Users can compare competitors, analyze trends, and generate their own insights. The tool is designed for business leaders, financial professionals, consultants, and advisors who want to track competitor earnings, assess market trends, and refine investment strategies.

Particl

Particl is an AI-powered platform that automates competitor intelligence for modern retail businesses. It provides real-time sales, pricing, and sentiment data across e-commerce channels. Particl's AI tracks sales, inventory, pricing, assortment, and sentiment to help users quickly identify profitable market opportunities. Features include performance benchmarking, automated e-commerce intelligence, competitor research, product research, assortment analysis, and promotions monitoring. Particl aims to support teams in strategic planning, product launches, and market analysis.

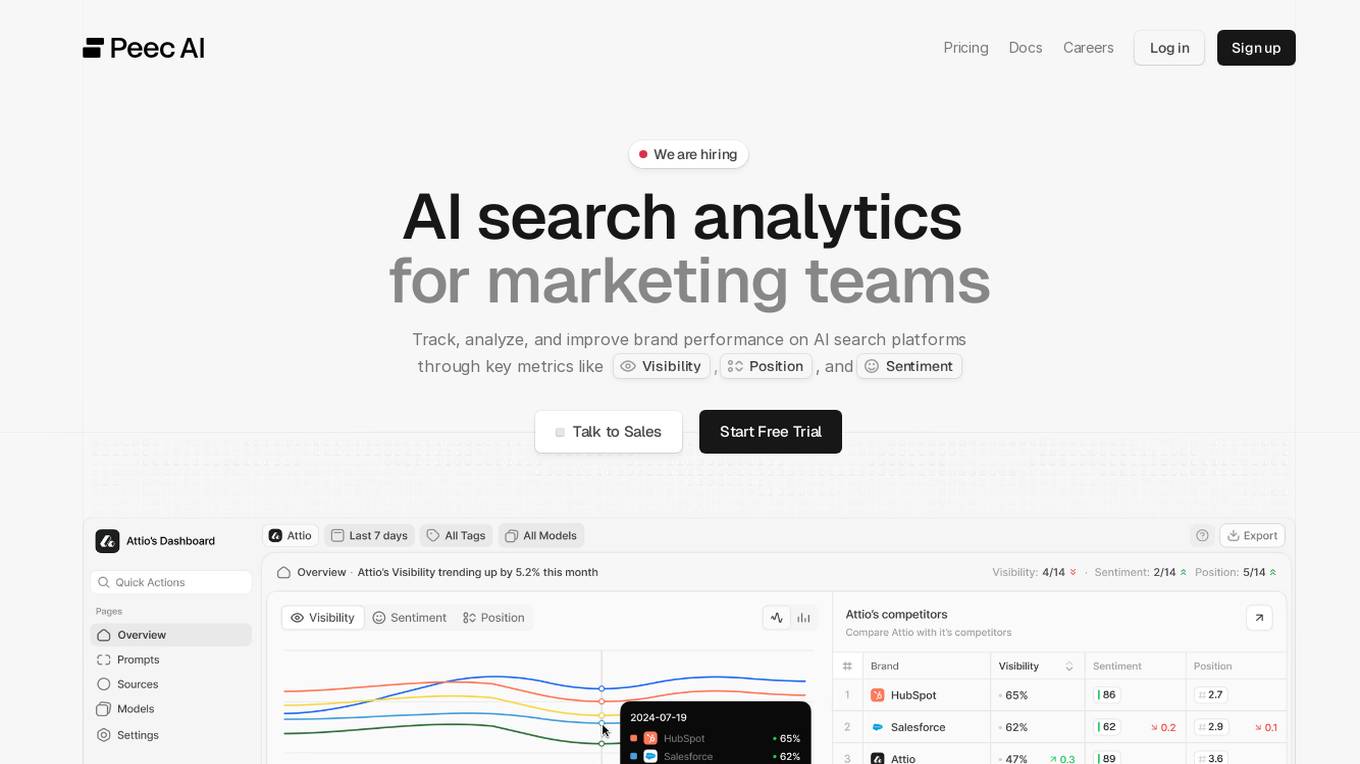

Peec AI

Peec AI is an AI search analytics tool that helps marketing teams track, analyze, and improve their brand's performance on AI search platforms. It reports key metrics such as Visibility, Position, and Sentiment to show how AI models portray a brand. The platform also offers insights into AI visibility, prompt analysis, and competitor tracking to inform marketing strategy in the era of generative search.

Weavel

Weavel is an AI tool for prompt engineering with large language models (LLMs). It offers tracing, dataset curation, batch testing, and evaluations to improve the performance of LLM applications. Weavel lets users continuously optimize prompts using real-world data, guard against performance regressions through CI/CD integration, and add human-in-the-loop scoring and feedback. Its AI prompt engineer, Ape, is reported to outperform competing approaches on benchmark tests and adapts continuously to each user's use case. Weavel also lets users evaluate LLM applications without a pre-existing dataset, which simplifies the assessment process.

Junbi.ai

Junbi.ai is an AI-powered insights platform for YouTube advertisers. It provides creative insights for YouTube ads, letting users benchmark their ads, predict performance, and run quick tests. The platform also includes the expoze.io API for predicting attention on images or videos, which it describes as producing scientifically valid results and which is designed for easy integration into software applications.

Woven Insights

Woven Insights is an AI-driven market and consumer insights solution for fashion retail that supports data-driven decision-making. It provides competitive intelligence, performance monitoring, product assortment optimization, market and consumer insights, and pricing strategy support. Features include insights-driven competitive benchmarking, real-time market insights, product performance tracking, in-depth market analytics, and sentiment analysis, and the platform is aimed at businesses of all sizes. It also offers bespoke data analysis, AI insights, natural language queries, and collaboration tools. Woven Insights aims to democratize fashion intelligence through affordable pricing and accessible insights that help businesses stay ahead of the competition.

Unify

Unify is an AI tool that offers a single platform for accessing and comparing large language models (LLMs) from different providers. It lets users combine models to get faster, cheaper, and better responses, optimizing for quality, speed, and cost. Unify simplifies the task of selecting an LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.

Vals AI

Vals AI is an AI tool that provides benchmark reports and comparisons of models on tasks in finance, coding, and law. The platform shows how different AI models perform across tasks and industries. Vals AI aims to fill gaps in existing model benchmarking and help users evaluate and compare AI models for specific tasks.

Quolity AI

Quolity is an AI-powered platform for Answer Engine Optimization (AEO) that helps brands and businesses improve their visibility and credibility in AI search engines. By analyzing AI citations, brand mentions, and competitors' links, it provides insights, quality scores, and recommended actions for improving AEO performance. The platform is aimed at startups, B2B SaaS companies, agencies, and content-driven brands seeking to succeed in AI search.

Aider

Aider is an AI pair programming tool that lets users work with large language models (LLMs) to edit code in their local git repository. It supports popular languages such as Python, JavaScript, TypeScript, PHP, HTML, and CSS. Aider can handle complex requests, automatically commits its changes, and works well in larger codebases by using a map of the entire git repository. Users can edit files while chatting with Aider, add images and URLs to the chat, and even code by voice. Users have praised Aider for boosting productivity, and it performs well on software engineering benchmarks.

Reflection 70B

Reflection 70B is an open-source LLM built on Llama 3.1 70B and trained for self-correction, a capability its developers claimed lets it outperform GPT-4. It supports human-like conversation and can assist with research, coding, creative writing, and problem-solving, using its self-correction mechanism to improve accuracy and reliability. Reflection 70B is intended to enhance productivity and decision-making across multiple domains.

Legal Benchmarks

Legal Benchmarks is a platform that provides independent, lawyer-led evaluations of AI tools for in-house legal work. It evaluates AI assistants on critical legal tasks such as contract drafting and information extraction, and ranks tools by how they perform on real-world legal tasks, helping legal teams understand and adopt AI solutions. Legal AI vendors can also submit their tools for evaluation, and the platform offers customized private reports, insights, and practical breakdowns of tool performance.

WorkViz

WorkViz is an AI-powered performance tool that helps remote teams visualize productivity, maximize performance, and anticipate the team's potential. Its features include automated daily reports, emoji-based feedback that lets employees express how they feel, workload alerts, productivity solutions, and intelligent summaries. WorkViz protects data through auditing, data desensitization, and SSL encryption. Clients have praised it for driving improvements, providing KPIs and benchmarks, and simplifying daily reporting. It helps users track work hours, identify roadblocks, and improve team performance.

Flowtrace

Flowtrace is an AI-powered company analytics and productivity tool focused on improving meeting culture, collaboration, and engagement within organizations. It analyzes meeting data to provide actionable recommendations for improving team performance and productivity. With industry benchmarks, integrations with common SaaS tools, and personalized insights, Flowtrace aims to help teams work more efficiently. The tool helps reduce meeting costs, increase productivity, remove distractions, and improve meeting culture for better decisions and outcomes.

Proxima

Proxima is a predictive data intelligence tool that offers custom audiences for paid social, analytics, and tactical benchmarks. It helps businesses lower acquisition costs, increase customer lifetime value, and grow profitably by leveraging AI-powered advertising solutions. With a focus on predictive insights and enterprise-grade analytics, Proxima empowers users to make data-driven decisions that fuel growth and optimize marketing performance.

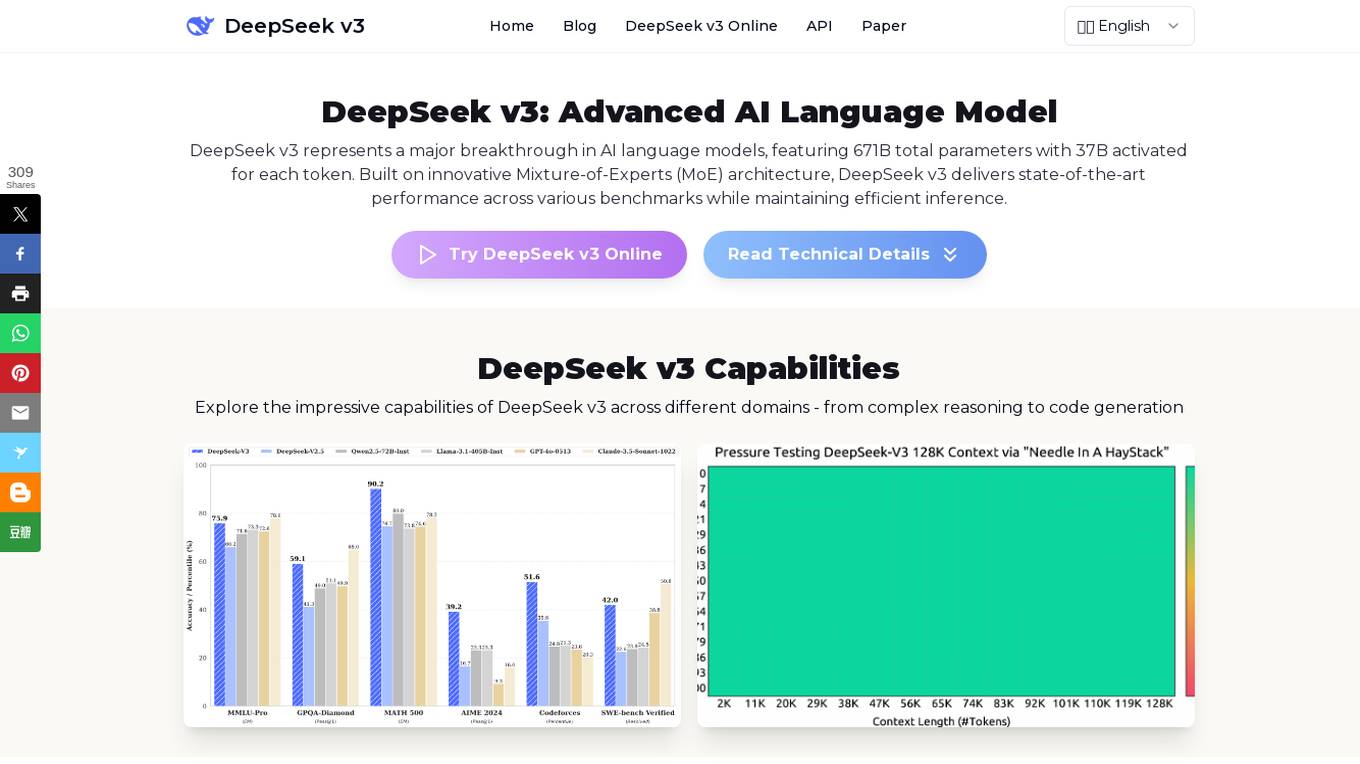

DeepSeek v3

DeepSeek v3 is an advanced AI language model built on a Mixture-of-Experts (MoE) architecture with 671B total parameters, of which about 37B are activated for each token; this lets it deliver state-of-the-art performance across various benchmarks while keeping inference efficient. It is pre-trained on 14.8 trillion high-quality tokens and excels at tasks such as text generation, code completion, and mathematical reasoning. It supports a 128K context window and uses Multi-Token Prediction.

0 - Open Source AI Tools

10 - OpenAI GPTs

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.

HVAC Apex

An HVAC GPT, powered by OpenAI, that aims to set the benchmark for HVAC expertise and forward-thinking solutions.

SaaS Navigator

A strategic SaaS analyst for CXOs, with a focus on market trends and benchmarks.

Transfer Pricing Advisor

Guides businesses in managing global tax liabilities efficiently.

Salary Guides

I provide monthly salary data in euros, using a structured format for global job roles.