Best AI tools for < Assess Risks >

20 - AI tool Sites

Pascal

Pascal is an AI-powered, risk-based KYC and AML screening and monitoring platform that is designed to let users assess findings faster and more accurately than other compliance tools. It uses AI, machine learning, and natural language processing to analyze open-source and client-specific data, interpret adverse media in multiple languages, simplify onboarding, provide continuous monitoring, reduce false positives, and support compliance decision-making.

SWMS AI

SWMS AI is an AI-powered safety risk assessment tool that helps businesses streamline compliance and improve safety. It leverages a vast knowledge base of occupational safety resources, codes of practice, risk assessments, and safety documents to generate risk assessments tailored specifically to a project, trade, and industry. SWMS AI can be customized to a company's policies to align its AI's document generation capabilities with proprietary safety standards and requirements.

Archistar

Archistar is a leading property research platform in Australia that empowers users to make confident and compliant property decisions with the help of data and AI. It offers a range of features, including the ability to find and assess properties, generate 3D design concepts, and minimize risk and maximize return on investment. Archistar is trusted by over 100,000 individuals and 1,000 leading property firms.

Lumenova AI

Lumenova AI is an AI platform that focuses on making AI ethical, transparent, and compliant. It provides solutions for AI governance, assessment, risk management, and compliance. The platform offers comprehensive evaluation and assessment of AI models, proactive risk management solutions, and simplified compliance management. Lumenova AI aims to help enterprises navigate the future confidently by ensuring responsible AI practices and compliance with regulations.

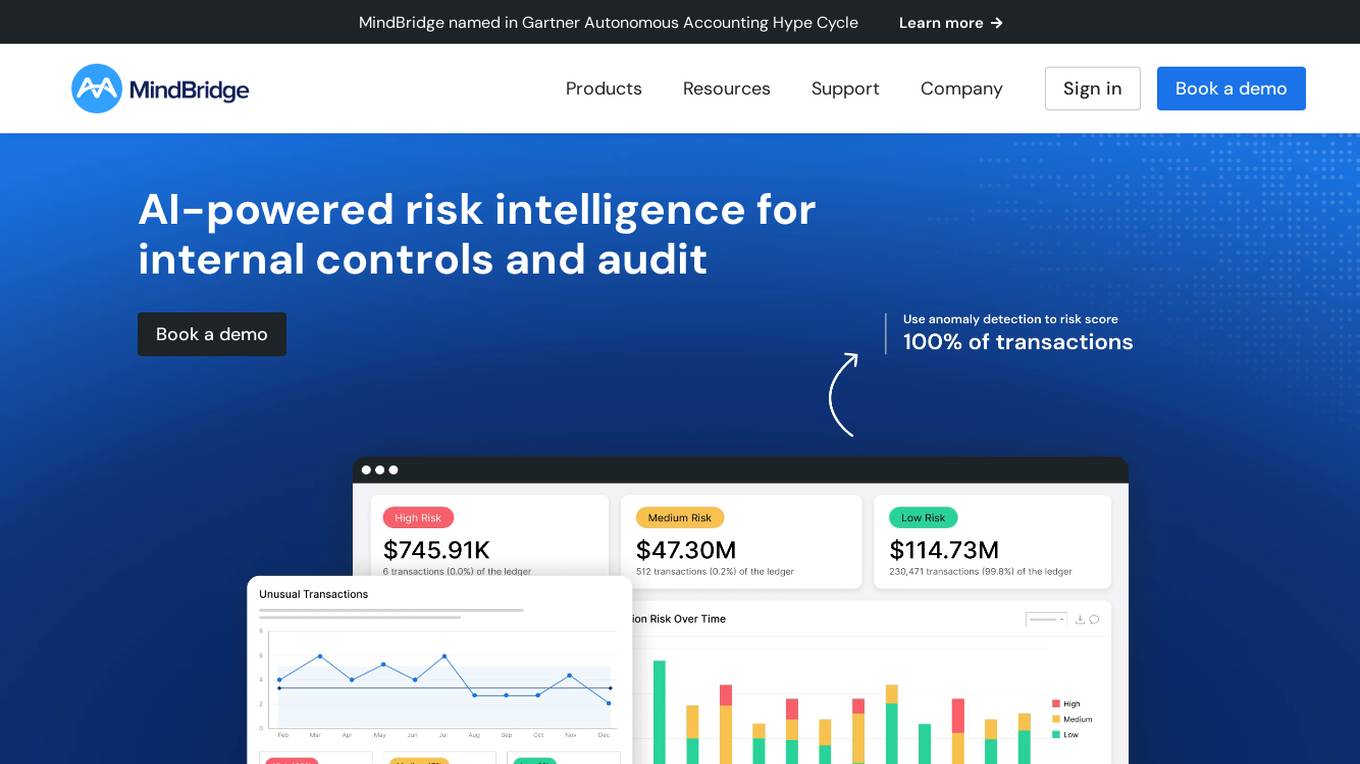

MindBridge

MindBridge is a global leader in financial risk discovery and anomaly detection. The MindBridge AI Platform drives insights and assesses risks across critical business operations. It offers products such as General Ledger Analysis, Company Card Risk Analytics, Payroll Risk Analytics, Revenue Risk Analytics, and Vendor Invoice Risk Analytics. The platform combines over 250 unique machine learning control points, statistical methods, and traditional rules, and is used by over 27,000 accounting, finance, and audit professionals globally.

ClearAI

ClearAI is an AI-powered platform that offers instant extraction of insights, effortless document navigation, and natural language interaction. It enables users to upload PDFs securely, ask questions, and receive accurate responses in seconds. With features like structured results, intelligent search, and lifetime access offers, ClearAI simplifies tasks such as analyzing company reports, risk assessment, audit support, contract review, legal research, and due diligence. The platform is designed to streamline document analysis and provide relevant data efficiently.

ISMS Copilot

ISMS Copilot is an AI-powered assistant designed to simplify ISO 27001 preparation for both experts and beginners. It offers various features such as ISMS scope definition, risk assessment and treatment, compliance navigation, incident management, business continuity planning, performance tracking, and more. The tool aims to save time, provide precise guidance, and ensure ISO 27001 compliance. With a focus on security and confidentiality, ISMS Copilot is a valuable resource for small businesses and information security professionals.

PropHunt.ai

PropHunt.ai is an AI-powered platform designed for real estate professionals and property investors. It utilizes advanced machine learning algorithms to analyze property data and provide valuable insights for property hunting and investment decisions. The platform offers features such as property price prediction, neighborhood analysis, investment risk assessment, property comparison, and market trend forecasting. With PropHunt.ai, users can make informed decisions, optimize their property investments, and stay ahead in the competitive real estate market.

Center for a New American Security

The Center for a New American Security (CNAS) is a bipartisan, non-profit think tank that focuses on national security and defense policy. CNAS conducts research, analysis, and policy development on a wide range of topics, including defense strategy, nuclear weapons, cybersecurity, and energy security. CNAS also provides expert commentary and analysis on current events and policy debates.

BCT Digital

BCT Digital is an AI-powered risk management suite provider that offers a range of products to help enterprises optimize their core Governance, Risk, and Compliance (GRC) processes. The rt360 suite leverages next-generation technologies, sophisticated AI/ML models, data-driven algorithms, and predictive analytics to assist organizations in managing various risks effectively. BCT Digital's solutions cater to the financial sector, providing tools for credit risk monitoring, early warning systems, model risk management, environmental, social, and governance (ESG) risk assessment, and more.

ZestyAI

ZestyAI is an artificial intelligence tool that helps users make better-informed climate and property risk decisions. The tool uses AI to provide insights on property values and exposure to natural disasters. It offers products such as Property Insights, Digital Roof, Roof Age, Location Insights, and Climate Risk Models to evaluate and understand property risks. ZestyAI is trusted by top insurers in North America and aims to deliver a tenfold return on investment to its customers.

Graphio

Graphio is an AI-driven employee scoring and scenario-building tool that uses continuous, real-time scoring with AI agents to assess potential, predict flight risks, and identify future leaders. It aims to replace subjective evaluations with real-time, data-driven insights, reducing bias in talent management decisions such as promotions, layoffs, and succession planning. It offers compliance features and rules that users can control, so that assessments stay aligned with legal and regulatory requirements. The platform focuses on security, privacy, and personalized coaching to enhance employee engagement and reduce turnover.

Intelligencia AI

Intelligencia AI is a leading provider of AI-powered solutions for the pharmaceutical industry. Its suite of solutions helps de-risk and enhance clinical development and decision-making. The company uses a combination of data, AI, and machine learning to provide insights into the probability of success for drugs across multiple therapeutic areas. Its solutions are used by many of the top global pharmaceutical companies to improve their R&D productivity and make more informed decisions.

CUBE3.AI

CUBE3.AI is a real-time crypto fraud prevention tool that utilizes AI technology to identify and prevent various types of fraudulent activities in the blockchain ecosystem. It offers features such as risk assessment, real-time transaction security, automated protection, instant alerts, and seamless compliance management. The tool helps users protect their assets, customers, and reputation by proactively detecting and blocking fraud in real-time.

Modulos

Modulos is a Responsible AI Platform that integrates risk management, data science, legal compliance, and governance principles to ensure responsible innovation and adherence to industry standards. It offers a comprehensive solution for organizations to effectively manage AI risks and regulations, streamline AI governance, and achieve relevant certifications faster. With a focus on compliance by design, Modulos helps organizations implement robust AI governance frameworks, execute real use cases, and integrate essential governance and compliance checks throughout the AI life cycle.

Archistar

Archistar is a leading property research platform that utilizes data and AI to help investors, developers, architects, and government officials make confident and compliant decisions. The platform offers features such as finding the best use of a site, researching real estate rules and risks, generating 3D design concepts with AI, and fast-tracking building permit assessments. With over 100,000 users, Archistar provides access to advanced algorithms, filters, and market insights to discover real estate opportunities efficiently.

Limbic

Limbic is a clinical AI application designed for mental healthcare providers to save time, improve outcomes, and maximize impact. It offers a suite of tools developed by a team of therapists, physicians, and PhDs in computational psychiatry. Limbic is known for its evidence-based approach, safety focus, and commitment to patient care. The application leverages AI technology to enhance various aspects of the mental health pathway, from assessments to therapeutic content delivery. With a strong emphasis on patient safety and clinical accuracy, Limbic aims to support clinicians in meeting the rising demand for mental health services while improving patient outcomes and preventing burnout.

Credo AI

Credo AI is a leading provider of AI governance, risk management, and compliance software. Its platform helps organizations adopt AI safely and responsibly while ensuring compliance with regulations and standards. With Credo AI, users can track and prioritize AI projects, assess AI vendor models for risk and compliance, create artifacts for audit, and more.

Springs

Springs is a custom AI compliance solution provider for enterprises across industries such as Pharma & Life Sciences, Food Safety, and Manufacturing. Their AI-based compliance software is designed to adapt to specific industry needs, scale with requirements, and ensure organizations are always audit-ready. The platform offers features like regulatory intelligence, gap analysis, compliance management, and customizable workflows to streamline compliance processes. Springs aims to reduce costs, mitigate risks, and improve quality through intelligent compliance solutions.

Castello.ai

Castello.ai is a financial analysis tool that uses artificial intelligence to help businesses make better decisions. It provides users with real-time insights into their financial data, helping them to identify trends, risks, and opportunities. Castello.ai is designed to be easy to use, even for those with no financial background.

0 - Open Source AI Tools

20 - OpenAI GPTs

Project Risk Assessment Advisor

Assesses project risks to mitigate potential organizational impacts.

Canadian Film Industry Safety Expert

Film studio safety expert offering guidance on regulations and practices.

Fluffy Risk Analyst

A cute sheep expert in risk analysis, providing downloadable checklists.

Outsourcing-assistenten (finans)

Danish guidance on outsourcing rules for credit institutions and data centers.

Information Assurance Advisor

Ensures information security through policy development and risk assessments.

Corporate Governance Audit Advisor

Ensures corporate compliance through meticulous governance audits.

Compliance Audit Advisor

Ensures regulatory compliance through proficient auditing practices.