Best AI tools for Video Analysis


20 - AI tool Sites

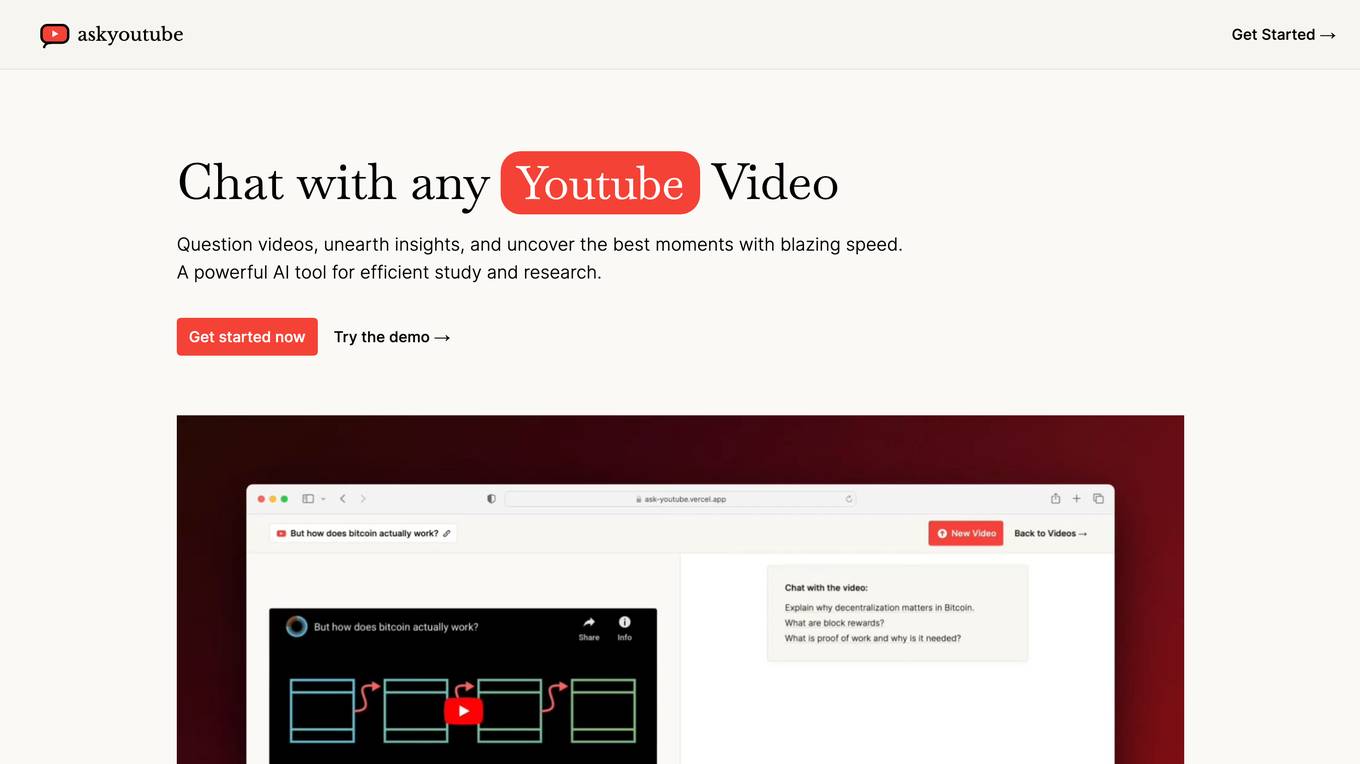

YouTube Video Chat AI Tool

This free AI tool lets users chat with any YouTube video: ask questions about it, analyze it, surface insights, and jump to key moments quickly. It is aimed at making study and research more efficient. A demo shows what the tool can do, and it is pitched as a faster alternative to searching the comments section for timestamps.

Valossa

Valossa offers AI video analysis services, including Video-to-Text, Search, Captions, and Clips. Its solutions generate video transcripts, captions, and logs; enable brand-safe contextual advertising; automatically clip promo videos; flag sensitive content for compliance; and analyze the mood and sentiment of videos. Valossa describes its AI as understanding video the way a human does and offers video automation tools for a range of industries.

EdgeDX

EdgeDX provides Edge AI video analysis solutions for security and surveillance, construction and logistics safety, store management, public safety management, and intelligent transportation systems. It offers more than 50 AI apps for advanced human behavior analysis, supports a range of protocols and VMS, and includes a P2P-based mobile alarm viewer, LTE and GPS support, and internal recording to an M.2 NVMe SSD. EdgeDX aims to protect customer assets, improve safety, and simplify deployment through integration with AI Bridge.

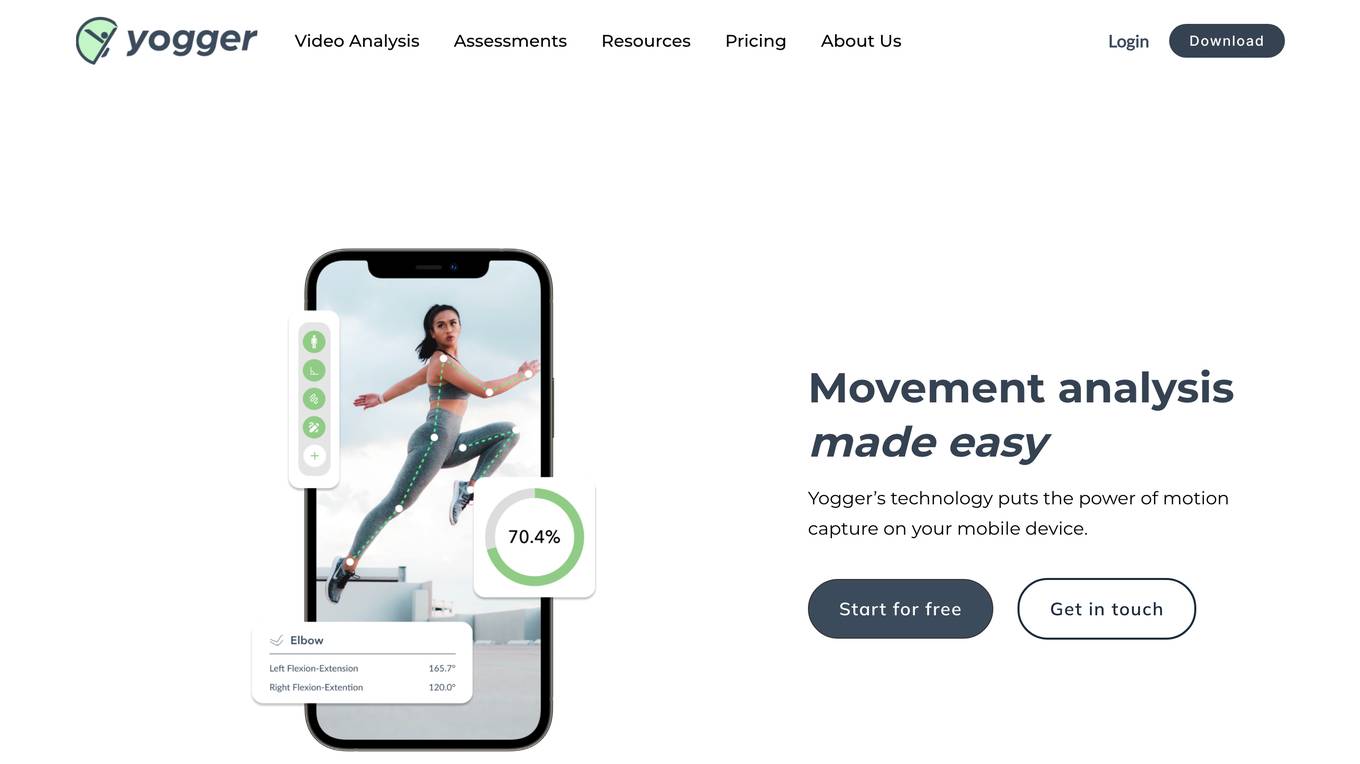

Yogger

Yogger is an AI-powered video analysis and movement assessment tool for coaches, trainers, physical therapists, and athletes. It gathers precise movement data through video-based joint tracking or automatic movement screenings. From their phone, users can analyze movement patterns, critique form, and measure progress, either during in-person coaching or in online sessions. Because its assessments are based on measured data, Yogger is aimed at professionals in the sports and fitness industry.

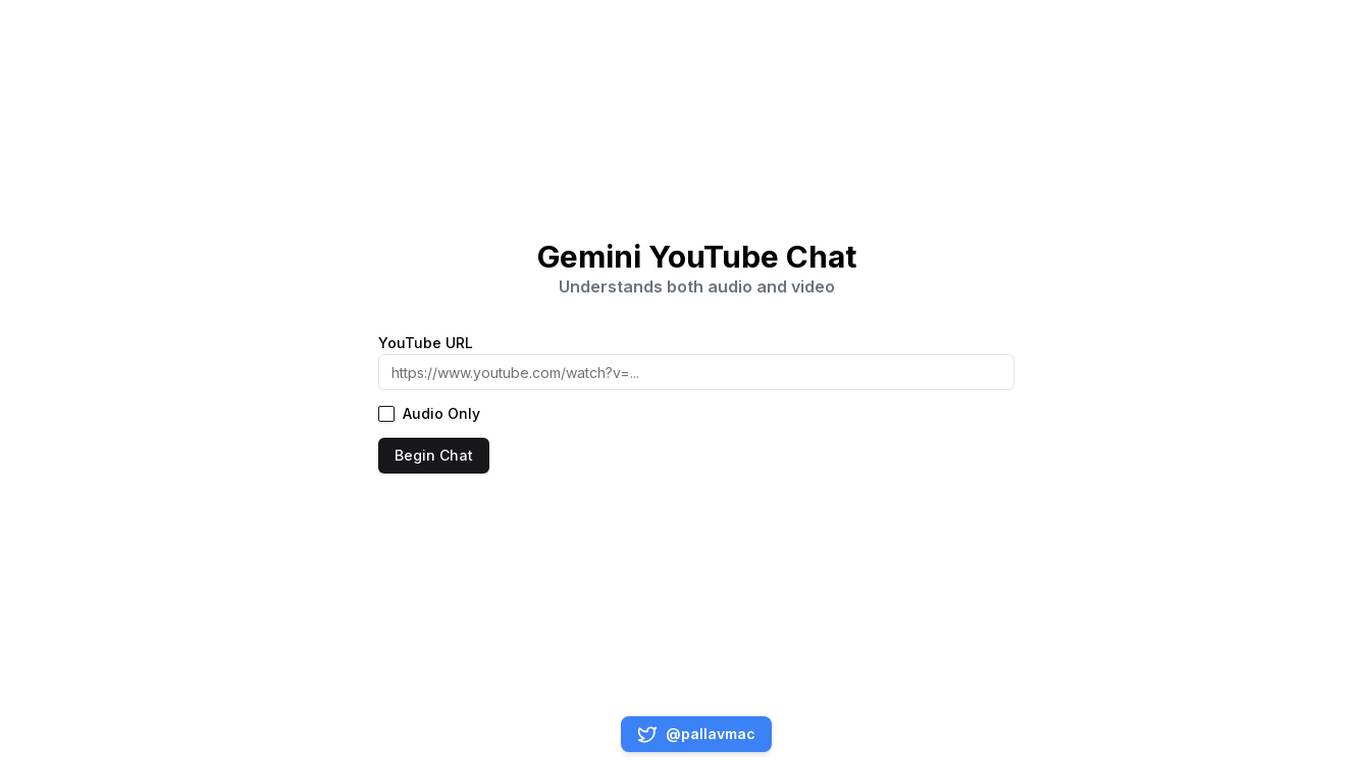

Gemini YouTube Chat

Gemini YouTube Chat is an AI tool that lets users chat about a specific YouTube video by entering its URL. It bases its answers on the video's audio track, its visual content, or both, so users can discuss and explore the content in real time.

SumyAI

SumyAI is an AI-powered tool that condenses lengthy YouTube videos into key points; the vendor claims this delivers insights 10x faster while improving retention. SumyAI also summarizes events and conferences, podcasts and interviews, educational tutorials, product reviews, news reports, and entertainment.

Qortex

Qortex is a video intelligence platform that uses AI to optimize advertising, monetization, and analytics for video content. It analyzes video frames in real time to inform media investment decisions. With On-Stream ad experiences and in-video ad units, Qortex aims to increase audience attention, revenue per stream, and fill rates, and to improve brand metrics and advertising performance through contextual targeting.

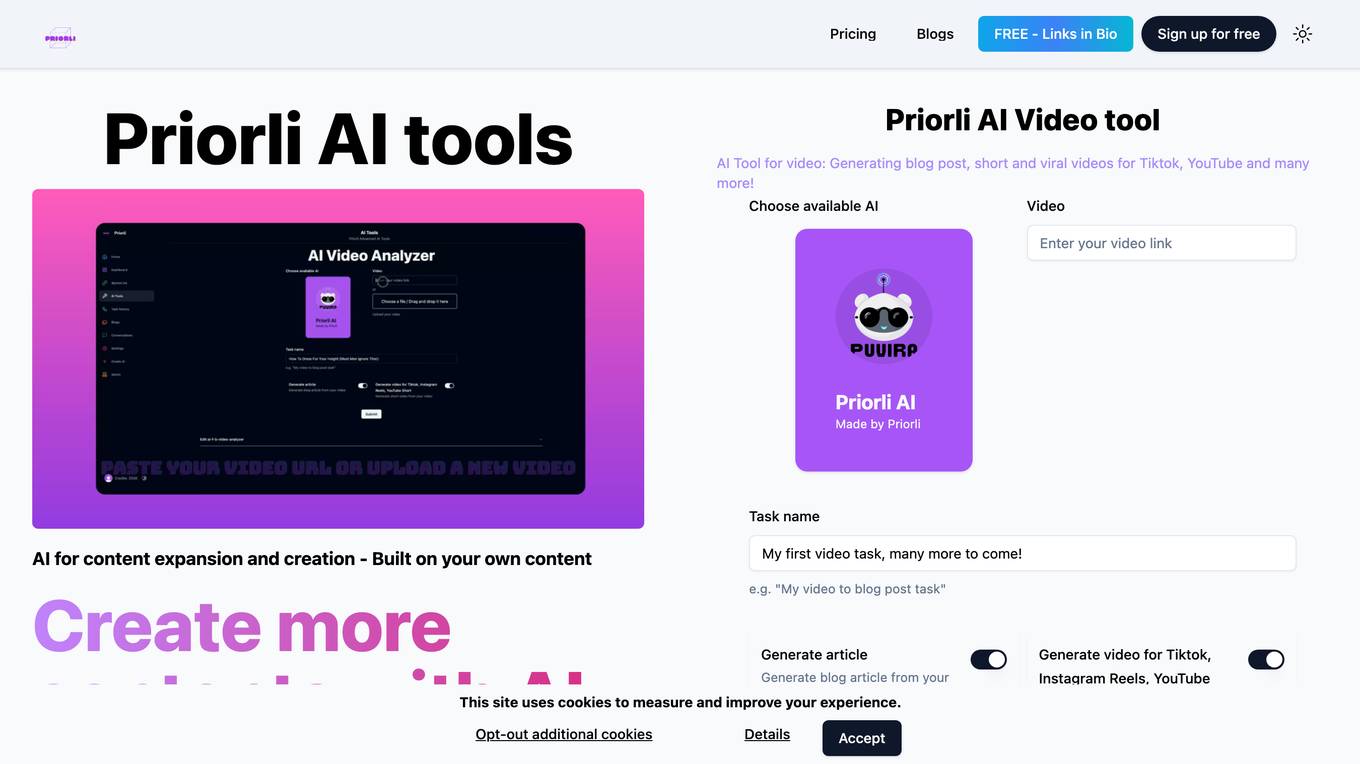

Priorli

Priorli is an AI-powered content creation tool for generating blog posts, articles, social media posts, and more in a few clicks. Its AI engine analyzes your input and generates content tailored to your specific needs.

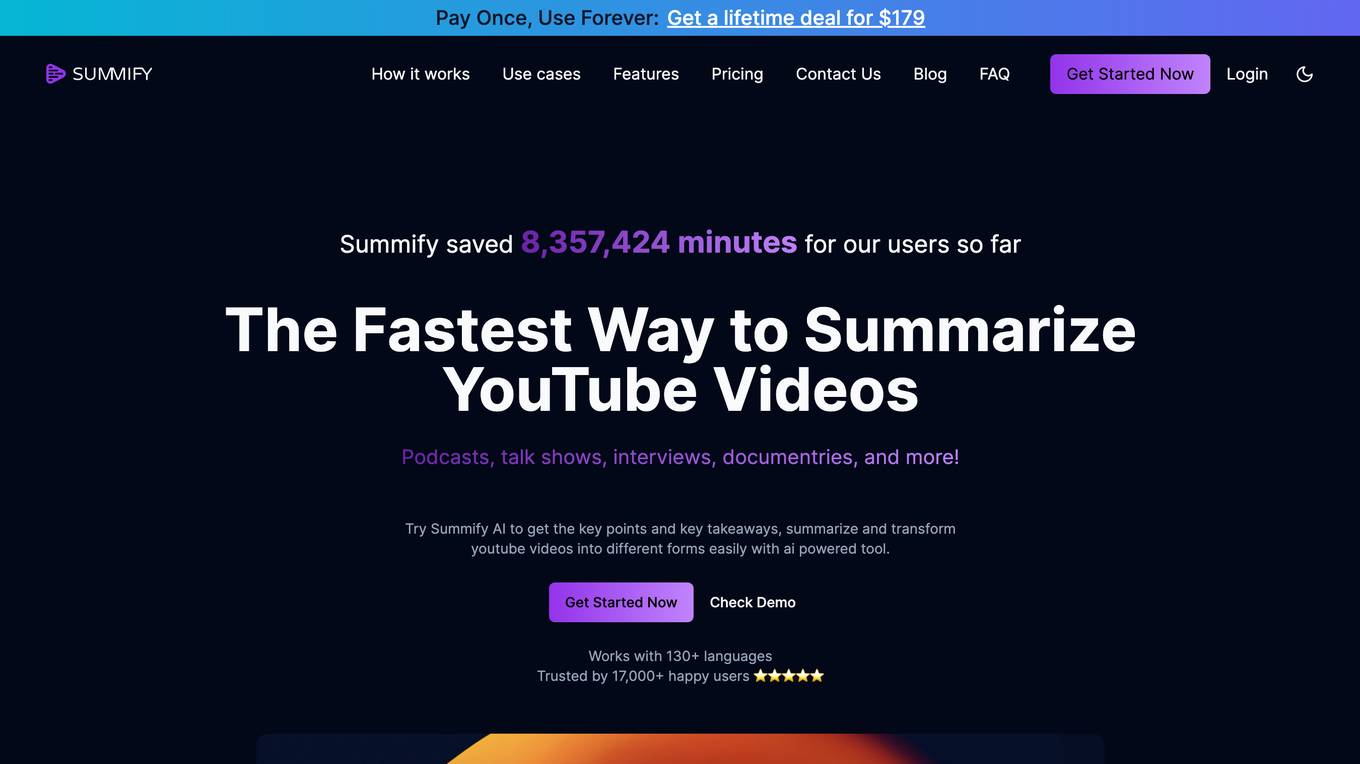

Summify

Summify is an AI-powered tool that helps users summarize YouTube videos, podcasts, and other audio-visual content. It offers a range of features to make it easy to extract key points, generate transcripts, and transform videos into written content. Summify is designed to save users time and effort, and it can be used for a variety of purposes, including content creation, blogging, learning, digital marketing, and research.

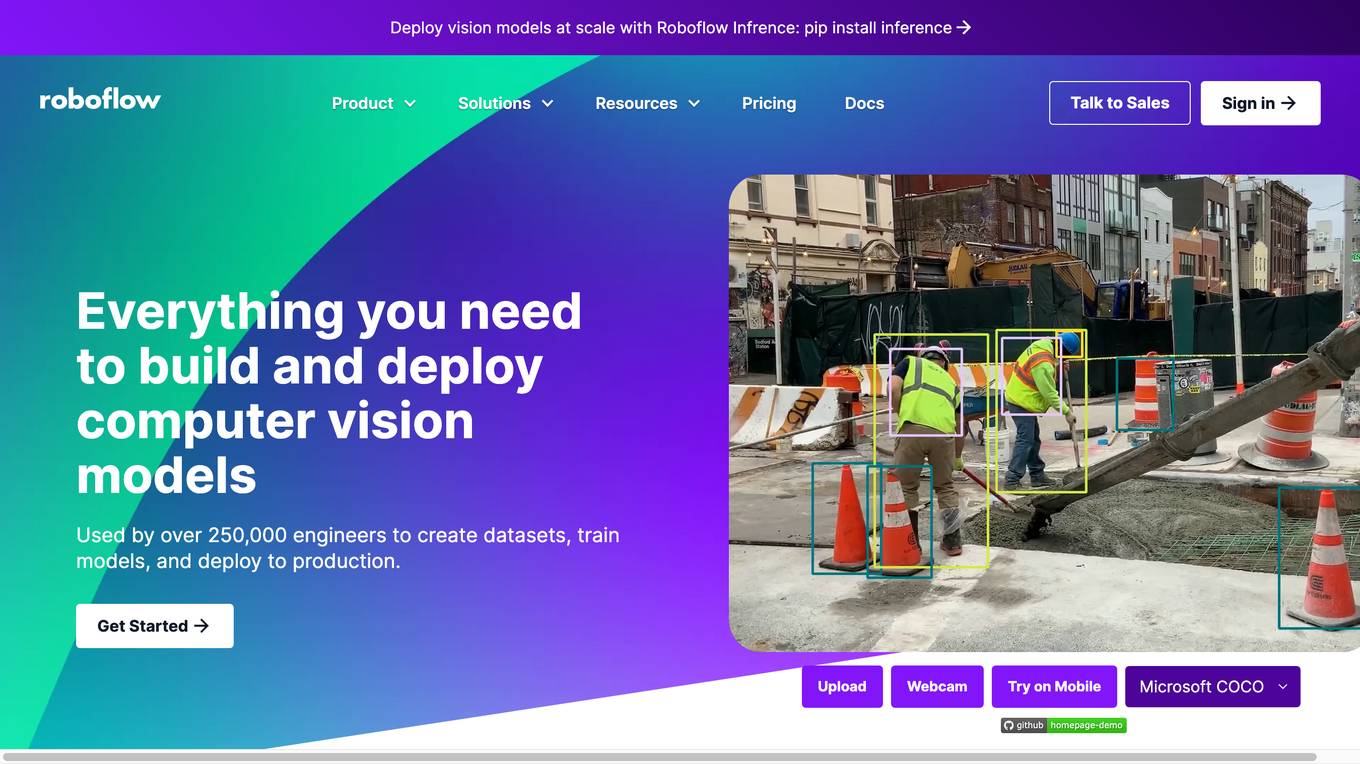

Roboflow

Roboflow is a platform for building and deploying computer vision models, with tools for data annotation, model training, and deployment. According to Roboflow, more than 250,000 engineers use it to create datasets, train models, and deploy to production.

Panda Video

Panda Video is a video hosting platform with AI-powered features intended to help businesses increase sales and improve security. These include a mind map tool for visualizing video content, quizzes for interactive learning, AI-generated ebooks as supplemental resources, automatic captioning, search for finding specific content within videos, and automatic dubbing into multiple languages. Other features include DRM protection against piracy, smart autoplay to increase engagement, a customizable player, Facebook Pixel integration for retargeting, and analytics for tracking video performance.

ExpiredDomains.com

ExpiredDomains.com is a free online platform for finding valuable expired and expiring domain names. It aggregates domain data from hundreds of top-level domains (TLDs) into searchable, filterable listings, along with exclusive data metrics, to support better purchasing decisions. Users can browse listings, view metrics, and apply filters at no cost and with no hidden charges.

ScreenApp

ScreenApp is an AI-powered notetaker, transcription tool, summarizer, and recorder for audio and video content. It offers a comprehensive suite of features across various platforms, including web access, desktop applications, mobile apps, and browser extensions. ScreenApp leverages AI technology to provide users with efficient and accurate transcription, summarization, and analysis capabilities for their audio and video recordings. The application is designed to streamline the process of capturing, organizing, and extracting insights from conversations, meetings, and other multimedia content.

Vidrovr

Vidrovr is a video analysis platform that uses machine learning to process unstructured video, image, and audio data. It turns that data into business insights intended to drive revenue, support strategic decisions, and automate repetitive processes. Applications include minimizing equipment downtime, planning equipment replacement proactively, supporting mission objectives and decision making, monitoring people or topics of interest across media sources, monitoring critical infrastructure around the clock, and protecting ecological assets.

Twelve Labs

Twelve Labs is an AI tool specializing in multimodal video understanding, designed to bring human-like video comprehension to any application. It lets users search, generate, and embed video content, with what the company describes as state-of-the-art accuracy and scalability. It can handle large video libraries and produce rich video embeddings for video analysis and content creation.

Nova AI

Nova AI is an online video editing platform that offers a wide range of tools and features for creating high-quality videos. Users can edit, trim, merge, add subtitles, translate, and more entirely online without the need for installation. The platform also provides AI-powered tools for tasks such as dubbing, voice generation, video analysis, and more. Nova AI aims to simplify the video editing process and help users create professional videos with ease.

VideoVerse

VideoVerse provides AI-powered video solutions. Its products include Magnifi, an AI-driven highlights generator; Illusto, a video editing tool; and a contextual video analysis tool that uses AI to detect and tag sensitive content in videos. VideoVerse's customers include sports broadcasters, OTT platforms, teams, rights holders, and companies in the media, entertainment, and e-sports industries.

VideoSage

VideoSage is an AI-powered platform for asking questions about videos and gaining insights from them. Powered by Moonshot's Kimi AI, it provides summaries, insights, timestamps, and answers grounded in the video content. Users can chat with the AI while watching, and the platform offers tools to customize the viewing experience.

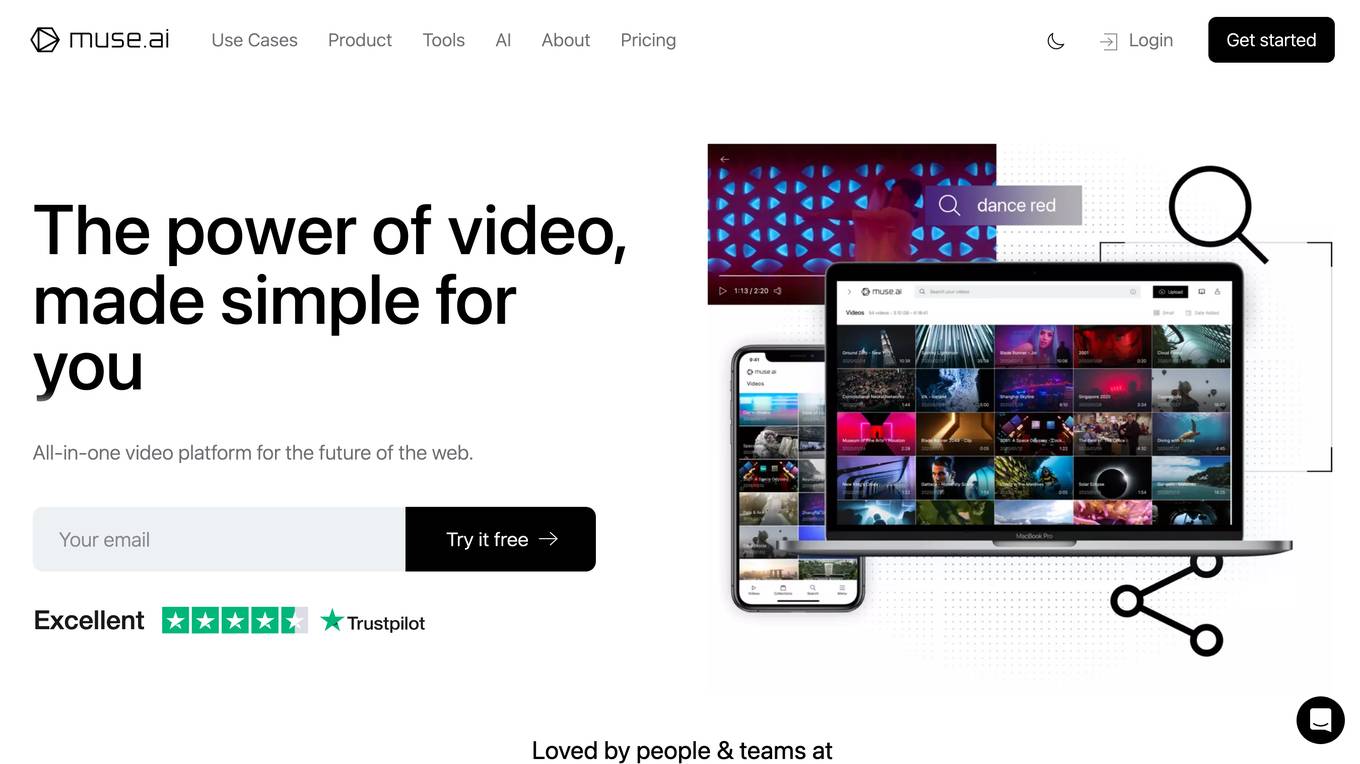

Muse.ai

Muse.ai is an all-in-one video platform that provides a suite of tools for video hosting, editing, searching, and monetization. It uses artificial intelligence (AI) to automatically transcribe, index, and label videos, making them easily searchable and discoverable. Muse.ai also offers a customizable video player, analytics, and integrations with other services. It is suitable for a wide range of users, including individuals, teams, businesses, and educational institutions.

FilmBase

FilmBase is an AI-powered video editing tool that removes silences and filler words from your videos with a single click. Its AI detects the unwanted parts of a video, and its transcript editor lets you review and edit them. FilmBase can export to several video editors, including Final Cut Pro, DaVinci Resolve, and Adobe Premiere Pro.

0 - Open Source Tools

20 - OpenAI GPTs

Surf Coach AI: Surfing Video Analysis

Personalized surf tips from your surfing photos and videos

The Video Content Creator Coach

A content creator coach for YouTube video creation, analysis, scriptwriting, and storytelling, designed by a successful YouTuber to help other YouTubers grow their channels.

Ringkesan

Summarizes and extracts key points from text, articles, videos, documents, and more.

Video Brief Genius

Transform your brand! Provide brand and product info, and we'll craft a unique, visually stunning 30-45 second video brief. Simple, effective, impactful.