Best AI tools for Sensory Integration Therapist

20 - AI tool Sites

Roboto AI

Roboto AI is an advanced platform that allows users to curate, transform, and analyze robotics data at scale. It provides features for data management, actions, events, search capabilities, and SDK integration. The application helps users understand complex machine data through multimodal queries and custom actions, enabling efficient data processing and collaboration within teams.

Caper

Caper is an AI-powered smart shopping cart technology that revolutionizes the in-store shopping experience for retailers. It offers seamless and personalized shopping, incremental consumer spend, and alternate revenue streams through personalized advertising and loyalty program integration. Caper enhances customer engagement with gamification features and provides anti-theft and operational capabilities for retailers. The application integrates with existing POS systems and loyalty programs, offering a unified online and in-store grocery experience. With advanced technology like sensor fusion and AI integration, Caper transforms the traditional shopping cart into a smart, interactive tool for both customers and retailers.

Edge Impulse

Edge Impulse is a leading edge AI platform that enables users to build datasets, train models, and optimize libraries to run directly on any edge device. It offers sensor datasets, feature engineering, model optimization, algorithms, and NVIDIA integrations. The platform is designed for product leaders, AI practitioners, embedded engineers, and OEMs across various industries and applications. Edge Impulse helps users unlock sensor data value, build high-quality sensor datasets, advance algorithm development, optimize edge AI models, and achieve measurable results. It allows for future-proofing workflows by generating models and algorithms that perform efficiently on any edge hardware.

One Drop

One Drop has developed a next-generation intradermal continuous glucose monitoring (CGM) device. Advanced material science, chemistry, and electronics make the One Health CGM among the most innovative body-worn sensors—explicitly designed to meet the needs of people with type 2 diabetes. By integrating proprietary micro-needle technology and AI-enabled precision guidance, the minimally invasive One Health CGM will deliver pain-free, needle-free wear and unprecedented access to a population currently underserved by CGM.

Gemini AI

Gemini AI is an AI and ML solutions provider that accelerates innovation through artificial intelligence. The company applies cutting-edge AI and ML to solve challenging problems and augment human intelligence. Gemini AI specializes in areas such as computer vision, geospatial science, human health, and integrative technologies. Its services include data and sensor analysis, modeling with deep learning techniques, and deployment of predictive models for real-time insights.

Gastrograph AI

Gastrograph AI is a cutting-edge artificial intelligence platform that empowers food and beverage companies to optimize their products for consistent market success. Leveraging the world's largest sensory database, Gastrograph AI provides deep insights into consumer preferences, enabling companies to develop new products, enter new markets, and optimize existing products with confidence. With Gastrograph AI, companies can reduce time-to-market costs, simplify product development, and gain access to trustworthy insights, leading to measurable results and a competitive edge in the global marketplace.

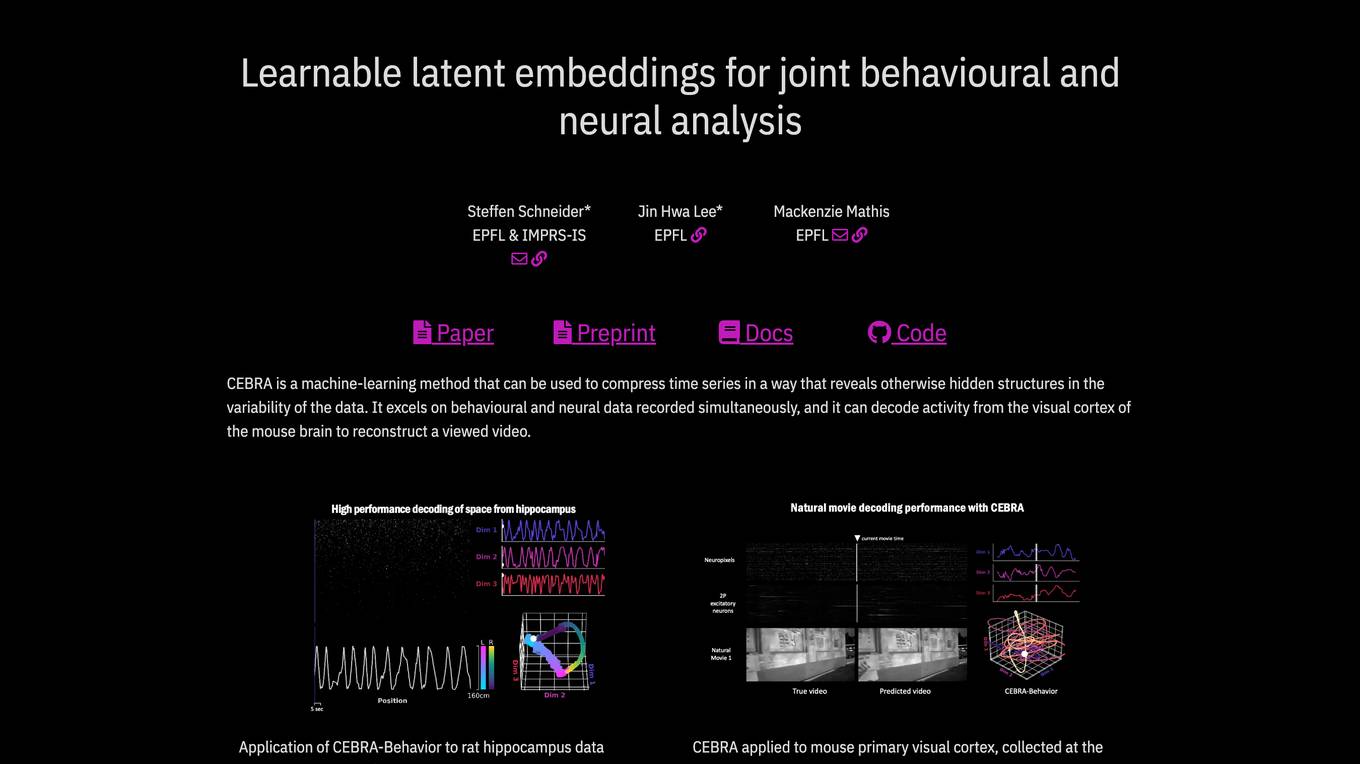

CEBRA

CEBRA is a self-supervised learning algorithm that provides interpretable embeddings of high-dimensional recordings using auxiliary variables. It excels in compressing time series data to reveal hidden structures, particularly in behavioral and neural data. The algorithm can decode activity from the visual cortex, reconstruct viewed videos, decode trajectories, and determine position during navigation. CEBRA is a valuable tool for joint behavioral and neural analysis, offering consistent and high-performance latent spaces for hypothesis testing and label-free usage across various datasets and species.

Osmo

Osmo is an AI scent platform that aims to digitize the sense of smell, combining frontier AI and olfactory science to improve human health and wellbeing through fragrance. The platform reads, maps, and writes scents using modern AI tools, enabling the discovery of new fragrance ingredients and applications for insect repellents, threat detection, and immersive experiences.

Aimlabs

Aimlabs is a comprehensive gaming platform that provides users with a variety of tools to improve their aim and overall gaming skills. With over 29,000 tasks and playlists, 500 FPS game profiles, and detailed aim analysis, Aimlabs helps gamers of all levels improve their performance. The platform also features an AI personal assistant that can offer tips and create custom maps on the spot. Aimlabs is the official partner of VALORANT and Rainbow Six Siege, and its science-backed training methods have been developed by a team of neuroscientists, designers, developers, and computer vision pioneers.

Mobility Engineering

Mobility Engineering is a website that provides news, articles, and resources on the latest developments in mobility technology. The site covers a wide range of topics, including autonomous vehicles, connected cars, electric vehicles, and more. Mobility Engineering is a valuable resource for anyone interested in staying up-to-date on the latest trends in mobility technology.

Tangram Vision

Tangram Vision is a company that provides sensor calibration tools and infrastructure for robotics and autonomous vehicles. Their products include MetriCal, a high-speed bundle adjustment software for precise sensor calibration, and AutoCal, an on-device, real-time calibration health check and adjustment tool. Tangram Vision also offers a high-resolution depth sensor called HiFi, which combines high-resolution depth data with high-powered AI capabilities. The company's mission is to accelerate the development and deployment of autonomous systems by providing the tools and infrastructure needed to ensure the accuracy and reliability of sensors.

Rokoko

Rokoko offers studio-grade motion capture tools for creators, empowering users across industries from indie animators to full-scale studios. The tools seamlessly integrate with popular software like Blender, Unreal Engine, and Maya. Users can set up the gear in minutes, capture and edit recordings with no limits, and export or stream the data for various workflows. Rokoko's motion capture devices are trusted by over 250,000 creators globally, providing a simple and efficient solution for motion capture needs.

ePlant

ePlant is an advanced solution for tree care and plant research, offering precision tree monitoring from the lab to the landscape. It provides wireless monitoring solutions with advanced sensors for plant researchers and consulting arborists, enabling efficient data collection and analysis for better decision-making. The platform empowers users to track plant growth, water stress, tree lean, and sway through innovative sensors and data visualization tools. ePlant aims to simplify data management and transform complex datasets into actionable insights for users in the field of plant science and arboriculture.

Deep Planet

Deep Planet is a precision viticulture platform powered by AI that focuses on enhancing sustainability in agriculture. It offers solutions for the wine industry, landowners, farmers, and supply chain companies by providing data-driven insights to maximize potential, optimize nutrient application, and support the transition to achieve net zero targets. The platform leverages AI and satellite imagery to empower users with actionable intelligence for better decision-making in vineyard management and soil health.

Airship AI

Airship AI is a cutting-edge, artificial intelligence-driven video, sensor, and data management surveillance platform. Customers rely on its services to provide actionable intelligence in real time, collected from a wide range of deployed sensors, utilizing the latest in edge and cloud-based analytics. These capabilities improve public safety and operational efficiency for both public sector and commercial clients. Founded in 2006, Airship AI is U.S. owned and operated, headquartered in Redmond, Washington. Airship's product suite comprises three core offerings: Acropolis, the enterprise software stack; Command, the family of viewing clients; and Outpost, edge hardware and software AI offerings.

Intrinsic

Intrinsic is an AI platform that focuses on building the next generation of intelligent automation, making robotics more accessible and valuable for developers and businesses. The platform offers a range of capabilities and skills to develop intelligent solutions, from perception to motion planning and sensor-based controls. Intrinsic aims to simplify the programming, usage, and innovation of robots, enabling them to become usable tools for millions of users.

EverSQL

EverSQL is an AI-powered tool designed for SQL query optimization, database observability, and cost reduction for PostgreSQL and MySQL databases. It automatically optimizes SQL queries using smart AI-based algorithms, provides ongoing performance insights, and helps reduce monthly database costs by offering optimization recommendations. With over 100,000 professionals trusting EverSQL, it aims to save time, improve database performance, and enhance cost-efficiency without accessing sensitive data.

Reality AI Software

Reality AI Software is an Edge AI software development environment that combines advanced signal processing, machine learning, and anomaly detection on every MCU/MPU Renesas core. The software is underpinned by the proprietary Reality AI ML algorithm that delivers accurate and fully explainable results supporting diverse applications. It enables features like equipment monitoring, predictive maintenance, and sensing user behavior and the surrounding environment with minimal impact on the Bill of Materials (BoM). Reality AI software running on Renesas processors helps deliver endpoint intelligence in products across various markets.

Just Walk Out technology

Just Walk Out technology is a checkout-free shopping experience that allows customers to enter a store, grab whatever they want, and quickly get back to their day, without having to wait in a checkout line or stop at a cashier. The technology uses either camera vision and sensor fusion or RFID technology, so customers can simply walk away with their items. Just Walk Out technology is designed to increase revenue with cost-optimized technology, maximize space productivity, increase throughput, optimize operational costs, and improve shopper loyalty.

Cambridge Mobile Telematics

Cambridge Mobile Telematics (CMT) is the world's largest telematics service provider, dedicated to making roads and drivers safer. Their AI-driven platform, DriveWell Fusion®, utilizes sensor data from various IoT devices to analyze and improve vehicle and driver behavior. CMT collaborates with auto insurers, automakers, gig companies, and the public sector to enhance risk assessment, safety, claims, and driver improvement programs. With headquarters in Cambridge, MA, and global offices, CMT protects millions of drivers worldwide daily.

0 - Open Source Tools

12 - OpenAI GPTs

Sensory Integration Guide

Your personalized guide to all things sensory, including Sensory Processing Disorder and Sensory Integration Therapy.

Sensory Supporter

A supportive guide for managing sensory dysregulation with tailored advice.

Serenity Nova

Serenity Nova guides individuals in exploring sensory awareness to navigate life's continuum, fostering harmony and personal freedom.

Raspberry Pi Pico Master

Expert in MicroPython, C, and C++ for Raspberry Pi Pico, RP2040, and other microcontroller-oriented applications.