Best AI tools for Processor

20 AI Tool Sites

Hailo

Hailo provides high-performance AI processors for edge devices, including generative AI accelerators, AI vision processors, and general-purpose AI accelerators. Its chips run deep learning applications directly on edge devices in industries such as automotive, security, industrial automation, retail, and personal computing.

Graphcore

Graphcore offers a cloud platform that accelerates machine learning workloads, including generative AI, on its Intelligence Processing Unit (IPU) hardware. It provides cloud services, pre-trained models, optimized inference engines, and APIs for adding AI to enterprise applications. With Graphcore, users can build and deploy AI-native products using technologies such as large language models (LLMs), natural language processing (NLP), and computer vision.

Slicker

Slicker is an AI-powered tool that recovers failed subscription payments to maximize subscription revenue. Its proprietary AI engine handles each failing payment individually, converting past-due invoices into revenue. Features include fully automated payment recovery, a machine learning model for retry decisions, quick setup, in-depth payment analytics, and enterprise-grade security, all aimed at reducing churn and increasing revenue. The tool is transparent: users can inspect and review every action the AI engine takes. Slicker integrates with popular billing and payment platforms, so it can be set up and start producing results quickly.

Silverwork Solutions

Silverwork Solutions is a fintech company that provides AI-powered mortgage automation. Its Digital Workforce Solutions are role-based autonomous bots that plug into the loan manufacturing process, from application through post-closing. The bots use AI to make predictions and decisions, handling repetitive tasks so that lenders' staff can focus on higher-value work.

DocVu.AI

DocVu.AI is an intelligent mortgage document processing solution that uses AI/ML to handle complex documents. It automates complex operational rules to streamline business functions and supports broader digital transformation efforts. It offers tailored solutions for invoice processing, customer onboarding, loan and mortgage processing, quality checks and audits, records digitization, and insurance claim processing.

Wisedocs

Wisedocs is an AI-powered platform for medical record reviews, summaries, and insights used in claims processing. Its features include medical chronologies, workflows, deduplication, intelligent OCR, and summary insights. Wisedocs speeds up medical record review for insurance, legal, and independent medical evaluation firms, automating tasks that were previously laborious and error-prone.

Docsumo

Docsumo is a Document AI platform built for scalability and efficiency. It covers document pre-processing, data extraction, review, and analysis. Features include document classification, touchless processing, ready-to-use AI models, automatic document splitting, and smart table extraction. Docsumo is used across a range of industries for data extraction, helping enterprises digitize document processing workflows, reduce manual effort, and improve data accuracy.

AlgoDocs

AlgoDocs is an AI platform for fast, secure, and accurate document data extraction, designed to streamline processes and free teams from tedious, error-prone manual data entry.

FormX.ai

FormX.ai is an AI-powered tool that extracts data from physical documents and converts it into digital formats. It supports a wide range of document types, including invoices, receipts, purchase orders, bank statements, contracts, HR forms, shipping orders, loyalty member applications, annual reports, business certificates, personnel licenses, and more. Pre-configured extraction models and an API make it straightforward to add data extraction to existing systems and workflows, saving time on manual data entry and improving accuracy.

Parsio

Parsio is an AI-powered document parser that can extract structured data from PDFs, emails, and other documents. It uses natural language processing to understand the context of the document and identify the relevant data points. Parsio can be used to automate a variety of tasks, such as extracting data from invoices, receipts, and emails.

Infrrd

Infrrd is an intelligent document automation platform focused on document extraction. It uses AI to enhance, classify, extract, and review documents with high accuracy, aiming to eliminate the need for human review. Infrrd provides process transformation solutions for use cases such as mortgage processing, invoice processing, insurance, and audit quality control (QC). Its document extraction engine is backed by more than 10 patents and award-winning algorithms. The platform streamlines document processing, improves data accuracy, and increases operational efficiency.

Base64.ai

Base64.ai is an AI-powered document intelligence platform for document processing and data extraction. Its features include generative AI agents, workflow automation, and data intelligence, which let users extract insights from both structured and unstructured documents. The platform aims to reduce processing time and increase productivity by eliminating manual document processing.

Novo AI

Novo AI is an AI application for financial institutions that uses generative AI and large language models to streamline operations, surface insights, and automate processes traditionally handled by people, such as claims processing and customer support. For insurance companies, it helps interpret claim documents, automate claims processing, optimize pricing strategies, and improve customer satisfaction. For banks, Novo AI automates document processing across multiple languages and simplifies adverse media screening by researching live internet data.

Plnar

Plnar is an AI-powered smartphone imagery platform that turns smartphone photos into accurate 3D models, precise measurements, and fast estimates. Users can capture spatially accurate imagery without special equipment, enabling self-service for policyholders and field solutions for adjusters. Plnar produces standardized data from a single smartphone image for claims, underwriting, and more, combining these services into one solution that eliminates inconsistencies and manual entry.

InsightPro

InsightPro is a workforce analytics platform specifically designed for healthcare payers. It integrates claims processing, quality assurance, training, and contact center functionalities, all powered by AI and machine learning. The platform offers capabilities such as dashboards, team productivity, workforce optimization, workload management, workforce access, real-time monitoring, and training management. InsightPro aims to improve operational efficiencies, reduce costs, enhance workforce planning, and foster team collaboration within payer organizations.

Cradl AI

Cradl AI is a no-code, AI-powered document workflow automation tool that helps organizations automate document tasks such as data extraction, processing, and validation. It uses AI to extract data from complex documents regardless of their layout or language. Cradl AI also integrates with other no-code tools, so custom AI models can be built and deployed without writing code.

PYQ

PYQ is an AI-powered platform that helps businesses automate document-related tasks, such as data extraction, form filling, and system integration. It uses natural language processing (NLP) and machine learning (ML) to understand the content of documents and perform tasks accordingly. PYQ's platform is designed to be easy to use, with pre-built automations for common use cases. It also offers custom automation development services for more complex needs.

Peslac AI

Peslac AI is an intelligent document processing and data extraction tool with custom workflows and secure digital signatures. It uses AI to extract data from many document types, turns unstructured documents into actionable insights, and automates document-heavy workflows. Peslac serves insurance, finance, healthcare, legal, and other industries by automating claims processing, compliance documentation, patient records processing, legal forms, and more. It offers API integration, precise data extraction, and customizable AI models.

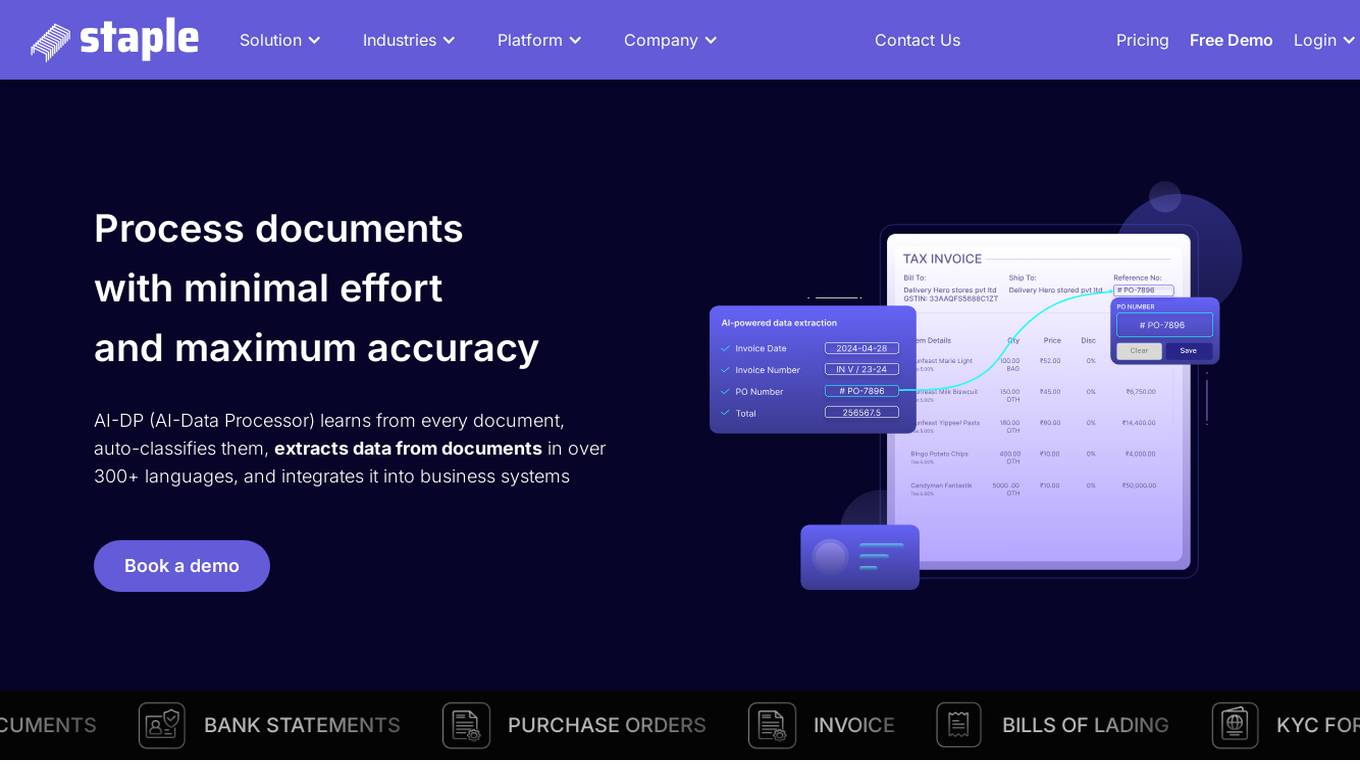

Staple AI Solutions

Staple AI Solutions offers AI-powered document processing for industries such as retail, manufacturing, healthcare, banking, logistics, and insurance. The tool extracts data from documents in over 300 languages, integrates with business systems, and provides workflows designed for high accuracy and productivity. It handles multinational complexity, classifies documents automatically, and matches related documents. Staple AI is used by enterprises in 58 countries for its zero-template approach, high accuracy, and productivity gains.

Neota

Neota is a no-code development platform that lets individuals build bespoke solutions without writing any code. Its capabilities include process streamlining and document automation, helping teams shorten time-to-market. Neota integrates with existing tools, provides polished user experiences, and holds international security certifications. The platform applies AI to practical business automation and serves roles such as Legal Operations, Insurance, and Human Resources. Neota is used globally and has a decade of experience helping teams accomplish more with less.

0 Open Source Tools

20 OpenAI GPTs

Veteran's Aid Assistant

Empathetic guide for VA claims, offering precise, reliable assistance.

Loan Management Software

Expert on loan management software, including loan origination and loan servicing software.

Terpene Tracker GPT

Web-enabled cannabis and terpene profile analyzer with image recognition.

PMJAY Financial Assistant

Expert in managing and tracking payment recoveries for Hope Hospital.

VA Compensation GPT

Guide to veterans' affairs, focusing on compensation and benefits, based on the updated 38 CFR Part 4.

CT Strain Names GPT 2.0

Translates CT cannabis strain names to true names, with detailed descriptions.

Abby

An always-available, friendly assistant from BBPD that helps with product queries and orders.

VA: Veterans Benefits Navigator (VBN)

Veterans Benefits Navigator (VBN) is a specialized chatbot that guides U.S. veterans through the complexities of VA benefits. It offers tailored, up-to-date information, locates the nearest VA facilities, and provides empathetic, confidential assistance for all benefit-related inquiries.

Cannabis Regulation Advisor by Yerba Buena

An AI specializing in New York State cannabis laws.