Best AI tools for Phishing Investigator

16 - AI Tool Sites

Dropzone AI

Dropzone AI is an award-winning AI application designed to reinforce Security Operations Centers (SOCs) by providing autonomous AI analysts. It replicates the techniques of elite analysts to autonomously investigate alerts, covering various use cases such as phishing, endpoint, network, cloud, identity, and insider threats. The application offers pre-trained AI agents that work alongside human analysts, automating investigation tasks and providing fast, detailed, and accurate reports. With built-in integrations with major security tools, Dropzone AI aims to reduce Mean Time to Respond (MTTR) and allow analysts to focus on addressing real threats.

Darktrace

Darktrace is a cybersecurity platform that leverages AI technology to provide proactive protection against cyber threats. It offers cloud-native AI security solutions for networks, emails, cloud environments, identity protection, and endpoint security. Darktrace's AI Analyst investigates alerts at the speed and scale of AI, mimicking human analyst behavior. The platform also includes services such as 24/7 expert support and incident management. Darktrace's AI is built on a unique approach where it learns from the organization's data to detect and respond to threats effectively. The platform caters to organizations of all sizes and industries, offering real-time detection and autonomous response to known and novel threats.

KnowBe4

KnowBe4 is a human risk management platform that offers security awareness training, cloud email security, phishing protection, real-time coaching, compliance training, and AI defense agents. The platform integrates AI to help organizations drive awareness, change user behavior, and reduce human risk. KnowBe4 is trusted by 70,000 organizations worldwide and is known for its comprehensive security products and customer-centric approach.

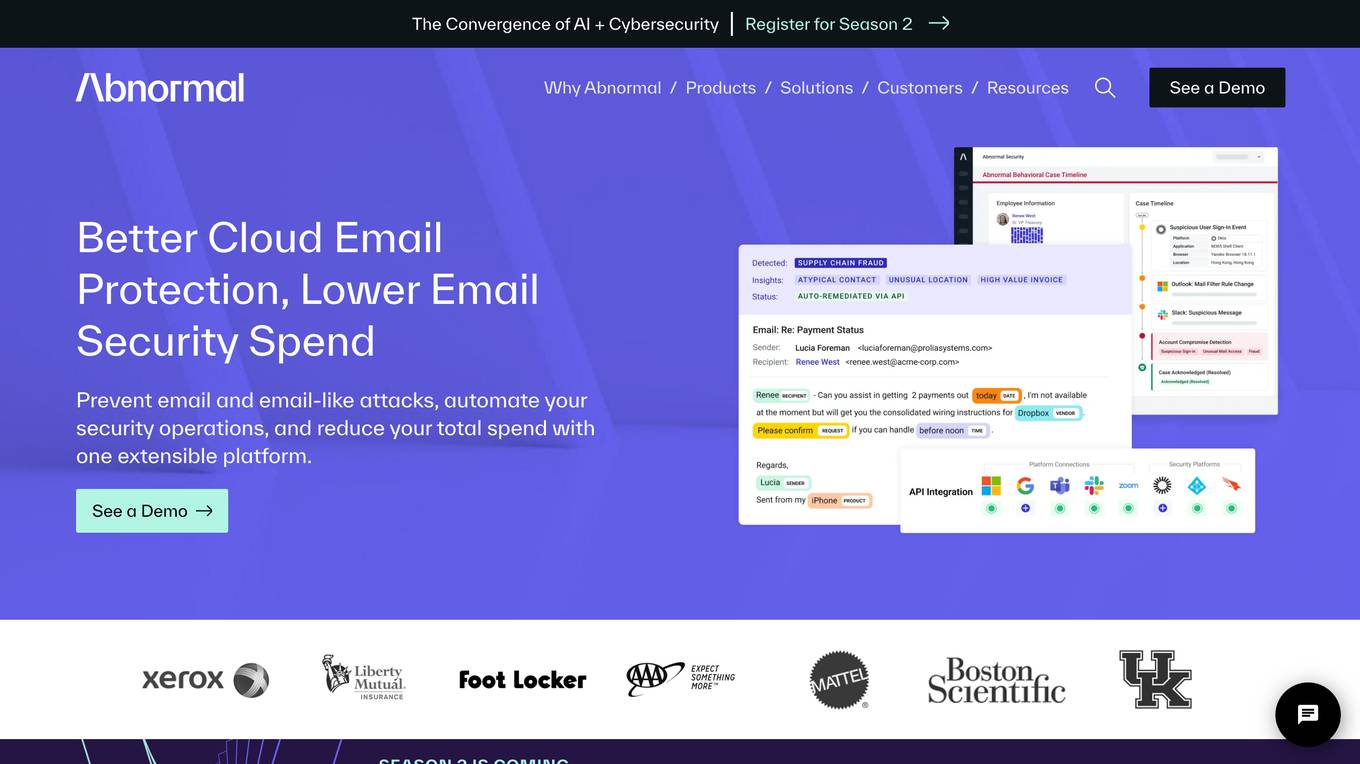

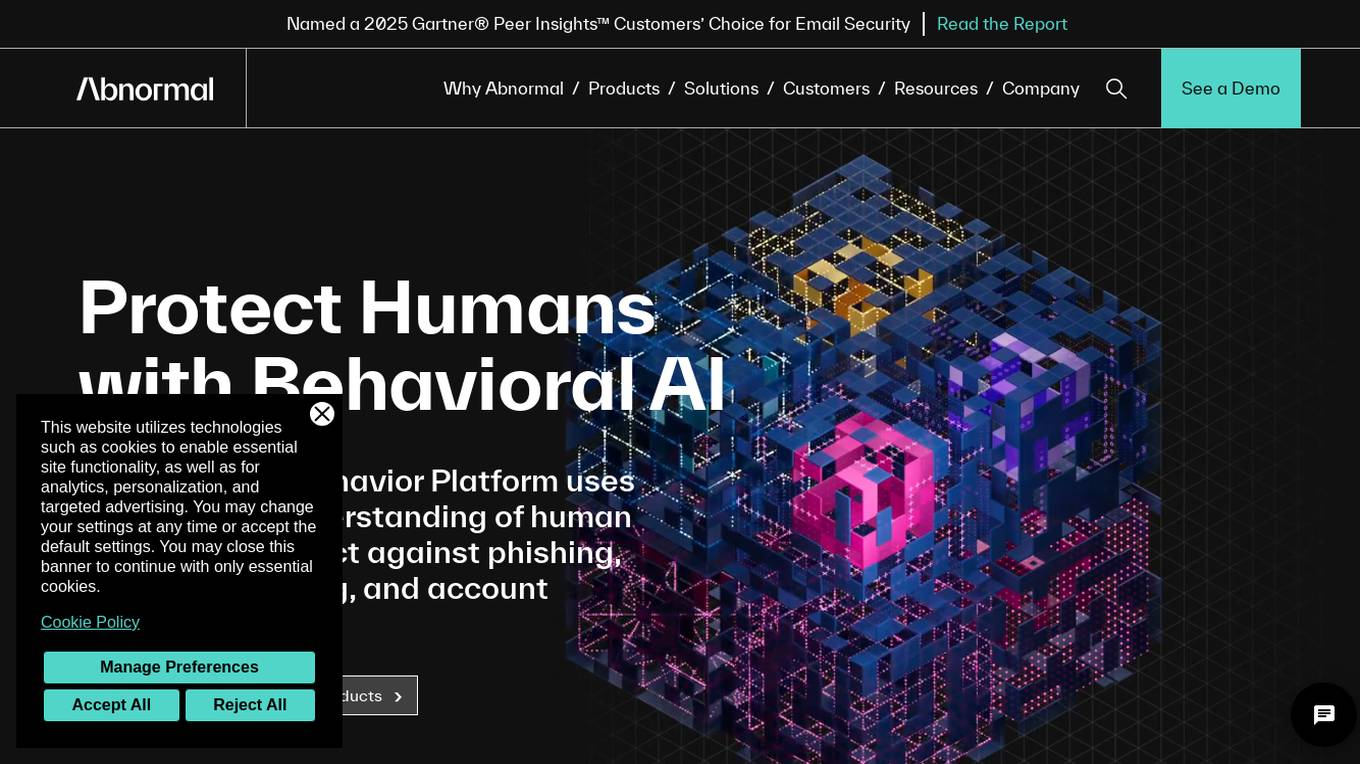

Abnormal

Abnormal is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email attacks such as phishing, social engineering, and account takeovers. The platform offers unified protection across email and cloud applications, behavioral anomaly detection, account compromise detection, data security, and autonomous AI agents for security operations. Abnormal is recognized as a leader in email security and AI-native security, trusted by over 3,000 customers, including 20% of the Fortune 500. The platform aims to autonomously protect humans, reduce risks, save costs, accelerate AI adoption, and provide industry-leading security solutions.

Abnormal Security

Abnormal Security is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email threats such as phishing, social engineering, and account takeovers. The platform is trusted by over 3,000 customers, including 25% of the Fortune 500 companies. Abnormal Security offers a comprehensive cloud email security solution, behavioral anomaly detection, SaaS security, and autonomous AI security agents to provide multi-layered protection against advanced email attacks. The platform is recognized as a leader in email security and AI-native security, delivering unmatched protection and reducing the risk of phishing attacks by 90%.

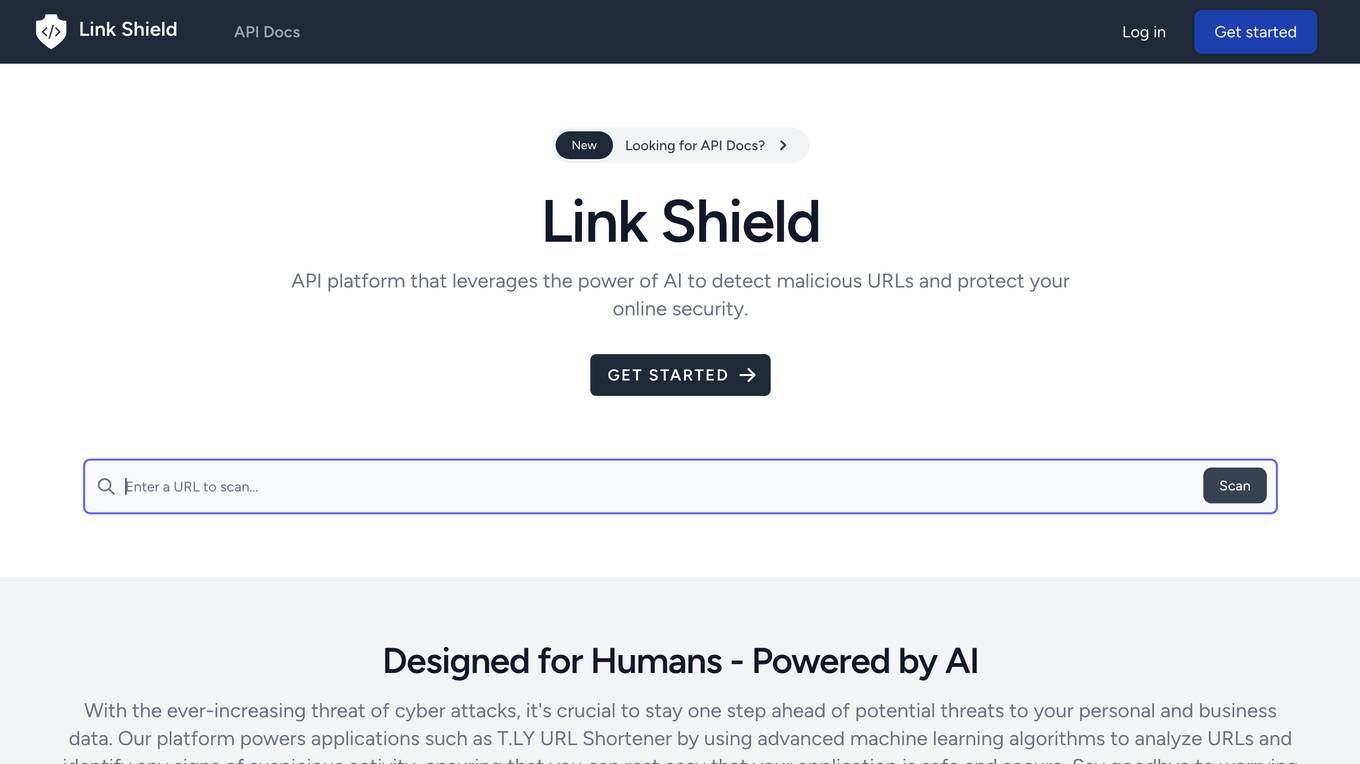

Link Shield

Link Shield is an AI-powered malicious URL detection API platform that helps protect online security. It utilizes advanced machine learning algorithms to analyze URLs and identify suspicious activity, safeguarding users from phishing scams, malware, and other harmful threats. The API is designed for ease of integration, affordability, and flexibility, making it accessible to developers of all levels. Link Shield empowers businesses to ensure the safety and security of their applications and online communities.

edu720

edu720 is a science-backed learning platform that uses AI and nanolearning to redefine how workforces learn and achieve their goals. It provides pre-built learning modules on various topics, including cybersecurity, privacy, and AI ethics. edu720's 360-degree approach ensures that all employees, regardless of their status or location, fully understand and absorb the knowledge conveyed.

Darktrace

Darktrace is an essential AI cybersecurity platform that offers proactive protection, cloud-native AI security, comprehensive risk management, and user protection across various devices. It accelerates triage by 10x, defends with confidence, and connects with various integrations. Darktrace ActiveAI Security Platform spots novel threats across organizations, providing solutions for ransomware, APTs, phishing, data loss, and more. With a focus on defense, Darktrace aims to transform cybersecurity by detecting and responding to known and novel threats in real-time.

BforeAI

BforeAI is an AI-powered platform that specializes in fighting cyberthreats with intelligence. The platform offers predictive security solutions to prevent phishing, spoofing, impersonation, hijacking, ransomware, online fraud, and data exfiltration. BforeAI uses cutting-edge AI technology for behavioral analysis and predictive results, going beyond reactive blocklists to predict and prevent attacks before they occur. The platform caters to various industries such as financial, manufacturing, retail, and media & entertainment, providing tailored solutions to address unique security challenges.

SpamBully

SpamBully is an email spam filter application designed for Outlook, Outlook Express, Windows Mail, and Windows Live Mail users. It utilizes artificial intelligence and server blacklists to intelligently filter emails, allowing users to keep their inbox spam-free. The application offers features such as Bayesian spam filtering, allow/block lists, punishment options for spammers, detailed email information, real-time blackhole list checks, mobile phone forwarding, statistics, and fraudulent link detection. With over 50,000 satisfied customers, SpamBully is known for its accuracy and ease of use in combating spam emails.
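
The Bayesian spam filtering mentioned above can be illustrated with a small naive Bayes classifier. This is a minimal sketch of the general technique, not SpamBully's actual implementation; the class name `NaiveBayesSpamFilter`, its methods, and the whitespace tokenizer are all assumptions made for illustration.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Toy naive Bayes filter (hypothetical; not any vendor's implementation)."""

    LABELS = ("spam", "ham")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.message_counts = {label: 0 for label in self.LABELS}

    @staticmethod
    def tokenize(text):
        # Simplistic tokenizer: lowercase and split on whitespace.
        return text.lower().split()

    def train(self, text, label):
        if label not in self.LABELS:
            raise ValueError(f"unknown label: {label!r}")
        self.word_counts[label].update(self.tokenize(text))
        self.message_counts[label] += 1

    def spam_probability(self, text):
        if min(self.message_counts.values()) == 0:
            raise ValueError("train at least one spam and one ham message first")
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_messages = sum(self.message_counts.values())
        log_scores = {}
        for label in self.LABELS:
            # Log prior: share of training messages carrying this label.
            score = math.log(self.message_counts[label] / total_messages)
            total_words = sum(self.word_counts[label].values())
            for word in self.tokenize(text):
                # Add-one smoothing keeps unseen words from zeroing the score.
                count = self.word_counts[label][word] + 1
                score += math.log(count / (total_words + len(vocab)))
            log_scores[label] = score
        # Normalize the two log scores into a probability of spam.
        top = max(log_scores.values())
        weights = {k: math.exp(v - top) for k, v in log_scores.items()}
        return weights["spam"] / (weights["spam"] + weights["ham"])


spam_filter = NaiveBayesSpamFilter()
spam_filter.train("win a free prize now", "spam")
spam_filter.train("claim your free money", "spam")
spam_filter.train("meeting agenda for monday", "ham")
spam_filter.train("lunch on monday", "ham")
print(spam_filter.spam_probability("free prize"))
```

A production filter would typically add better tokenization, per-user training data, and a tuned decision threshold; the sketch only shows how word frequencies from labeled mail feed the spam score.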

Breacher.ai

Breacher.ai is an AI-powered cybersecurity solution that specializes in deepfake detection and protection. It offers a range of services to help organizations guard against deepfake attacks, including deepfake phishing simulations, awareness training, micro-curriculum, educational videos, and certification. The platform combines advanced AI technology with expert knowledge to detect, educate, and protect against deepfake threats, ensuring the security of employees, assets, and reputation. Breacher.ai's fully managed service and seamless integration with existing security measures provide a comprehensive defense strategy against deepfake attacks.

Mimecast

Mimecast is an AI-powered email and collaboration security application that offers advanced threat protection, cloud archiving, security awareness training, and more. With a focus on protecting communications, data, and people, Mimecast leverages AI technology to provide industry-leading security solutions to organizations globally. The application is designed to defend against sophisticated email attacks, enhance human risk management, and streamline compliance processes.

Hive Defender

Hive Defender is an advanced, machine-learning-powered DNS security service that offers comprehensive protection against a vast array of cyber threats including but not limited to cryptojacking, malware, DNS poisoning, phishing, typosquatting, ransomware, zero-day threats, and DNS tunneling. Hive Defender transcends traditional cybersecurity boundaries, offering multi-dimensional protection that monitors both your browser traffic and the entirety of your machine’s network activity.
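
One of the threats listed above, typosquatting, is often screened for by comparing a queried domain against a list of trusted domains and flagging near misses. The following is a minimal sketch of that idea using Levenshtein edit distance; it is not Hive Defender's method, and `TRUSTED_DOMAINS`, `likely_typosquat`, and the `max_distance` threshold are hypothetical names and values chosen for illustration.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row with dynamic programming."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


# Hypothetical allow-list; a real deployment would maintain its own.
TRUSTED_DOMAINS = ["paypal.com", "microsoft.com", "google.com"]


def likely_typosquat(domain, trusted=TRUSTED_DOMAINS, max_distance=2):
    """Return the trusted domain that `domain` imitates, or None."""
    domain = domain.lower().rstrip(".")
    if domain in trusted:
        return None  # exact match with a trusted domain is not a squat
    for legit in trusted:
        if edit_distance(domain, legit) <= max_distance:
            return legit
    return None


print(likely_typosquat("paypa1.com"))
print(likely_typosquat("example.org"))
```

Edit distance alone misses homoglyph and alternate-TLD tricks, so it is best read as one signal among several rather than a complete detector.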

NodeZero™ Platform

Horizon3.ai Solutions offers the NodeZero™ Platform, an AI-powered autonomous penetration testing tool designed to enhance cybersecurity measures. The platform combines expert human analysis by Offensive Security Certified Professionals with automated testing capabilities to streamline compliance processes and proactively identify vulnerabilities. NodeZero empowers organizations to continuously assess their security posture, prioritize fixes, and verify the effectiveness of remediation efforts. With features like internal and external pentesting, rapid response capabilities, AD password audits, phishing impact testing, and attack research, NodeZero is a comprehensive solution for large organizations, ITOps, SecOps, security teams, pentesters, and MSSPs. The platform provides real-time reporting, integrates with existing security tools, reduces operational costs, and helps organizations make data-driven security decisions.

Sightengine

Sightengine offers content moderation and image analysis products built on APIs that automatically assess, filter, and moderate images, videos, and text. Its features include image moderation, video moderation, text moderation, AI image detection, and video anonymization. The service helps detect unwanted content, AI-generated images, and personal information in videos. It also offers tools to identify near-duplicates, spam, and abusive links, and to prevent phishing and circumvention attempts. The platform is described as fast, scalable, accurate, easy to integrate, and privacy compliant, making it suitable for industries such as marketplaces, dating apps, and news platforms.

Varonis

Varonis is an AI-powered data security platform that provides end-to-end data security solutions for organizations. It offers automated outcomes to reduce risk, enforce policies, and stop active threats. Varonis helps in data discovery & classification, data security posture management, data-centric UEBA, data access governance, and data loss prevention. The platform is designed to protect critical data across multi-cloud, SaaS, hybrid, and AI environments.

0 - Open Source Tools

8 - OpenAI GPTs

Phish or No Phish Trainer

Hone your phishing detection skills! Analyze emails, texts, and calls to spot deception. Become a security pro!

CyberNews GPT

CyberNews GPT is an assistant that provides the latest security news on cyber threats, hacks and breaches, malware, zero-day vulnerabilities, phishing, and scams.