Best AI tools for Language Modeling


20 - AI tool Sites

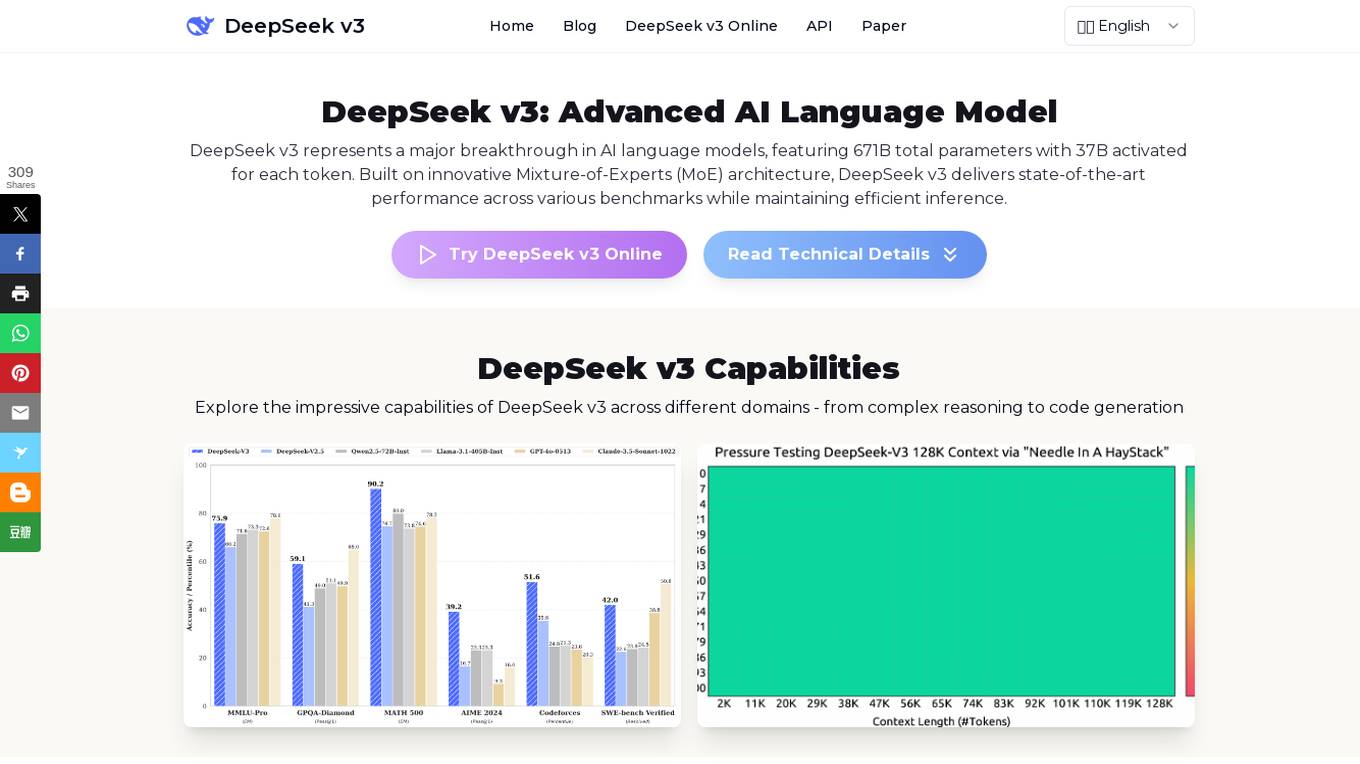

DeepSeek v3

DeepSeek v3 is a large language model built on a Mixture-of-Experts (MoE) architecture with 671B total parameters. It delivers state-of-the-art performance across various benchmarks while maintaining efficient inference. The model is pre-trained on 14.8 trillion high-quality tokens and excels at text generation, code completion, and mathematical reasoning. It also offers a 128K context window and Multi-Token Prediction.
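To illustrate the general idea behind a Mixture-of-Experts layer, the sketch below routes an input to only the top-k experts and mixes their outputs. It is a toy example in plain Python, not DeepSeek v3's actual routing: the function names `top_k_route` and `moe_forward`, the four lambda "experts", and the gate scores are all hypothetical.

```python
import math

def top_k_route(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    # Rank expert indices by gate score, highest first, and keep the top k
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1
    m = max(gate_scores[i] for i in top)
    exps = [math.exp(gate_scores[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_scores, k=2):
    """Evaluate only the k selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_scores, k))

# Four toy "experts" (hypothetical stand-ins for feed-forward sub-networks)
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # illustrative router scores for one token

routes = top_k_route(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(routes, output)
```

Because only k of the experts run for each input, the compute per token stays well below what the total parameter count would suggest, which is the usual motivation for MoE designs.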

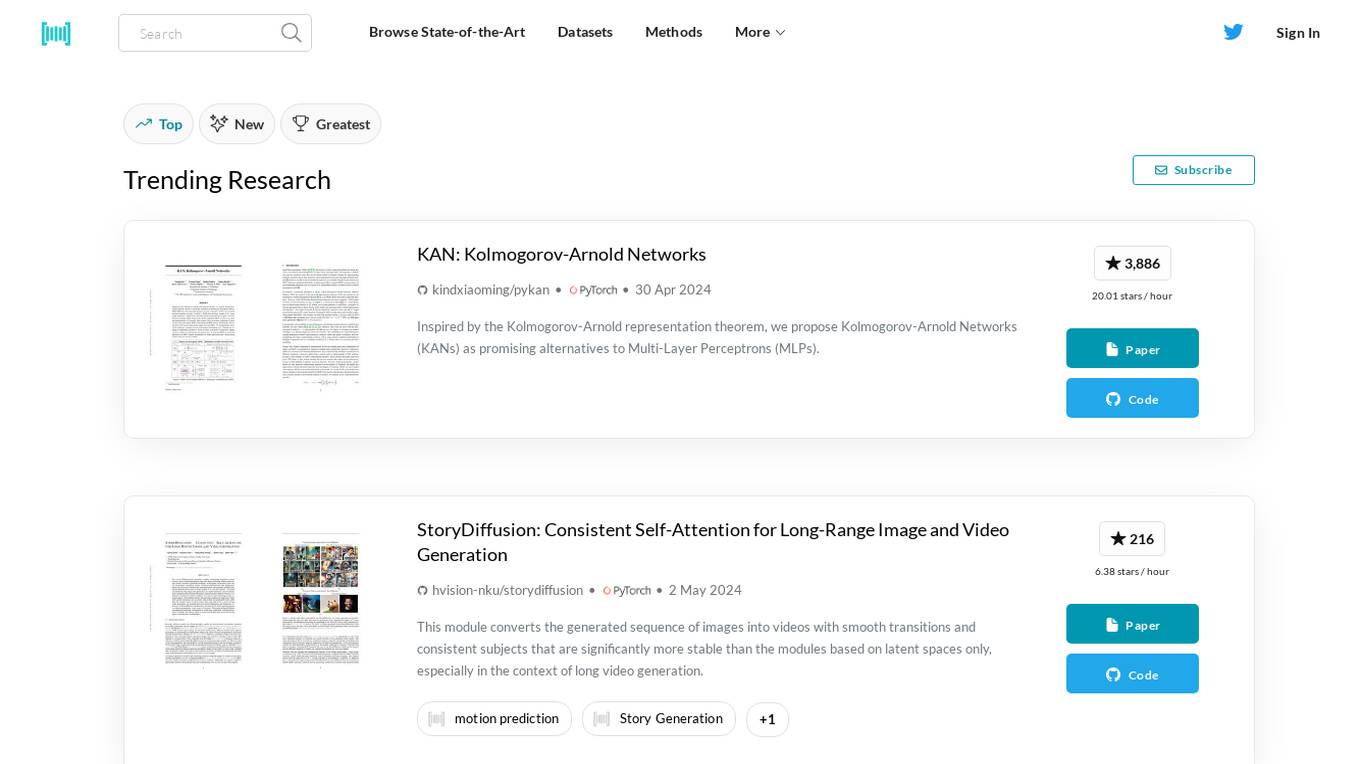

Papers With Code

Papers With Code is an AI tool that provides access to the latest research papers in the field of Machine Learning, along with corresponding code implementations. It offers a platform for researchers and enthusiasts to stay updated on state-of-the-art datasets, methods, and trends in the ML domain. Users can explore a wide range of topics such as language modeling, image generation, virtual try-on, and more through the collection of papers and code available on the website.

Clarifai

Clarifai is a full-stack AI developer platform that provides a range of tools and services for building and deploying AI applications. The platform includes a variety of computer vision, natural language processing, and generative AI models, as well as tools for data preparation, model training, and model deployment. Clarifai is used by a variety of businesses and organizations, including Fortune 500 companies, startups, and government agencies.

Clarifai

Clarifai is a full-stack AI platform that gives developers and ML engineers a production-grade deep learning platform. Its features include data preparation, model building, model operationalization, and AI workflows. Companies ranging from Fortune 500 firms to startups use Clarifai to build AI applications in industries such as retail, manufacturing, and healthcare.

Sensey.ai

Sensey.ai is a personal AI assistant designed to help startups with a variety of tasks, from scheduling meetings to managing finances. It uses natural language processing and machine learning to understand your needs and provide personalized recommendations.

Avanzai

Avanzai is a workflow automation tool tailored for financial services, offering AI-driven solutions to streamline processes and enhance decision-making. The platform enables funds to leverage custom data for generating valuable insights, from market trend analysis to risk assessment. With a focus on simplifying complex financial workflows, Avanzai empowers users to explore, visualize, and analyze data efficiently, without the need for extensive setup. Through open-source demos and customizable data integrations, institutions can harness the power of AI to optimize macro analysis, instrument screening, risk analytics, and factor modeling.

Ogma

Ogma is an interpretable symbolic general problem-solving model that uses a symbolic sequence modeling paradigm to address tasks that require reliability and complex decomposition, without hallucinations. It offers solutions in areas such as math problem-solving, natural language understanding, and resolution of uncertainty. The technology is designed to provide a structured approach to problem-solving by breaking tasks down into manageable components while ensuring interpretability and self-interpretability. Ogma aims to set benchmarks in problem-solving applications by offering a reliable and transparent methodology.

NumPy

NumPy is a library for the Python programming language, adding support for large, multi-dimensional arrays and high-level mathematical functions to perform operations on these arrays. It is the fundamental package for scientific computing with Python and is used in a wide range of applications, including data science, machine learning, and image processing. NumPy is open source and distributed under a liberal BSD license, and is developed and maintained publicly on GitHub by a vibrant, responsive, and diverse community.
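As a brief illustration of the array support described above, the following sketch builds a small two-dimensional array and applies a few common operations to it. The values are made up for the example; the calls used (`np.array`, `mean`, the `@` matrix-product operator) are standard NumPy.

```python
import numpy as np

# A 2 x 3 array of illustrative values
a = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])

col_means = a.mean(axis=0)   # mean of each column
scaled = a * 10              # elementwise multiplication by a scalar
product = a @ a.T            # (2 x 3) @ (3 x 2) matrix product

print(a.shape, col_means, product)
```

Operations like these run on whole arrays at once, so explicit Python loops over elements are usually unnecessary.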

Slidebean

Slidebean is an all-in-one pitch deck software that helps startups create professional and visually appealing pitch decks to raise funds from investors. It offers a range of features including an AI-powered pitch deck builder, collaboration tools, automated design, and analytics. Slidebean also provides pitch deck services where a team of experts helps founders with writing, design, financial modeling, and go-to-market strategy.

Julius

Julius is an AI-powered tool that helps users analyze data and files. It can perform various tasks such as generating visualizations, answering data questions, and performing statistical modeling. Julius is designed to save users time and effort by automating complex data analysis tasks.

Tableau Augmented Analytics

Tableau Augmented Analytics is a class of analytics powered by artificial intelligence (AI) and machine learning (ML) that expands a human’s ability to interact with data at a contextual level. It uses AI to make analytics accessible so that more people can confidently explore and interact with data to drive meaningful decisions. From automated modeling to guided natural language queries, Tableau's augmented analytics capabilities are powerful and trusted to help organizations leverage their growing amount of data and empower a wider business audience to discover insights.

Stability AI

Stability AI is an AI application that offers a suite of models for various modalities such as image, video, audio, 3D, and language. It provides cutting-edge generative AI technology with a focus on stability and quality. Users can access advanced AI models for tasks like text-to-image generation, video modeling, audio generation, and more. The application also offers licensing options for commercial use and self-hosting benefits.

SketchPro

SketchPro is the world's first AI design assistant for architecture and design. It allows users to create realistic 3D models and visualizations from simple sketches, elevations, or images. SketchPro is powered by AI, which gives it the ability to understand natural language instructions and generate designs that are both accurate and visually appealing. With SketchPro, architects and designers can save time and effort, and explore more creative possibilities.

Hanooman.AI

Hanooman.AI is an advanced artificial intelligence tool that leverages machine learning algorithms to provide intelligent solutions for various industries. The application offers a wide range of features such as natural language processing, image recognition, predictive analytics, and personalized recommendations. With Hanooman.AI, users can automate repetitive tasks, gain valuable insights from data, and enhance decision-making processes. The platform is designed to be user-friendly and scalable, making it suitable for both individuals and businesses looking to harness the power of AI technology.

Nyle

Nyle is an AI-powered operating system for e-commerce growth. It provides tools to generate higher profits and increase team productivity. Nyle's platform includes advanced market analysis, quantitative assessment of customer sentiment, and automated insights. It also offers collaborative dashboards and interactive modeling to facilitate decision-making and cross-functional alignment.

Virtual Friends

Virtual Friends is a website that allows users to create and interact with 3D AI-powered virtual friends. These virtual friends can be customized to look and act like anyone the user wants, and they can be used for a variety of purposes, such as companionship, entertainment, and education. Virtual Friends is still in development, but it has the potential to be a powerful tool for connecting people with AI-powered companions.

Xeven Solutions

Xeven Solutions is an AI development and solutions company that offers a wide range of services, including AI chatbot development, predictive modelling, mobile app development, ChatGPT integrations, custom software, natural language processing, digital marketing, machine learning, DevOps, and computer vision. The company aims to provide tailored AI solutions that improve decision-making, automate operations, save time, and boost profits. Xeven Solutions draws on expertise in machine learning, deep learning, and AI development to deliver solutions across various industries.

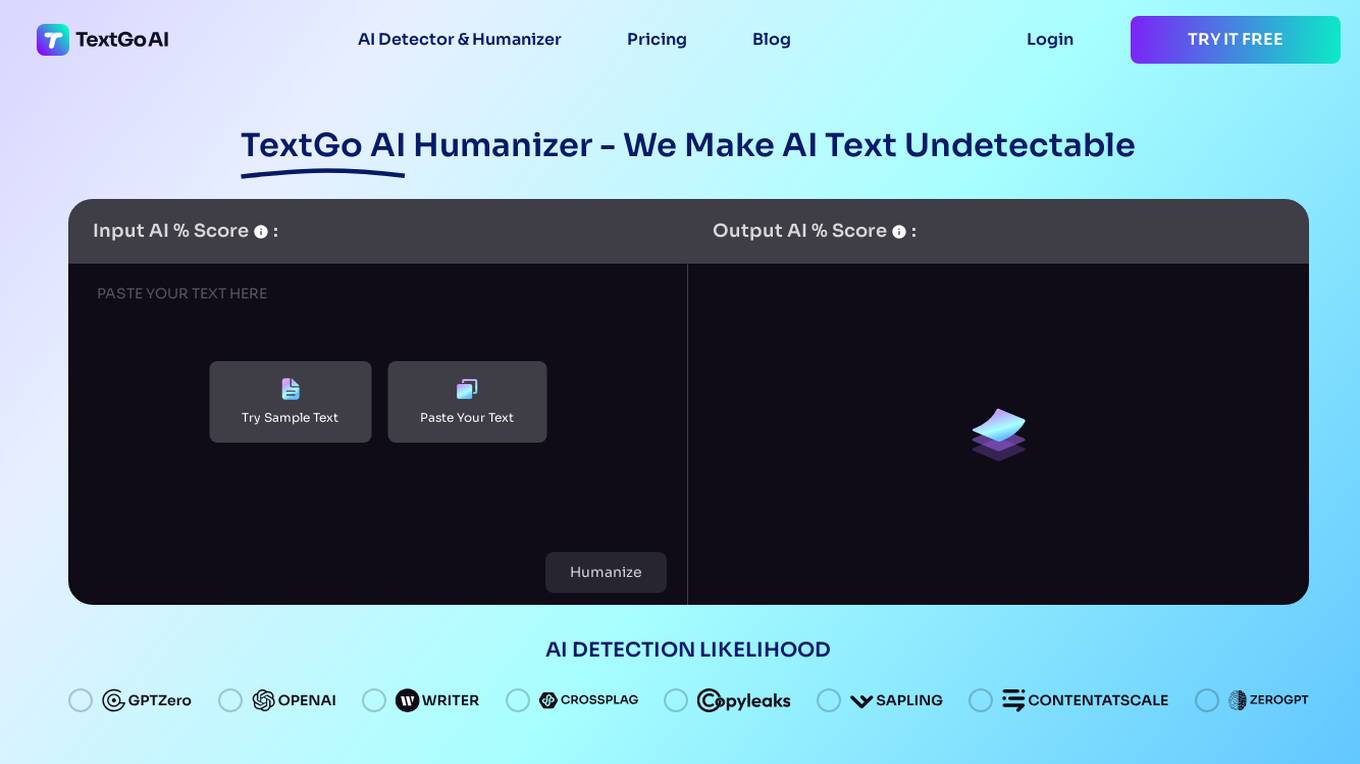

TextGo AI Humanizer

TextGo AI Humanizer is an advanced AI tool designed to humanize AI-generated text, making it undetectable by AI detection tools. It offers a unique solution to rewrite content in an original and plagiarism-free manner. TextGo AI surpasses AI detectors by understanding AI detection criteria such as NLP, perplexity, burstiness, and watermarks. With features like advanced modeling techniques, cohesive writing, and watermark removal, TextGo AI ensures the production of high-quality, human-like content. It supports over 80 languages and provides a free trial for users to experience its capabilities.

Language Reactor

Language Reactor is a web application that helps users learn foreign languages by watching videos with interactive subtitles. Users can hover over any word in the subtitles to see its translation, definition, and pronunciation. They can also click on any word to add it to their vocabulary list. Language Reactor also offers a variety of exercises to help users practice their listening, speaking, reading, and writing skills.

Language Atlas

Language Atlas is an online language learning platform that uses AI to help students learn French or Spanish. The platform offers a variety of courses, flashcards, and other resources to help students learn at their own pace. Language Atlas also has a global community of learners who can help students stay motivated and on track.

1 - Open Source Tools

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the following key features and examples:

* Seamless user experience of model compressions on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor)
* Advanced software optimizations and unique compression-aware runtime (released with the NeurIPS 2022 papers [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and the NeurIPS 2021 paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754))
* Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa)
* [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of [plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU.
* [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Models (LLMs) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). It supports the AMX, VNNI, AVX512F, and AVX2 instruction sets. Performance on Intel CPUs has been boosted, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

20 - OpenAI GPTs

Language Coach

Practice speaking another language like a local without being a local (use ChatGPT Voice via mobile app!)

Language Learning Content Creator

I make fun, engaging learning material for any language, topic and level!

Quickest Feedback for Language Learner

Helps improve language skills through interactive scenarios and feedback.

Create Short Stories to Learn a Language

2500+ word stories in target language with images, for language learning.

Indigenous Language Supporter

Supports Indigenous language learning, particularly Cree, Ojibwe, and Oji-Cree

Avash Language Companion

Avash Tutor: Expert in Avash alphabet, words, pronunciation, and culture.

Spreche - German Language Buddy

Bilingual companion for German-English translations and language learning.

Language Proficiency Level Self-Assessment

A language self-assessment guide with mobile app voice interaction support.