Best AI tools for Data Quality Analyst


20 - AI tool Sites

Welo Data

Welo Data is an AI tool that specializes in AI benchmarking, model assessment, and high-quality training datasets for AI models. The platform offers services such as supervised fine-tuning, reinforcement learning with human feedback, data generation, expert evaluations, and a data quality framework to support the development of world-class AI models. With over 27 years of experience, Welo Data combines language expertise and AI data to deliver exceptional training and performance evaluation solutions.

Lightup

Lightup is a cloud data quality monitoring tool with AI-powered anomaly detection, incident alerts, and data remediation capabilities for modern enterprise data stacks. It specializes in helping large organizations implement successful and sustainable data quality programs quickly and easily. Lightup's pushdown architecture allows for monitoring data content at massive scale without moving or copying data, providing extreme scalability and optimal automation. The tool empowers business users with democratized data quality checks and enables automatic fixing of bad data at enterprise scale.

Roundtable

Roundtable is a human-in-the-loop AI application designed to improve market research data quality by detecting and stopping bots and fraud in real-time. It uses behavioral biometrics to analyze user interactions, identify AI-generated responses, and ensure data integrity. The application offers seamless security, effortless integration, privacy-preserving design, and explainable results for transparent decision-making. Trusted by global platforms, Roundtable helps businesses maintain trust in their data and make informed decisions.

DQLabs

DQLabs is a modern data quality platform that leverages observability to deliver reliable and accurate data for better business outcomes. It combines the power of Data Quality and Data Observability to enable data producers, consumers, and leaders to achieve decentralized data ownership and turn data into action faster, easier, and more collaboratively. The platform offers features such as data observability, remediation-centric data relevance, decentralized data ownership, enhanced data collaboration, and AI/ML-enabled semantic data discovery.

Dot Group Data Advisory

Dot Group is an AI-powered data advisory and solutions platform that specializes in effective data management. It offers services to help businesses maximize the potential of their data estate, turning complex challenges into profitable opportunities using AI technologies. With a focus on data strategy, data engineering, and data transport, Dot Group provides innovative solutions to drive better profitability for its clients.

One Data

One Data is an AI-powered data product builder that offers a comprehensive solution for building, managing, and sharing data products. It bridges the gap between IT and business by providing AI-powered workflows, lifecycle management, data quality assurance, and data governance features. The platform enables users to easily create, access, and share data products with automated processes and quality alerts. One Data is trusted by enterprises and aims to streamline data product management and accessibility through Data Mesh or Data Fabric approaches, enhancing efficiency in logistics and supply chains. The application is designed to accelerate business impact with reliable data products and support cost reduction initiatives with advanced analytics and collaboration for innovative business models.

Branded Research

Branded Research, acquired by Dynata, provides access to AI-verified audience insights. It offers a range of research methods, including surveys, webcam studies, and emotional AI. With its advanced algorithms and extensive profiling, Branded helps businesses connect with their target audience and gain valuable insights to drive innovation. The company serves various industries, including tech, consumer goods, healthcare, and research agencies.

Lilac

Lilac is an AI tool designed to enhance data quality and exploration for AI applications. It offers features such as data search, quantification, editing, clustering, semantic search, field comparison, and fuzzy-concept search. Lilac enables users to accelerate dataset computations and transformations, making it a valuable asset for data scientists and AI practitioners. The tool is trusted by Alignment Lab and is recommended for working with LLM datasets.

Luminarity.ai

Luminarity.ai is a cutting-edge AI application that specializes in Product Data Intelligence. It leverages artificial intelligence to generate intelligence from product descriptive data, such as 3D CAD models, 2D drawings, and specifications. By transforming data into actionable insights, Luminarity empowers businesses to enhance their competitiveness and automate complex decision-making processes. The application offers a new dimension of AI-based data utilization, enabling companies to significantly increase their competitive edge by leveraging their own enterprise data effectively.

DataBahn

DataBahn is an AI-powered data pipeline management platform that empowers global enterprises with intelligent tools to gather, manage, and move data reliably and quickly. It offers a comprehensive solution for data integration, management, and optimization, helping users save costs and time. The platform ensures real-time insights, agility, and value by automating data processes and providing complete data ownership and governance. DataBahn is trusted by ambitious companies and partners worldwide for its efficiency and effectiveness in handling data workflows.

Seudo

Seudo is a data workflow automation platform that uses AI to help businesses automate their data processes. It provides a variety of features to help businesses with data integration, data cleansing, data transformation, and data analysis. Seudo is designed to be easy to use, even for businesses with no prior experience with AI. It offers a drag-and-drop interface that makes it easy to create and manage data workflows. Seudo also provides a variety of pre-built templates that can be used to get started quickly.

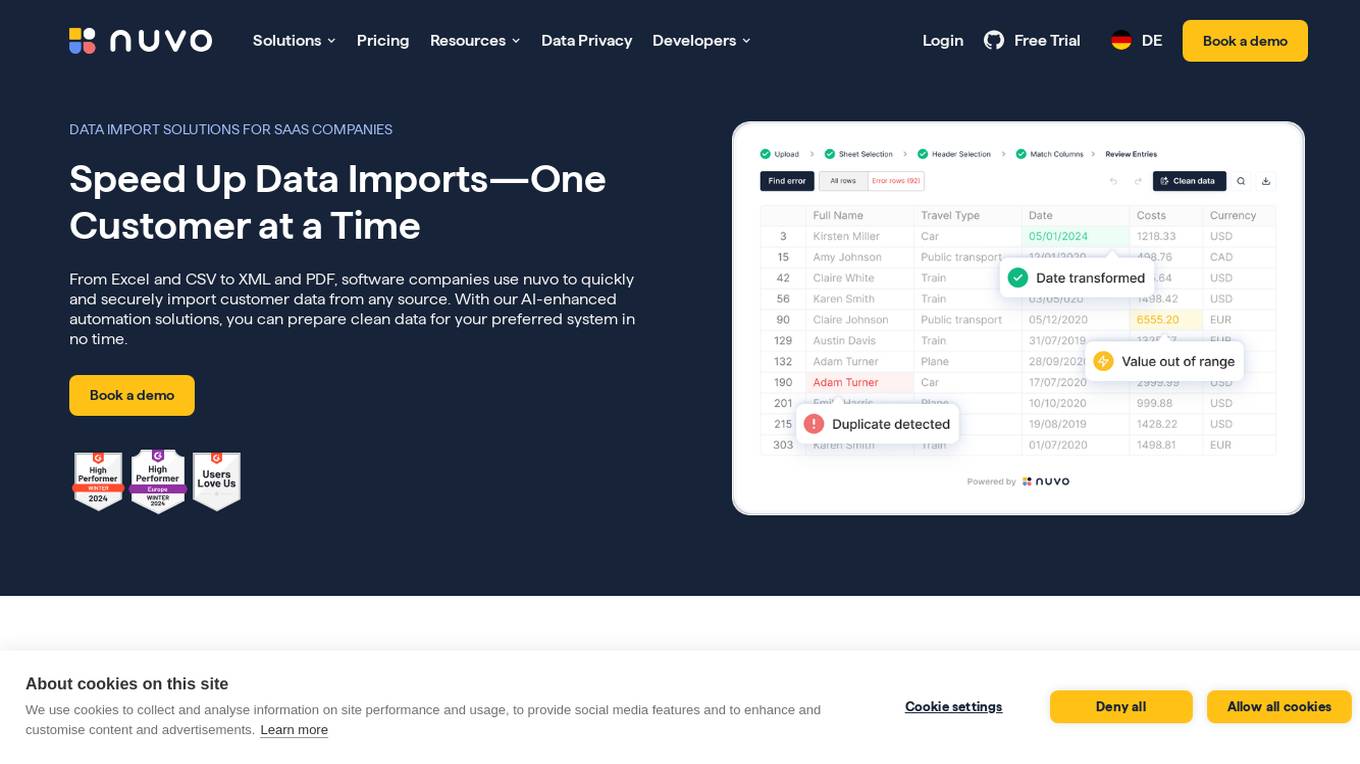

nuvo

nuvo is an AI-powered data import platform that offers fast, secure, and scalable data imports for software companies. It provides tools like the nuvo Data Importer SDK and nuvo Data Pipeline to streamline manual and recurring ETL data imports, enabling users to manage data imports independently. With AI-enhanced automation, nuvo helps prepare clean data for preferred systems quickly and efficiently, reducing manual effort and improving data quality. The platform allows users to upload unlimited data in various formats, match imported data to system schemas, clean and validate data, and import clean data into target systems with just a click.

Coginiti

Coginiti is a collaborative analytics platform designed for SQL developers, data scientists, engineers, and analysts. It offers capabilities such as an AI assistant, data mesh, database and object store support, powerful query and analysis, and sharing and reuse of curated assets. Coginiti empowers teams and organizations to manage collaborative practices, improve data efficiency, and deliver trusted data products faster. The platform integrates modular analytic development, collaborative versioned teamwork, and a data quality framework to enhance productivity and ensure data reliability. Coginiti also provides an AI-enabled virtual analytics advisor to boost team efficiency and empower data heroes.

Datuum

Datuum is an AI-powered data onboarding solution that offers seamless integration for businesses. It simplifies the data onboarding process by automating manual tasks, generating code, and ensuring data accuracy with AI-driven validation. Datuum helps businesses achieve faster time to value, reduce costs, improve scalability, and enhance data quality and consistency.

Evidently AI

Evidently AI is an open-source machine learning (ML) monitoring and observability platform that helps data scientists and ML engineers evaluate, test, and monitor ML models from validation to production. It provides a centralized hub for ML in production, including data quality monitoring, data drift monitoring, ML model performance monitoring, and NLP and LLM monitoring. Evidently AI's features include customizable reports, structured checks for data and models, and a Python library for ML monitoring. It is designed to be easy to use, with a simple setup process and a user-friendly interface. Evidently AI is used by over 2,500 data scientists and ML engineers worldwide, and it has been featured in publications such as Forbes, VentureBeat, and TechCrunch.

Tektonic AI

Tektonic AI is an AI application that empowers businesses by providing AI agents to automate processes, make better decisions, and bridge data silos. It offers solutions to eliminate manual work, increase autonomy, streamline tasks, and close gaps between disconnected systems. The application is designed to enhance data quality, accelerate deal closures, optimize customer self-service, and ensure transparent operations. Tektonic AI was founded by industry veterans with expertise in AI, cloud, and enterprise software.

Datasaur

Datasaur is an advanced text and audio data labeling platform that offers customizable solutions for various industries such as LegalTech, Healthcare, Financial, Media, e-Commerce, and Government. It provides features like configurable annotation, quality control automation, and workforce management to enhance the efficiency of NLP and LLM projects. Datasaur prioritizes data security with military-grade practices and offers seamless integrations with AWS and other technologies. The platform aims to streamline the data labeling process, allowing engineers to focus on creating high-quality models.

CloudResearch

CloudResearch is an online platform that offers tools for online research and participant recruitment. It provides academic and market researchers with immediate access to millions of diverse, high-quality respondents worldwide. The platform is designed to help researchers recruit vetted online participants, manage complex research projects, and elevate data quality. CloudResearch also offers AI-powered solutions for survey research, participant engagement, and data quality assurance.

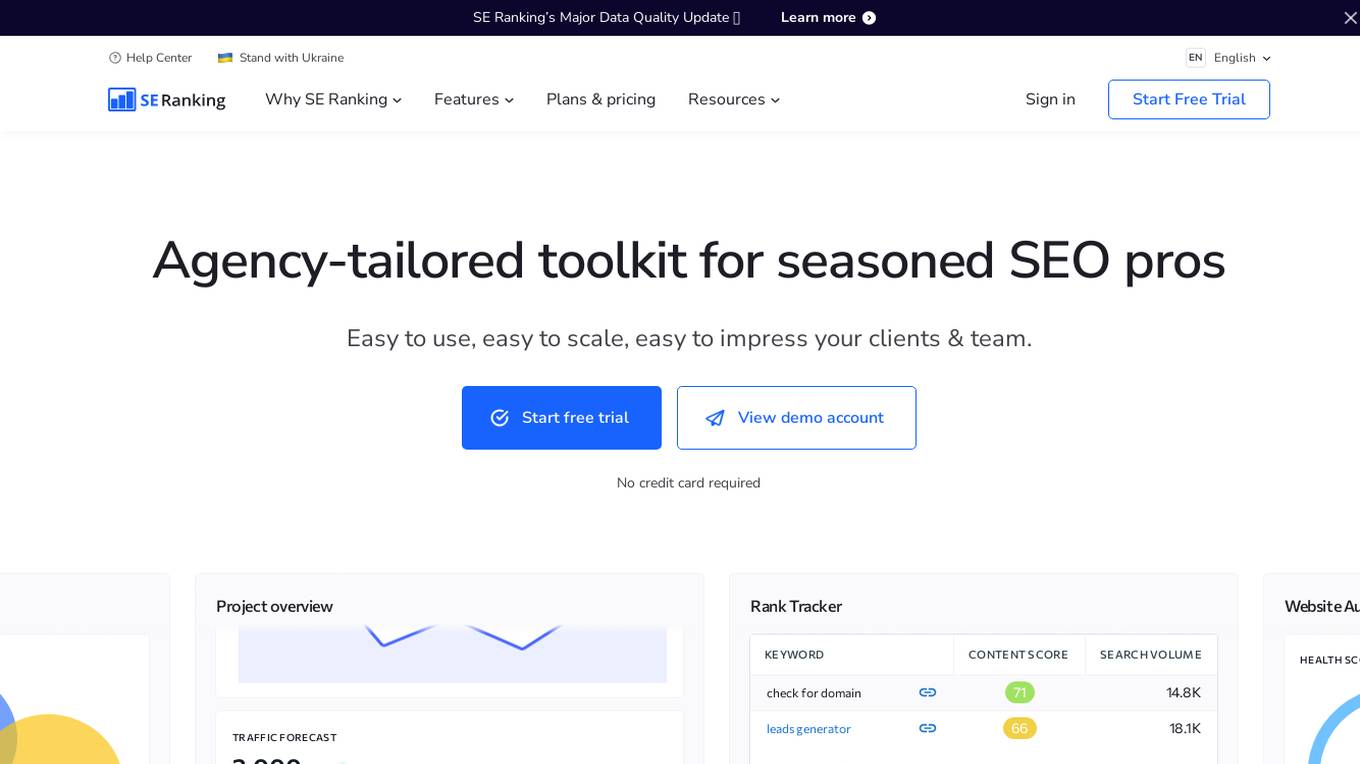

SE Ranking

SE Ranking is a robust SEO platform that offers a comprehensive suite of tools for agencies, enterprises, SMBs, and entrepreneurs. It provides features such as Rank Tracker, On-Page SEO Checker, Website Audit, Competitor Analysis Tool, and Backlink Checker. SE Ranking is trusted by users worldwide and offers a range of resources including educational content, webinars, and an Agency Hub. The platform also includes an AI Writer for content creation and optimization. With a focus on data quality and user satisfaction, SE Ranking aims to help users improve their SEO performance and online visibility.

Prolific

Prolific is a platform that helps users quickly find research participants they can trust. It offers free representative samples, a participant pool of domain experts, the ability to bring your own participants, and an API for integration. Prolific ensures data quality by verifying participants with bank-grade ID checks, running ongoing checks to identify bots, and allowing no AI participants. The platform allows users to easily set up accounts, access rich and comprehensive responses, and scale research projects efficiently.

0 - Open Source Tools

20 - OpenAI GPTs

DataQualityGuardian

A GPT-powered assistant specializing in data validation and quality checks for various datasets.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Data Governance Advisor

Ensures data accuracy, consistency, and security across the organization.

Missing Cluster Identification Program

I analyze and integrate missing clusters in data for coherent structuring.

Project Quality Assurance Advisor

Ensures project deliverables meet predetermined quality standards.

Maurice

Your go-to for designing, analyzing, and recording experiments, and for generating your lab report.

Project Performance Monitoring Advisor

Guides project success through comprehensive performance monitoring.

Academic Paper Evaluator

Enthusiastic about truth in academic papers, critical and analytical.

Triage Management and Pipeline Architecture

Strategic advisor for triage management and pipeline optimization in business operations.

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.

Biochem Helper: Research's Helper

A helpful guide for biochemical engineers, offering insights and reassurance.

E&L and Pharmaceutical Regulatory Compliance AI

This GPT specializes in understanding Extractables and Leachables studies, aligning with pharmaceutical guidelines, and aiding in the design and interpretation of relevant experiments.