Best AI tools for Data Extractor

20 - AI tool Sites

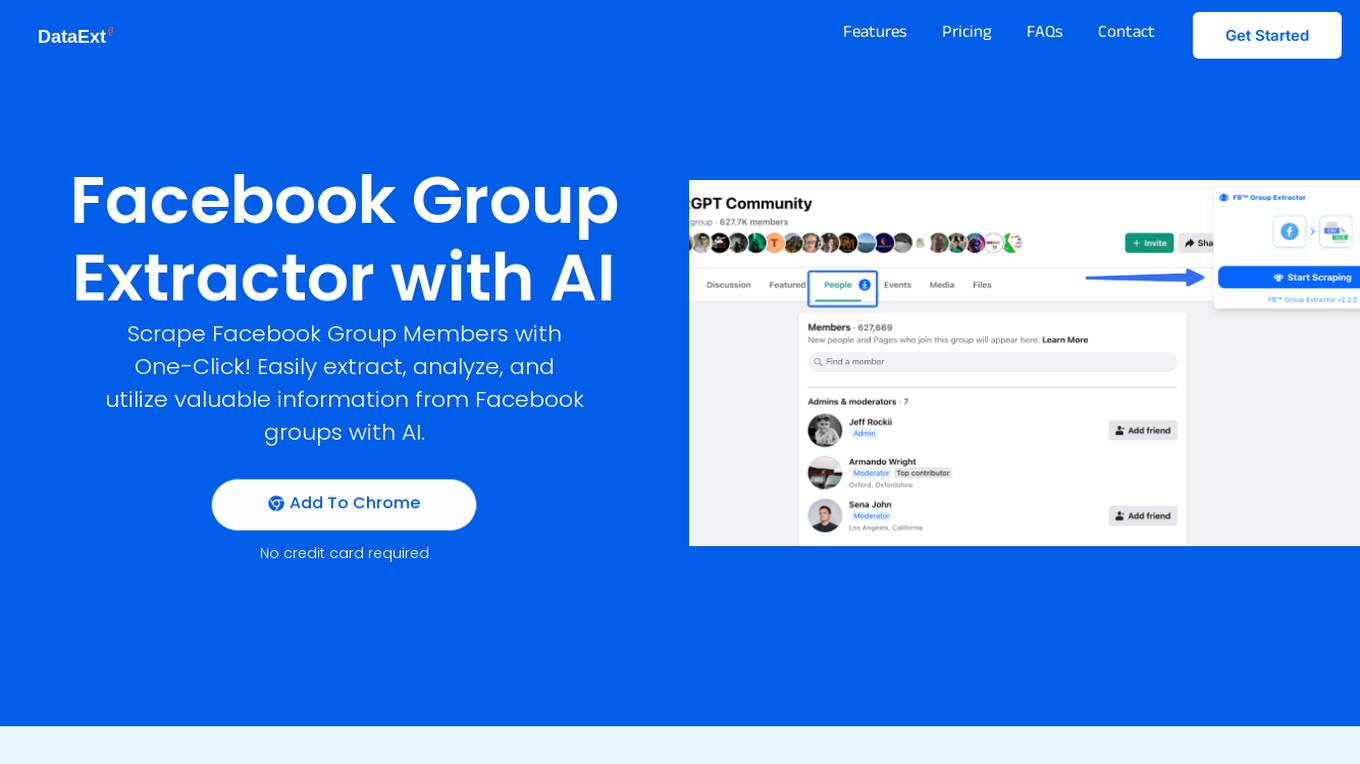

FB Group Extractor

FB Group Extractor is an AI-powered tool designed to scrape Facebook group members' data with one click. It allows users to easily extract, analyze, and utilize valuable information from Facebook groups using artificial intelligence technology. The tool provides features such as data extraction, behavioral analytics for personalized ads, content enhancement, user research, and more. With over 10k satisfied users, FB Group Extractor offers a seamless experience for businesses to enhance their marketing strategies and customer insights.

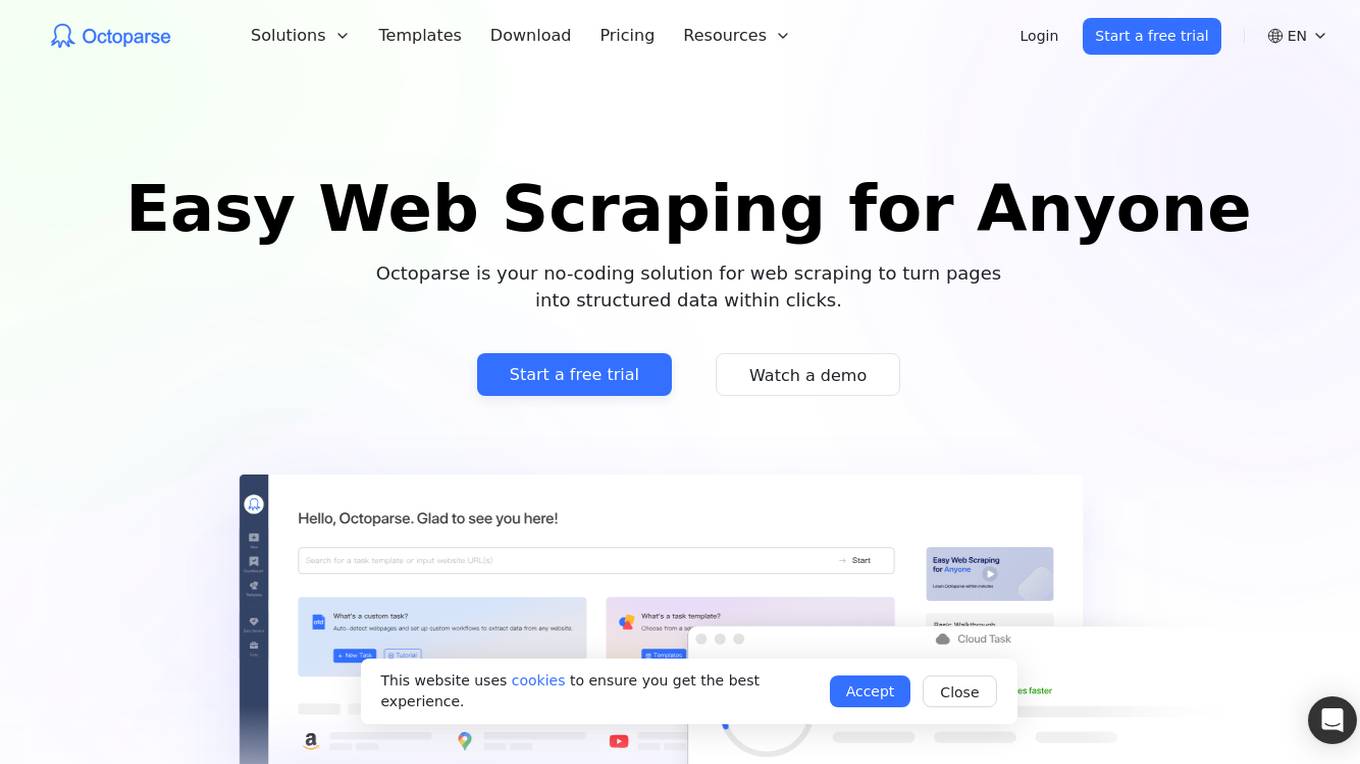

Octoparse

Octoparse is an AI web scraping tool that offers a no-coding solution for turning web pages into structured data with just a few clicks. It provides users with the ability to build reliable web scrapers without any coding knowledge, thanks to its intuitive workflow designer. With features like AI assistance, automation, and template libraries, Octoparse is a powerful tool for data extraction and analysis across various industries.

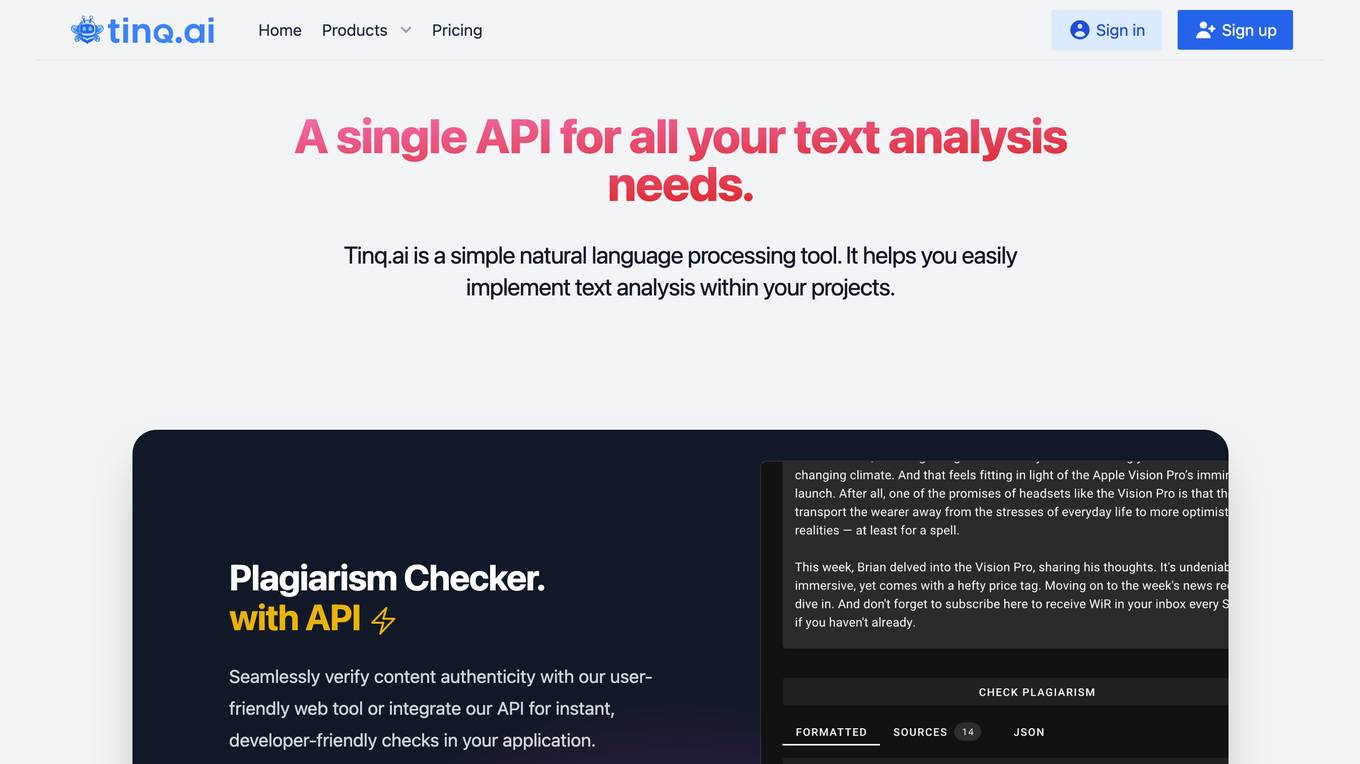

Tinq.ai

Tinq.ai is a natural language processing (NLP) tool that provides a range of text analysis capabilities through its API. It offers tools for tasks such as plagiarism checking, text summarization, sentiment analysis, named entity recognition, and article extraction. Tinq.ai's API can be integrated into applications to add NLP functionality, such as content moderation, sentiment analysis, and text rewriting.

Cogniflow

Cogniflow is a no-code AI platform that allows users to build and deploy custom AI models without any coding experience. The platform provides pre-built AI models for tasks in customer service, HR, operations, and more. Cogniflow also integrates with other applications, making it easy to connect your AI models to your existing workflow.

Reworkd

Reworkd is a web data extraction tool that uses AI to generate and repair web extractors on the fly. It allows users to retrieve data from hundreds of websites without the need for developers. Reworkd is used by businesses in a variety of industries, including manufacturing, e-commerce, recruiting, lead generation, and real estate.

ScrapeComfort

ScrapeComfort is an AI-driven web scraping tool that offers an effortless and intuitive data mining solution. It leverages AI technology to extract data from websites without the need for complex coding or technical expertise. Users can easily input URLs, download data, set up extractors, and save extracted data for immediate use. The tool is designed to cater to various needs such as data analytics, market investigation, and lead acquisition, making it a versatile solution for businesses and individuals looking to streamline their data collection process.

FormX.ai

FormX.ai is an AI-powered tool that automates data extraction and conversion to empower businesses with digital transformation. It offers Intelligent Document Processing, Optical Character Recognition, and a Document Extractor to streamline document handling and data extraction across various industries such as insurance, finance, retail, human resources, logistics, and healthcare. With FormX.ai, users can instantly extract document data, power their apps with API-ready data extraction, and enjoy low-code development for efficient data processing. The tool is designed to eliminate manual work, embrace seamless automation, and provide real-world solutions for streamlining data entry processes.

MyEmailExtractor

MyEmailExtractor is a free email extractor tool that helps you find and save emails from web pages to a CSV file. It's a great way to quickly increase your leads and grow your business. With MyEmailExtractor, you can extract emails from any website, including search engine results pages (SERPs), social media sites, and professional networking sites. The extracted emails are accurate and up-to-date, and you can export them to a CSV file for easy use.
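The workflow described above (find addresses in page text, then save them to a CSV file) can be sketched generically. This is a minimal illustration and not MyEmailExtractor's actual implementation; the regular expression, the function names `extract_emails` and `emails_to_csv`, and the sample text are all assumptions made for the example.

```python
import csv
import io
import re

# Simplified pattern (an assumption): real address syntax is broader than this.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(page_text: str) -> list[str]:
    """Return unique addresses in first-seen order, lowercased."""
    seen: dict[str, None] = {}
    for match in EMAIL_RE.findall(page_text):
        seen.setdefault(match.lower(), None)
    return list(seen)


def emails_to_csv(emails: list[str]) -> str:
    """Serialize addresses as a one-column CSV with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["email"])
    for address in emails:
        writer.writerow([address])
    return buf.getvalue()


# Hypothetical page fragment used only for demonstration.
sample = (
    'Contact <a href="mailto:Sales@example.com">sales@example.com</a> '
    "or support@example.org."
)
found = extract_emails(sample)
csv_text = emails_to_csv(found)
```

Lowercasing before deduplication keeps `Sales@example.com` and `sales@example.com` from being saved twice. A pattern match says nothing about whether an address is deliverable, so any accuracy check would be a separate step.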

Mixpeek Solutions

Mixpeek Solutions offers a Multimodal Data Warehouse for Developers, providing a Developer-First API for AI-native Content Understanding. The platform allows users to search, monitor, classify, and cluster unstructured data like video, audio, images, and documents. Mixpeek Solutions offers a range of features including Unified Search, Automated Classification, Unsupervised Clustering, Feature Extractors for Every Data Type, and various specialized extraction models for different data types. The platform caters to a wide range of industries and provides seamless model upgrades, cross-model compatibility, A/B testing infrastructure, and simplified model management.

Extracto.bot

Extracto.bot is an AI web scraping tool that automates the process of extracting data from websites. It is a no-configuration, intelligent web scraper that allows users to collect data from any site using Google Sheets and AI technology. The tool is designed to be simple, instant, and intelligent, enabling users to save time and effort in collecting and organizing data for various purposes.

Extracta.ai

Extracta.ai is an AI data extraction tool for documents and images that automates data extraction processes with easy integration. It allows users to define custom templates for extracting structured data without the need for training. The platform can extract data from various document types, including invoices, resumes, contracts, receipts, and more, providing accurate and efficient results. Extracta.ai ensures data security, encryption, and GDPR compliance, making it a reliable solution for businesses looking to streamline document processing.

Extractify.co

Extractify.co is a website that offers a variety of tools and services for extracting information from websites, documents, and other sources quickly and efficiently. Whether you need to extract text, images, or other types of data, the platform aims to simplify the process for individuals and businesses alike. Its interface is designed to be intuitive and easy to use, making it accessible to users of all skill levels.

Evolution AI

Evolution AI is an AI data extraction tool that specializes in extracting data from financial documents such as financial statements, bank statements, invoices, and other related documents. The tool uses generative AI technology to automate the data extraction process, eliminating the need for manual entry. Evolution AI is trusted by global industry leaders and offers exceptional customer service, advanced technology, and a one-stop shop for data extraction.

Airparser

Airparser is an AI-powered email and document parser tool that revolutionizes data extraction by utilizing the GPT parser engine. It allows users to automate the extraction of structured data from various sources such as emails, PDFs, documents, and handwritten texts. With features like automatic extraction, export to multiple platforms, and support for multiple languages, Airparser simplifies data extraction processes for individuals and businesses. The tool ensures data security and offers seamless integration with other applications through APIs and webhooks.

Jsonify

Jsonify is an AI tool that automates the process of exploring and understanding websites to find, filter, and extract structured data at scale. It uses AI-powered agents to navigate web content, replacing traditional data scrapers and providing data insights with speed and precision. Jsonify integrates with leading data analysis and business intelligence suites, allowing users to visualize and gain insights into their data easily. The tool offers a no-code dashboard for creating workflows and easily iterating on data tasks. Jsonify is trusted by companies worldwide for its ability to adapt to page changes, learn as it runs, and provide technical and non-technical integrations.

Kudra

Kudra is an AI-powered data extraction tool that offers dedicated solutions for finance, human resources, logistics, legal, and more. It effortlessly extracts critical data fields, tables, relationships, and summaries from various documents, transforming unstructured data into actionable insights. Kudra provides customizable AI models, seamless integrations, and secure document processing while supporting over 20 languages. With features like custom workflows, model training, API integration, and workflow builder, Kudra aims to streamline document processing for businesses of all sizes.

AgentQL

AgentQL is an AI-powered tool for painless data extraction and web automation. It eliminates the need for fragile XPath or DOM selectors by using semantic selectors and natural language descriptions to find web elements reliably. With controlled output and deterministic behavior, AgentQL allows users to shape data exactly as needed. The tool offers features such as extracting data, filling forms automatically, and streamlining testing processes. It is designed to be user-friendly and efficient for developers and data engineers.

Dataku.ai

Dataku.ai is an advanced data extraction and analysis tool powered by AI technology. It offers seamless extraction of valuable insights from documents and texts, transforming unstructured data into structured, actionable information. The tool provides tailored data extraction solutions for various needs, such as resume extraction for streamlined recruitment processes, review insights for decoding customer sentiments, and leveraging customer data to personalize experiences. With features like market trend analysis and financial document analysis, Dataku.ai empowers users to make strategic decisions based on accurate data. The tool ensures precision, efficiency, and scalability in data processing, offering different pricing plans to cater to different user needs.

Browse AI

Browse AI is an AI-powered data extraction platform that allows users to scrape and monitor data from any website without the need for coding. With Browse AI, users can extract data, monitor websites for changes, turn websites into APIs, and integrate data with over 7,000 apps. The platform offers prebuilt robots for use cases such as e-commerce, real estate, and recruitment. Browse AI is trusted by over 740,000 users worldwide for its reliability, scalability, and ease of use.

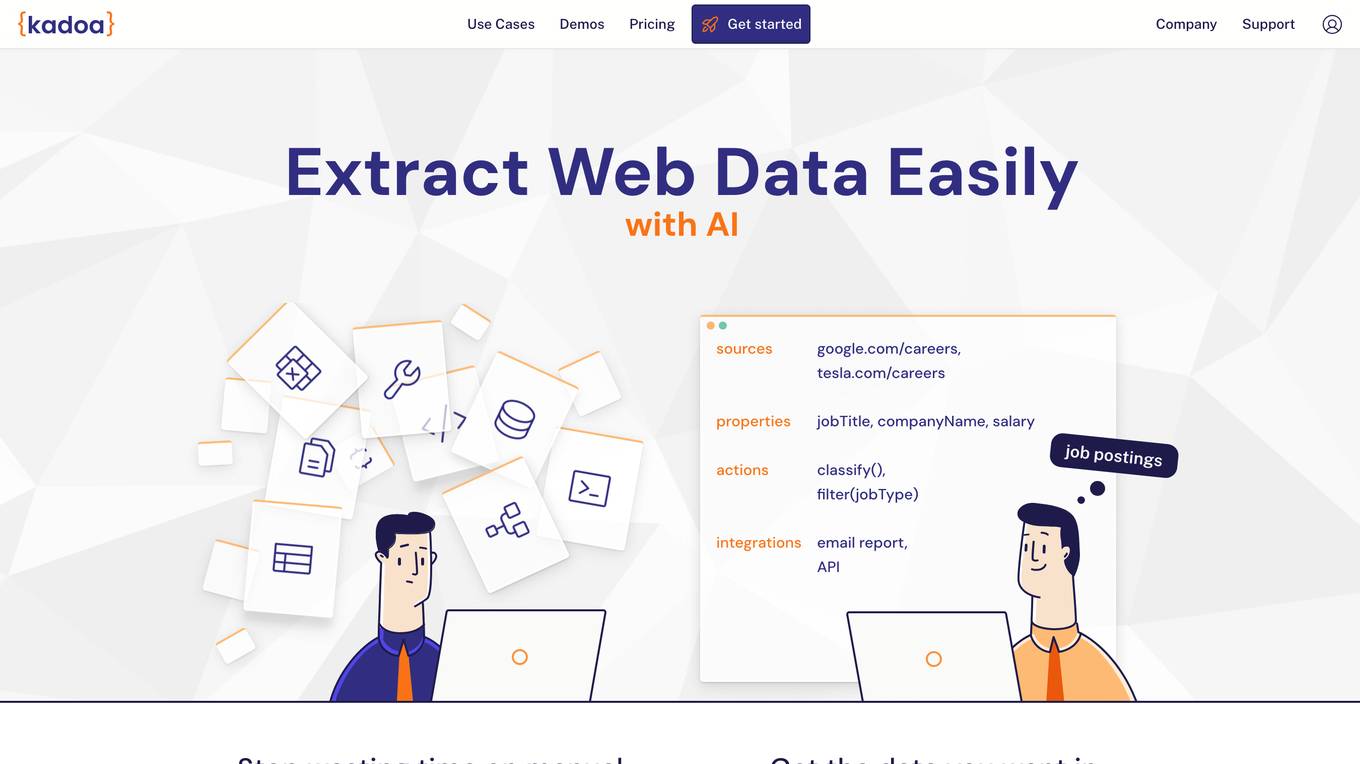

Kadoa

Kadoa is an AI web scraper tool that extracts unstructured web data at scale automatically, without the need for coding. It offers a fast and easy way to integrate web data into applications, providing high accuracy, scalability, and automation in data extraction and transformation. Kadoa is trusted by various industries for real-time monitoring, lead generation, media monitoring, and more, offering zero setup or maintenance effort and smart navigation capabilities.

0 - Open Source Tools

20 - OpenAI GPTs

Data Extractor Pro

Expert in data extraction and context-driven analysis. Can read most file types, including PDF, XLSX, Word, TXT, CSV, EML, and more.

Dissertation & Thesis GPT

An Ivy League Scholar GPT equipped to understand your research needs, formulate comprehensive literature review strategies, and extract pertinent information from a plethora of academic databases and journals. I'll then compose a peer-review-quality paper with citations.

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Property Manager Document Assistant

Provides analysis and data extraction of property management documents and contracts for managers.

Receipt CSV Formatter

Extract from receipts to CSV: Date of Purchase, Item Purchased, Quantity Purchased, Units
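The four-column layout named above can be illustrated with a short sketch. It shows only the CSV output step, not how this GPT reads a receipt; the `ReceiptLine` type, the `receipt_to_csv` function, and the sample rows are hypothetical, and treating "Units" as a unit-of-measure label is an assumption.

```python
import csv
import io
from dataclasses import dataclass

# Column names taken from the description above.
COLUMNS = ["Date of Purchase", "Item Purchased", "Quantity Purchased", "Units"]


@dataclass
class ReceiptLine:
    """One purchased item (hypothetical structure for this example)."""
    date_of_purchase: str
    item_purchased: str
    quantity_purchased: float
    units: str  # assumed to be a unit-of-measure label such as "kg"


def receipt_to_csv(lines: list[ReceiptLine]) -> str:
    """Write a header row followed by one CSV row per receipt line."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(COLUMNS)
    for line in lines:
        writer.writerow([
            line.date_of_purchase,
            line.item_purchased,
            line.quantity_purchased,
            line.units,
        ])
    return buf.getvalue()


# Made-up rows, for demonstration only.
sample_lines = [
    ReceiptLine("2024-03-01", "Flour, all-purpose", 2, "kg"),
    ReceiptLine("2024-03-01", "Eggs", 12, "each"),
]
receipt_csv = receipt_to_csv(sample_lines)
```

Using the `csv` module rather than joining strings by hand means item names that contain commas are quoted automatically, so the column count stays stable.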

Fill PDF Forms

Fill legal forms & complex PDF documents easily! Upload a file, provide data sources and I'll handle the rest.

Advanced Web Scraper with Code Generator

Generates web scraping code with accurate selectors.