Best AI tools for Captioning Specialist


20 AI Tool Sites

Echo Labs

Echo Labs is an AI-powered platform that provides captioning services for higher education institutions. The platform leverages cutting-edge technology to offer accurate and affordable captioning solutions, helping schools save millions of dollars. Echo Labs aims to make education more accessible by ensuring proactive accessibility measures are in place, starting with lowering the cost of captioning. The platform boasts a high accuracy rate of 99.8% and is backed by industry experts. With seamless integrations and a focus on inclusive learning environments, Echo Labs is revolutionizing accessibility in education.

Line 21

Line 21 is an intelligent captioning solution that provides real-time remote captioning services in over a hundred languages. Its caption delivery software combines human expertise with AI services to create, enhance, translate, and deliver live captions to various viewer destinations. Line 21 supports accessibility for corporations, concerts, societies, and screenings by delivering fast and accurate captions through low-latency delivery methods. The platform also features an AI Proofreader for real-time caption accuracy, caption encoding, fast caption delivery, and automatic translations in over 100 languages.

Live-captions.com

Live-captions.com is an AI-based live captioning service that offers real-time, cost-effective accessibility solutions for meetings and conferences. The service allows users to integrate live captions and interactive transcripts seamlessly, without the need for programming. With real-time processing capabilities, users can provide live captions alongside their RTMP streams or generate captions for recorded media. The platform supports multi-lingual options, with nearly 140 languages and dialects available. Live-captions.com aims to automate captioning services through its programmatic API, making it a valuable tool for enhancing accessibility and user experience.

AIEasyUse

AIEasyUse is a user-friendly website that provides easy-to-use AI tools for businesses and individuals. With more than 60 content creation templates, its AI-powered content writer helps users quickly generate high-quality content for blogs, websites, or marketing materials. Its AI-powered image generator creates custom images from parameters the user supplies. An AI-powered chatbot is available 24/7 to handle common inquiries about the platform or the user's content and to provide personalized support. An AI-powered code generator helps users write code for web or mobile apps faster and more efficiently. The platform can also convert speech files to text for transcription or captioning purposes.

Captions

Captions is an AI-powered creative studio that offers a wide range of tools to simplify the video creation process. With features like automatic captioning, eye contact correction, video trimming, background noise removal, and more, Captions empowers users to create professional-grade videos effortlessly. Trusted by millions worldwide, Captions leverages the power of AI to enhance storytelling and streamline video production.

AssemblyAI

AssemblyAI is an industry-leading Speech AI tool that offers powerful Speech AI models for accurate transcription and understanding of speech. It provides breakthrough speech-to-text models, real-time captioning, and advanced speech understanding capabilities. AssemblyAI is designed to help developers build world-class products with unmatched accuracy and transformative audio intelligence.

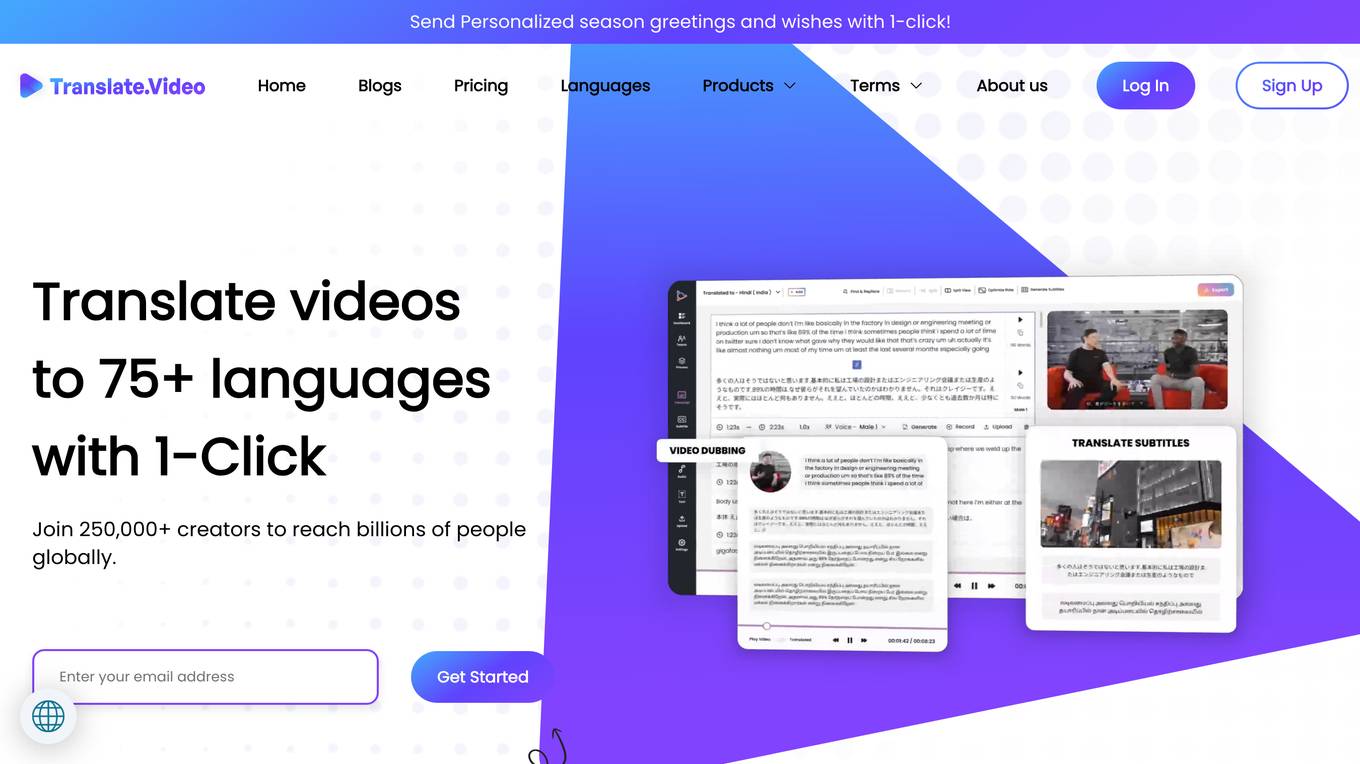

Translate.Video

Translate.Video is an AI multi-speaker video translation tool that offers speaker diarization, text-to-speech, and instant voice cloning features. It allows users to translate videos into over 75 languages with just one click, making content creation and translation efficient and accessible. The tool also provides plugins for popular design software like Photoshop, Illustrator, and Figma, enabling users to accelerate creative translation. Translate.Video is designed to help creators, influencers, and enterprises reach a global audience by simplifying the captioning, subtitling, and dubbing process.

Evolphin

Evolphin is a leading AI-powered platform for Digital Asset Management (DAM) and Media Asset Management (MAM) that caters to creatives, sports professionals, marketers, and IT teams. It offers advanced AI capabilities for fast search, robust version control, and Adobe plugins. Evolphin's AI automation streamlines video workflows, identifies objects, faces, logos, and scenes in media, generates speech-to-text for search and closed captioning, and enables automations based on AI engine identification. The platform allows for editing videos with AI, creating rough cuts instantly. Evolphin's cloud solutions facilitate remote media production pipelines, ensuring speed, security, and simplicity in managing creative assets.

AltTextGenerate

AltTextGenerate is a free online tool for generating alt text for images, enhancing SEO and accessibility. It uses AI-powered descriptions to provide suitable alt text for visuals. The tool leverages Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) to understand image content and generate descriptive text. AltTextGenerate offers a comprehensive solution for generating alt text across various platforms, including WordPress, Shopify, and other CMSs. Users can benefit from SEO advantages, improved website ranking, and enhanced user experience through descriptive alt text.

SceneXplain

SceneXplain is a cutting-edge AI tool that specializes in generating descriptive captions for images and summarizing videos. It leverages advanced artificial intelligence algorithms to analyze visual content and provide accurate and concise textual descriptions. With SceneXplain, users can easily create engaging captions for their images and obtain quick summaries of lengthy videos. The tool is designed to streamline the process of content creation and enhance the accessibility of visual media for a wide range of applications.

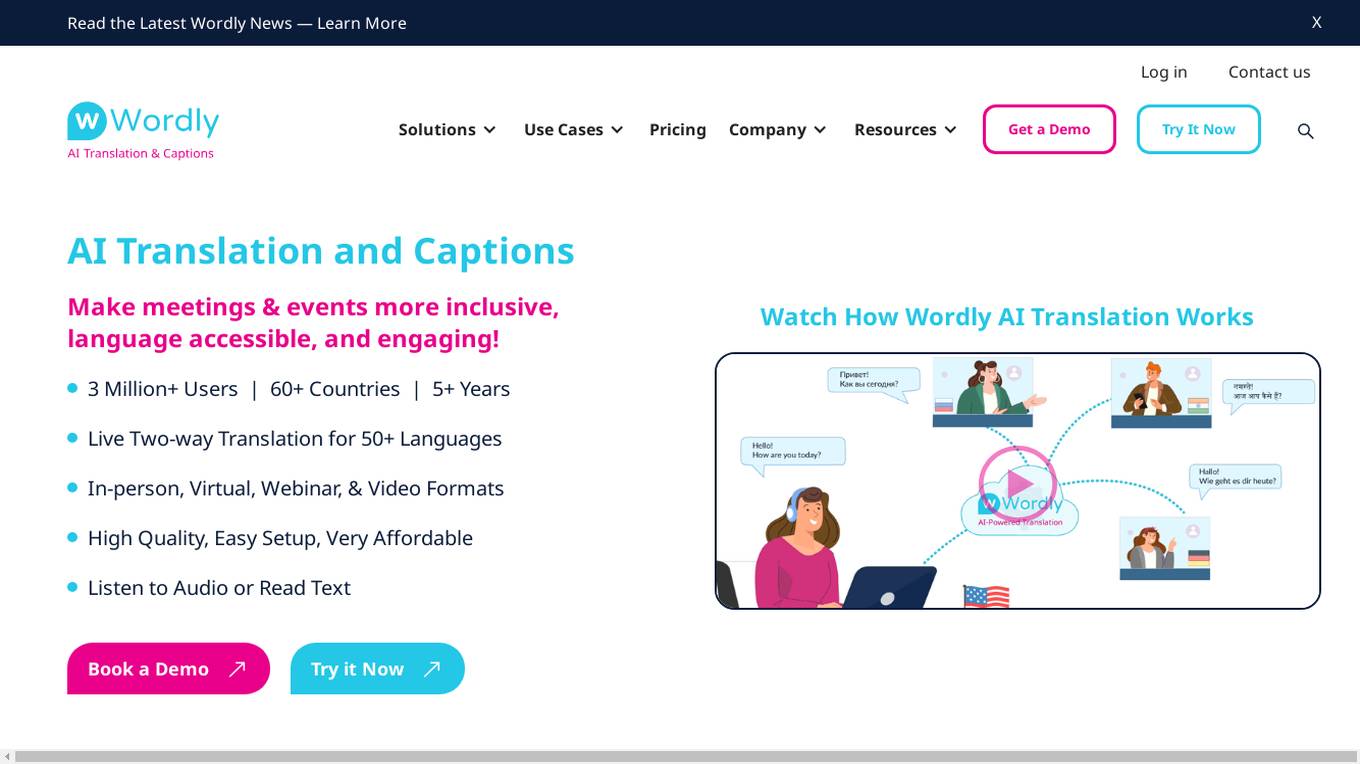

Wordly AI Translation

Wordly AI Translation is a leading AI application that specializes in providing live translation and captioning services for meetings and events. With over 3 million users across 60+ countries, Wordly offers a comprehensive solution to make events more inclusive, language accessible, and engaging. The platform supports two-way translation for 50+ languages in various event formats, including in-person, virtual, webinar, and video. Wordly ensures high-quality translation output through extensive language testing and optimization, along with powerful glossary tools. The application also prioritizes security and privacy, meeting SOC 2 Type II compliance requirements. Wordly's AI translation technology has been recognized for its speed, ease of use, and affordability, making it a trusted choice for event organizers worldwide.

ListenMonster

ListenMonster is a free video caption generator tool that provides unmatched speech-to-text accuracy. It allows users to generate automatic subtitles in multiple languages, customize video captions, remove background noise, and export results in various formats. ListenMonster aims to offer high-accuracy transcription at affordable prices, with instant results and support for 99 languages. The tool features a smart editor for easy customization, flexible export options, and automatic language detection. The site presents subtitles as a necessity, citing benefits such as global reach, SEO boost, accessibility, and content repurposing.

CaptionBot

CaptionBot is an AI tool developed by Microsoft Cognitive Services that provides automated image captioning. It uses advanced artificial intelligence algorithms to analyze images and generate descriptive captions. Users can upload images to the platform and receive accurate and detailed descriptions of the content within the images. CaptionBot.ai aims to assist users in understanding and interpreting visual content more effectively through the power of AI technology.

Verbit

Verbit is an AI-based transcription and captioning service that provides unmatched accuracy with actionable insights. It offers services such as live and recorded captioning, transcription, audio description, translation, note-taking, and dubbing. Verbit caters to various industries, including media & entertainment, legal, education, corporate & market research, and government. The platform leverages AI technologies such as its Automatic Speech Recognition engine (Captivate™) and its generative AI technology (Gen.V™) to provide real-time insights and seamless integration into existing workflows. Verbit aims to make speech-to-text conversion more accessible and productive for its users.

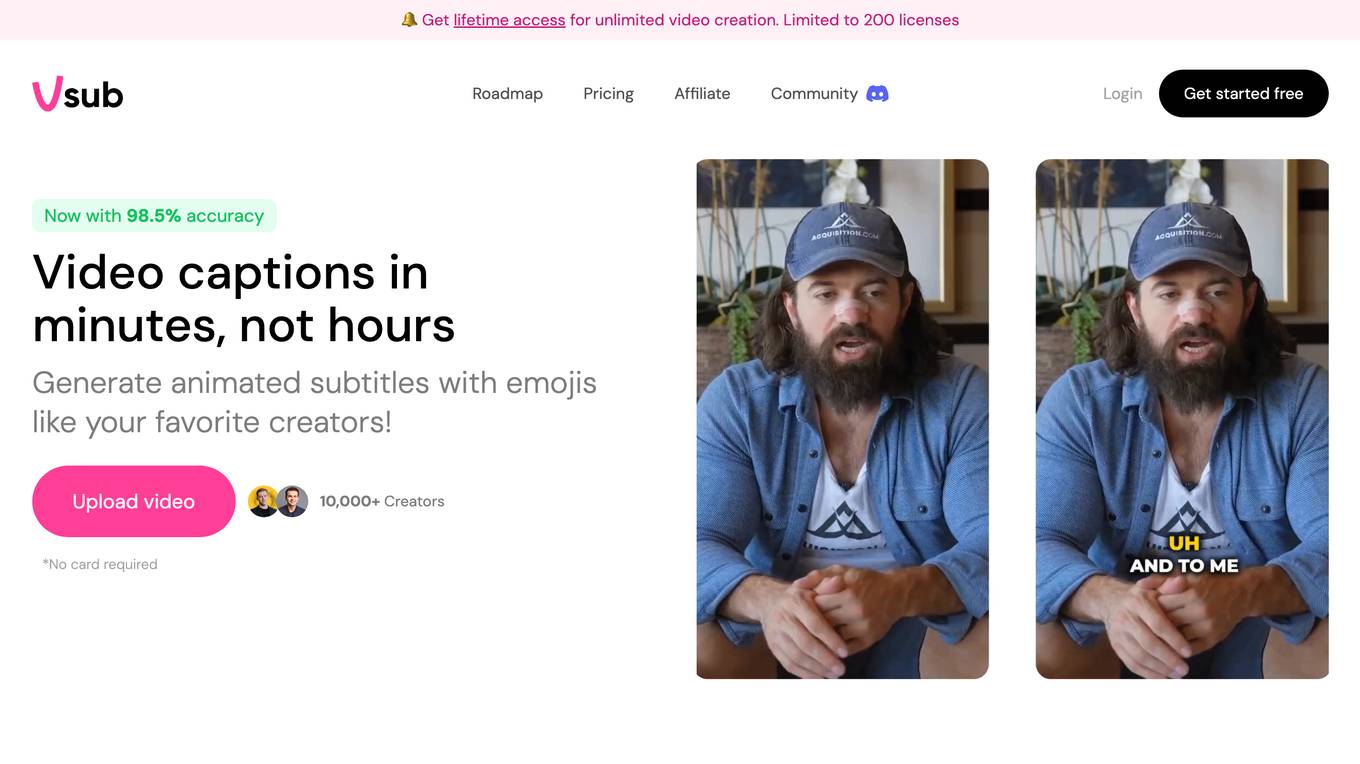

Vsub

Vsub is an AI-powered video captioning tool that makes it easy to create accurate and engaging captions for your videos. With Vsub, you can automatically generate captions, highlight keywords, and add animated emojis to your videos. Vsub also offers a variety of templates to help you create professional-looking captions. Vsub is the perfect tool for anyone who wants to create high-quality video content quickly and easily.

Vsub

Vsub is an AI-powered platform that allows users to create faceless videos quickly and easily. With a focus on video automation, Vsub offers a range of features such as generating AI shorts with one click, multiple templates for various niches, auto captions with animated emojis, and more. The platform aims to streamline the video creation process and help users save time by automating tasks that would otherwise require manual editing. Vsub is designed to cater to content creators, marketers, and individuals looking to create engaging videos without the need for on-camera appearances.

Felo Subtitles

Felo Subtitles is an AI-powered tool that provides live captions and translated subtitles for various types of content. It uses advanced speech recognition and translation algorithms to generate accurate and real-time subtitles in multiple languages. With Felo Subtitles, users can enjoy seamless communication and accessibility in different scenarios, such as online meetings, webinars, videos, and live events.

AudioShake

AudioShake is a cloud-based audio processing platform that uses artificial intelligence (AI) to separate audio into its component parts, such as vocals, music, and effects. This technology can be used for a variety of applications, including mixing and mastering, localization and captioning, interactive audio, and sync licensing.

UNUM

UNUM is a comprehensive social media management tool designed to help users create, publish, analyze, and grow their social media channels. With features like auto-posting, AI captioning, hashtag generation, photo and video editing, and a media library, UNUM streamlines the social media workflow. It also offers insights, reports, and a social media calendar to help users track their performance and plan ahead. UNUM is trusted by over 20 million creators and teams worldwide, providing a centralized platform for managing multiple social media accounts and optimizing content strategy.

Visionati

Visionati is an AI-powered platform that provides image captioning, descriptions, and analysis for everyone. It offers a comprehensive toolkit for visual analysis, including intelligent tagging, content filtering, and integration with various AI technologies. Visionati helps transform complex visuals into clear, actionable insights for digital marketing, storytelling, and data analysis. Users can easily create an account, access seamless integration, and leverage advanced analysis capabilities through the Visionati API.