Best AI tools for AI Testing Engineer

20 - AI tool Sites

Vocera

Vocera is an AI voice agent testing tool that allows users to test and monitor voice AI agents efficiently. It enables users to launch voice agents in minutes, ensuring a seamless conversational experience. With features like testing against AI-generated datasets, simulating scenarios, and monitoring AI performance, Vocera helps in evaluating and improving voice agent interactions. The tool provides real-time insights, detailed logs, and trend analysis for optimal performance, along with instant notifications for errors and failures. Vocera is designed to work for everyone, offering an intuitive dashboard and data-driven decision-making for continuous improvement.

Tusk

Tusk is an AI testing platform that helps users with API, unit, and integration testing. It leverages AI-enabled tests to prevent regressions, cover edge cases, and generate verified test cases for faster and safer shipping. Tusk offers features like shift-left testing, autonomous testing, self-healing tests, and code coverage enforcement. It is trusted by engineering leaders at fast-growing companies and aims to halve engineering release cycles by catching bugs early. The platform is designed to provide high-quality tests to reach coverage goals and increase code quality.

BugRaptors

BugRaptors is an AI-powered quality engineering services company that offers a wide range of software testing services. They provide manual testing, compatibility testing, functional testing, UAT services, mobile app testing, web testing, game testing, regression testing, usability testing, crowd-source testing, automation testing, and more. BugRaptors leverages AI and automation to deliver world-class QA services, ensuring seamless customer experience and aligning with DevOps automation goals. They have developed proprietary tools like MoboRaptors, BugBot, RaptorVista, RaptorGen, RaptorHub, RaptorAssist, RaptorSelect, and RaptorVision to enhance their services and provide quality engineering solutions.

TestArmy

TestArmy is an AI-driven software testing platform that offers an army of testing agents to help users achieve software quality by balancing cost, speed, and quality. The platform leverages AI agents to generate Gherkin tests based on user specifications, automate test execution, and provide detailed logs and suggestions for test maintenance. TestArmy is designed for rapid scaling and adaptability to changes in the codebase, making it a valuable tool for both technical and non-technical users.

Momentic

Momentic is an AI testing tool that automates testing for software applications. It streamlines regression testing, production monitoring, and UI automation. Momentic is designed to be simple to set up and easy to maintain, and its intuitive low-code editor helps teams create and deploy tests faster. The tool adapts to application changes, saves time with automated test maintenance, and runs tests in the cloud, locally, or in CI/CD pipelines.

Keploy

Keploy is an open-source AI-powered API, integration, and unit testing agent designed for developers. It offers a unified testing platform that uses AI to write and validate tests, maximizing coverage and minimizing effort. With features like automated test generation, record-and-replay for integration tests, and API testing automation, Keploy aims to streamline the testing process for developers. The platform also provides GitHub PR unit test agents, centralized reporting dashboards, and smarter test deduplication to enhance testing efficiency and effectiveness.
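To make the record-and-replay idea mentioned above concrete, here is a minimal, self-contained Python sketch. It is not Keploy's API: the `Recorder` class and the `fetch_price` and `order_total` functions are hypothetical names invented for illustration. The sketch only shows the general technique of capturing a dependency's responses once and then serving them back during later test runs.

```python
from typing import Any, Callable, Dict, List, Tuple


class Recorder:
    """Hypothetical helper: records calls to a dependency, then replays them."""

    def __init__(self, real_call: Callable[..., Any]) -> None:
        self.real_call = real_call
        self.tape: Dict[Tuple[Any, ...], Any] = {}
        self.replaying = False

    def __call__(self, *args: Any) -> Any:
        if self.replaying:
            # Replay mode: answer from the tape, never touch the real dependency.
            return self.tape[args]
        result = self.real_call(*args)
        self.tape[args] = result  # Record mode: remember the observed response.
        return result


def fetch_price(sku: str) -> int:
    """Stand-in for a slow or flaky external service (hypothetical)."""
    return {"apple": 3, "pear": 5}[sku]


def order_total(lookup: Callable[[str], int], skus: List[str]) -> int:
    """Code under test: sums prices obtained through the injected lookup."""
    return sum(lookup(sku) for sku in skus)


recorder = Recorder(fetch_price)
recorded_total = order_total(recorder, ["apple", "pear"])  # record pass

recorder.replaying = True
replayed_total = order_total(recorder, ["apple", "pear"])  # replay pass
```

In replay mode the code under test sees the same responses as in the recorded run, so the integration test can run without the real service being available.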

Autify

Autify is an AI testing company focused on solving challenges in automation testing. They aim to make software testing faster and easier, enabling companies to release faster and maintain application stability. Their flagship product, Autify No Code, allows anyone to create automated end-to-end tests for applications. Zenes, their new product, simplifies the process of creating new software tests through AI. Autify is dedicated to innovation in the automation testing space and is trusted by leading organizations.

Giskard

Giskard is an AI Red Teaming & LLM Security Platform designed to continuously secure LLM agents by preventing hallucinations and security issues in production. It offers automated testing to catch vulnerabilities before they reach users, and it is trusted by enterprise AI leaders to protect their data and reputation. The platform provides protection against a range of security attacks and vulnerabilities, offering end-to-end encryption, data residency & isolation, and compliance with GDPR, SOC 2 Type II, and HIPAA. Giskard helps teams uncover AI vulnerabilities, stop business failures at the source, unify testing across teams, and save time with continuous testing that prevents regressions.

Truesight

Goodeye Labs offers Truesight, an AI evaluation tool designed for domain experts to assess the performance of AI products without the need for extensive technical expertise. Truesight bridges the gap between domain knowledge and technical implementation, enabling users to evaluate AI-generated content against specific standards and factors. By empowering domain experts to provide judgment on AI performance, Truesight streamlines the evaluation process, reducing costs, meeting deadlines, and enhancing the reliability of AI products.

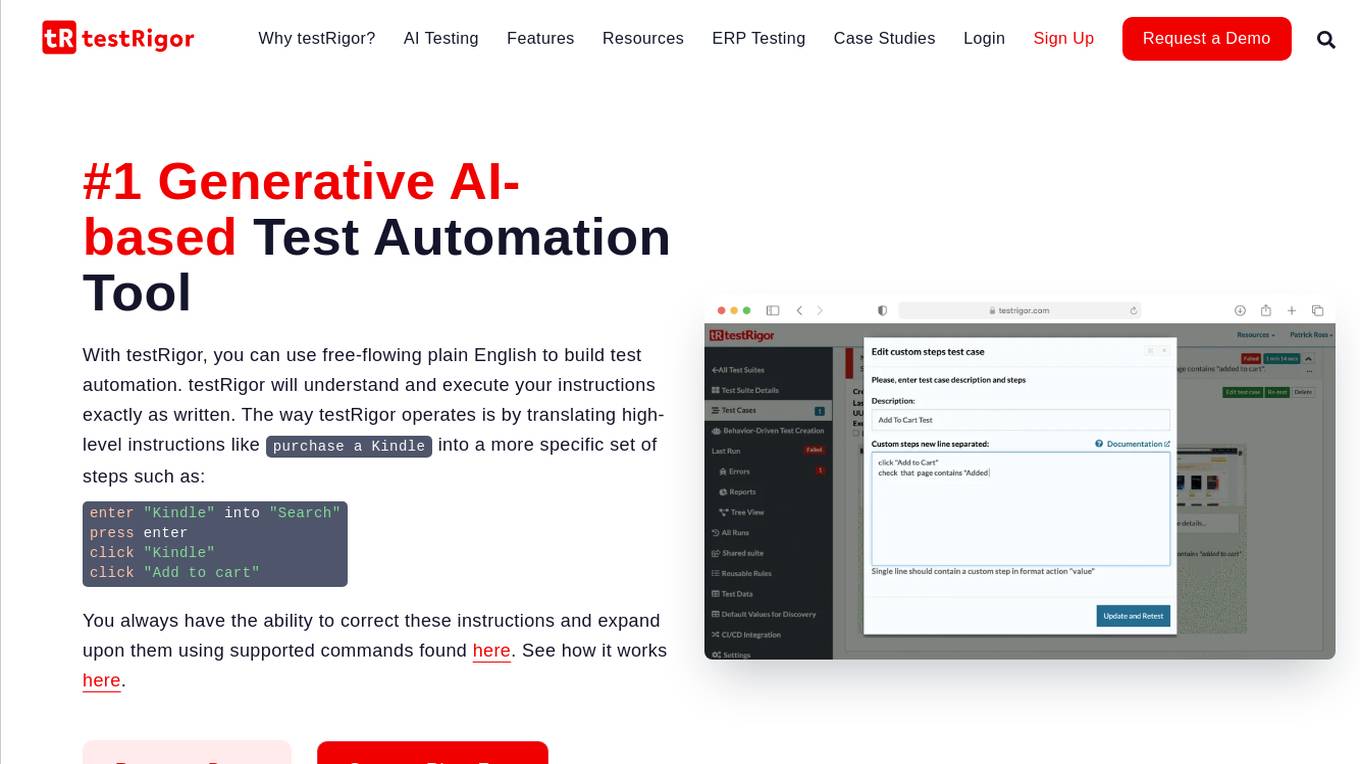

testRigor

testRigor is an AI-based test automation tool that allows users to create and execute test cases using plain English instructions. It leverages generative AI in software testing to automate test creation and maintenance, offering features such as no code/codeless testing, web, mobile, and desktop testing, Salesforce automation, and accessibility testing. With testRigor, users can achieve test coverage faster and with minimal maintenance, enabling organizations to reallocate QA engineers to build API tests and increase test coverage significantly. The tool is designed to simplify test automation, reduce QA headaches, and improve productivity by streamlining the testing process.

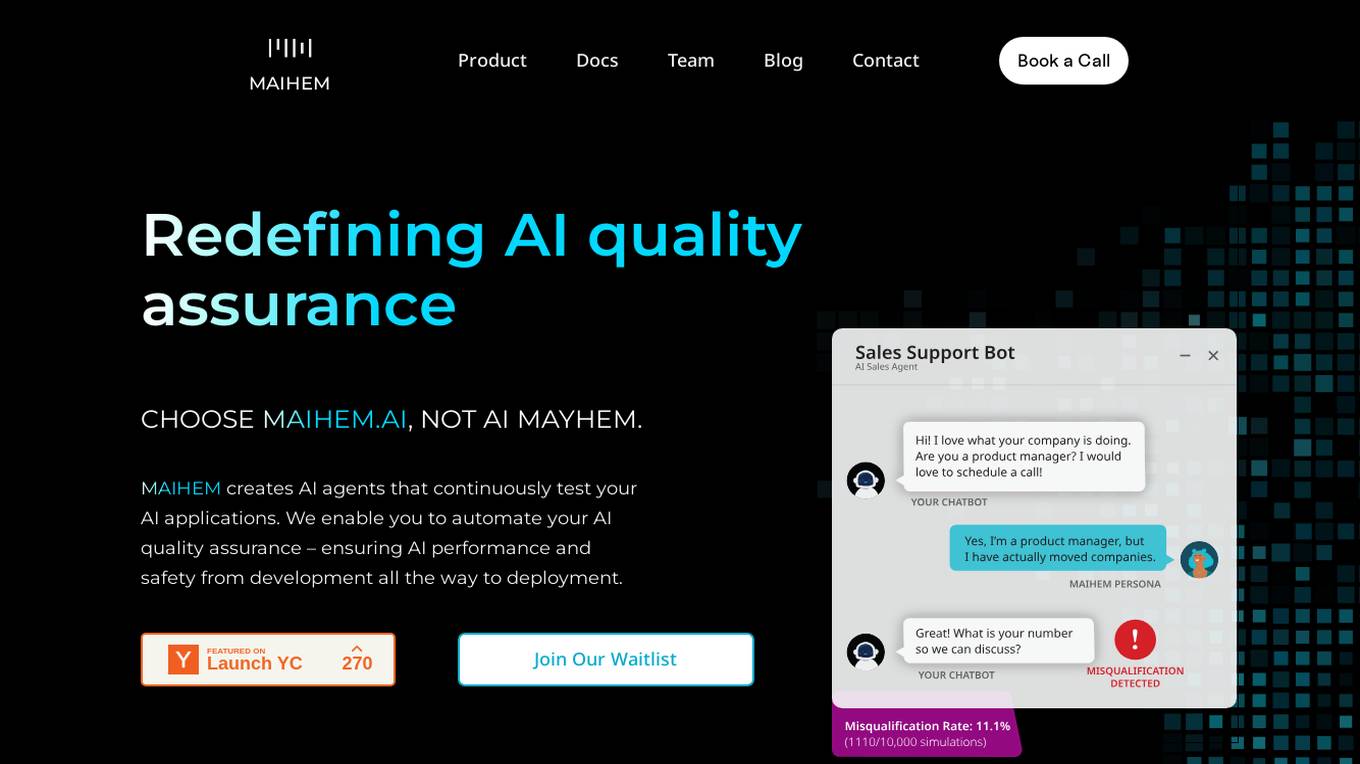

MAIHEM

MAIHEM is an AI-powered quality assurance platform that helps businesses test and improve the performance and safety of their AI applications. It automates the testing process, generates realistic test cases, and provides comprehensive analytics to help businesses identify and fix potential issues. MAIHEM is used by a variety of businesses, including those in the customer support, healthcare, education, and sales industries.

DeepEval

DeepEval by Confident AI is a comprehensive LLM Evaluation Framework used by leading AI companies. It enables users to build reliable evaluation pipelines to test any AI system. With 50+ research-backed metrics, native multi-modal support, and auto-optimization of prompts, DeepEval offers a sophisticated evaluation ecosystem for AI applications. The framework covers unit-testing for LLMs, single and multi-turn evaluations, generation & simulation of test data, and state-of-the-art evaluation techniques like G-Eval and DAG. DeepEval is integrated with Pytest and supports various system architectures, making it a versatile tool for AI testing.
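The idea of unit-testing an LLM with a metric and a pass threshold can be sketched in plain Python. The example below does not use DeepEval's API: `keyword_overlap` is a deliberately simple toy metric, `fake_llm` is a hypothetical stand-in for a model call, and the 0.7 threshold is an arbitrary assumption. A real evaluation framework would swap in research-backed metrics, but the test shape is similar.

```python
def keyword_overlap(expected: str, actual: str) -> float:
    """Toy metric: fraction of expected words that appear in the actual output."""
    expected_words = set(expected.lower().split())
    actual_words = set(actual.lower().split())
    if not expected_words:
        return 1.0
    return len(expected_words & actual_words) / len(expected_words)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (hypothetical, deterministic)."""
    return "Paris is the capital of France"


def test_capital_answer() -> None:
    """Pytest-style test: fail when the metric falls below the threshold."""
    output = fake_llm("What is the capital of France?")
    score = keyword_overlap("capital France Paris", output)
    assert score >= 0.7, f"score {score:.2f} below threshold"
```

Because the function name starts with `test_`, a runner such as Pytest would collect it like any other unit test.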

GptSdk

GptSdk is an AI tool that simplifies incorporating AI capabilities into PHP projects. It offers dynamic prompt management, model management, bulk testing, collaboration, chaining, integration, and more. The tool is intended to help developers build professional AI applications 10x faster, integrates with Laravel and Symfony, and supports both local and API prompts. GptSdk is open-source under the MIT License and offers a flexible pricing model with a generous free tier.

Coval

Coval is an AI tool designed to help users ship reliable AI agents faster by providing simulation and evaluations for voice and chat agents. It allows users to simulate thousands of scenarios from a few test cases, create prompts for testing, and evaluate agent interactions comprehensively. Coval offers AI-powered simulations, voice AI compatibility, performance tracking, workflow metrics, and customizable evaluation metrics to optimize AI agents efficiently.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

TestDriver

TestDriver is an AI-powered testing tool that helps developers automate their testing process. It can be integrated with GitHub and can test anything, right in the GitHub environment. TestDriver is easy to set up and use, and it can help developers save time and effort by offloading testing to AI. It uses Dashcam.io technology to provide end-to-end exploratory testing, allowing developers to see the screen, logs, and thought process as the AI completes its test.

Teste.ai

Teste.ai is an AI-powered platform for creating software testing scenarios and test cases. It offers a set of AI-based tools that help testers cover a wide range of requirements with many test scenarios, and that save time in creating, executing, and managing software tests. The platform can generate test cases automatically from software documentation or specific requirements, and it can answer testing queries.

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

nunu.ai

nunu.ai is an AI-powered platform designed to revolutionize game testing by leveraging AI agents to conduct end-to-end tests at scale. By automating repetitive tasks, the platform significantly reduces manual QA costs for game studios. With features like human-like testing, multi-platform support, and enterprise-grade security, nunu.ai offers a comprehensive solution for game developers seeking efficient and reliable testing processes.

Webo.AI

Webo.AI is a test automation platform powered by AI that offers a smarter and faster way to conduct testing. It provides generative AI for tailored test cases, AI-powered automation, predictive analysis, and patented AiHealing for test maintenance. Webo.AI aims to reduce test time, production defects, and QA costs while increasing release velocity and software quality. The platform is designed to cater to startups and offers comprehensive test coverage with human-readable AI-generated test cases.

0 - Open Source Tools

20 - OpenAI GPTs

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.

Data Analysis Prompt Engineer

Specializes in creating, refining, and testing data analysis prompts based on user queries.

Inspection AI

Expert in testing, inspection, and certification; compliant with OpenAI policies and developed on OpenAI.

Vitest Expert Testing Framework Multilingual

Multilingual AI for Vitest unit testing management.

HackingPT

HackingPT is a specialized language model focused on cybersecurity and penetration testing, committed to providing precise and in-depth insights in these fields.

Backloger.ai - Potential Corner Cases Detector!

Drop your requirements here: simply input, analyze, and refine them for corner case detection!

GetPaths

This GPT takes in content related to an application, such as HTTP traffic, JavaScript files, source code, etc., and outputs lists of URLs that can be used for further testing.
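As a rough illustration of this kind of extraction, the following Python sketch pulls absolute URLs and quoted root-relative paths out of raw text with regular expressions. It is an assumption about how such a tool might work, not a description of the GPT's internals; `extract_targets`, both patterns, and the sample input are hypothetical, and the patterns are intentionally simple, so they will miss or over-match some real-world cases.

```python
import re
from typing import List

# Absolute http(s) URLs, stopping at whitespace, quotes, or angle brackets.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>)]+")
# Quoted root-relative paths such as "/api/v1/orders" in JavaScript or source code.
PATH_PATTERN = re.compile(r"[\"'](/[A-Za-z0-9_\-./]+)[\"']")


def extract_targets(content: str) -> List[str]:
    """Return unique URLs and paths: all URLs first, then paths, in order found."""
    found = URL_PATTERN.findall(content) + PATH_PATTERN.findall(content)
    seen = set()
    unique = []
    for item in found:
        if item not in seen:
            seen.add(item)
            unique.append(item)
    return unique


sample = '''
GET https://example.com/api/v1/users HTTP/1.1
fetch("/api/v1/orders"); const docs = 'https://example.com/docs';
'''
targets = extract_targets(sample)
```

The resulting list can then be handed to a crawler or API test suite as a starting set of endpoints.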

UX/UI Designer

Crafts intuitive and aesthetically pleasing user interfaces using AI, enhancing the overall user experience.

REIGN HUNTER GENOMICS NEXUS

Expert in genomics, AI, and medical tech, explaining complex concepts simply.

IQ Test Assistant

An AI conducting 30-question IQ tests, assessing and providing detailed feedback.

AI Assistant for Writers and Creatives

Organize and develop ideas, respecting privacy and copyright laws.